泰坦尼克号乘客生存预测

泰坦尼克号乘客获救预测

1. 案例背景

泰坦尼克号沉船事故是世界上最著名的沉船事故之一。1912年4月15日,在她的处女航期间,泰坦尼克号撞上冰山后沉没,造成2224名乘客和机组人员中超过1502人的死亡。这一轰动的悲剧震惊了国际社会,并导致更好的船舶安全法规。 事故中导致死亡的一个原因是许多船员和乘客没有足够的救生艇。然而在被获救群体中也有一些比较幸运的因素;一些人群在事故中被救的几率高于其他人,比如妇女、儿童和上层阶级。 这个Case里,我们需要分析和判断出什么样的人更容易获救。最重要的是,要利用机器学习来预测出在这场灾难中哪些人会最终获救;

此项目数据集分为2份数据集titanic_train.csv和titanic_test.csv

titanic_train.csv: 训练集,共计891条数据

titanic_test.csv: 测试集,共计418条数据

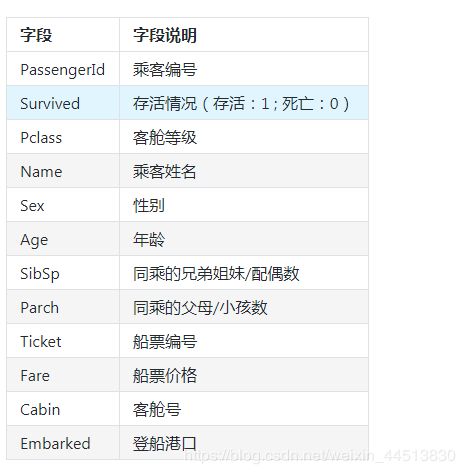

PassengerId 是数据唯一序号;Survived 是存活情况,为预测标记特征;剩下的10个是原始特征数据。

首先先看看数据

#数据分析库

import pandas as pd

#科学计算库

import numpy as np

from pandas import Series,DataFrame

data_train = pd.read_csv("datalab/1386/titanic_train.csv")

data_test = pd.read_csv("datalab/1386/titanic_test.csv")

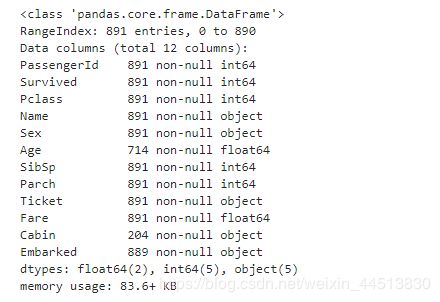

data_train.info()

从数据的信息可以发现,年龄和客舱号数据有丢失。

我们现在对缺失值进行处理,因为年龄的缺失数量比较少,所以直接用中位数进行填充,而客舱号因为缺失值太多,如果进行填充会带来很大的误差,所以对客舱号的不进行处理。

#Age列中的缺失值用Age中位数进行填充

data_train["Age"] = data_train['Age'].fillna(data_train['Age'].median())

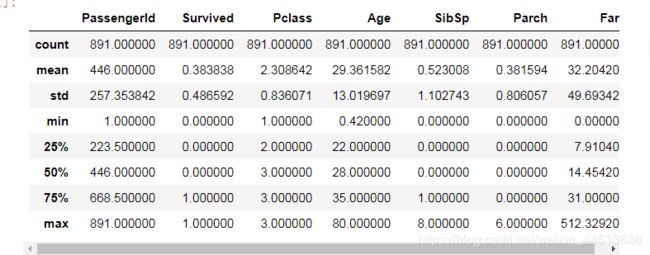

data_train.describe()

#线性回归

from sklearn.linear_model import LinearRegression

#训练集交叉验证,得到平均值

#from sklearn.cross_validation import KFold

from sklearn.model_selection import KFold

#选取简单的可用输入特征

predictors = ["Pclass","Age","SibSp","Parch","Fare"]

#初始化线性回归算法

alg = LinearRegression()

#样本平均分成3份,3折交叉验证

#我有试着改变交叉验证的折数,发现3折的时候准确率比较高。

kf = KFold(n_splits=3,shuffle=False,random_state=1)

predictions = []

for train,test in kf.split(data_train):

# train,test 是从data_train分出来的数据,和n_split有关。

train_predictors = (data_train[predictors].iloc[train,:])

train_target = data_train["Survived"].iloc[train]

# 开始训练数据

alg.fit(train_predictors,train_target)

#进行预测

test_predictions = alg.predict(data_train[predictors].iloc[test,:])

predictions.append(test_predictions)

import numpy as np

#拼接predictions

predictions = np.concatenate(predictions,axis=0)

# 把数据二值化

predictions[predictions>.5] = 1

predictions[predictions<=.5] = 0

accuracy = sum(predictions == data_train["Survived"]) / len(predictions)

print ("准确率为: ", accuracy)

from sklearn.model_selection import cross_val_score

#逻辑回归

from sklearn.linear_model import LogisticRegression

#初始化逻辑回归算法

LogRegAlg=LogisticRegression(random_state=1)

re = LogRegAlg.fit(data_train[predictors],data_train["Survived"])

#使用sklearn库里面的交叉验证函数获取预测准确率分数

scores =cross_val_score(LogRegAlg,data_train[predictors],data_train["Survived"],cv=3)

#使用交叉验证分数的平均值作为最终的准确率

print("准确率为: ",scores.mean())

增加特征Sex和Embarked列,查看对预测的影响

#Sex性别列处理:male用0,female用1

data_train.loc[data_train["Sex"] == "male","Sex"] = 0

data_train.loc[data_train["Sex"] == "female","Sex"] = 1

#缺失值用最多的S进行填充

data_train["Embarked"] = data_train["Embarked"].fillna('S')

#地点用0,1,2

data_train.loc[data_train["Embarked"] == "S","Embarked"] = 0

data_train.loc[data_train["Embarked"] == "C","Embarked"] = 1

data_train.loc[data_train["Embarked"] == "Q","Embarked"] = 2

增加2个特征Sex和Embarked,继续使用逻辑回归算法进行预测

predictors = ["Pclass","Sex","Age","SibSp","Parch","Fare","Embarked"]

LogRegAlg=LogisticRegression(random_state=1)

#Compute the accuracy score for all the cross validation folds.(much simpler than what we did before!)

re = LogRegAlg.fit(data_train[predictors],data_train["Survived"])

scores = model_selection.cross_val_score(LogRegAlg,data_train[predictors],data_train["Survived"],cv=3)

#Take the mean of the scores (because we have one for each fold)

print("准确率为: ",scores.mean())

![]()

通过增加了2个特征,模型的准确率提高到78.78%,说明好的特征有利于提升模型的预测能力。

#新增:对测试集数据进行预处理,并进行结果预测

#Age列中的缺失值用Age均值进行填充

data_test["Age"] = data_test["Age"].fillna(data_test["Age"].median())

#Fare列中的缺失值用Fare最大值进行填充

data_test["Fare"] = data_test["Fare"].fillna(data_test["Fare"].max())

#Sex性别列处理:male用0,female用1

data_test.loc[data_test["Sex"] == "male","Sex"] = 0

data_test.loc[data_test["Sex"] == "female","Sex"] = 1

#缺失值用最多的S进行填充

data_test["Embarked"] = data_test["Embarked"].fillna('S')

#地点用0,1,2

data_test.loc[data_test["Embarked"] == "S","Embarked"] = 0

data_test.loc[data_test["Embarked"] == "C","Embarked"] = 1

data_test.loc[data_test["Embarked"] == "Q","Embarked"] = 2

test_features = ["Pclass","Sex","Age","SibSp","Parch","Fare","Embarked"]

#构造测试集的Survived列,

data_test["Survived"] = -1

test_predictors = data_test[test_features]

data_test["Survived"] = LogRegAlg.predict(test_predictors)

7. 使用随机森林算法

from sklearn.model_selection import cross_val_score

from sklearn.ensemble import RandomForestClassifier

predictors=["Pclass","Sex","Age","SibSp","Parch","Fare","Embarked"]

#10棵决策树,停止的条件:样本个数为2,叶子节点个数为1

alg=RandomForestClassifier(random_state=1,n_estimators=10,min_samples_split=2,min_samples_leaf=1)

kf=model_selection.KFold(n_splits=3,shuffle=False, random_state=1)

scores=cross_val_score(alg,data_train[predictors],data_train["Survived"],cv=kf)

print(scores)

print(scores.mean())

#30棵决策树,停止的条件:样本个数为2,叶子节点个数为1

alg=RandomForestClassifier(random_state=1,n_estimators=30,min_samples_split=2,min_samples_leaf=1)

kf=model_selection.KFold(n_splits=10,shuffle=False,random_state=1)

scores=model_selection.cross_val_score(alg,data_train[predictors],data_train["Survived"],cv=kf)

print(scores)

print(scores.mean())