Study notes on the AlexNet deep convolutional neural network

Unfortunately my laptop has no GPU support, and the training times on the CPU were frightening.

Training on CPU:

| epoch | loss | train_acc | test_acc | time (sec) | time (h) |
|---|---|---|---|---|---|
| 1 | 0.0122 | 0.417 | 0.711 | 11123.8 | 3.090 |
| 2 | 0.0056 | 0.734 | 0.772 | 10350.6 | 2.875 |
| 3 | 0.0045 | 0.782 | 0.815 | 12629.7 | 3.508 |
| 4 | 0.0040 | 0.810 | 0.828 | 32446.9 | 9.013 |
| 5 | 0.0036 | 0.831 | 0.836 | 15733.0 | 4.370 |

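Epoch 4 ran overnight: the laptop went to sleep and only resumed in the morning, which is why it took 9 hours. The other epochs ran under normal conditions, and epoch 5 in particular still took about 4.4 hours. Terrifying.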

Nothing stings like a comparison, so here are the results from running on a GPU:

Training on gpu(0):

| epoch | loss | train_acc | test_acc | time (sec) |
|---|---|---|---|---|
| 1 | 1.3018 | 0.511 | 0.745 | 19.0 |
| 2 | 0.6527 | 0.756 | 0.809 | 17.1 |
| 3 | 0.5350 | 0.800 | 0.837 | 17.0 |
| 4 | 0.4712 | 0.826 | 0.855 | 17.1 |
| 5 | 0.4267 | 0.844 | 0.866 | 17.1 |
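To put a number on the gap, the per-epoch times from the two logs can be compared directly. The sketch below is only a rough estimate: it assumes the CPU and GPU runs used the same model, data and batch size (the logs do not confirm this, and the loss values are on different scales), and it leaves out CPU epoch 4 because that figure includes the time the laptop spent asleep.

```python
# Per-epoch wall-clock times copied from the two training logs, in seconds.
cpu_sec = [11123.8, 10350.6, 12629.7, 32446.9, 15733.0]
gpu_sec = [19.0, 17.1, 17.0, 17.1, 17.1]

# CPU epoch 4 includes the time the laptop was asleep, so it is excluded.
cpu_valid = [t for epoch, t in enumerate(cpu_sec, start=1) if epoch != 4]

cpu_mean = sum(cpu_valid) / len(cpu_valid)
gpu_mean = sum(gpu_sec) / len(gpu_sec)
speedup = cpu_mean / gpu_mean

print(f"CPU mean: {cpu_mean / 3600:.2f} h per epoch")
print(f"GPU mean: {gpu_mean:.1f} s per epoch")
print(f"Speedup: roughly {speedup:.0f}x")
```

Under those assumptions, the GPU is faster by a factor of roughly 700.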

I had originally planned to tune the hyperparameters and run a few more experiments, but in the end...

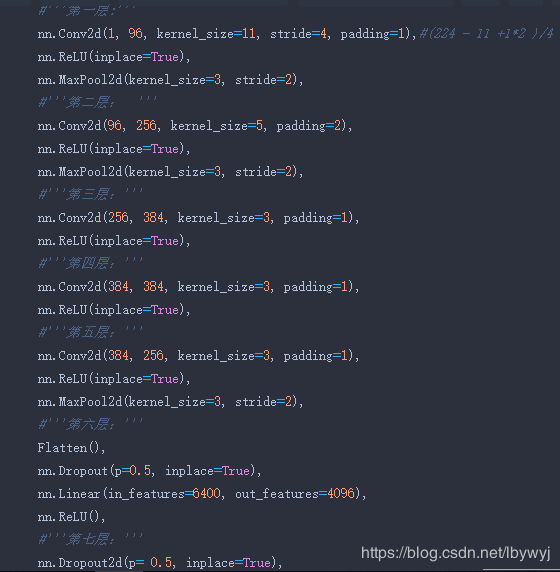

The code and process are as follows:
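A minimal PyTorch sketch of a network consistent with the shape trace in this post. Only the layer order, the channel counts and the output shapes come from that trace; the 1×224×224 input, the kernel sizes, strides, paddings and the dropout probability are assumptions chosen so the shapes match. The trace names the second dropout layer `Dropout2d`; this sketch uses plain `nn.Dropout` there, which is the usual choice for flattened features, so that one printed name differs.

```python
import torch
from torch import nn

# Assumed hyperparameters: kernel sizes, strides, paddings and p=0.5 are not
# stated in the post; they are picked so the output shapes match the trace.
net = nn.Sequential(
    nn.Conv2d(1, 96, kernel_size=11, stride=4, padding=1), nn.ReLU(),
    nn.MaxPool2d(kernel_size=3, stride=2),
    nn.Conv2d(96, 256, kernel_size=5, padding=2), nn.ReLU(),
    nn.MaxPool2d(kernel_size=3, stride=2),
    nn.Conv2d(256, 384, kernel_size=3, padding=1), nn.ReLU(),
    nn.Conv2d(384, 384, kernel_size=3, padding=1), nn.ReLU(),
    nn.Conv2d(384, 256, kernel_size=3, padding=1), nn.ReLU(),
    nn.MaxPool2d(kernel_size=3, stride=2),
    nn.Flatten(),
    nn.Dropout(p=0.5),
    nn.Linear(6400, 4096), nn.ReLU(),
    nn.Dropout(p=0.5),  # the trace shows Dropout2d here; nn.Dropout is assumed
    nn.Linear(4096, 4096), nn.ReLU(),
    nn.Linear(4096, 10),
)


def trace_shapes(model, x):
    """Pass x through each layer in turn and record (layer name, output shape)."""
    shapes = []
    model.eval()  # disable dropout so the trace is deterministic
    with torch.no_grad():
        for layer in model:
            x = layer(x)
            shapes.append((layer.__class__.__name__, tuple(x.shape)))
    return shapes


# One single-channel 224x224 image (assumed input size).
shapes = trace_shapes(net, torch.rand(1, 1, 224, 224))
for name, shape in shapes:
    print(f"{name} output.shape: {shape}")
```

Printing the shape after every layer is a cheap way to check that the first `Linear` layer's input size (6400 here) agrees with what the convolutional part actually produces.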

Output shape after each layer:

| layer | output.shape |
|---|---|
| Conv2d | torch.Size([1, 96, 54, 54]) |
| ReLU | torch.Size([1, 96, 54, 54]) |
| MaxPool2d | torch.Size([1, 96, 26, 26]) |
| Conv2d | torch.Size([1, 256, 26, 26]) |
| ReLU | torch.Size([1, 256, 26, 26]) |
| MaxPool2d | torch.Size([1, 256, 12, 12]) |
| Conv2d | torch.Size([1, 384, 12, 12]) |
| ReLU | torch.Size([1, 384, 12, 12]) |
| Conv2d | torch.Size([1, 384, 12, 12]) |
| ReLU | torch.Size([1, 384, 12, 12]) |
| Conv2d | torch.Size([1, 256, 12, 12]) |
| ReLU | torch.Size([1, 256, 12, 12]) |
| MaxPool2d | torch.Size([1, 256, 5, 5]) |
| Flatten | torch.Size([1, 6400]) |
| Dropout | torch.Size([1, 6400]) |
| Linear | torch.Size([1, 4096]) |
| ReLU | torch.Size([1, 4096]) |
| Dropout2d | torch.Size([1, 4096]) |
| Linear | torch.Size([1, 4096]) |
| ReLU | torch.Size([1, 4096]) |
| Linear | torch.Size([1, 10]) |
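The spatial sizes in this trace (54, 26, 12, 5) follow from the standard output-size formula for convolution and pooling layers, floor((n + 2p - k) / s) + 1. The sketch below checks the arithmetic in plain Python. The kernel, stride and padding values and the 224-pixel input are assumptions (the trace does not list them), picked because they reproduce the logged sizes.

```python
def out_size(n, kernel, stride=1, padding=0):
    """Output side length of a conv/pool layer: floor((n + 2p - k) / s) + 1."""
    return (n + 2 * padding - kernel) // stride + 1


# (name, kernel, stride, padding): assumed values chosen to match the trace.
layers = [
    ("conv1", 11, 4, 1),
    ("pool1", 3, 2, 0),
    ("conv2", 5, 1, 2),
    ("pool2", 3, 2, 0),
    ("conv3", 3, 1, 1),
    ("conv4", 3, 1, 1),
    ("conv5", 3, 1, 1),
    ("pool3", 3, 2, 0),
]


def spatial_sizes(n, layers):
    """Apply out_size layer by layer, returning (name, side length) pairs."""
    sizes = []
    for name, kernel, stride, padding in layers:
        n = out_size(n, kernel, stride, padding)
        sizes.append((name, n))
    return sizes


sizes = spatial_sizes(224, layers)  # 224 is the assumed input side length
for name, n in sizes:
    print(f"{name}: {n} x {n}")

# 256 channels after the last pooling layer, as shown in the trace.
flattened = 256 * sizes[-1][1] ** 2
print(f"flattened features: {flattened}")
```

The final 5×5 feature map with 256 channels gives 256 × 5 × 5 = 6400 features, which is the input size of the first fully connected layer in the trace.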