Implementing CIFAR-10 classification with AlexNet in PyTorch

An introduction to AlexNet

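- AlexNet uses several techniques to avoid overfitting: data augmentation, regularization, dropout, and local response normalization (LRN).

- It was trained with GPU acceleration.

- It uses the ReLU nonlinearity, max(0, x), which is cheap to compute and lets the network train faster than saturating activations such as tanh.

- Local response normalization (LRN) creates competition among the activities of neighboring neurons (across adjacent channels at the same spatial position). Relatively large responses become relatively larger, while neurons with smaller responses are suppressed. This improves the model's generalization.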

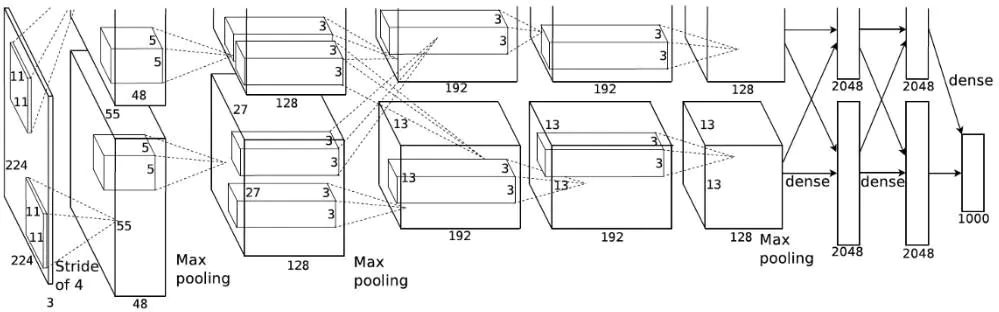

- The network architecture is shown below:

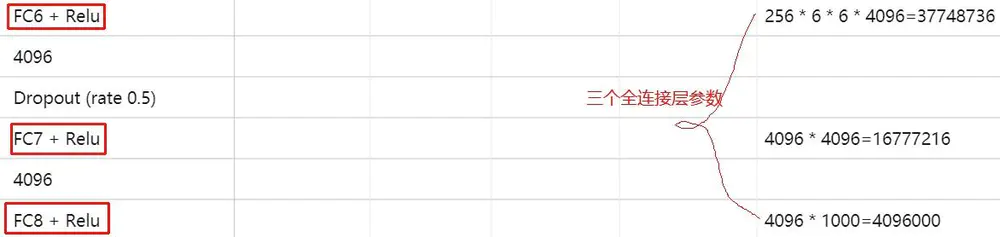

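- The fully connected part consists of three layers: two hidden layers with 4096 units each, with dropout applied to both, followed by an output layer with one unit per class (1000 for ImageNet).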
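The LRN description above can be made concrete. The sketch below is a minimal, loop-based version of the formula from the AlexNet paper: b = a / (k + alpha * sum of a_j^2)^beta, where the sum runs over the n neighboring channels. It uses the paper's values n = 5, k = 2, alpha = 1e-4 and beta = 0.75. `lrn_paper` is a hypothetical helper written for illustration. It is checked against PyTorch's built-in `nn.LocalResponseNorm`, which averages the squared activations over the window instead of summing them, so the built-in needs `alpha * n` to give the same result.

```python
import torch
import torch.nn as nn


def lrn_paper(a, n=5, k=2.0, alpha=1e-4, beta=0.75):
    """Cross-channel local response normalization as written in the AlexNet paper.

    a: activations of shape (N, C, H, W). For each channel c, the squared
    activations of channels max(0, c - n//2) .. min(C - 1, c + n//2) are summed
    at every spatial position, and a[:, c] is divided by (k + alpha * sum) ** beta.
    """
    num_channels = a.size(1)
    squared = a.pow(2)
    out = torch.empty_like(a)
    for c in range(num_channels):
        lo = max(0, c - n // 2)
        hi = min(num_channels - 1, c + n // 2)
        window_sum = squared[:, lo:hi + 1].sum(dim=1)
        out[:, c] = a[:, c] / (k + alpha * window_sum).pow(beta)
    return out


torch.manual_seed(0)
x = torch.randn(2, 8, 4, 4, dtype=torch.float64)

manual = lrn_paper(x)
# PyTorch averages the squared activations over the window, so alpha is scaled by n = 5
builtin = nn.LocalResponseNorm(size=5, alpha=5 * 1e-4, beta=0.75, k=2.0)(x)
print(torch.allclose(manual, builtin))  # expected: True
```

The explicit loop is slow but mirrors the formula term by term. In practice the built-in layer is the one to use.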

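Implementing CIFAR-10 classification in PyTorch

- You can download the dataset yourself or let the code download it automatically. The manual download link is: http://www.cs.toronto.edu/~kriz/cifar-10-python.tar.gz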
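If you download the archive by hand, extracting it yields a `cifar-10-batches-py` folder containing `data_batch_1` to `data_batch_5` and `test_batch`. According to the format described on the CIFAR-10 website, each file is a pickled dict. Its `b'data'` entry is a 10000×3072 `uint8` array, holding 1024 red, then 1024 green, then 1024 blue values per image in row-major order. Its `b'labels'` entry is a list of class indices.

Below is a minimal sketch of reading one batch with `pickle` and NumPy. The helper name `load_cifar_batch` is hypothetical. When using `torchvision.datasets.CIFAR10` instead, extracting the archive under `root` is enough for the dataset class to find the files.

```python
import pickle

import numpy as np


def load_cifar_batch(path):
    """Read one CIFAR-10 python batch file.

    Returns images as a uint8 array of shape (N, 32, 32, 3) and labels as an int64 array.
    """
    with open(path, 'rb') as f:
        batch = pickle.load(f, encoding='bytes')  # keys are bytes, e.g. b'data'
    data = np.asarray(batch[b'data'], dtype=np.uint8)
    # Each row holds 3072 values: the R, G and B planes of a 32x32 image, one after another
    images = data.reshape(-1, 3, 32, 32).transpose(0, 2, 3, 1)
    labels = np.asarray(batch[b'labels'], dtype=np.int64)
    return images, labels


# Example (the path assumes the archive was extracted into ./data1):
# images, labels = load_cifar_batch('./data1/cifar-10-batches-py/data_batch_1')
```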

- Import the necessary packages:

```python
import torch
from torch import nn, optim
from torch.utils.data import DataLoader
from torchvision import datasets, transforms
```

- Define the network structure:

```python
# Define the AlexNet network structure (adapted for 32x32 CIFAR-10 images)
class AlexNet(nn.Module):
    def __init__(self, num_classes):
        super().__init__()
        self.features = nn.Sequential(
            # Modified from the original AlexNet (kernel_size=11, stride=4, padding=2),
            # which is designed for 224x224 inputs. On 32x32 images those settings shrink
            # the feature map to 1x1 before the last max-pool, which then fails.
            # With kernel_size=3, stride=2, padding=1 the spatial size goes
            # 32 -> 16 -> 7 -> 3 -> 1, so the final feature map is 256x1x1.
            nn.Conv2d(3, 64, kernel_size=3, stride=2, padding=1),
            nn.ReLU(inplace=True),
            nn.MaxPool2d(kernel_size=3, stride=2),
            nn.Conv2d(64, 192, kernel_size=5, padding=2),
            nn.ReLU(inplace=True),
            nn.MaxPool2d(kernel_size=3, stride=2),
            nn.Conv2d(192, 384, kernel_size=3, padding=1),
            nn.ReLU(inplace=True),
            nn.Conv2d(384, 256, kernel_size=3, padding=1),
            nn.ReLU(inplace=True),
            nn.Conv2d(256, 256, kernel_size=3, padding=1),
            nn.ReLU(inplace=True),
            nn.MaxPool2d(kernel_size=3, stride=2),
        )
        self.classifier = nn.Sequential(
            nn.Dropout(),
            nn.Linear(256 * 1 * 1, 4096),
            nn.ReLU(inplace=True),
            nn.Dropout(),
            nn.Linear(4096, 4096),
            nn.ReLU(inplace=True),
            nn.Linear(4096, num_classes),
        )

    def forward(self, x):
        x = self.features(x)
        x = x.view(x.size(0), 256 * 1 * 1)  # flatten to (batch, 256)
        x = self.classifier(x)
        # Return raw logits: nn.CrossEntropyLoss applies log-softmax itself
        return x
```

- Train the model:
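Before training, you can check the size arithmetic behind the modified first convolution without building the network. For convolution and max-pooling layers (no dilation, default floor mode), the output side length is floor((n + 2p - k) / s) + 1.

The sketch below traces a square input through the `features` stack; the helper names `out_size` and `trace` are hypothetical. With the modified first layer, a 32×32 image ends at 1×1, which matches `256*1*1`. The original first layer only works for larger inputs such as 224×224, where the stack ends at 6×6.

```python
def out_size(n, kernel, stride=1, padding=0):
    """Output side length of a Conv2d/MaxPool2d layer (no dilation, floor mode)."""
    return (n + 2 * padding - kernel) // stride + 1


def trace(n, first_conv):
    """Trace the spatial size through the AlexNet `features` stack.

    first_conv is (kernel, stride, padding) for the first convolution.
    Returns the sizes after each layer, or raises ValueError if a layer
    would produce an empty output.
    """
    layers = [
        first_conv,   # conv1
        (3, 2, 0),    # maxpool
        (5, 1, 2),    # conv2
        (3, 2, 0),    # maxpool
        (3, 1, 1),    # conv3
        (3, 1, 1),    # conv4
        (3, 1, 1),    # conv5
        (3, 2, 0),    # maxpool
    ]
    sizes = [n]
    for kernel, stride, padding in layers:
        n = out_size(n, kernel, stride, padding)
        if n < 1:
            raise ValueError('feature map too small: got size %d' % n)
        sizes.append(n)
    return sizes


print(trace(32, (3, 2, 1)))    # [32, 16, 7, 7, 3, 3, 3, 3, 1]
print(trace(224, (11, 4, 2)))  # [224, 55, 27, 27, 13, 13, 13, 13, 6]
```

Calling `trace(32, (11, 4, 2))` raises `ValueError` at the last max-pool. This mirrors the error PyTorch reports when the unmodified first layer is used on 32×32 images.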

```python
# Hyperparameters
batch_size = 100
learning_rate = 1e-2
num_epochs = 5

# Preprocessing: convert images to tensors, then normalize each channel
# as (x - mean) / std. With mean = std = 0.5 this maps [0, 1] to [-1, 1].
data_tf = transforms.Compose([
    transforms.ToTensor(),
    transforms.Normalize((0.5, 0.5, 0.5), (0.5, 0.5, 0.5)),
])

# Load the datasets (downloaded to ./data1 if not already present)
train_dataset = datasets.CIFAR10(root='./data1', train=True, transform=data_tf, download=True)
test_dataset = datasets.CIFAR10(root='./data1', train=False, transform=data_tf)

# Wrap the datasets in DataLoaders for mini-batch iteration
train_loader = DataLoader(train_dataset, batch_size=batch_size, shuffle=True)
test_loader = DataLoader(test_dataset, batch_size=batch_size, shuffle=False)

# Build the network, loss function and optimizer; use CUDA if available
device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')
model = AlexNet(10).to(device)
criterion = nn.CrossEntropyLoss()
optimizer = optim.SGD(model.parameters(), lr=learning_rate)

for epoch in range(num_epochs):
    model.train()
    total = 0
    running_loss = 0.0
    running_correct = 0
    print('epoch {}/{}'.format(epoch + 1, num_epochs))
    print('-' * 10)
    for step, (img, label) in enumerate(train_loader, start=1):
        img, label = img.to(device), label.to(device)
        out = model(img)              # forward pass
        loss = criterion(out, label)  # compute the loss
        optimizer.zero_grad()         # reset gradients
        loss.backward()               # backpropagation
        optimizer.step()              # update parameters

        running_loss += loss.item()
        if step % 50 == 0:
            print('step:{}, loss:{:.4f}'.format(step, loss.item()))

        _, predicted = torch.max(out, 1)
        total += label.size(0)
        running_correct += (predicted == label).sum().item()

    print('Epoch %d: training loss %.4f, training accuracy %.2f%%'
          % (epoch + 1, running_loss / len(train_loader), 100 * running_correct / total))
```
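The loop above reports accuracy on the training set only, even though `test_loader` is created. Below is a minimal sketch of measuring accuracy on held-out data; `evaluate` is a hypothetical helper. It switches the model to eval mode so that dropout is disabled, and turns off gradient tracking.

```python
import torch
from torch import nn
from torch.utils.data import DataLoader, TensorDataset


def evaluate(model, loader, device):
    """Return the classification accuracy (0..1) of `model` over `loader`."""
    model.eval()  # disable dropout
    correct, total = 0, 0
    with torch.no_grad():  # no gradients needed for evaluation
        for img, label in loader:
            img, label = img.to(device), label.to(device)
            predicted = model(img).argmax(dim=1)
            correct += (predicted == label).sum().item()
            total += label.size(0)
    return correct / total


# Toy check: an identity "model" is always right when labels are the argmax of the inputs
inputs = torch.randn(20, 10)
toy_loader = DataLoader(TensorDataset(inputs, inputs.argmax(dim=1)), batch_size=8)
print(evaluate(nn.Identity(), toy_loader, torch.device('cpu')))  # 1.0
```

For the CIFAR-10 script, pass the trained model, `test_loader`, and the device the model lives on. Call `model.train()` again before resuming training.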

- Training results:

The accuracy is not particularly high and still needs tuning.

References

1. Downloading the CIFAR-10 dataset: https://www.cnblogs.com/cloud-ken/p/8456878.html

2. Recognizing CIFAR-10 with deep learning: training and comparing LeNet, AlexNet, and VGG19 in PyTorch (Part 1): http://www.cnblogs.com/zhengbiqing/p/10424693.html