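CS231n Assignment 2: Fully Connected Neural Networks

Assignment 2

Assignment overview:

In this assignment you will practice writing backpropagation code and training neural networks and convolutional neural networks.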

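Introduction to shallow neural networks:

We start with an example: a neural network with a single hidden layer (Figure 3.2.1). Here is a picture of the network; let us give names to its different parts.

We have the input features X1, X2, X3, stacked vertically; this is called the input layer of the network, and it holds the network's inputs. Next comes another layer, which we call the hidden layer (the four nodes in Figure 3.2.1). I will come back to the meaning of the term "hidden" later. In this example the last layer consists of a single node; this one-node layer is called the output layer, and it produces the predicted value.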

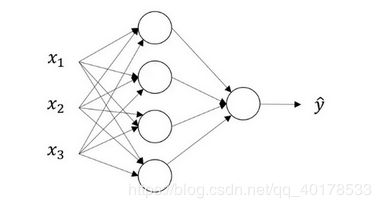

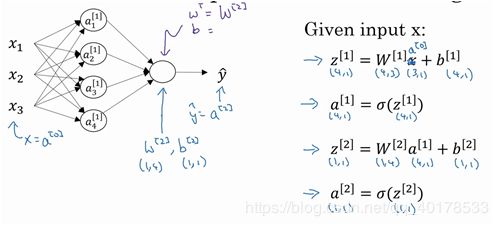

Forward propagation

Vectorized representation

1. Forward propagation
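As a minimal sketch of the vectorized forward pass for the network described earlier (3 inputs, 4 hidden units, 1 output), the NumPy code below stacks m examples as columns of `X`. The tanh hidden activation, the sigmoid output, and the names `W1`, `b1`, `W2`, `b2` and `forward` are assumptions made for illustration; the post itself does not specify them.

```python
import numpy as np

def sigmoid(z):
    """Logistic function, applied element-wise."""
    return 1.0 / (1.0 + np.exp(-z))

def forward(X, W1, b1, W2, b2):
    """Forward pass of a one-hidden-layer network (illustrative sketch).

    X has shape (3, m): one column per example.
    """
    Z1 = W1 @ X + b1      # hidden pre-activations, shape (4, m)
    A1 = np.tanh(Z1)      # hidden activations (tanh is an assumption)
    Z2 = W2 @ A1 + b2     # output pre-activation, shape (1, m)
    A2 = sigmoid(Z2)      # prediction (sigmoid is an assumption)
    return A1, A2

rng = np.random.default_rng(0)
m = 5                                     # number of examples
X = rng.standard_normal((3, m))
W1 = 0.01 * rng.standard_normal((4, 3))   # hidden layer weights
b1 = np.zeros((4, 1))
W2 = 0.01 * rng.standard_normal((1, 4))   # output layer weights
b2 = np.zeros((1, 1))

A1, A2 = forward(X, W1, b1, W2, b2)
print(A1.shape, A2.shape)
```

Processing all m examples with two matrix products, rather than looping over examples, is what "vectorized" refers to here.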


2. Backpropagation

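Why do we need nonlinear activation functions?

If you use a linear activation function (also called the identity activation function), the network simply outputs a linear combination of its inputs. No matter how many layers it has, it only ever computes a linear function, so you might as well remove all the hidden layers. For the network to compute interesting functions, you must use a nonlinear activation function. In short, do not use a linear activation in the hidden layers; use ReLU, tanh, leaky ReLU, or another nonlinear activation instead. The one place where a linear activation is commonly used is the output layer. Apart from that, linear activations appear in hidden layers only in a few special cases, such as those related to compression, which are not covered in depth here.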
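The collapse of stacked linear layers can be checked numerically. The sketch below uses made-up shapes and random weights (all names are illustrative): two layers with an identity activation are compared against a single layer with weights `W1 @ W2` and bias `b1 @ W2 + b2`, and then against the same two layers with a ReLU in between.

```python
import numpy as np

rng = np.random.default_rng(0)
x = rng.standard_normal((5, 3))            # 5 examples, 3 features
W1 = rng.standard_normal((3, 4))
b1 = rng.standard_normal(4)
W2 = rng.standard_normal((4, 2))
b2 = rng.standard_normal(2)

# Two layers with a linear (identity) activation in between.
two_layer_linear = (x @ W1 + b1) @ W2 + b2

# The same function written as a single linear layer.
W = W1 @ W2
b = b1 @ W2 + b2
one_layer = x @ W + b

print(np.allclose(two_layer_linear, one_layer))  # True

# With a ReLU in between, the network is in general no longer
# expressible as that single linear layer.
two_layer_relu = np.maximum(0, x @ W1 + b1) @ W2 + b2
print(np.allclose(two_layer_relu, one_layer))
```

The first comparison holds for any weights, because (xW1 + b1)W2 + b2 = x(W1W2) + (b1W2 + b2).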

Introduction to deep neural networks:

Code

from __future__ import print_function

import time

import numpy as np

import matplotlib.pyplot as plt

from cs231n.classifiers.fc_net import *

from cs231n.data_utils import get_CIFAR10_data

from cs231n.gradient_check import eval_numerical_gradient, eval_numerical_gradient_array

from cs231n.solver import Solver

%matplotlib inline

plt.rcParams['figure.figsize'] = (10.0, 8.0) # set default size of plots

plt.rcParams['image.interpolation'] = 'nearest'

plt.rcParams['image.cmap'] = 'gray'

%load_ext autoreload

%autoreload 2

def rel_error(x, y):

    """ returns relative error """

    return np.max(np.abs(x - y) / (np.maximum(1e-8, np.abs(x) + np.abs(y))))

# Load the dataset

data = get_CIFAR10_data()

for k, v in list(data.items()):

    print('%s: ' % k, v.shape)

# Test the affine forward pass

num_inputs = 2

input_shape = (4, 5, 6)

output_dim = 3

input_size = num_inputs * np.prod(input_shape)

weight_size = output_dim * np.prod(input_shape)

x = np.linspace(-0.1, 0.5, num=input_size).reshape(num_inputs, *input_shape)

w = np.linspace(-0.2, 0.3, num=weight_size).reshape(np.prod(input_shape), output_dim)

b = np.linspace(-0.3, 0.1, num=output_dim)

print(x.shape)

print(w.shape)

print(b.shape)

out, _ = affine_forward(x, w, b)

correct_out = np.array([[ 1.49834967, 1.70660132, 1.91485297],

                        [ 3.25553199, 3.5141327, 3.77273342]])

# Compare your output with ours. The error should be around e-9 or less.

print('Testing affine_forward function:')

print('difference: ', rel_error(out, correct_out))
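The test above calls `affine_forward`, which the assignment leaves for the student to implement. One possible implementation is sketched below under the hypothetical name `affine_forward_sketch`, so it is not mistaken for the graded function. It assumes the layer flattens each input to a row vector and computes `x·w + b`, returning the inputs as a cache for the backward pass.

```python
import numpy as np

def affine_forward_sketch(x, w, b):
    """Affine (fully connected) forward pass, illustrative sketch.

    x: inputs of shape (N, d_1, ..., d_k)
    w: weights of shape (D, M), where D = d_1 * ... * d_k
    b: biases of shape (M,)
    Returns the output of shape (N, M) and a cache for backprop.
    """
    x_flat = x.reshape(x.shape[0], -1)   # (N, D)
    out = x_flat @ w + b                 # (N, M); b broadcasts over rows
    cache = (x, w, b)
    return out, cache

# Same inputs as the test in this post.
num_inputs, input_shape, output_dim = 2, (4, 5, 6), 3
input_size = num_inputs * np.prod(input_shape)
weight_size = output_dim * np.prod(input_shape)
x = np.linspace(-0.1, 0.5, num=input_size).reshape(num_inputs, *input_shape)
w = np.linspace(-0.2, 0.3, num=weight_size).reshape(np.prod(input_shape), output_dim)
b = np.linspace(-0.3, 0.1, num=output_dim)

out, _ = affine_forward_sketch(x, w, b)
print(out)
```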

# Test the affine backward pass

np.random.seed(231)

x = np.random.randn(10, 2, 3)

w = np.random.randn(6, 5)

b = np.random.randn(5)

dout = np.random.randn(10, 5)

dx_num = eval_numerical_gradient_array(lambda x: affine_forward(x, w, b)[0], x, dout)

dw_num = eval_numerical_gradient_array(lambda w: affine_forward(x, w, b)[0], w, dout)

db_num = eval_numerical_gradient_array(lambda b: affine_forward(x, w, b)[0], b, dout)

_, cache = affine_forward(x, w, b)

dx, dw, db = affine_backward(dout, cache)

# The error should be around e-10 or less

print('Testing affine_backward function:')

print('dx error: ', rel_error(dx_num, dx))

print('dw error: ', rel_error(dw_num, dw))

print('db error: ', rel_error(db_num, db))
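For reference, here is a hedged sketch of what an affine backward pass can look like, assuming the forward pass computes `out = x_flat·w + b` and caches `(x, w, b)`. The names `affine_forward_sketch` and `affine_backward_sketch` are hypothetical. Instead of the per-element numerical gradient used in the test above, this sketch checks the result with a single directional finite difference, which is enough to catch most sign and transpose mistakes.

```python
import numpy as np

def affine_forward_sketch(x, w, b):
    """out = x_flat @ w + b, caching the inputs (assumed layout)."""
    return x.reshape(x.shape[0], -1) @ w + b, (x, w, b)

def affine_backward_sketch(dout, cache):
    """Gradients of the affine layer given upstream gradient dout (N, M)."""
    x, w, b = cache
    x_flat = x.reshape(x.shape[0], -1)
    dx = (dout @ w.T).reshape(x.shape)   # same shape as x
    dw = x_flat.T @ dout                 # same shape as w
    db = dout.sum(axis=0)                # same shape as b
    return dx, dw, db

rng = np.random.default_rng(231)
x = rng.standard_normal((10, 2, 3))
w = rng.standard_normal((6, 5))
b = rng.standard_normal(5)
dout = rng.standard_normal((10, 5))

_, cache = affine_forward_sketch(x, w, b)
dx, dw, db = affine_backward_sketch(dout, cache)

def scalar_loss(x_, w_, b_):
    """Scalar whose gradient w.r.t. the layer output is exactly dout."""
    return np.sum(affine_forward_sketch(x_, w_, b_)[0] * dout)

# Directional derivative along a random direction (vx, vw, vb).
h = 1e-5
vx = rng.standard_normal(x.shape)
vw = rng.standard_normal(w.shape)
vb = rng.standard_normal(b.shape)
numeric = (scalar_loss(x + h * vx, w + h * vw, b + h * vb)
           - scalar_loss(x - h * vx, w - h * vw, b - h * vb)) / (2 * h)
analytic = np.sum(dx * vx) + np.sum(dw * vw) + np.sum(db * vb)
print(abs(numeric - analytic))
```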

# Test the ReLU forward pass

x = np.linspace(-0.5, 0.5, num=12).reshape(3, 4)

out, _ = relu_forward(x)

correct_out = np.array([[ 0., 0., 0., 0., ],

                        [ 0., 0., 0.04545455, 0.13636364,],

                        [ 0.22727273, 0.31818182, 0.40909091, 0.5, ]])

# Compare your output with ours. The error should be on the order of e-8

print('Testing relu_forward function:')

print('difference: ', rel_error(out, correct_out))

np.random.seed(231)

x = np.random.randn(10, 10)

dout = np.random.randn(*x.shape)

dx_num = eval_numerical_gradient_array(lambda x: relu_forward(x)[0], x, dout)

_, cache = relu_forward(x)

dx = relu_backward(dout, cache)

# The error should be on the order of e-12

print('Testing relu_backward function:')

print('dx error: ', rel_error(dx_num, dx))
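The two ReLU tests above exercise functions the assignment asks the student to write. A minimal sketch, under the hypothetical names `relu_forward_sketch` and `relu_backward_sketch` and assuming the cache is simply the input `x`, could look like this:

```python
import numpy as np

def relu_forward_sketch(x):
    """Element-wise max(0, x); the input is cached for the backward pass."""
    out = np.maximum(0, x)
    return out, x

def relu_backward_sketch(dout, cache):
    """Pass the upstream gradient through only where the input was positive."""
    x = cache
    return dout * (x > 0)

# Same input as the forward test in this post.
x = np.linspace(-0.5, 0.5, num=12).reshape(3, 4)
out, cache = relu_forward_sketch(x)

dout = np.ones_like(x)
dx = relu_backward_sketch(dout, cache)
print(out)
print(dx)
```

The gradient at exactly `x == 0` is set to 0 here; that is a convention of this sketch, since ReLU is not differentiable at that point.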

from cs231n.layer_utils import affine_relu_forward, affine_relu_backward

np.random.seed(231)

x = np.random.randn(2, 3, 4)

w = np.random.randn(12, 10)

b = np.random.randn(10)

dout = np.random.randn(2, 10)

out, cache = affine_relu_forward(x, w, b)

dx, dw, db = affine_relu_backward(dout, cache)

dx_num = eval_numerical_gradient_array(lambda x: affine_relu_forward(x, w, b)[0], x, dout)

dw_num = eval_numerical_gradient_array(lambda w: affine_relu_forward(x, w, b)[0], w, dout)

db_num = eval_numerical_gradient_array(lambda b: affine_relu_forward(x, w, b)[0], b, dout)

# Relative error should be around e-10 or less

print('Testing affine_relu_forward and affine_relu_backward:')

print('dx error: ', rel_error(dx_num, dx))

print('dw error: ', rel_error(dw_num, dw))

print('db error: ', rel_error(db_num, db))

np.random.seed(231)

num_classes, num_inputs = 10, 50

x = 0.001 * np.random.randn(num_inputs, num_classes)

y = np.random.randint(num_classes, size=num_inputs)

dx_num = eval_numerical_gradient(lambda x: svm_loss(x, y)[0], x, verbose=False)

loss, dx = svm_loss(x, y)

# Test svm_loss function. Loss should be around 9 and dx error should be around the order of e-9

print('Testing svm_loss:')

print('loss: ', loss)

print('dx error: ', rel_error(dx_num, dx))

dx_num = eval_numerical_gradient(lambda x: softmax_loss(x, y)[0], x, verbose=False)

loss, dx = softmax_loss(x, y)

# Test softmax_loss function. Loss should be close to 2.3 and dx error should be around e-8

print('\nTesting softmax_loss:')

print('loss: ', loss)

print('dx error: ', rel_error(dx_num, dx))
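The expected values in the comments above (about 9 for the SVM loss and about 2.3 for the softmax loss) follow from the scores being near zero with 10 classes: each of the 9 wrong classes contributes a margin of about 1, and the softmax loss is about ln(10). The sketch below illustrates this with possible implementations of both losses. The names `svm_loss_sketch` and `softmax_loss_sketch` are hypothetical, and the margin of 1 and the averaging over examples are assumptions of the sketch.

```python
import numpy as np

def svm_loss_sketch(x, y):
    """Multiclass hinge loss with margin 1, averaged over N examples."""
    N = x.shape[0]
    correct = x[np.arange(N), y][:, None]         # score of the true class
    margins = np.maximum(0, x - correct + 1.0)
    margins[np.arange(N), y] = 0                  # true class adds no loss
    loss = margins.sum() / N
    dx = (margins > 0).astype(x.dtype)
    dx[np.arange(N), y] -= dx.sum(axis=1)         # minus count of violations
    return loss, dx / N

def softmax_loss_sketch(x, y):
    """Cross-entropy loss on softmax probabilities, averaged over N."""
    N = x.shape[0]
    shifted = x - x.max(axis=1, keepdims=True)    # for numerical stability
    log_probs = shifted - np.log(np.exp(shifted).sum(axis=1, keepdims=True))
    loss = -log_probs[np.arange(N), y].mean()
    dx = np.exp(log_probs)                        # softmax probabilities
    dx[np.arange(N), y] -= 1
    return loss, dx / N

rng = np.random.default_rng(231)
num_classes, num_inputs = 10, 50
x = 0.001 * rng.standard_normal((num_inputs, num_classes))
y = rng.integers(num_classes, size=num_inputs)

svm_loss_value, svm_dx = svm_loss_sketch(x, y)
softmax_loss_value, softmax_dx = softmax_loss_sketch(x, y)
print(svm_loss_value, softmax_loss_value, np.log(num_classes))
```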

np.random.seed(231)

N, D, H, C = 3, 5, 50, 7

X = np.random.randn(N, D)

y = np.random.randint(C, size=N)

std = 1e-3

model = TwoLayerNet(input_dim=D, hidden_dim=H, num_classes=C, weight_scale=std)

print('Testing initialization ... ')

W1_std = abs(model.params['W1'].std() - std)

b1 = model.params['b1']

W2_std = abs(model.params['W2'].std() - std)

b2 = model.params['b2']

assert W1_std < std / 10, 'First layer weights do not seem right'

assert np.all(b1 == 0), 'First layer biases do not seem right'

assert W2_std < std / 10, 'Second layer weights do not seem right'

assert np.all(b2 == 0), 'Second layer biases do not seem right'

print('Testing test-time forward pass ... ')

model.params['W1'] = np.linspace(-0.7, 0.3, num=D*H).reshape(D, H)

model.params['b1'] = np.linspace(-0.1, 0.9, num=H)

model.params['W2'] = np.linspace(-0.3, 0.4, num=H*C).reshape(H, C)

model.params['b2'] = np.linspace(-0.9, 0.1, num=C)

X = np.linspace(-5.5, 4.5, num=N*D).reshape(D, N).T

scores = model.loss(X)

correct_scores = np.asarray(

    [[11.53165108, 12.2917344, 13.05181771, 13.81190102, 14.57198434, 15.33206765, 16.09215096],

     [12.05769098, 12.74614105, 13.43459113, 14.1230412, 14.81149128, 15.49994135, 16.18839143],

     [12.58373087, 13.20054771, 13.81736455, 14.43418138, 15.05099822, 15.66781506, 16.2846319 ]])

scores_diff = np.abs(scores - correct_scores).sum()

assert scores_diff < 1e-6, 'Problem with test-time forward pass'

print('Testing training loss (no regularization)')

y = np.asarray([0, 5, 1])

loss, grads = model.loss(X, y)

correct_loss = 3.4702243556

assert abs(loss - correct_loss) < 1e-10, 'Problem with training-time loss'

model.reg = 1.0

loss, grads = model.loss(X, y)

correct_loss = 26.5948426952

assert abs(loss - correct_loss) < 1e-10, 'Problem with regularization loss'

# Errors should be around e-7 or less

for reg in [0.0, 0.7]:

    print('Running numeric gradient check with reg = ', reg)

    model.reg = reg

    loss, grads = model.loss(X, y)

    for name in sorted(grads):

        f = lambda _: model.loss(X, y)[0]

        grad_num = eval_numerical_gradient(f, model.params[name], verbose=False)

        print('%s relative error: %.2e' % (name, rel_error(grad_num, grads[name])))
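The `f = lambda _: model.loss(X, y)[0]` pattern above ignores its argument, which can look puzzling. It works when the numerical gradient routine perturbs the parameter array in place, so the model sees the perturbed values through `model.params[name]`. The sketch below illustrates the idea with a central difference; `numerical_gradient_sketch`, the `params` dictionary and the toy `loss` are hypothetical stand-ins, not the course's implementation.

```python
import numpy as np

def numerical_gradient_sketch(f, x, h=1e-5):
    """Central-difference gradient of scalar f with respect to array x.

    x is modified in place during evaluation and restored afterwards,
    so f may read x through another reference instead of its argument.
    """
    grad = np.zeros_like(x)
    for idx in np.ndindex(*x.shape):
        old = x[idx]
        x[idx] = old + h
        f_plus = f(x)
        x[idx] = old - h
        f_minus = f(x)
        x[idx] = old                       # restore the original value
        grad[idx] = (f_plus - f_minus) / (2 * h)
    return grad

# Toy "model": parameters live in a dict, the loss reads them from there.
params = {'W': np.array([[1.0, -2.0], [0.5, 3.0]])}

def loss():
    return 0.5 * np.sum(params['W'] ** 2)  # analytic gradient is W itself

f = lambda _: loss()                       # argument is ignored on purpose
grad_num = numerical_gradient_sketch(f, params['W'])
print(grad_num)
```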

model = TwoLayerNet()

solver = None

##############################################################################

# TODO: Use a Solver instance to train a TwoLayerNet that achieves at least #

# 50% accuracy on the validation set. #

##############################################################################

# *****START OF YOUR CODE (DO NOT DELETE/MODIFY THIS LINE)*****

solver = Solver(model, data,

                update_rule='sgd',

                optim_config={

                    'learning_rate': 1e-3,

                },

                lr_decay=0.95,

                num_epochs=10, batch_size=100,

                print_every=100)

solver.train()
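To make the `update_rule='sgd'` and `lr_decay=0.95` settings above concrete, here is a small sketch of the learning rate over the 10 epochs. It assumes the solver multiplies the learning rate by `lr_decay` once at the end of every epoch and that the update rule is plain gradient descent. `sgd_step` is a hypothetical helper, not the course's function.

```python
import numpy as np

def sgd_step(w, dw, learning_rate):
    """Vanilla SGD: move the weights against the gradient."""
    return w - learning_rate * dw

learning_rate = 1e-3
lr_decay = 0.95
num_epochs = 10

lr_history = []
for epoch in range(num_epochs):
    lr_history.append(learning_rate)
    # ... one epoch of sgd_step calls on mini-batches would run here ...
    learning_rate *= lr_decay   # assumed: decay applied once per epoch

print(lr_history[0], lr_history[-1])
```

Under these assumptions the learning rate in the final epoch is `1e-3 * 0.95**9`, roughly 63% of its starting value, so the decay here is mild.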

# Visualize the training loss and the training/validation accuracy

plt.subplot(2, 1, 1)

plt.title('Training loss')

plt.plot(solver.loss_history, 'o')

plt.xlabel('Iteration')

plt.subplot(2, 1, 2)

plt.title('Accuracy')

plt.plot(solver.train_acc_history, '-o', label='train')

plt.plot(solver.val_acc_history, '-o', label='val')

plt.plot([0.5] * len(solver.val_acc_history), 'k--')

plt.xlabel('Epoch')

plt.legend(loc='lower right')

plt.gcf().set_size_inches(15, 12)

plt.show()

np.random.seed(231)

N, D, H1, H2, C = 2, 15, 20, 30, 10

X = np.random.randn(N, D)

y = np.random.randint(C, size=(N,))

for reg in [0, 3.14]:

    print('Running check with reg = ', reg)

    model = FullyConnectedNet([H1, H2], input_dim=D, num_classes=C,

                              reg=reg, weight_scale=5e-2, dtype=np.float64)

    loss, grads = model.loss(X, y)

    print('Initial loss: ', loss)

    # Most of the errors should be on the order of e-7 or smaller.

    # NOTE: It is fine however to see an error for W2 on the order of e-5

    # for the check when reg = 0.0

    for name in sorted(grads):

        f = lambda _: model.loss(X, y)[0]

        grad_num = eval_numerical_gradient(f, model.params[name], verbose=False, h=1e-5)

        print('%s relative error: %.2e' % (name, rel_error(grad_num, grads[name])))

# Overfit 50 training examples with a three-layer network

# Tune the learning rate and the weight initialization scale.

num_train = 50

small_data = {

    'X_train': data['X_train'][:num_train],

    'y_train': data['y_train'][:num_train],

    'X_val': data['X_val'],

    'y_val': data['y_val'],

}

weight_scale = 1e-1 # Experiment with this!

learning_rate = 1e-3 # Experiment with this!

model = FullyConnectedNet([100, 100],

                          weight_scale=weight_scale, dtype=np.float64)

solver = Solver(model, small_data,

                print_every=10, num_epochs=20, batch_size=25,

                update_rule='sgd',

                optim_config={

                    'learning_rate': learning_rate,

                }

                )

solver.train()

plt.plot(solver.loss_history, 'o')

plt.title('Training loss history')

plt.xlabel('Iteration')

plt.ylabel('Training loss')

plt.show()

# Overfit 50 training examples with a five-layer network

# Tune the learning rate and the weight initialization scale.

num_train = 50

small_data = {

    'X_train': data['X_train'][:num_train],

    'y_train': data['y_train'][:num_train],

    'X_val': data['X_val'],

    'y_val': data['y_val'],

}

learning_rate = 5e-4 # Experiment with this!

weight_scale = 1e-1 # Experiment with this!

model = FullyConnectedNet([100, 100, 100, 100],

                          weight_scale=weight_scale, dtype=np.float64)

solver = Solver(model, small_data,

                print_every=10, num_epochs=20, batch_size=25,

                update_rule='sgd',

                optim_config={

                    'learning_rate': learning_rate,

                }

                )

solver.train()

plt.plot(solver.loss_history, 'o')

plt.title('Training loss history')

plt.xlabel('Iteration')

plt.ylabel('Training loss')

plt.show()