DL7-波士顿房价预测问题:TensorFlow 2.0 实践

导入库

import tensorflow as tf

import numpy as np

import matplotlib.pyplot as plt

%matplotlib inline

#显示图像

import pandas as pd

from sklearn.utils import shuffle

from sklearn.preprocessing import scale

print("TensorFlow版本是:",tf.__version__)

TensorFlow版本是: 2.0.0

通过pandas导入数据

df = pd.read_csv("data/boston.csv",header = 0)

#显示数据摘要描述信息

print(df.describe())

CRIM ZN INDUS CHAS NOX RM \

count 506.000000 506.000000 506.000000 506.000000 506.000000 506.000000

mean 3.613524 11.363636 11.136779 0.069170 0.554695 6.284634

std 8.601545 23.322453 6.860353 0.253994 0.115878 0.702617

min 0.006320 0.000000 0.460000 0.000000 0.385000 3.561000

25% 0.082045 0.000000 5.190000 0.000000 0.449000 5.885500

50% 0.256510 0.000000 9.690000 0.000000 0.538000 6.208500

75% 3.677082 12.500000 18.100000 0.000000 0.624000 6.623500

max 88.976200 100.000000 27.740000 1.000000 0.871000 8.780000

AGE DIS RAD TAX PTRATIO LSTAT \

count 506.000000 506.000000 506.000000 506.000000 506.000000 506.000000

mean 68.574901 3.795043 9.549407 408.237154 18.455534 12.653063

std 28.148861 2.105710 8.707259 168.537116 2.164946 7.141062

min 2.900000 1.129600 1.000000 187.000000 12.600000 1.730000

25% 45.025000 2.100175 4.000000 279.000000 17.400000 6.950000

50% 77.500000 3.207450 5.000000 330.000000 19.050000 11.360000

75% 94.075000 5.188425 24.000000 666.000000 20.200000 16.955000

max 100.000000 12.126500 24.000000 711.000000 22.000000 37.970000

MEDV

count 506.000000

mean 22.532806

std 9.197104

min 5.000000

25% 17.025000

50% 21.200000

75% 25.000000

max 50.000000

# Pandas 读取 数据

# 显示前3条数据

df.head(3)

| CRIM | ZN | INDUS | CHAS | NOX | RM | AGE | DIS | RAD | TAX | PTRATIO | LSTAT | MEDV | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | 0.00632 | 18.0 | 2.31 | 0 | 0.538 | 6.575 | 65.2 | 4.0900 | 1 | 296 | 15.3 | 4.98 | 24.0 |

| 1 | 0.02731 | 0.0 | 7.07 | 0 | 0.469 | 6.421 | 78.9 | 4.9671 | 2 | 242 | 17.8 | 9.14 | 21.6 |

| 2 | 0.02729 | 0.0 | 7.07 | 0 | 0.469 | 7.185 | 61.1 | 4.9671 | 2 | 242 | 17.8 | 4.03 | 34.7 |

# 显示后3条数据

df.tail(3)

| CRIM | ZN | INDUS | CHAS | NOX | RM | AGE | DIS | RAD | TAX | PTRATIO | LSTAT | MEDV | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 503 | 0.06076 | 0.0 | 11.93 | 0 | 0.573 | 6.976 | 91.0 | 2.1675 | 1 | 273 | 21.0 | 5.64 | 23.9 |

| 504 | 0.10959 | 0.0 | 11.93 | 0 | 0.573 | 6.794 | 89.3 | 2.3889 | 1 | 273 | 21.0 | 6.48 | 22.0 |

| 505 | 0.04741 | 0.0 | 11.93 | 0 | 0.573 | 6.030 | 80.8 | 2.5050 | 1 | 273 | 21.0 | 7.88 | 11.9 |

# 获取数据集的值

ds = df.values

print(ds.shape)

(506, 13)

ds

array([[6.3200e-03, 1.8000e+01, 2.3100e+00, ..., 1.5300e+01, 4.9800e+00,

2.4000e+01],

[2.7310e-02, 0.0000e+00, 7.0700e+00, ..., 1.7800e+01, 9.1400e+00,

2.1600e+01],

[2.7290e-02, 0.0000e+00, 7.0700e+00, ..., 1.7800e+01, 4.0300e+00,

3.4700e+01],

...,

[6.0760e-02, 0.0000e+00, 1.1930e+01, ..., 2.1000e+01, 5.6400e+00,

2.3900e+01],

[1.0959e-01, 0.0000e+00, 1.1930e+01, ..., 2.1000e+01, 6.4800e+00,

2.2000e+01],

[4.7410e-02, 0.0000e+00, 1.1930e+01, ..., 2.1000e+01, 7.8800e+00,

1.1900e+01]])

划分特征数据和标签数据

x_data = ds[:,:12] #取前12列数据:506*12

y_data = ds[:,12] #取最后1列:506*1

划分数据集:训练集、验证集和测试集

train_num = 300 #训练集的数目

valid_num = 100 #验证集的数目

test_num = len(x_data) - train_num - valid_num # 测试集的数目 = 506 - 训练集 - 验证集

# 训练集划分

x_train = x_data[:train_num]

y_train = y_data[:train_num]

# 验证集划分

x_valid = x_data[train_num : train_num + valid_num]

y_valid = y_data[train_num : train_num + valid_num]

# 测试集划分

x_test = x_data[train_num + valid_num : ]

y_test = y_data[train_num + valid_num : train_num + valid_num + test_num]

特征数据的类型转换

x_train = tf.cast(x_train, dtype=tf.float32)

x_valid = tf.cast(x_valid, dtype=tf.float32)

x_test = tf.cast(x_test, dtype=tf.float32)

定义模型

def model(x, w, b):

return tf.matmul(x,w) + b

创建变量

W = tf.Variable(tf.random.normal([12,1],mean = 0.0, stddev = 1.0, dtype = tf.float32))

B = tf.Variable(tf.zeros(1), dtype = tf.float32)

tf.random_normal(shape, mean=0.0, stddev=1.0, dtype=tf.float32, seed=None, name=None)

shape: 输出张量的形状,必选

mean: 正态分布的均值,默认为0

stddev: 正态分布的标准差,默认为1.0

dtype: 输出的类型,默认为tf.float32

seed: 随机数种子,是一个整数,当设置之后,每次生成的随机数都一样

name: 操作的名称

print(W,"\n\n",B)

设置训练参数

training_epochs = 50

leraning_rate = 0.001

batch_size = 10 # 批量训练一次的样本数

定义均方差损失函数

def loss(x,y,w,b):

err = model(x,w,b) - y

squared_err = tf.square(err) #求平方,得出方差

return tf.reduce_mean(squared_err) # 求均值,得出均方差

定义梯度计算函数

# 计算样本数据[x,y]在参数[w,b]点上的梯度

def grad(x,y,w,b):

with tf.GradientTape() as tape:

loss_ = loss(x,y,w,b)

return tape.gradient(loss_, [w,b]) # 返回梯度向量

选择优化器

optimizer = tf.keras.optimizers.SGD(leraning_rate) # 随机梯度下降优化器,并乘上学习率

迭代训练

loss_list_train = [] # 用于保存训练集loss值的列表

loss_list_valid = [] # 用于保存验证集loss值的列表

total_step = int(train_num / batch_size)

for epoch in range(training_epochs):

for step in range(total_step):

xs = x_train[step * batch_size : (step+1) * batch_size, :]

# 对x_train(300*12)依次存入第0-10,10-20行......数据

ys = y_train[step * batch_size : (step+1) * batch_size] # 对y_train(300*1)

grads = grad(xs,ys,W,B) #计算梯度

optimizer.apply_gradients(zip(grads,[W,B])) # 优化器根据梯度自动调整变量 W和B

loss_train = loss(x_train, y_train, W, B).numpy() # 计算当前轮训练损失

loss_valid = loss(x_valid, y_valid, W, B).numpy() # 计算当前轮验证损失

loss_list_train.append(loss_train)

loss_list_valid.append(loss_valid)

print("epoch={:3d}, train_loss={:.4f}, valid_loss = {:.4f}".format(epoch+1,loss_train,loss_valid))

epoch= 1, train_loss=nan, valid_loss = nan

epoch= 2, train_loss=nan, valid_loss = nan

epoch= 3, train_loss=nan, valid_loss = nan

epoch= 4, train_loss=nan, valid_loss = nan

epoch= 5, train_loss=nan, valid_loss = nan

epoch= 6, train_loss=nan, valid_loss = nan

epoch= 7, train_loss=nan, valid_loss = nan

epoch= 8, train_loss=nan, valid_loss = nan

epoch= 9, train_loss=nan, valid_loss = nan

epoch= 10, train_loss=nan, valid_loss = nan

epoch= 11, train_loss=nan, valid_loss = nan

epoch= 12, train_loss=nan, valid_loss = nan

epoch= 13, train_loss=nan, valid_loss = nan

epoch= 14, train_loss=nan, valid_loss = nan

epoch= 15, train_loss=nan, valid_loss = nan

epoch= 16, train_loss=nan, valid_loss = nan

epoch= 17, train_loss=nan, valid_loss = nan

epoch= 18, train_loss=nan, valid_loss = nan

epoch= 19, train_loss=nan, valid_loss = nan

epoch= 20, train_loss=nan, valid_loss = nan

epoch= 21, train_loss=nan, valid_loss = nan

epoch= 22, train_loss=nan, valid_loss = nan

epoch= 23, train_loss=nan, valid_loss = nan

epoch= 24, train_loss=nan, valid_loss = nan

epoch= 25, train_loss=nan, valid_loss = nan

epoch= 26, train_loss=nan, valid_loss = nan

epoch= 27, train_loss=nan, valid_loss = nan

epoch= 28, train_loss=nan, valid_loss = nan

epoch= 29, train_loss=nan, valid_loss = nan

epoch= 30, train_loss=nan, valid_loss = nan

epoch= 31, train_loss=nan, valid_loss = nan

epoch= 32, train_loss=nan, valid_loss = nan

epoch= 33, train_loss=nan, valid_loss = nan

epoch= 34, train_loss=nan, valid_loss = nan

epoch= 35, train_loss=nan, valid_loss = nan

epoch= 36, train_loss=nan, valid_loss = nan

epoch= 37, train_loss=nan, valid_loss = nan

epoch= 38, train_loss=nan, valid_loss = nan

epoch= 39, train_loss=nan, valid_loss = nan

epoch= 40, train_loss=nan, valid_loss = nan

epoch= 41, train_loss=nan, valid_loss = nan

epoch= 42, train_loss=nan, valid_loss = nan

epoch= 43, train_loss=nan, valid_loss = nan

epoch= 44, train_loss=nan, valid_loss = nan

epoch= 45, train_loss=nan, valid_loss = nan

epoch= 46, train_loss=nan, valid_loss = nan

epoch= 47, train_loss=nan, valid_loss = nan

epoch= 48, train_loss=nan, valid_loss = nan

epoch= 49, train_loss=nan, valid_loss = nan

epoch= 50, train_loss=nan, valid_loss = nan

版本2:特征数据归一化

import tensorflow as tf

import numpy as np

import matplotlib.pyplot as plt

%matplotlib inline

#显示图像

import pandas as pd

from sklearn.utils import shuffle

from sklearn.preprocessing import scale

df = pd.read_csv("data/boston.csv",header = 0)

# 获取数据集的值

ds = df.values

# 划分特征数据和标签数据

x_data = ds[:,:12] #取前12列数据:506*12

y_data = ds[:,12] #取最后1列:506*1

# 特征数据归一化

# (特征值-特征值最小值) / (特征值最大值-特征值最小值)

# 对第i列的所有数据进行归一化

for i in range(12):

x_data[:,i] = (x_data[:,i] - x_data[:,i].min()) / (x_data[:,i].max() - x_data[:,i].min())

# 划分数据集:训练集、验证集和测试集

train_num = 300 #训练集的数目

valid_num = 100 #验证集的数目

test_num = len(x_data) - train_num - valid_num # 测试集的数目 = 506 - 训练集 - 验证集

# 训练集划分

x_train = x_data[:train_num]

y_train = y_data[:train_num]

# 验证集划分

x_valid = x_data[train_num : train_num + valid_num]

y_valid = y_data[train_num : train_num + valid_num]

# 测试集划分

x_test = x_data[train_num + valid_num : ]

y_test = y_data[train_num + valid_num : train_num + valid_num + test_num]

# 特征数据的类型转换

x_train = tf.cast(x_train, dtype=tf.float32)

x_valid = tf.cast(x_valid, dtype=tf.float32)

x_test = tf.cast(x_test, dtype=tf.float32)

# 定义模型

def model(x, w, b):

return tf.matmul(x,w) + b

# 创建变量

W = tf.Variable(tf.random.normal([12,1],mean = 0.0, stddev = 1.0, dtype = tf.float32))

B = tf.Variable(tf.zeros(1), dtype = tf.float32)

# 设置训练参数

training_epochs = 50

leraning_rate = 0.001

batch_size = 10 # 批量训练一次的样本数

# 定义均方差损失函数

def loss(x,y,w,b):

err = model(x,w,b) - y

squared_err = tf.square(err) #求平方,得出方差

return tf.reduce_mean(squared_err) # 求均值,得出均方差

# 定义梯度计算函数

# 计算样本数据[x,y]在参数[w,b]点上的梯度

def grad(x,y,w,b):

with tf.GradientTape() as tape:

loss_ = loss(x,y,w,b)

return tape.gradient(loss_, [w,b]) # 返回梯度向量

loss_list_train = [] # 用于保存训练集loss值的列表

loss_list_valid = [] # 用于保存验证集loss值的列表

total_step = int(train_num / batch_size)

# 选择优化器

optimizer = tf.keras.optimizers.SGD(leraning_rate) # 随机梯度下降优化器,并乘上学习率

for epoch in range(training_epochs):

for step in range(total_step):

xs = x_train[step * batch_size : (step+1) * batch_size, :]

# 对x_train(300*12)依次存入第0-10,10-20行......数据

ys = y_train[step * batch_size : (step+1) * batch_size] # 对y_train(300*1)

grads = grad(xs,ys,W,B) #计算梯度

optimizer.apply_gradients(zip(grads,[W,B])) # 优化器根据梯度自动调整变量 W和B

loss_train = loss(x_train, y_train, W, B).numpy() # 计算当前轮训练损失

loss_valid = loss(x_valid, y_valid, W, B).numpy() # 计算当前轮验证损失

loss_list_train.append(loss_train)

loss_list_valid.append(loss_valid)

print("epoch={:3d}, train_loss={:.4f}, valid_loss = {:.4f}".format(epoch+1,loss_train,loss_valid))

epoch= 1, train_loss=515.6136, valid_loss = 333.1685

epoch= 2, train_loss=408.4633, valid_loss = 247.1792

epoch= 3, train_loss=328.1588, valid_loss = 188.2397

epoch= 4, train_loss=267.9438, valid_loss = 148.7497

epoch= 5, train_loss=222.7648, valid_loss = 123.1357

epoch= 6, train_loss=188.8417, valid_loss = 107.3244

epoch= 7, train_loss=163.3470, valid_loss = 98.3509

epoch= 8, train_loss=144.1651, valid_loss = 94.0675

epoch= 9, train_loss=129.7130, valid_loss = 92.9281

epoch= 10, train_loss=118.8064, valid_loss = 93.8281

epoch= 11, train_loss=110.5585, valid_loss = 95.9854

epoch= 12, train_loss=104.3058, valid_loss = 98.8533

epoch= 13, train_loss=99.5513, valid_loss = 102.0555

epoch= 14, train_loss=95.9230, valid_loss = 105.3386

epoch= 15, train_loss=93.1419, valid_loss = 108.5374

epoch= 16, train_loss=90.9991, valid_loss = 111.5489

epoch= 17, train_loss=89.3381, valid_loss = 114.3139

epoch= 18, train_loss=88.0412, valid_loss = 116.8035

epoch= 19, train_loss=87.0202, valid_loss = 119.0092

epoch= 20, train_loss=86.2090, valid_loss = 120.9360

epoch= 21, train_loss=85.5577, valid_loss = 122.5972

epoch= 22, train_loss=85.0289, valid_loss = 124.0113

epoch= 23, train_loss=84.5942, valid_loss = 125.1992

epoch= 24, train_loss=84.2325, valid_loss = 126.1827

epoch= 25, train_loss=83.9277, valid_loss = 126.9833

epoch= 26, train_loss=83.6676, valid_loss = 127.6219

epoch= 27, train_loss=83.4428, valid_loss = 128.1178

epoch= 28, train_loss=83.2465, valid_loss = 128.4888

epoch= 29, train_loss=83.0732, valid_loss = 128.7511

epoch= 30, train_loss=82.9189, valid_loss = 128.9193

epoch= 31, train_loss=82.7803, valid_loss = 129.0065

epoch= 32, train_loss=82.6550, valid_loss = 129.0242

epoch= 33, train_loss=82.5412, valid_loss = 128.9827

epoch= 34, train_loss=82.4372, valid_loss = 128.8908

epoch= 35, train_loss=82.3420, valid_loss = 128.7564

epoch= 36, train_loss=82.2546, valid_loss = 128.5863

epoch= 37, train_loss=82.1743, valid_loss = 128.3865

epoch= 38, train_loss=82.1004, valid_loss = 128.1621

epoch= 39, train_loss=82.0323, valid_loss = 127.9175

epoch= 40, train_loss=81.9698, valid_loss = 127.6566

epoch= 41, train_loss=81.9125, valid_loss = 127.3827

epoch= 42, train_loss=81.8599, valid_loss = 127.0987

epoch= 43, train_loss=81.8119, valid_loss = 126.8069

epoch= 44, train_loss=81.7683, valid_loss = 126.5096

epoch= 45, train_loss=81.7288, valid_loss = 126.2083

epoch= 46, train_loss=81.6932, valid_loss = 125.9047

epoch= 47, train_loss=81.6614, valid_loss = 125.6000

epoch= 48, train_loss=81.6332, valid_loss = 125.2954

epoch= 49, train_loss=81.6085, valid_loss = 124.9918

epoch= 50, train_loss=81.5871, valid_loss = 124.6898

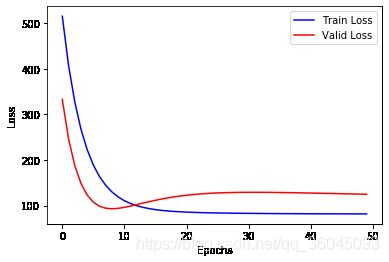

plt.xlabel("Epochs")

plt.ylabel("Loss")

plt.plot(loss_list_train, 'blue', label = "Train Loss")

plt.plot(loss_list_valid,'red', label = "Valid Loss")

plt.legend(loc = 1)# 通过参数loc指定图例位置

查看测试集损失

print("Test_loss:{:.4f}".format(loss(x_test, y_test, W, B).numpy()))

Test_loss:198.1025

应用模型

test_house_id = np.random.randint(0, test_num)

y = y_test[test_house_id]

y_pred = model(x_test, W, B)[test_house_id]

y_predict = tf.reshape(y_pred,()).numpy()

print("House id:", test_house_id, "\nActual value:", y, "\nPredicted value:", y_predict)

House id: 83

Actual value: 21.8

Predicted value: 26.273846