TensorFlow: IMDB text classification

Reference (official tutorial): https://www.tensorflow.org/tutorials/keras/basic_text_classification

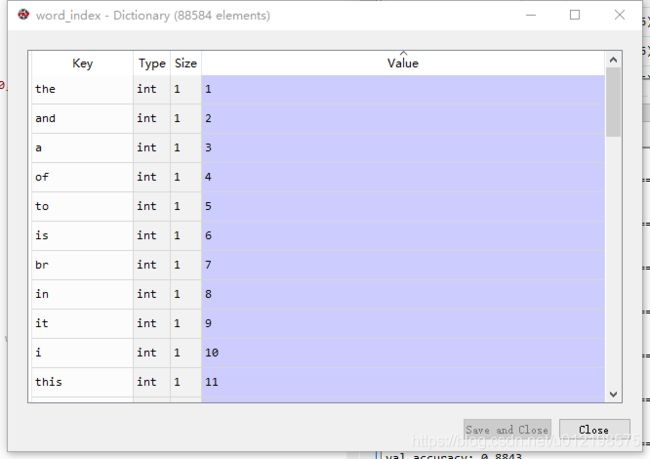

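This version differs from the tutorial code in one way: it sets index_from=0, so word indices are not offset. The word_index dictionary itself starts at 1, so the integers in x_train can be looked up in it directly, without the +3 shift.

Note that load_data still applies its defaults start_char=1 and oov_char=2. As a result, every review begins with 1, and words outside the top 10,000 become 2. When decoded, these markers show up as the two most frequent words in word_index.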
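The sketch below makes the effect of index_from concrete. It is a simplified pure-Python version of the re-indexing that load_data applies to each review. The helper name encode_like_load_data and the toy rank list are hypothetical. The sketch ignores skip_top and maxlen and mirrors only the start_char / index_from / oov_char logic.

```python
def encode_like_load_data(ranks, num_words=10000, start_char=1, oov_char=2, index_from=3):
    """Simplified sketch of how keras.datasets.imdb.load_data re-indexes one review.

    `ranks` are raw word_index values (1 = most frequent word).
    skip_top and maxlen are ignored (hypothetical helper, not a Keras API).
    """
    # Prepend the start marker and shift every word rank by index_from
    seq = [start_char] + [r + index_from for r in ranks]
    # Any index outside the kept vocabulary becomes the out-of-vocabulary marker
    return [w if w < num_words else oov_char for w in seq]


toy_ranks = [5, 12000, 3]  # hypothetical review: ranks 5, 12000 (a rare word), 3
print(encode_like_load_data(toy_ranks, index_from=3))  # [1, 8, 2, 6]
print(encode_like_load_data(toy_ranks, index_from=0))  # [1, 5, 2, 3]
```

With index_from=0 the word ranks pass through unchanged. The markers 1 and 2, however, can no longer be told apart from the rank-1 and rank-2 words.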

```python
import tensorflow as tf
from tensorflow import keras
import numpy as np

imdb = keras.datasets.imdb

# index_from=0: word indices are not shifted and match the values in word_index
(x_train, y_train), (x_test, y_test) = imdb.load_data(num_words=10000, index_from=0)

print("train len >> %d, test len >> %d" % (len(x_train), len(x_test)))

print(x_train[0])  # each integer represents a specific word in the dictionary

# Convert the integers back to words
# A dictionary mapping words to an integer index
word_index = imdb.get_word_index()

# The first indices are reserved (only needed with the default index_from=3, as in the tutorial)
# word_index = {k: (v + 3) for k, v in word_index.items()}
# word_index["<PAD>"] = 0
# word_index["<START>"] = 1
# word_index["<UNK>"] = 2  # unknown
# word_index["<UNUSED>"] = 3

# Invert the mapping: integer index -> word
reverse_word_index = dict([(value, key) for (key, value) in word_index.items()])

def decode_review(text):
    return ' '.join([reverse_word_index.get(i, '?') for i in text])

print(decode_review(x_train[0]))
```
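For comparison, the commented-out lines follow the official tutorial. That version keeps the default index_from=3 and shifts word_index by 3, which frees indices 0 to 3 for reserved tokens. Below is a minimal pure-Python sketch on a toy dictionary. Note that toy_word_index and decode_shifted are hypothetical; they are not the real IMDB vocabulary or a Keras API.

```python
# Toy stand-in for imdb.get_word_index(): word -> rank (1 = most frequent)
toy_word_index = {"the": 1, "movie": 2, "was": 3, "great": 4}

# Tutorial-style mapping for index_from=3: shift ranks by 3, reserve 0..3
shifted = {word: rank + 3 for word, rank in toy_word_index.items()}
shifted["<PAD>"] = 0
shifted["<START>"] = 1
shifted["<UNK>"] = 2  # unknown
shifted["<UNUSED>"] = 3

# Invert the mapping: integer index -> word
reverse_shifted = {index: word for word, index in shifted.items()}

def decode_shifted(indices):
    return " ".join(reverse_shifted.get(i, "?") for i in indices)

# A toy review encoded with start_char=1 and index_from=3, then padded with 0
print(decode_shifted([1, 4, 5, 6, 2, 0]))  # <START> the movie was <UNK> <PAD>
```

Here the start, unknown and padding markers decode unambiguously, because no real word shares their indices.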

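```python
# Pad reviews shorter than 256 tokens with 0 at the end (padding='post');
# longer reviews are truncated, by default from the front (truncating='pre')
x_train = keras.preprocessing.sequence.pad_sequences(x_train,
                                                     value=0,
                                                     padding='post',
                                                     maxlen=256)

x_test = keras.preprocessing.sequence.pad_sequences(x_test,
                                                    value=0,
                                                    padding='post',
                                                    maxlen=256)
```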
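With padding='post', pad_sequences appends the fill value at the end. Its truncating argument, however, defaults to 'pre', so reviews longer than maxlen lose their beginning, not their end. The NumPy sketch below reproduces that behavior. pad_post is a hypothetical helper, not the Keras implementation.

```python
import numpy as np

def pad_post(sequences, maxlen, value=0, truncating="pre"):
    """Sketch of pad_sequences(..., padding='post') for integer sequences."""
    out = np.full((len(sequences), maxlen), value, dtype="int32")
    for i, seq in enumerate(sequences):
        if len(seq) > maxlen:
            # 'pre' keeps the last maxlen tokens, 'post' keeps the first maxlen
            seq = seq[-maxlen:] if truncating == "pre" else seq[:maxlen]
        out[i, :len(seq)] = seq  # values first, fill value after them
    return out

print(pad_post([[1, 2, 3], [4, 5, 6, 7, 8]], maxlen=4))
# [[1 2 3 0]
#  [5 6 7 8]]
```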

```python
# input shape is the vocabulary count used for the movie reviews (10,000 words)
vocab_size = 10000

# Build the model as a sequential stack of layers
model = keras.Sequential()
model.add(keras.layers.Embedding(vocab_size, 16))
model.add(keras.layers.GlobalAveragePooling1D())
model.add(keras.layers.Dense(16, activation=tf.nn.relu))
model.add(keras.layers.Dense(1, activation=tf.nn.sigmoid))

model.summary()

model.compile(optimizer=tf.keras.optimizers.Adam(),
              loss='binary_crossentropy',
              metrics=['accuracy'])
```
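model.summary() reports the number of trainable parameters in each layer. Assuming the layer sizes above, those counts can be checked by hand with some simple arithmetic:

```python
vocab_size, embed_dim, hidden = 10000, 16, 16

embedding_params = vocab_size * embed_dim    # one 16-d vector per word: 160,000
pooling_params = 0                           # GlobalAveragePooling1D has no weights
dense1_params = embed_dim * hidden + hidden  # weights + biases: 272
dense2_params = hidden * 1 + 1               # single sigmoid unit: 17

total = embedding_params + pooling_params + dense1_params + dense2_params
print(total)  # 160289
```

Almost all of the parameters sit in the embedding table, which is why vocab_size dominates the model size.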

```python
# Hold out the first 10,000 training samples as a validation set
# and train on the remaining 15,000
x_val = x_train[:10000]
partial_x_train = x_train[10000:]

y_val = y_train[:10000]
partial_y_train = y_train[10000:]

history = model.fit(partial_x_train,
                    partial_y_train,
                    epochs=40,
                    batch_size=512,
                    validation_data=(x_val, y_val),
                    verbose=1)

results = model.evaluate(x_test, y_test)
print(results)  # [test loss, test accuracy]
```
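model.evaluate returns [loss, accuracy] for the loss and metric passed to compile. The NumPy sketch below shows what those two numbers measure for a sigmoid output. The 1e-7 clipping and the 0.5 threshold follow the usual Keras defaults. The code is an illustration, not the Keras source, and the sample predictions are hypothetical.

```python
import numpy as np

def binary_crossentropy(y_true, y_prob, eps=1e-7):
    """Mean binary cross-entropy; probabilities are clipped to avoid log(0)."""
    y_true = np.asarray(y_true, dtype="float64")
    p = np.clip(np.asarray(y_prob, dtype="float64"), eps, 1 - eps)
    return float(np.mean(-(y_true * np.log(p) + (1 - y_true) * np.log(1 - p))))

def binary_accuracy(y_true, y_prob, threshold=0.5):
    """Fraction of reviews whose thresholded prediction matches the label."""
    y_pred = (np.asarray(y_prob) > threshold).astype(int)
    return float(np.mean(y_pred == np.asarray(y_true)))

y_true = [1, 0, 1, 0]          # 1 = positive review, 0 = negative
y_prob = [0.9, 0.2, 0.6, 0.7]  # hypothetical sigmoid outputs
print(binary_accuracy(y_true, y_prob))  # 0.75
print(binary_crossentropy(y_true, y_prob))
```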

```python
# model.fit() returns a History object; its .history attribute is a dict
# holding every metric recorded during training
history_dict = history.history
print(history_dict.keys())  # dict_keys(['loss', 'val_loss', 'val_accuracy', 'accuracy'])

import matplotlib.pyplot as plt

acc = history_dict['accuracy']
val_acc = history_dict['val_accuracy']
loss = history_dict['loss']
val_loss = history_dict['val_loss']

epochs = range(1, len(acc) + 1)

# "bo" is for "blue dot"
plt.plot(epochs, loss, 'bo', label='Training loss')
# "b" is for "solid blue line"
plt.plot(epochs, val_loss, 'b', label='Validation loss')
plt.title('Training and validation loss')
plt.xlabel('Epochs')
plt.ylabel('Loss')
plt.legend()
plt.show()

plt.clf()  # clear figure

plt.plot(epochs, acc, 'bo', label='Training acc')
plt.plot(epochs, val_acc, 'b', label='Validation acc')
plt.title('Training and validation accuracy')
plt.xlabel('Epochs')
plt.ylabel('Accuracy')
plt.legend()
plt.show()
```

The plots show overfitting. Validation accuracy peaks at around 15 to 20 epochs, and the model performs better on the training data than on data it has never seen.
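One way to locate that peak programmatically is to scan the history dictionary for the epoch with the best validation value. The pure-Python sketch below does this. The helper best_epoch and the toy history values are hypothetical, not real training output.

```python
def best_epoch(history_dict, monitor="val_loss", mode="min"):
    """Return the 1-based epoch number with the best value of `monitor`."""
    values = history_dict[monitor]
    pick = min if mode == "min" else max
    # list.index returns the first epoch that reaches the best value
    return values.index(pick(values)) + 1

# Toy history for illustration only
toy_history = {
    "val_loss": [0.69, 0.55, 0.41, 0.33, 0.31, 0.32, 0.35],
    "val_accuracy": [0.60, 0.75, 0.84, 0.87, 0.88, 0.88, 0.87],
}
print(best_epoch(toy_history))                              # 5
print(best_epoch(toy_history, "val_accuracy", mode="max"))  # 5
```

Keras can also stop training automatically instead of running a fixed epochs=40. Passing `callbacks=[keras.callbacks.EarlyStopping(monitor='val_loss', patience=3, restore_best_weights=True)]` to model.fit halts training once validation loss stops improving. The patience value of 3 is an arbitrary choice.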