Setting up a three-node high-availability Flink 1.10.0 cluster on YARN

JobManager high availability

The JobManager is responsible for job scheduling and resource management.

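By default, a Flink cluster has only one JobManager instance. This is a single point of failure: when the JobManager goes down, no new jobs can be submitted and the jobs that are already running fail as well.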

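Configuring JobManager high availability lets the cluster recover from a JobManager failure, which removes the single point of failure. It can be configured for both standalone clusters and YARN clusters.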

This article covers the JobManager high-availability configuration on a YARN cluster.

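Hadoop must be installed beforehand. The setup used in this article is a three-node Hadoop high-availability cluster. See this article for the configuration details.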

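When a high-availability cluster runs in YARN mode, only one JobManager (the ApplicationMaster) runs, and YARN restarts it when it fails. (This differs from standalone high availability, where several JobManagers are configured: one is the leader and the rest are standbys.)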

Configuration

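Edit yarn-site.xml and add the following property (the default value is 2):

<property>
  <name>yarn.resourcemanager.am.max-attempts</name>
  <value>4</value>
  <description>The maximum number of application master execution attempts.</description>
</property>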

Edit flink-conf.yaml:

# Required
high-availability: zookeeper

# Required
high-availability.zookeeper.quorum: flink1:2181,flink2:2181,flink3:2181

# Recommended
high-availability.zookeeper.path.root: /flink

# Recommended; each cluster should use a different name
high-availability.cluster-id: /default_ns

# Required; persistent storage directory for JobManager metadata
high-availability.storageDir: hdfs:///flink/recovery

# Required; must not be greater than yarn.resourcemanager.am.max-attempts
yarn.application-attempts: 4

Sync the configuration above to the other two machines, flink2 and flink3.
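
The constraint between the two files (yarn.application-attempts must not exceed yarn.resourcemanager.am.max-attempts) is easy to get wrong when the files are edited separately. The following is a minimal sketch of a sanity check, not part of Flink or Hadoop. The helper names (`parse_flink_conf`, `yarn_max_attempts`, `check_ha_config`) are hypothetical, the configuration is embedded as sample strings rather than read from disk, and the parser only handles flat `key: value` lines.

```python
import xml.etree.ElementTree as ET

# Sample contents mirroring the settings above; in practice these would be
# read from yarn-site.xml and flink-conf.yaml (paths depend on your install).
YARN_SITE = """<configuration>
  <property>
    <name>yarn.resourcemanager.am.max-attempts</name>
    <value>4</value>
  </property>
</configuration>"""

FLINK_CONF = """
high-availability: zookeeper
high-availability.zookeeper.quorum: flink1:2181,flink2:2181,flink3:2181
high-availability.zookeeper.path.root: /flink
high-availability.cluster-id: /default_ns
high-availability.storageDir: hdfs:///flink/recovery
yarn.application-attempts: 4
"""

# Keys this article marks as required.
REQUIRED_KEYS = (
    "high-availability",
    "high-availability.zookeeper.quorum",
    "high-availability.storageDir",
    "yarn.application-attempts",
)


def parse_flink_conf(text):
    """Parse flat 'key: value' lines, skipping blank lines and '#' comments."""
    conf = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        key, sep, value = line.partition(": ")
        if sep:
            conf[key.strip()] = value.strip()
    return conf


def yarn_max_attempts(xml_text, default=2):
    """Return yarn.resourcemanager.am.max-attempts, or the default of 2."""
    root = ET.fromstring(xml_text)
    for prop in root.iter("property"):
        if prop.findtext("name") == "yarn.resourcemanager.am.max-attempts":
            return int(prop.findtext("value"))
    return default


def check_ha_config(flink_conf_text, yarn_site_text):
    """Return a list of problems; an empty list means the check passed."""
    conf = parse_flink_conf(flink_conf_text)
    problems = [f"missing required key: {k}" for k in REQUIRED_KEYS if k not in conf]
    if "yarn.application-attempts" in conf:
        attempts = int(conf["yarn.application-attempts"])
        limit = yarn_max_attempts(yarn_site_text)
        if attempts > limit:
            problems.append(
                f"yarn.application-attempts ({attempts}) exceeds "
                f"yarn.resourcemanager.am.max-attempts ({limit})"
            )
    return problems


print(check_ha_config(FLINK_CONF, YARN_SITE))
```

Running the same check on each of the three machines after syncing would also catch a node whose files were not updated.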

Startup

Start the ZooKeeper service on the nodes where ZooKeeper is configured.

Start Hadoop, including YARN and its ResourceManager (for convenience, simply run start-all.sh).

Start Flink (here it is started as a YARN session in client mode, with the client attached in the foreground).

[root@flink1 bin]# ./yarn-session.sh -n 2

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/opt/flink-1.10.0/lib/slf4j-log4j12-1.7.15.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/opt/hadoop/hadoop-2.8.5/share/hadoop/common/lib/slf4j-log4j12-1.7.10.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.slf4j.impl.Log4jLoggerFactory]

2020-05-20 07:28:01,768 INFO org.apache.flink.configuration.GlobalConfiguration - Loading configuration property: jobmanager.rpc.address, flink1

2020-05-20 07:28:01,776 INFO org.apache.flink.configuration.GlobalConfiguration - Loading configuration property: jobmanager.rpc.port, 6123

2020-05-20 07:28:01,776 INFO org.apache.flink.configuration.GlobalConfiguration - Loading configuration property: jobmanager.heap.size, 1024m

2020-05-20 07:28:01,776 INFO org.apache.flink.configuration.GlobalConfiguration - Loading configuration property: taskmanager.memory.process.size, 1568m

2020-05-20 07:28:01,776 INFO org.apache.flink.configuration.GlobalConfiguration - Loading configuration property: taskmanager.numberOfTaskSlots, 2

2020-05-20 07:28:01,776 INFO org.apache.flink.configuration.GlobalConfiguration - Loading configuration property: parallelism.default, 4

2020-05-20 07:28:01,776 INFO org.apache.flink.configuration.GlobalConfiguration - Loading configuration property: high-availability, zookeeper

2020-05-20 07:28:01,777 INFO org.apache.flink.configuration.GlobalConfiguration - Loading configuration property: high-availability.storageDir, hdfs:///flink/recovery

2020-05-20 07:28:01,777 INFO org.apache.flink.configuration.GlobalConfiguration - Loading configuration property: high-availability.zookeeper.quorum, flink1:2181,flink2:2181,flink3:2181

2020-05-20 07:28:01,777 INFO org.apache.flink.configuration.GlobalConfiguration - Loading configuration property: high-availability.zookeeper.path.root, /flink

2020-05-20 07:28:01,777 INFO org.apache.flink.configuration.GlobalConfiguration - Loading configuration property: yarn.application-attempts, 4

2020-05-20 07:28:01,777 INFO org.apache.flink.configuration.GlobalConfiguration - Loading configuration property: high-availability.cluster-id, /default_ns

2020-05-20 07:28:01,777 INFO org.apache.flink.configuration.GlobalConfiguration - Loading configuration property: jobmanager.execution.failover-strategy, region

2020-05-20 07:28:01,777 INFO org.apache.flink.configuration.GlobalConfiguration - Loading configuration property: io.tmp.dirs, /root/flink/tmp

2020-05-20 07:28:02,527 WARN org.apache.hadoop.util.NativeCodeLoader - Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

2020-05-20 07:28:02,654 INFO org.apache.flink.runtime.security.modules.HadoopModule - Hadoop user set to root (auth:SIMPLE)

2020-05-20 07:28:02,682 INFO org.apache.flink.runtime.security.modules.JaasModule - Jaas file will be created as /root/flink/tmp/jaas-3514577917123016855.conf.

2020-05-20 07:28:02,696 WARN org.apache.flink.yarn.cli.FlinkYarnSessionCli - The configuration directory ('/opt/flink-1.10.0/conf') already contains a LOG4J config file.If you want to use logback, then please delete or rename the log configuration file.

2020-05-20 07:28:03,037 INFO org.apache.flink.runtime.clusterframework.TaskExecutorProcessUtils - The derived from fraction jvm overhead memory (156.800mb (164416719 bytes)) is less than its min value 192.000mb (201326592 bytes), min value will be used instead

2020-05-20 07:28:03,167 WARN org.apache.flink.yarn.YarnClusterDescriptor - Neither the HADOOP_CONF_DIR nor the YARN_CONF_DIR environment variable is set. The Flink YARN Client needs one of these to be set to properly load the Hadoop configuration for accessing YARN.

2020-05-20 07:28:03,201 INFO org.apache.flink.yarn.YarnClusterDescriptor - Cluster specification: ClusterSpecification{masterMemoryMB=1024, taskManagerMemoryMB=1568, slotsPerTaskManager=2}

2020-05-20 07:28:07,946 INFO org.apache.flink.yarn.YarnClusterDescriptor - Submitting application master application_1589930391605_0002

2020-05-20 07:28:08,179 INFO org.apache.hadoop.yarn.client.api.impl.YarnClientImpl - Submitted application application_1589930391605_0002

2020-05-20 07:28:08,179 INFO org.apache.flink.yarn.YarnClusterDescriptor - Waiting for the cluster to be allocated

2020-05-20 07:28:08,181 INFO org.apache.flink.yarn.YarnClusterDescriptor - Deploying cluster, current state ACCEPTED

2020-05-20 07:28:15,717 INFO org.apache.flink.yarn.YarnClusterDescriptor - YARN application has been deployed successfully.

2020-05-20 07:28:15,717 INFO org.apache.flink.yarn.YarnClusterDescriptor - Found Web Interface flink1:37120 of application 'application_1589930391605_0002'.

2020-05-20 07:28:15,776 INFO org.apache.flink.shaded.curator.org.apache.curator.framework.imps.CuratorFrameworkImpl - Starting

2020-05-20 07:28:15,782 INFO org.apache.flink.shaded.zookeeper.org.apache.zookeeper.ZooKeeper - Client environment:zookeeper.version=3.4.10-39d3a4f269333c922ed3db283be479f9deacaa0f, built on 03/23/2017 10:13 GMT

2020-05-20 07:28:15,782 INFO org.apache.flink.shaded.zookeeper.org.apache.zookeeper.ZooKeeper - Client environment:host.name=flink1

2020-05-20 07:28:15,782 INFO org.apache.flink.shaded.zookeeper.org.apache.zookeeper.ZooKeeper - Client environment:java.version=1.8.0_191

2020-05-20 07:28:15,782 INFO org.apache.flink.shaded.zookeeper.org.apache.zookeeper.ZooKeeper - Client environment:java.vendor=Oracle Corporation

2020-05-20 07:28:15,782 INFO org.apache.flink.shaded.zookeeper.org.apache.zookeeper.ZooKeeper - Client environment:java.home=/opt/jdk1.8.0_191/jre

2020-05-20 07:28:15,782 INFO org.apache.flink.shaded.zookeeper.org.apache.zookeeper.ZooKeeper - Client environment:java.class.path=/opt/flink-1.10.0/lib/flink-table_2.12-1.10.0.jar:/opt/flink-1.10.0/lib/flink-table-blink_2.12-1.10.0.jar:/opt/flink-1.10.0/lib/log4j-1.2.17.jar:/opt/flink-1.10.0/lib/slf4j-log4j12-1.7.15.jar:/opt/flink-1.10.0/lib/flink-dist_2.12-1.10.0.jar:/opt/hadoop/hadoop-2.8.5/etc/hadoop:/opt/hadoop/hadoop-2.8.5/share/hadoop/common/lib/jersey-server-1.9.jar:/opt/hadoop/hadoop-2.8.5/share/hadoop/common/lib/commons-compress-1.4.1.jar:/opt/hadoop/hadoop-2.8.5/share/hadoop/common/lib/zookeeper-3.4.6.jar:/opt/hadoop/hadoop-2.8.5/share/hadoop/common/lib/hadoop-auth-2.8.5.jar:/opt/hadoop/hadoop-2.8.5/share/hadoop/common/lib/commons-collections-3.2.2.jar:/opt/hadoop/hadoop-2.8.5/share/hadoop/common/lib/xmlenc-0.52.jar:/opt/hadoop/hadoop-2.8.5/share/hadoop/common/lib/jsr305-3.0.0.jar:/opt/hadoop/hadoop-2.8.5/share/hadoop/common/lib/protobuf-java-2.5.0.jar:/opt/hadoop/hadoop-2.8.5/share/hadoop/common/lib/servlet-api-2.5.jar:/opt/hadoop/hadoop-2.8.5/share/hadoop/common/lib/jaxb-api-2.2.2.jar:/opt/hadoop/hadoop-2.8.5/share/hadoop/common/lib/httpclient-4.5.2.jar:/opt/hadoop/hadoop-2.8.5/share/hadoop/common/lib/paranamer-2.3.jar:/opt/hadoop/hadoop-2.8.5/share/hadoop/common/lib/jsch-0.1.54.jar:/opt/hadoop/hadoop-2.8.5/share/hadoop/common/lib/stax-api-1.0-2.jar:/opt/hadoop/hadoop-2.8.5/share/hadoop/common/lib/hadoop-annotations-2.8.5.jar:/opt/hadoop/hadoop-2.8.5/share/hadoop/common/lib/jettison-1.1.jar:/opt/hadoop/hadoop-2.8.5/share/hadoop/common/lib/jersey-core-1.9.jar:/opt/hadoop/hadoop-2.8.5/share/hadoop/common/lib/commons-logging-1.1.3.jar:/opt/hadoop/hadoop-2.8.5/share/hadoop/common/lib/slf4j-api-1.7.10.jar:/opt/hadoop/hadoop-2.8.5/share/hadoop/common/lib/curator-framework-2.7.1.jar:/opt/hadoop/hadoop-2.8.5/share/hadoop/common/lib/jetty-util-6.1.26.jar:/opt/hadoop/hadoop-2.8.5/share/hadoop/common/lib/junit-4.11.jar:/opt/hadoop/hadoop-2.8.5/share/hadoop/common/
lib/hamcrest-core-1.3.jar:/opt/hadoop/hadoop-2.8.5/share/hadoop/common/lib/avro-1.7.4.jar:/opt/hadoop/hadoop-2.8.5/share/hadoop/common/lib/log4j-1.2.17.jar:/opt/hadoop/hadoop-2.8.5/share/hadoop/common/lib/asm-3.2.jar:/opt/hadoop/hadoop-2.8.5/share/hadoop/common/lib/jersey-json-1.9.jar:/opt/hadoop/hadoop-2.8.5/share/hadoop/common/lib/nimbus-jose-jwt-4.41.1.jar:/opt/hadoop/hadoop-2.8.5/share/hadoop/common/lib/jackson-jaxrs-1.9.13.jar:/opt/hadoop/hadoop-2.8.5/share/hadoop/common/lib/mockito-all-1.8.5.jar:/opt/hadoop/hadoop-2.8.5/share/hadoop/common/lib/apacheds-kerberos-codec-2.0.0-M15.jar:/opt/hadoop/hadoop-2.8.5/share/hadoop/common/lib/commons-digester-1.8.jar:/opt/hadoop/hadoop-2.8.5/share/hadoop/common/lib/htrace-core4-4.0.1-incubating.jar:/opt/hadoop/hadoop-2.8.5/share/hadoop/common/lib/netty-3.6.2.Final.jar:/opt/hadoop/hadoop-2.8.5/share/hadoop/common/lib/commons-configuration-1.6.jar:/opt/hadoop/hadoop-2.8.5/share/hadoop/common/lib/jcip-annotations-1.0-1.jar:/opt/hadoop/hadoop-2.8.5/share/hadoop/common/lib/api-asn1-api-1.0.0-M20.jar:/opt/hadoop/hadoop-2.8.5/share/hadoop/common/lib/xz-1.0.jar:/opt/hadoop/hadoop-2.8.5/share/hadoop/common/lib/apacheds-i18n-2.0.0-M15.jar:/opt/hadoop/hadoop-2.8.5/share/hadoop/common/lib/api-util-1.0.0-M20.jar:/opt/hadoop/hadoop-2.8.5/share/hadoop/common/lib/jackson-xc-1.9.13.jar:/opt/hadoop/hadoop-2.8.5/share/hadoop/common/lib/jetty-sslengine-6.1.26.jar:/opt/hadoop/hadoop-2.8.5/share/hadoop/common/lib/commons-beanutils-core-1.8.0.jar:/opt/hadoop/hadoop-2.8.5/share/hadoop/common/lib/commons-cli-1.2.jar:/opt/hadoop/hadoop-2.8.5/share/hadoop/common/lib/activation-1.1.jar:/opt/hadoop/hadoop-2.8.5/share/hadoop/common/lib/curator-client-2.7.1.jar:/opt/hadoop/hadoop-2.8.5/share/hadoop/common/lib/jackson-mapper-asl-1.9.13.jar:/opt/hadoop/hadoop-2.8.5/share/hadoop/common/lib/commons-lang-2.6.jar:/opt/hadoop/hadoop-2.8.5/share/hadoop/common/lib/jackson-core-asl-1.9.13.jar:/opt/hadoop/hadoop-2.8.5/share/hadoop/common/lib/jets3t-0.9.0.jar:/opt/h
adoop/hadoop-2.8.5/share/hadoop/common/lib/commons-io-2.4.jar:/opt/hadoop/hadoop-2.8.5/share/hadoop/common/lib/commons-codec-1.4.jar:/opt/hadoop/hadoop-2.8.5/share/hadoop/common/lib/jetty-6.1.26.jar:/opt/hadoop/hadoop-2.8.5/share/hadoop/common/lib/commons-math3-3.1.1.jar:/opt/hadoop/hadoop-2.8.5/share/hadoop/common/lib/commons-net-3.1.jar:/opt/hadoop/hadoop-2.8.5/share/hadoop/common/lib/commons-beanutils-1.7.0.jar:/opt/hadoop/hadoop-2.8.5/share/hadoop/common/lib/guava-11.0.2.jar:/opt/hadoop/hadoop-2.8.5/share/hadoop/common/lib/json-smart-1.3.1.jar:/opt/hadoop/hadoop-2.8.5/share/hadoop/common/lib/jsp-api-2.1.jar:/opt/hadoop/hadoop-2.8.5/share/hadoop/common/lib/gson-2.2.4.jar:/opt/hadoop/hadoop-2.8.5/share/hadoop/common/lib/httpcore-4.4.4.jar:/opt/hadoop/hadoop-2.8.5/share/hadoop/common/lib/snappy-java-1.0.4.1.jar:/opt/hadoop/hadoop-2.8.5/share/hadoop/common/lib/java-xmlbuilder-0.4.jar:/opt/hadoop/hadoop-2.8.5/share/hadoop/common/lib/jaxb-impl-2.2.3-1.jar:/opt/hadoop/hadoop-2.8.5/share/hadoop/common/lib/slf4j-log4j12-1.7.10.jar:/opt/hadoop/hadoop-2.8.5/share/hadoop/common/lib/curator-recipes-2.7.1.jar:/opt/hadoop/hadoop-2.8.5/share/hadoop/common/lib/htrace-core-3.1.0-incubating.jar:/opt/hadoop/hadoop-2.8.5/share/hadoop/common/hadoop-common-2.8.5-tests.jar:/opt/hadoop/hadoop-2.8.5/share/hadoop/common/hadoop-common-2.8.5.jar:/opt/hadoop/hadoop-2.8.5/share/hadoop/common/hadoop-nfs-2.8.5.jar:/opt/hadoop/hadoop-2.8.5/share/hadoop/hdfs:/opt/hadoop/hadoop-2.8.5/share/hadoop/hdfs/lib/jersey-server-1.9.jar:/opt/hadoop/hadoop-2.8.5/share/hadoop/hdfs/lib/leveldbjni-all-1.8.jar:/opt/hadoop/hadoop-2.8.5/share/hadoop/hdfs/lib/xmlenc-0.52.jar:/opt/hadoop/hadoop-2.8.5/share/hadoop/hdfs/lib/jsr305-3.0.0.jar:/opt/hadoop/hadoop-2.8.5/share/hadoop/hdfs/lib/protobuf-java-2.5.0.jar:/opt/hadoop/hadoop-2.8.5/share/hadoop/hdfs/lib/servlet-api-2.5.jar:/opt/hadoop/hadoop-2.8.5/share/hadoop/hdfs/lib/okhttp-2.4.0.jar:/opt/hadoop/hadoop-2.8.5/share/hadoop/hdfs/lib/xml-apis-1.3.04.jar:/opt/hadoop/h
adoop-2.8.5/share/hadoop/hdfs/lib/hadoop-hdfs-client-2.8.5.jar:/opt/hadoop/hadoop-2.8.5/share/hadoop/hdfs/lib/jersey-core-1.9.jar:/opt/hadoop/hadoop-2.8.5/share/hadoop/hdfs/lib/commons-logging-1.1.3.jar:/opt/hadoop/hadoop-2.8.5/share/hadoop/hdfs/lib/commons-daemon-1.0.13.jar:/opt/hadoop/hadoop-2.8.5/share/hadoop/hdfs/lib/jetty-util-6.1.26.jar:/opt/hadoop/hadoop-2.8.5/share/hadoop/hdfs/lib/netty-all-4.0.23.Final.jar:/opt/hadoop/hadoop-2.8.5/share/hadoop/hdfs/lib/log4j-1.2.17.jar:/opt/hadoop/hadoop-2.8.5/share/hadoop/hdfs/lib/asm-3.2.jar:/opt/hadoop/hadoop-2.8.5/share/hadoop/hdfs/lib/htrace-core4-4.0.1-incubating.jar:/opt/hadoop/hadoop-2.8.5/share/hadoop/hdfs/lib/netty-3.6.2.Final.jar:/opt/hadoop/hadoop-2.8.5/share/hadoop/hdfs/lib/xercesImpl-2.9.1.jar:/opt/hadoop/hadoop-2.8.5/share/hadoop/hdfs/lib/commons-cli-1.2.jar:/opt/hadoop/hadoop-2.8.5/share/hadoop/hdfs/lib/jackson-mapper-asl-1.9.13.jar:/opt/hadoop/hadoop-2.8.5/share/hadoop/hdfs/lib/commons-lang-2.6.jar:/opt/hadoop/hadoop-2.8.5/share/hadoop/hdfs/lib/jackson-core-asl-1.9.13.jar:/opt/hadoop/hadoop-2.8.5/share/hadoop/hdfs/lib/commons-io-2.4.jar:/opt/hadoop/hadoop-2.8.5/share/hadoop/hdfs/lib/commons-codec-1.4.jar:/opt/hadoop/hadoop-2.8.5/share/hadoop/hdfs/lib/jetty-6.1.26.jar:/opt/hadoop/hadoop-2.8.5/share/hadoop/hdfs/lib/guava-11.0.2.jar:/opt/hadoop/hadoop-2.8.5/share/hadoop/hdfs/lib/okio-1.4.0.jar:/opt/hadoop/hadoop-2.8.5/share/hadoop/hdfs/hadoop-hdfs-native-client-2.8.5.jar:/opt/hadoop/hadoop-2.8.5/share/hadoop/hdfs/hadoop-hdfs-client-2.8.5.jar:/opt/hadoop/hadoop-2.8.5/share/hadoop/hdfs/hadoop-hdfs-native-client-2.8.5-tests.jar:/opt/hadoop/hadoop-2.8.5/share/hadoop/hdfs/hadoop-hdfs-2.8.5-tests.jar:/opt/hadoop/hadoop-2.8.5/share/hadoop/hdfs/hadoop-hdfs-nfs-2.8.5.jar:/opt/hadoop/hadoop-2.8.5/share/hadoop/hdfs/hadoop-hdfs-2.8.5.jar:/opt/hadoop/hadoop-2.8.5/share/hadoop/hdfs/hadoop-hdfs-client-2.8.5-tests.jar:/opt/hadoop/hadoop-2.8.5/share/hadoop/yarn/lib/jersey-server-1.9.jar:/opt/hadoop/hadoop-2.8.5/share/hadoop/ya
rn/lib/commons-compress-1.4.1.jar:/opt/hadoop/hadoop-2.8.5/share/hadoop/yarn/lib/zookeeper-3.4.6.jar:/opt/hadoop/hadoop-2.8.5/share/hadoop/yarn/lib/java-util-1.9.0.jar:/opt/hadoop/hadoop-2.8.5/share/hadoop/yarn/lib/leveldbjni-all-1.8.jar:/opt/hadoop/hadoop-2.8.5/share/hadoop/yarn/lib/commons-collections-3.2.2.jar:/opt/hadoop/hadoop-2.8.5/share/hadoop/yarn/lib/jsr305-3.0.0.jar:/opt/hadoop/hadoop-2.8.5/share/hadoop/yarn/lib/protobuf-java-2.5.0.jar:/opt/hadoop/hadoop-2.8.5/share/hadoop/yarn/lib/servlet-api-2.5.jar:/opt/hadoop/hadoop-2.8.5/share/hadoop/yarn/lib/jaxb-api-2.2.2.jar:/opt/hadoop/hadoop-2.8.5/share/hadoop/yarn/lib/guice-servlet-3.0.jar:/opt/hadoop/hadoop-2.8.5/share/hadoop/yarn/lib/stax-api-1.0-2.jar:/opt/hadoop/hadoop-2.8.5/share/hadoop/yarn/lib/jettison-1.1.jar:/opt/hadoop/hadoop-2.8.5/share/hadoop/yarn/lib/jersey-core-1.9.jar:/opt/hadoop/hadoop-2.8.5/share/hadoop/yarn/lib/fst-2.50.jar:/opt/hadoop/hadoop-2.8.5/share/hadoop/yarn/lib/commons-logging-1.1.3.jar:/opt/hadoop/hadoop-2.8.5/share/hadoop/yarn/lib/javax.inject-1.jar:/opt/hadoop/hadoop-2.8.5/share/hadoop/yarn/lib/commons-math-2.2.jar:/opt/hadoop/hadoop-2.8.5/share/hadoop/yarn/lib/jetty-util-6.1.26.jar:/opt/hadoop/hadoop-2.8.5/share/hadoop/yarn/lib/log4j-1.2.17.jar:/opt/hadoop/hadoop-2.8.5/share/hadoop/yarn/lib/asm-3.2.jar:/opt/hadoop/hadoop-2.8.5/share/hadoop/yarn/lib/zookeeper-3.4.6-tests.jar:/opt/hadoop/hadoop-2.8.5/share/hadoop/yarn/lib/jersey-json-1.9.jar:/opt/hadoop/hadoop-2.8.5/share/hadoop/yarn/lib/jackson-jaxrs-1.9.13.jar:/opt/hadoop/hadoop-2.8.5/share/hadoop/yarn/lib/netty-3.6.2.Final.jar:/opt/hadoop/hadoop-2.8.5/share/hadoop/yarn/lib/xz-1.0.jar:/opt/hadoop/hadoop-2.8.5/share/hadoop/yarn/lib/jackson-xc-1.9.13.jar:/opt/hadoop/hadoop-2.8.5/share/hadoop/yarn/lib/commons-cli-1.2.jar:/opt/hadoop/hadoop-2.8.5/share/hadoop/yarn/lib/activation-1.1.jar:/opt/hadoop/hadoop-2.8.5/share/hadoop/yarn/lib/aopalliance-1.0.jar:/opt/hadoop/hadoop-2.8.5/share/hadoop/yarn/lib/javassist-3.18.1-GA.jar:/opt/hadoop/h
adoop-2.8.5/share/hadoop/yarn/lib/jersey-guice-1.9.jar:/opt/hadoop/hadoop-2.8.5/share/hadoop/yarn/lib/jersey-client-1.9.jar:/opt/hadoop/hadoop-2.8.5/share/hadoop/yarn/lib/curator-client-2.7.1.jar:/opt/hadoop/hadoop-2.8.5/share/hadoop/yarn/lib/jackson-mapper-asl-1.9.13.jar:/opt/hadoop/hadoop-2.8.5/share/hadoop/yarn/lib/commons-lang-2.6.jar:/opt/hadoop/hadoop-2.8.5/share/hadoop/yarn/lib/jackson-core-asl-1.9.13.jar:/opt/hadoop/hadoop-2.8.5/share/hadoop/yarn/lib/commons-io-2.4.jar:/opt/hadoop/hadoop-2.8.5/share/hadoop/yarn/lib/guice-3.0.jar:/opt/hadoop/hadoop-2.8.5/share/hadoop/yarn/lib/commons-codec-1.4.jar:/opt/hadoop/hadoop-2.8.5/share/hadoop/yarn/lib/jetty-6.1.26.jar:/opt/hadoop/hadoop-2.8.5/share/hadoop/yarn/lib/guava-11.0.2.jar:/opt/hadoop/hadoop-2.8.5/share/hadoop/yarn/lib/curator-test-2.7.1.jar:/opt/hadoop/hadoop-2.8.5/share/hadoop/yarn/lib/json-io-2.5.1.jar:/opt/hadoop/hadoop-2.8.5/share/hadoop/yarn/lib/jaxb-impl-2.2.3-1.jar:/opt/hadoop/hadoop-2.8.5/share/hadoop/yarn/hadoop-yarn-applications-unmanaged-am-launcher-2.8.5.jar:/opt/hadoop/hadoop-2.8.5/share/hadoop/yarn/hadoop-yarn-applications-distributedshell-2.8.5.jar:/opt/hadoop/hadoop-2.8.5/share/hadoop/yarn/hadoop-yarn-server-tests-2.8.5.jar:/opt/hadoop/hadoop-2.8.5/share/hadoop/yarn/hadoop-yarn-server-applicationhistoryservice-2.8.5.jar:/opt/hadoop/hadoop-2.8.5/share/hadoop/yarn/hadoop-yarn-registry-2.8.5.jar:/opt/hadoop/hadoop-2.8.5/share/hadoop/yarn/hadoop-yarn-common-2.8.5.jar:/opt/hadoop/hadoop-2.8.5/share/hadoop/yarn/hadoop-yarn-client-2.8.5.jar:/opt/hadoop/hadoop-2.8.5/share/hadoop/yarn/hadoop-yarn-server-web-proxy-2.8.5.jar:/opt/hadoop/hadoop-2.8.5/share/hadoop/yarn/hadoop-yarn-server-common-2.8.5.jar:/opt/hadoop/hadoop-2.8.5/share/hadoop/yarn/hadoop-yarn-server-resourcemanager-2.8.5.jar:/opt/hadoop/hadoop-2.8.5/share/hadoop/yarn/hadoop-yarn-server-timeline-pluginstorage-2.8.5.jar:/opt/hadoop/hadoop-2.8.5/share/hadoop/yarn/hadoop-yarn-server-nodemanager-2.8.5.jar:/opt/hadoop/hadoop-2.8.5/share/hadoop/y
arn/hadoop-yarn-api-2.8.5.jar:/opt/hadoop/hadoop-2.8.5/share/hadoop/yarn/hadoop-yarn-server-sharedcachemanager-2.8.5.jar:/opt/hadoop/hadoop-2.8.5/share/hadoop/mapreduce/lib/jersey-server-1.9.jar:/opt/hadoop/hadoop-2.8.5/share/hadoop/mapreduce/lib/commons-compress-1.4.1.jar:/opt/hadoop/hadoop-2.8.5/share/hadoop/mapreduce/lib/leveldbjni-all-1.8.jar:/opt/hadoop/hadoop-2.8.5/share/hadoop/mapreduce/lib/protobuf-java-2.5.0.jar:/opt/hadoop/hadoop-2.8.5/share/hadoop/mapreduce/lib/guice-servlet-3.0.jar:/opt/hadoop/hadoop-2.8.5/share/hadoop/mapreduce/lib/paranamer-2.3.jar:/opt/hadoop/hadoop-2.8.5/share/hadoop/mapreduce/lib/hadoop-annotations-2.8.5.jar:/opt/hadoop/hadoop-2.8.5/share/hadoop/mapreduce/lib/jersey-core-1.9.jar:/opt/hadoop/hadoop-2.8.5/share/hadoop/mapreduce/lib/javax.inject-1.jar:/opt/hadoop/hadoop-2.8.5/share/hadoop/mapreduce/lib/junit-4.11.jar:/opt/hadoop/hadoop-2.8.5/share/hadoop/mapreduce/lib/hamcrest-core-1.3.jar:/opt/hadoop/hadoop-2.8.5/share/hadoop/mapreduce/lib/avro-1.7.4.jar:/opt/hadoop/hadoop-2.8.5/share/hadoop/mapreduce/lib/log4j-1.2.17.jar:/opt/hadoop/hadoop-2.8.5/share/hadoop/mapreduce/lib/asm-3.2.jar:/opt/hadoop/hadoop-2.8.5/share/hadoop/mapreduce/lib/netty-3.6.2.Final.jar:/opt/hadoop/hadoop-2.8.5/share/hadoop/mapreduce/lib/xz-1.0.jar:/opt/hadoop/hadoop-2.8.5/share/hadoop/mapreduce/lib/aopalliance-1.0.jar:/opt/hadoop/hadoop-2.8.5/share/hadoop/mapreduce/lib/jersey-guice-1.9.jar:/opt/hadoop/hadoop-2.8.5/share/hadoop/mapreduce/lib/jackson-mapper-asl-1.9.13.jar:/opt/hadoop/hadoop-2.8.5/share/hadoop/mapreduce/lib/jackson-core-asl-1.9.13.jar:/opt/hadoop/hadoop-2.8.5/share/hadoop/mapreduce/lib/commons-io-2.4.jar:/opt/hadoop/hadoop-2.8.5/share/hadoop/mapreduce/lib/guice-3.0.jar:/opt/hadoop/hadoop-2.8.5/share/hadoop/mapreduce/lib/snappy-java-1.0.4.1.jar:/opt/hadoop/hadoop-2.8.5/share/hadoop/mapreduce/hadoop-mapreduce-examples-2.8.5.jar:/opt/hadoop/hadoop-2.8.5/share/hadoop/mapreduce/hadoop-mapreduce-client-jobclient-2.8.5.jar:/opt/hadoop/hadoop-2.8.5/share/ha
doop/mapreduce/hadoop-mapreduce-client-common-2.8.5.jar:/opt/hadoop/hadoop-2.8.5/share/hadoop/mapreduce/hadoop-mapreduce-client-hs-2.8.5.jar:/opt/hadoop/hadoop-2.8.5/share/hadoop/mapreduce/hadoop-mapreduce-client-shuffle-2.8.5.jar:/opt/hadoop/hadoop-2.8.5/share/hadoop/mapreduce/hadoop-mapreduce-client-app-2.8.5.jar:/opt/hadoop/hadoop-2.8.5/share/hadoop/mapreduce/hadoop-mapreduce-client-core-2.8.5.jar:/opt/hadoop/hadoop-2.8.5/share/hadoop/mapreduce/hadoop-mapreduce-client-jobclient-2.8.5-tests.jar:/opt/hadoop/hadoop-2.8.5/share/hadoop/mapreduce/hadoop-mapreduce-client-hs-plugins-2.8.5.jar:/opt/hadoop/hadoop-2.8.5/contrib/capacity-scheduler/*.jar:/opt/hadoop/hadoop-2.8.5/etc/hadoop:

2020-05-20 07:28:15,783 INFO org.apache.flink.shaded.zookeeper.org.apache.zookeeper.ZooKeeper - Client environment:java.library.path=/usr/java/packages/lib/amd64:/usr/lib64:/lib64:/lib:/usr/lib

2020-05-20 07:28:15,783 INFO org.apache.flink.shaded.zookeeper.org.apache.zookeeper.ZooKeeper - Client environment:java.io.tmpdir=/tmp

2020-05-20 07:28:15,783 INFO org.apache.flink.shaded.zookeeper.org.apache.zookeeper.ZooKeeper - Client environment:java.compiler=

2020-05-20 07:28:15,783 INFO org.apache.flink.shaded.zookeeper.org.apache.zookeeper.ZooKeeper - Client environment:os.name=Linux

2020-05-20 07:28:15,783 INFO org.apache.flink.shaded.zookeeper.org.apache.zookeeper.ZooKeeper - Client environment:os.arch=amd64

2020-05-20 07:28:15,783 INFO org.apache.flink.shaded.zookeeper.org.apache.zookeeper.ZooKeeper - Client environment:os.version=3.10.0-1062.el7.x86_64

2020-05-20 07:28:15,783 INFO org.apache.flink.shaded.zookeeper.org.apache.zookeeper.ZooKeeper - Client environment:user.name=root

2020-05-20 07:28:15,783 INFO org.apache.flink.shaded.zookeeper.org.apache.zookeeper.ZooKeeper - Client environment:user.home=/root

2020-05-20 07:28:15,784 INFO org.apache.flink.shaded.zookeeper.org.apache.zookeeper.ZooKeeper - Client environment:user.dir=/opt/flink-1.10.0/bin

2020-05-20 07:28:15,784 INFO org.apache.flink.shaded.zookeeper.org.apache.zookeeper.ZooKeeper - Initiating client connection, connectString=flink1:2181,flink2:2181,flink3:2181 sessionTimeout=60000 watcher=org.apache.flink.shaded.curator.org.apache.curator.ConnectionState@6058e535

2020-05-20 07:28:15,830 WARN org.apache.flink.shaded.zookeeper.org.apache.zookeeper.ClientCnxn - SASL configuration failed: javax.security.auth.login.LoginException: No JAAS configuration section named 'Client' was found in specified JAAS configuration file: '/root/flink/tmp/jaas-3514577917123016855.conf'. Will continue connection to Zookeeper server without SASL authentication, if Zookeeper server allows it.

2020-05-20 07:28:15,831 INFO org.apache.flink.shaded.zookeeper.org.apache.zookeeper.ClientCnxn - Opening socket connection to server flink1/172.21.89.128:2181

2020-05-20 07:28:15,832 INFO org.apache.flink.shaded.zookeeper.org.apache.zookeeper.ClientCnxn - Socket connection established to flink1/172.21.89.128:2181, initiating session

2020-05-20 07:28:15,853 ERROR org.apache.flink.shaded.curator.org.apache.curator.ConnectionState - Authentication failed

2020-05-20 07:28:15,856 INFO org.apache.flink.shaded.zookeeper.org.apache.zookeeper.ClientCnxn - Session establishment complete on server flink1/172.21.89.128:2181, sessionid = 0x1722f0fd2f80003, negotiated timeout = 40000

2020-05-20 07:28:15,857 INFO org.apache.flink.shaded.curator.org.apache.curator.framework.state.ConnectionStateManager - State change: CONNECTED

JobManager Web Interface: http://flink1:37120

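(The session runs in the foreground. Pressing Ctrl+Z returns you to the shell, but it only suspends the client rather than stopping it, which leaves a FlinkYarnSessionCli process that cannot be shut down normally.)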

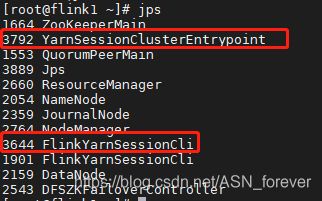

Check the processes with jps.

The two processes in the red box are the ones just started (the two processes launched by a YARN session in client mode).

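There is also a process 1901. It is left over from an earlier start where I accidentally pressed Ctrl+Z; the process could not be shut down, and kill had no effect on it (most likely because a suspended process does not act on a plain kill until it is resumed).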

Startup succeeded. Open http://flink1:37120 to check.

A flink root node is now also visible in ZooKeeper:

[zk: localhost:2181(CONNECTED) 8] ls /

[cluster, brokers, zookeeper, yarn-leader-election, hadoop-ha, admin, isr_change_notification, flink, controller_epoch, rmstore, consumers, latest_producer_id_block, config, hbase]

[zk: localhost:2181(CONNECTED) 9] ls /flink

[default_ns]

[zk: localhost:2181(CONNECTED) 10] ls /flink/default_ns

[jobgraphs, leader, leaderlatch]

[zk: localhost:2181(CONNECTED) 11]
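
The listing above matches the two settings in flink-conf.yaml: the znode path is the value of high-availability.zookeeper.path.root followed by the value of high-availability.cluster-id. A small sketch of that mapping, where `ha_znode` is a hypothetical helper written only for illustration and the child names are the ones shown in the listing:

```python
def ha_znode(path_root, cluster_id):
    """Join the configured root and cluster-id into a single znode path."""
    parts = [p for p in (path_root.strip("/"), cluster_id.strip("/")) if p]
    return "/" + "/".join(parts)


# Values taken from the flink-conf.yaml shown earlier.
base = ha_znode("/flink", "/default_ns")
print(base)  # /flink/default_ns

# Child znodes observed in the zkCli listing above.
for child in ("jobgraphs", "leader", "leaderlatch"):
    print(f"{base}/{child}")
```

Because every cluster that shares this ZooKeeper quorum and root ends up under its own cluster-id, giving each cluster a different name keeps their metadata apart.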

Testing high availability

On flink1, kill process 3792 (YarnSessionClusterEntrypoint). A new YarnSessionClusterEntrypoint process is started on another machine almost immediately:

[root@flink2 ~]# jps

3008 YarnSessionClusterEntrypoint

1585 QuorumPeerMain

2374 NodeManager

2059 DataNode

2156 JournalNode

2302 DFSZKFailoverController

1999 NameNode

3023 Jps

The failover is visible in the YARN ResourceManager log on the currently active Hadoop node:

2020-05-20 08:35:40,732 INFO org.apache.hadoop.yarn.server.resourcemanager.rmapp.attempt.RMAppAttemptImpl: appattempt_1589930391605_0002_000005 State change from LAUNCHED to RUNNING

2020-05-20 08:35:40,732 INFO org.apache.hadoop.yarn.server.resourcemanager.rmapp.RMAppImpl: application_1589930391605_0002 State change from ACCEPTED to RUNNING on event=ATTEMPT_REGISTERED

2020-05-20 08:48:41,894 INFO org.apache.hadoop.yarn.server.resourcemanager.rmcontainer.RMContainerImpl: container_e15_1589930391605_0002_05_000001 Container Transitioned from RUNNING to COMPLETED

2020-05-20 08:48:41,895 INFO org.apache.hadoop.yarn.server.resourcemanager.RMAuditLogger: USER=root OPERATION=AM Released Container TARGET=SchedulerApp RESULT=SUCCESS APPID=application_1589930391605_0002 CONTAINERID=container_e15_1589930391605_0002_05_000001

2020-05-20 08:48:41,895 INFO org.apache.hadoop.yarn.server.resourcemanager.scheduler.SchedulerNode: Released container container_e15_1589930391605_0002_05_000001 of capacity on host flink3:45454, which currently has 0 containers, used and available, release resources=true

2020-05-20 08:48:41,895 INFO org.apache.hadoop.yarn.server.resourcemanager.rmapp.attempt.RMAppAttemptImpl: Updating application attempt appattempt_1589930391605_0002_000005 with final state: FAILED, and exit status: 137

2020-05-20 08:48:41,895 INFO org.apache.hadoop.yarn.server.resourcemanager.rmapp.attempt.RMAppAttemptImpl: appattempt_1589930391605_0002_000005 State change from RUNNING to FINAL_SAVING

2020-05-20 08:48:41,929 INFO org.apache.hadoop.yarn.server.resourcemanager.ApplicationMasterService: Unregistering app attempt : appattempt_1589930391605_0002_000005

2020-05-20 08:48:41,929 INFO org.apache.hadoop.yarn.server.resourcemanager.security.AMRMTokenSecretManager: Application finished, removing password for appattempt_1589930391605_0002_000005

2020-05-20 08:48:41,929 INFO org.apache.hadoop.yarn.server.resourcemanager.rmapp.attempt.RMAppAttemptImpl: appattempt_1589930391605_0002_000005 State change from FINAL_SAVING to FAILED

2020-05-20 08:48:41,929 INFO org.apache.hadoop.yarn.server.resourcemanager.rmapp.RMAppImpl: The number of failed attempts in previous 10000 milliseconds is 1. The max attempts is 4

2020-05-20 08:48:41,929 INFO org.apache.hadoop.yarn.server.resourcemanager.rmapp.RMAppImpl: application_1589930391605_0002 State change from RUNNING to ACCEPTED on event=ATTEMPT_FAILED

2020-05-20 08:48:41,929 INFO org.apache.hadoop.yarn.server.resourcemanager.ApplicationMasterService: Registering app attempt : appattempt_1589930391605_0002_000006

2020-05-20 08:48:41,929 INFO org.apache.hadoop.yarn.server.resourcemanager.rmapp.attempt.RMAppAttemptImpl: appattempt_1589930391605_0002_000006 State change from NEW to SUBMITTED

2020-05-20 08:48:41,930 INFO org.apache.hadoop.yarn.server.resourcemanager.scheduler.capacity.CapacityScheduler: Application Attempt appattempt_1589930391605_0002_000005 is done. finalState=FAILED

2020-05-20 08:48:41,931 INFO org.apache.hadoop.yarn.server.resourcemanager.scheduler.AppSchedulingInfo: Application application_1589930391605_0002 requests cleared

2020-05-20 08:48:41,931 INFO org.apache.hadoop.yarn.server.resourcemanager.scheduler.capacity.LeafQueue: Application removed - appId: application_1589930391605_0002 user: root queue: default #user-pending-applications: 0 #user-active-applications: 0 #queue-pending-applications: 0 #queue-active-applications: 0

2020-05-20 08:48:41,931 INFO org.apache.hadoop.yarn.server.resourcemanager.scheduler.capacity.LeafQueue: Application application_1589930391605_0002 from user: root activated in queue: default

2020-05-20 08:48:41,931 INFO org.apache.hadoop.yarn.server.resourcemanager.scheduler.capacity.LeafQueue: Application added - appId: application_1589930391605_0002 user: root, leaf-queue: default #user-pending-applications: 0 #user-active-applications: 1 #queue-pending-applications: 0 #queue-active-applications: 1

2020-05-20 08:48:41,931 INFO org.apache.hadoop.yarn.server.resourcemanager.scheduler.capacity.CapacityScheduler: Added Application Attempt appattempt_1589930391605_0002_000006 to scheduler from user root in queue default

2020-05-20 08:48:41,932 INFO org.apache.hadoop.yarn.server.resourcemanager.rmapp.attempt.RMAppAttemptImpl: appattempt_1589930391605_0002_000006 State change from SUBMITTED to SCHEDULED

2020-05-20 08:48:41,932 INFO org.apache.hadoop.yarn.server.resourcemanager.amlauncher.AMLauncher: Cleaning master appattempt_1589930391605_0002_000005

2020-05-20 08:48:42,054 INFO org.apache.hadoop.yarn.server.resourcemanager.rmcontainer.RMContainerImpl: container_e15_1589930391605_0002_06_000001 Container Transitioned from NEW to ALLOCATED

2020-05-20 08:48:42,054 INFO org.apache.hadoop.yarn.server.resourcemanager.RMAuditLogger: USER=root OPERATION=AM Allocated Container TARGET=SchedulerApp RESULT=SUCCESS APPID=application_1589930391605_0002 CONTAINERID=container_e15_1589930391605_0002_06_000001

2020-05-20 08:48:42,054 INFO org.apache.hadoop.yarn.server.resourcemanager.scheduler.SchedulerNode: Assigned container container_e15_1589930391605_0002_06_000001 of capacity on host flink1:45454, which has 1 containers, used and available after allocation

2020-05-20 08:48:42,054 INFO org.apache.hadoop.yarn.server.resourcemanager.scheduler.capacity.allocator.AbstractContainerAllocator: assignedContainer application attempt=appattempt_1589930391605_0002_000006 container=container_e15_1589930391605_0002_06_000001 queue=org.apache.hadoop.yarn.server.resourcemanager.scheduler.capacity.allocator.RegularContainerAllocator@49a0e254 clusterResource= type=OFF_SWITCH requestedPartition=

2020-05-20 08:48:42,055 INFO org.apache.hadoop.yarn.server.resourcemanager.scheduler.capacity.ParentQueue: Re-sorting assigned queue: root.default stats: default: capacity=1.0, absoluteCapacity=1.0, usedResources=, usedCapacity=0.041666668, absoluteUsedCapacity=0.041666668, numApps=1, numContainers=1

2020-05-20 08:48:42,055 INFO org.apache.hadoop.yarn.server.resourcemanager.scheduler.capacity.ParentQueue: assignedContainer queue=root usedCapacity=0.041666668 absoluteUsedCapacity=0.041666668 used= cluster=

2020-05-20 08:48:42,055 INFO org.apache.hadoop.yarn.server.resourcemanager.security.NMTokenSecretManagerInRM: Sending NMToken for nodeId : flink1:45454 for container : container_e15_1589930391605_0002_06_000001

2020-05-20 08:48:42,057 INFO org.apache.hadoop.yarn.server.resourcemanager.rmcontainer.RMContainerImpl: container_e15_1589930391605_0002_06_000001 Container Transitioned from ALLOCATED to ACQUIRED

2020-05-20 08:48:42,057 INFO org.apache.hadoop.yarn.server.resourcemanager.security.NMTokenSecretManagerInRM: Clear node set for appattempt_1589930391605_0002_000006

2020-05-20 08:48:42,057 INFO org.apache.hadoop.yarn.server.resourcemanager.rmapp.attempt.RMAppAttemptImpl: Storing attempt: AppId: application_1589930391605_0002 AttemptId: appattempt_1589930391605_0002_000006 MasterContainer: Container: [ContainerId: container_e15_1589930391605_0002_06_000001, Version: 0, NodeId: flink1:45454, NodeHttpAddress: flink1:8042, Resource: , Priority: 0, Token: Token { kind: ContainerToken, service: 172.21.89.128:45454 }, ]

2020-05-20 08:48:42,057 INFO org.apache.hadoop.yarn.server.resourcemanager.rmapp.attempt.RMAppAttemptImpl: appattempt_1589930391605_0002_000006 State change from SCHEDULED to ALLOCATED_SAVING

2020-05-20 08:48:42,075 INFO org.apache.hadoop.yarn.server.resourcemanager.rmapp.attempt.RMAppAttemptImpl: appattempt_1589930391605_0002_000006 State change from ALLOCATED_SAVING to ALLOCATED

2020-05-20 08:48:42,075 INFO org.apache.hadoop.yarn.server.resourcemanager.amlauncher.AMLauncher: Launching masterappattempt_1589930391605_0002_000006

2020-05-20 08:48:42,076 INFO org.apache.hadoop.yarn.server.resourcemanager.amlauncher.AMLauncher: Setting up container Container: [ContainerId: container_e15_1589930391605_0002_06_000001, Version: 0, NodeId: flink1:45454, NodeHttpAddress: flink1:8042, Resource: , Priority: 0, Token: Token { kind: ContainerToken, service: 172.21.89.128:45454 }, ] for AM appattempt_1589930391605_0002_000006

2020-05-20 08:48:42,076 INFO org.apache.hadoop.yarn.server.resourcemanager.security.AMRMTokenSecretManager: Create AMRMToken for ApplicationAttempt: appattempt_1589930391605_0002_000006

2020-05-20 08:48:42,076 INFO org.apache.hadoop.yarn.server.resourcemanager.security.AMRMTokenSecretManager: Creating password for appattempt_1589930391605_0002_000006

2020-05-20 08:48:42,105 INFO org.apache.hadoop.yarn.server.resourcemanager.amlauncher.AMLauncher: Done launching container Container: [ContainerId: container_e15_1589930391605_0002_06_000001, Version: 0, NodeId: flink1:45454, NodeHttpAddress: flink1:8042, Resource: , Priority: 0, Token: Token { kind: ContainerToken, service: 172.21.89.128:45454 }, ] for AM appattempt_1589930391605_0002_000006

2020-05-20 08:48:42,105 INFO org.apache.hadoop.yarn.server.resourcemanager.rmapp.attempt.RMAppAttemptImpl: appattempt_1589930391605_0002_000006 State change from ALLOCATED to LAUNCHED

2020-05-20 08:48:43,064 INFO org.apache.hadoop.yarn.server.resourcemanager.rmcontainer.RMContainerImpl: container_e15_1589930391605_0002_06_000001 Container Transitioned from ACQUIRED to RUNNING

2020-05-20 08:48:48,625 INFO SecurityLogger.org.apache.hadoop.ipc.Server: Auth successful for appattempt_1589930391605_0002_000006 (auth:SIMPLE)

2020-05-20 08:48:48,635 INFO org.apache.hadoop.yarn.server.resourcemanager.ApplicationMasterService: AM registration appattempt_1589930391605_0002_000006

2020-05-20 08:48:48,635 INFO org.apache.hadoop.yarn.server.resourcemanager.RMAuditLogger: USER=root IP=172.21.89.128 OPERATION=Register App Master TARGET=ApplicationMasterService RESULT=SUCCESS APPID=application_1589930391605_0002 APPATTEMPTID=appattempt_1589930391605_0002_000006

2020-05-20 08:48:48,635 INFO org.apache.hadoop.yarn.server.resourcemanager.rmapp.attempt.RMAppAttemptImpl: appattempt_1589930391605_0002_000006 State change from LAUNCHED to RUNNING

2020-05-20 08:48:48,635 INFO org.apache.hadoop.yarn.server.resourcemanager.rmapp.RMAppImpl: application_1589930391605_0002 State change from ACCEPTED to RUNNING on event=ATTEMPT_REGISTERED
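
The log is long, and the part that matters for failover is the sequence of application attempt state changes. A minimal sketch for pulling just those lines out is shown below. It assumes the log line layout seen above (timestamp, level, logger, message); the regular expression and the `attempt_transitions` helper are illustrative, not a Hadoop tool, and the sample lines are copied from the log above.

```python
import re

# Four lines copied from the ResourceManager log above. The second one is an
# application-level change and is intentionally not matched.
SAMPLE_LOG = """\
2020-05-20 08:48:41,929 INFO org.apache.hadoop.yarn.server.resourcemanager.rmapp.attempt.RMAppAttemptImpl: appattempt_1589930391605_0002_000005 State change from FINAL_SAVING to FAILED
2020-05-20 08:48:41,929 INFO org.apache.hadoop.yarn.server.resourcemanager.rmapp.RMAppImpl: application_1589930391605_0002 State change from RUNNING to ACCEPTED on event=ATTEMPT_FAILED
2020-05-20 08:48:41,929 INFO org.apache.hadoop.yarn.server.resourcemanager.rmapp.attempt.RMAppAttemptImpl: appattempt_1589930391605_0002_000006 State change from NEW to SUBMITTED
2020-05-20 08:48:48,635 INFO org.apache.hadoop.yarn.server.resourcemanager.rmapp.attempt.RMAppAttemptImpl: appattempt_1589930391605_0002_000006 State change from LAUNCHED to RUNNING
"""

# Matches only attempt-level state changes (IDs starting with "appattempt_").
ATTEMPT_CHANGE = re.compile(
    r"^(?P<ts>\S+ \S+) INFO \S+: "
    r"(?P<attempt>appattempt_\d+_\d+_\d+) "
    r"State change from (?P<old>\w+) to (?P<new>\w+)"
)


def attempt_transitions(log_text):
    """Return (timestamp, attempt_id, old_state, new_state) tuples in log order."""
    transitions = []
    for line in log_text.splitlines():
        m = ATTEMPT_CHANGE.match(line)
        if m:
            transitions.append((m["ts"], m["attempt"], m["old"], m["new"]))
    return transitions


for ts, attempt, old, new in attempt_transitions(SAMPLE_LOG):
    print(f"{ts}  {attempt}: {old} -> {new}")
```

On the full log this shows attempt 000005 ending in FAILED and attempt 000006 reaching RUNNING about seven seconds later.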

With that, this simple test passes.

How the detached mode of a YARN session and the single Flink job on YARN mode behave on a high-availability cluster has not been tested yet.

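One more point worth recording: because of high availability, a YARN session cannot be shut down with kill -9 pid, since YARN simply restarts the killed process. Use yarn application -kill APPID instead.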
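
The APPID is the application ID printed by yarn-session.sh at startup. As a rough sketch, it can be picked out of the client output and turned into the shutdown command. The helper names `find_application_id` and `kill_command` are hypothetical; the sample line is copied from the startup log above, and the command is only built here, not executed.

```python
import re

# YARN application IDs look like application_<cluster timestamp>_<sequence>.
APP_ID = re.compile(r"application_\d+_\d+")


def find_application_id(client_output):
    """Return the first YARN application ID found in the text, or None."""
    m = APP_ID.search(client_output)
    return m.group(0) if m else None


def kill_command(app_id):
    """Build the argument list for 'yarn application -kill APPID'."""
    if not APP_ID.fullmatch(app_id):
        raise ValueError(f"not a YARN application ID: {app_id!r}")
    return ["yarn", "application", "-kill", app_id]


# Line copied from the yarn-session.sh output shown earlier.
output = (
    "2020-05-20 07:28:08,179 INFO org.apache.hadoop.yarn.client.api.impl."
    "YarnClientImpl - Submitted application application_1589930391605_0002"
)

cmd = kill_command(find_application_id(output))
print(" ".join(cmd))  # yarn application -kill application_1589930391605_0002

# To actually run it on a machine with the Hadoop client installed:
#   import subprocess; subprocess.run(cmd, check=True)
```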