kaggle——Digit Recognizer

题目地址:here

数据集digit-recognizer.zip;

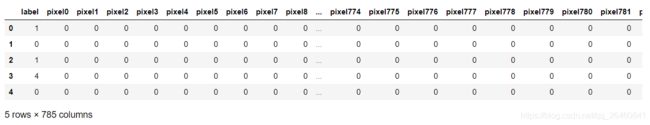

The data files train.csv and test.csv contain gray-scale images of hand-drawn digits, from zero through nine. Each image is 28 pixels in height and 28 pixels in width, for a total of 784 pixels in total. The training data set, (train.csv), has 785 columns. The first column, called “label”, is the digit that was drawn by the user. The rest of the columns contain the pixel-values of the associated image.

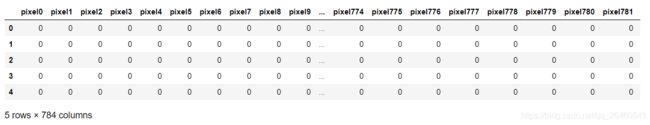

The test data set, (test.csv), is the same as the training set, except that it does not contain the “label” column.

Your submission file should be in the following format: For each of the 28000 images in the test set, output a single line containing the ImageId and the digit you predict. For example, if you predict that the first image is of a 3, the second image is of a 7, and the third image is of a 8, then your submission file would look like:

ImageId,Label

1,3

2,7

3,8

(27997 more lines)

# 我解压数据集到了这个目录下

dataset_path = "./dataset/digit-recognizer" # train.csv test.csv

# 导入包

import pandas as pd

import numpy as np

import matplotlib.pyplot as plt

import tensorflow as tf

%matplotlib inline

# 读取训练数据

train_datas = pd.read_csv(dataset_path+"/train.csv")

# 显示下

train_datas.head()

train_x = train_datas.iloc[:,1:]

train_y = train_datas.iloc[:, 0]

定义序列模型:

model = tf.keras.models.Sequential()

model.add(tf.keras.layers.Dense(128, input_shape=(784,), activation="relu"))

model.add(tf.keras.layers.Dense(10, input_shape=(128, ), activation="softmax"))

model.compile(optimizer='adam', loss="sparse_categorical_crossentropy", metrics="acc")

history = model.fit(train_x, train_y, batch_size=64, epochs=100)

测试集的读取:

test_datas = pd.read_csv(dataset_path+"/test.csv")

test_datas.head()

test_x = test_datas.iloc[:,]

result = model.predict(test_x)

result = tf.argmax(result, axis=1).numpy()

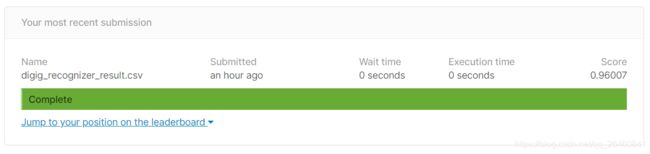

# 由于提交所需的仅仅是csv文件,那么存储一下

# 存储到csv文件中

df = pd.DataFrame({

"ImageId": range(1, len(result)+1), "Label": list(result)})

df.to_csv("digig_recognizer_result.csv", sep=",", index=False) # index表示是否显示行名,default=True