Getting Started with Flink (6): Flink CEP

Flink CEP

- Overview and classification of Flink CEP

- Pattern API

  - Pattern categories

  - Pattern sequences

  - Detecting patterns

  - Extracting matched events

  - Extracting timed-out events

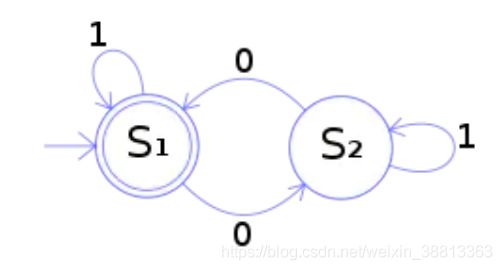

- How Flink CEP works: nondeterministic finite automaton (NFA)

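Overview and classification of Flink CEP

- Overview of Flink CEP

CEP stands for Complex Event Processing, and Flink CEP is the library that implements complex event processing in Flink. The rules used to process events are called patterns. Flink CEP provides the Pattern API for defining complex event rules over the input data and extracting the event sequences that satisfy those rules.

- Three kinds of Pattern API: individual patterns, combining patterns, and groups of patterns

- Applications: real-time monitoring of malicious logins and fraudulent transactions, risk identification in the financial industry, marketing and advertising, etc.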

Pattern API

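Pattern categories

- Individual patterns: an individual pattern is either a singleton pattern or a looping pattern. A singleton pattern accepts a single event, while a looping pattern can accept multiple events.

(1) Quantifiers: turn a pattern into a looping pattern and specify how often it must occur, e.g. .times(), .timesOrMore(), .oneOrMore(), optionally combined with .optional() and .greedy().

(2) Conditions: .where(), .or() and .until(). .until() acts as a stop condition for a looping pattern, which also lets Flink clean up state.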

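```scala
start.times(3).where(_.behavior.startsWith("fav")) // exactly 3 events whose behavior starts with "fav"
start.times(1, 2)                                  // occurs 1 or 2 times
start.timesOrMore(2).optional.greedy               // occurs 0, 2 or more times, repeating as often as possible
```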
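The quantifier rules above boil down to a range of accepted occurrence counts. The following plain-Java sketch (no Flink dependency) models that idea. `QuantifierSketch`, `accepts` and `countLeading` are hypothetical names, not Flink API. `greedy()` is not modeled, and the `until` handling only covers strictly consecutive looping.

```java
import java.util.Arrays;
import java.util.List;
import java.util.function.Predicate;

// Hypothetical model of a CEP quantifier as a range of accepted occurrence counts.
// Not the Flink API: it only illustrates the counting rules of times/timesOrMore/optional.
final class QuantifierSketch {
    final int min;            // minimum number of occurrences
    final int max;            // maximum number of occurrences (Integer.MAX_VALUE = unbounded)
    final boolean optional;   // optional() additionally accepts zero occurrences

    private QuantifierSketch(int min, int max, boolean optional) {
        this.min = min;
        this.max = max;
        this.optional = optional;
    }

    static QuantifierSketch times(int n) { return new QuantifierSketch(n, n, false); }

    static QuantifierSketch times(int from, int to) { return new QuantifierSketch(from, to, false); }

    static QuantifierSketch timesOrMore(int n) { return new QuantifierSketch(n, Integer.MAX_VALUE, false); }

    QuantifierSketch optional() { return new QuantifierSketch(min, max, true); }

    // Does a run of `count` accepted events satisfy the quantifier?
    boolean accepts(int count) {
        return (optional && count == 0) || (count >= min && count <= max);
    }

    // Length of the leading run of events that pass `where` (possibly combined with `or`),
    // stopping at the first event that fails it or that satisfies the `until` stop condition.
    // Models strictly consecutive looping only (similar to consecutive() in Flink).
    static <T> int countLeading(List<T> events, Predicate<T> where, Predicate<T> until) {
        int count = 0;
        for (T event : events) {
            if (until.test(event) || !where.test(event)) {
                break;
            }
            count++;
        }
        return count;
    }

    public static void main(String[] args) {
        System.out.println(times(3).accepts(3));                  // true
        System.out.println(times(1, 2).accepts(3));               // false
        System.out.println(timesOrMore(2).optional().accepts(0)); // true
        System.out.println(timesOrMore(2).optional().accepts(1)); // false

        List<String> behaviors = Arrays.asList("fav", "fav_shop", "cart", "buy", "fav");
        Predicate<String> isFav = b -> b.startsWith("fav");
        // Roughly: where(startsWith "fav").or(equals "cart").until(equals "buy")
        int run = countLeading(behaviors, isFav.or(b -> b.equals("cart")), b -> b.equals("buy"));
        System.out.println(run);                                  // 3
    }
}
```

The run stops at "buy" because the `until` condition matches, so the trailing "fav" is never counted.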

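- Combining patterns: a pattern sequence starts from an initial pattern, and further patterns are appended to it:

```scala
val start = Pattern.begin[Event]("start")
```

- Groups of patterns: a pattern sequence is nested as the condition of an individual pattern (for example, passed to begin(), next() or followedBy()), forming a group of patterns.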

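Pattern sequences

- Strict contiguity: all matching events appear strictly one after another, with no non-matching events in between. Specified with .next().

- Relaxed contiguity: non-matching events may appear in between. Specified with .followedBy().

- Non-deterministic relaxed contiguity: events that have already been matched can be used again, so one start event can produce several matches. Specified with .followedByAny().

- To require that a certain contiguity does NOT occur, use .notNext() (the next event must not match) or .notFollowedBy() (no matching event may appear in between).

- Rules: every pattern sequence must start with .begin(); a pattern sequence cannot end with .notFollowedBy(); a not-pattern cannot be modified with .optional(); and a time constraint can be set with .within(), so that a match is only valid if it completes within the given time span.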
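A small plain-Java simulation makes the three contiguity modes visible for a two-step pattern "a, then b" on the event sequence a, c, b1, b2. `ContiguitySketch` and its `Mode` enum are hypothetical. They only mimic the matching semantics described above and say nothing about how Flink's NFA is implemented.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

// Hypothetical illustration (not Flink API) of strict, relaxed and
// non-deterministic relaxed contiguity for the pattern "a" then "b*".
final class ContiguitySketch {
    enum Mode { STRICT, RELAXED, NON_DETERMINISTIC_RELAXED }

    static List<List<String>> match(List<String> events, Mode mode) {
        List<List<String>> matches = new ArrayList<>();
        for (int i = 0; i < events.size(); i++) {
            if (!events.get(i).equals("a")) continue;        // only "a" can start a match
            for (int j = i + 1; j < events.size(); j++) {
                boolean isB = events.get(j).startsWith("b");
                if (mode == Mode.STRICT) {
                    // next(): only the directly following event may complete the match
                    if (isB) matches.add(Arrays.asList("a", events.get(j)));
                    break;
                }
                if (isB) {
                    matches.add(Arrays.asList("a", events.get(j)));
                    // followedBy(): stop at the first "b"; followedByAny(): keep looking
                    if (mode == Mode.RELAXED) break;
                }
                // Non-matching events in between are skipped in both relaxed modes
            }
        }
        return matches;
    }

    public static void main(String[] args) {
        List<String> events = Arrays.asList("a", "c", "b1", "b2");
        for (Mode mode : Mode.values()) {
            System.out.println(mode + " -> " + match(events, mode));
        }
        // STRICT -> []
        // RELAXED -> [[a, b1]]
        // NON_DETERMINISTIC_RELAXED -> [[a, b1], [a, b2]]
    }
}
```

Strict contiguity finds nothing because "c" sits between "a" and "b1". Relaxed contiguity stops at the first "b". Non-deterministic relaxed contiguity reuses "a" for both "b1" and "b2".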

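Detecting patterns

Once the pattern sequence to look for has been specified, it can be applied to an input stream to detect potential matches. Calling CEP.pattern() with the input stream and the pattern returns a PatternStream. Each time a match succeeds, the PatternStream emits that match, with the events grouped by the names of the patterns that accepted them.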

```scala
val input: DataStream[Event] = ...

val pattern: Pattern[Event, _] = ...

val patternStream: PatternStream[Event] = CEP.pattern(input, pattern)
```

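Extracting matched events

After the PatternStream has been created, apply select or flatSelect to extract events from the detected event sequences. select() takes a PatternSelectFunction as its argument, which is called once for every successfully matched event sequence. flatSelect() works the same way but can emit any number of results through a Collector. In the Scala API the match is passed in as a Map[String, Iterable[IN]], where each key is the name of a pattern and each value is an Iterable of all events accepted by that pattern. The Java API uses Map<String, List<IN>> instead.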

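```scala
def selectFn(pattern: Map[String, Iterable[IN]]): OUT = {
  // Each value is an Iterable: take the first event accepted by "start" and by "end"
  val startEvent = pattern.get("start").get.head
  val endEvent = pattern.get("end").get.head
  OUT(startEvent, endEvent)
}
```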
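In the Java API the same argument arrives as a Map<String, List<IN>>. The plain-Java sketch below builds such a map by hand to show its shape. The class `SelectSketch`, the pattern names "start", "middle" and "end", and the event strings are illustrative assumptions. The value is a list because a looping pattern can accept several events.

```java
import java.util.Arrays;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

// Hypothetical stand-in for what a PatternSelectFunction receives:
// pattern name -> events accepted by that pattern (not Flink code).
final class SelectSketch {
    // Java counterpart of the Scala selectFn: first event of "start" and of "end"
    static String select(Map<String, List<String>> pattern) {
        String startEvent = pattern.get("start").get(0);
        String endEvent = pattern.get("end").get(0);
        return startEvent + " -> " + endEvent;
    }

    public static void main(String[] args) {
        Map<String, List<String>> match = new LinkedHashMap<>();
        match.put("start", Arrays.asList("login"));
        match.put("middle", Arrays.asList("fav", "fav", "cart")); // a looping pattern collects several events
        match.put("end", Arrays.asList("buy"));
        System.out.println(select(match));              // login -> buy
        System.out.println(match.get("middle").size()); // 3
    }
}
```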

```java
package com.lagou.mycep;

import org.apache.flink.api.common.eventtime.*;
import org.apache.flink.cep.CEP;
import org.apache.flink.cep.PatternStream;
import org.apache.flink.cep.functions.PatternProcessFunction;
import org.apache.flink.cep.pattern.Pattern;
import org.apache.flink.cep.pattern.conditions.IterativeCondition;
import org.apache.flink.streaming.api.TimeCharacteristic;
import org.apache.flink.streaming.api.datastream.DataStreamSource;
import org.apache.flink.streaming.api.datastream.KeyedStream;
import org.apache.flink.streaming.api.datastream.SingleOutputStreamOperator;
import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;
import org.apache.flink.streaming.api.windowing.time.Time;
import org.apache.flink.util.Collector;

import java.util.List;
import java.util.Map;

// LoginBean is a POJO in the same package (not shown in the original post) with a
// (Long id, String state, long ts) constructor and getId()/getState()/getTs() getters.
public class LoginDemo {

    public static void main(String[] args) throws Exception {
        /*
         * 1. Data source
         * 2. Assign timestamps and watermarks on the source
         * 3. keyBy the watermarked stream by user id
         * 4. Define the pattern
         * 5. Apply the pattern to the stream
         * 6. Extract the successfully matched data
         */
        StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();
        env.setStreamTimeCharacteristic(TimeCharacteristic.EventTime);
        env.setParallelism(1);

        // 1. Data source: (user id, login result, event timestamp in ms)
        DataStreamSource<LoginBean> data = env.fromElements(
                new LoginBean(1L, "fail", 1597905234000L),
                new LoginBean(1L, "success", 1597905235000L),
                new LoginBean(2L, "fail", 1597905236000L),
                new LoginBean(2L, "fail", 1597905237000L),
                new LoginBean(2L, "fail", 1597905238000L),
                new LoginBean(3L, "fail", 1597905239000L),
                new LoginBean(3L, "success", 1597905240000L)
        );

        // 2. Assign timestamps and watermarks, allowing 500 ms of out-of-orderness
        SingleOutputStreamOperator<LoginBean> watermarks = data.assignTimestampsAndWatermarks(new WatermarkStrategy<LoginBean>() {
            @Override
            public WatermarkGenerator<LoginBean> createWatermarkGenerator(WatermarkGeneratorSupplier.Context context) {
                return new WatermarkGenerator<LoginBean>() {
                    final long maxOutOfOrderness = 500L;
                    // Start just above Long.MIN_VALUE so that the subtraction in
                    // onPeriodicEmit() cannot overflow before the first event arrives
                    long maxTimeStamp = Long.MIN_VALUE + maxOutOfOrderness + 1;

                    @Override
                    public void onEvent(LoginBean event, long eventTimestamp, WatermarkOutput output) {
                        maxTimeStamp = Math.max(maxTimeStamp, event.getTs());
                    }

                    @Override
                    public void onPeriodicEmit(WatermarkOutput output) {
                        output.emitWatermark(new Watermark(maxTimeStamp - maxOutOfOrderness));
                    }
                };
            }
        }.withTimestampAssigner((element, recordTimestamp) -> element.getTs()));

        // 3. keyBy user id so that each user is matched independently
        KeyedStream<LoginBean, Long> keyed = watermarks.keyBy(value -> value.getId());

        // 4. Pattern: two directly consecutive failed logins within 5 seconds
        Pattern<LoginBean, LoginBean> pattern = Pattern.<LoginBean>begin("start").where(new IterativeCondition<LoginBean>() {
            @Override
            public boolean filter(LoginBean value, Context<LoginBean> ctx) throws Exception {
                return value.getState().equals("fail");
            }
        })
                .next("next").where(new IterativeCondition<LoginBean>() {
                    @Override
                    public boolean filter(LoginBean value, Context<LoginBean> ctx) throws Exception {
                        return value.getState().equals("fail");
                    }
                })
                .within(Time.seconds(5));

        // 5. Apply the pattern to the keyed stream
        PatternStream<LoginBean> patternStream = CEP.pattern(keyed, pattern);

        // 6. Extract the matches: emit the user id of each match
        SingleOutputStreamOperator<Long> result = patternStream.process(new PatternProcessFunction<LoginBean, Long>() {
            @Override
            public void processMatch(Map<String, List<LoginBean>> match, Context ctx, Collector<Long> out) throws Exception {
                // System.out.println(match); // uncomment to inspect the whole match
                out.collect(match.get("start").get(0).getId());
            }
        });

        result.print();
        env.execute();
    }
}
```
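To reason about what LoginDemo should print without starting a Flink job, the sketch below re-implements the same rule in plain Java. Per user id (the keyBy), it reports every pair of directly consecutive "fail" events (next()) whose timestamps are less than 5 s apart (within()). Treating the window boundary as strictly less than is an assumption, and `LoginSim` and `Login` are hypothetical stand-ins, not Flink classes. With the LoginDemo data it reports user 2 twice, once per overlapping pair of failures, which is my reading of CEP's default behaviour of not skipping overlapping matches.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

// Plain-Java re-implementation of LoginDemo's rule (not Flink code).
final class LoginSim {
    // Hypothetical stand-in for LoginBean
    static final class Login {
        final long id;
        final String state;
        final long ts;

        Login(long id, String state, long ts) {
            this.id = id;
            this.state = state;
            this.ts = ts;
        }
    }

    // Returns one user id per detected match; input is assumed to be ordered by timestamp.
    static List<Long> detect(List<Login> input, long windowMillis) {
        Map<Long, List<Login>> byUser = new LinkedHashMap<>(); // keyBy: per-user event lists
        for (Login login : input) {
            byUser.computeIfAbsent(login.id, k -> new ArrayList<>()).add(login);
        }
        List<Long> alerts = new ArrayList<>();
        for (List<Login> events : byUser.values()) {
            for (int i = 0; i + 1 < events.size(); i++) {
                Login first = events.get(i);
                Login second = events.get(i + 1); // next(): only the directly following event counts
                boolean bothFailed = first.state.equals("fail") && second.state.equals("fail");
                // within(): assumed to require a time span strictly below the window
                if (bothFailed && second.ts - first.ts < windowMillis) {
                    alerts.add(first.id);
                }
            }
        }
        return alerts;
    }

    public static void main(String[] args) {
        List<Login> data = new ArrayList<>();
        data.add(new Login(1L, "fail", 1597905234000L));
        data.add(new Login(1L, "success", 1597905235000L));
        data.add(new Login(2L, "fail", 1597905236000L));
        data.add(new Login(2L, "fail", 1597905237000L));
        data.add(new Login(2L, "fail", 1597905238000L));
        data.add(new Login(3L, "fail", 1597905239000L));
        data.add(new Login(3L, "success", 1597905240000L));
        System.out.println(detect(data, 5000L)); // [2, 2]
    }
}
```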

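Extracting timed-out events

Flink CEP development workflow:

- Convert the data from the data source into a DataStream

- Define a Pattern and combine the DataStream and the Pattern into a PatternStream

- Transform the PatternStream back into a DataStream with operators such as select or process

- Process the resulting DataStream and sink it to the target store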

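Partial matches that exceed the within() limit can be routed to a side output: select() also accepts an OutputTag, a PatternTimeoutFunction and a PatternSelectFunction. The snippet assumes an event type PayEvent, an existing patternStream, and a pattern whose steps are named "begin" and "pay":

```java
// Side output tag for timed-out partial matches (the id "orderTimeout" is illustrative;
// the original snippet used orderTimeoutOutput without defining it)
OutputTag<PayEvent> orderTimeoutOutput = new OutputTag<PayEvent>("orderTimeout") {};

SingleOutputStreamOperator<PayEvent> result = patternStream.select(
        orderTimeoutOutput,
        // Called for partial matches that exceeded within(): return the "begin" event
        new PatternTimeoutFunction<PayEvent, PayEvent>() {
            @Override
            public PayEvent timeout(Map<String, List<PayEvent>> map, long timeoutTimestamp) throws Exception {
                return map.get("begin").get(0);
            }
        },
        // Called for complete matches: return the "pay" event
        new PatternSelectFunction<PayEvent, PayEvent>() {
            @Override
            public PayEvent select(Map<String, List<PayEvent>> map) throws Exception {
                return map.get("pay").get(0);
            }
        });

// Side output containing the partial matches that timed out
DataStream<PayEvent> sideOutput = result.getSideOutput(orderTimeoutOutput);
```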
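To see which orders end up in the main output and which in the side output, here is a plain-Java simulation of the rule used by the PayDemo listing: a "create" followed later by a "pay" for the same order within 600 s is a match, and a "create" without a timely "pay" is a timed-out partial match. `TimeoutSim` and `Pay` are hypothetical. As an assumption, the end of the bounded input plays the role of the final watermark, so every order still waiting for "pay" at that point times out.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

// Plain-Java simulation of select(outputTag, timeoutFn, selectFn), not Flink code.
final class TimeoutSim {
    // Hypothetical stand-in for PayBean / PayEvent
    static final class Pay {
        final long id;
        final String state;
        final long ts;

        Pay(long id, String state, long ts) {
            this.id = id;
            this.state = state;
            this.ts = ts;
        }
    }

    final List<Long> matched = new ArrayList<>();  // what the PatternSelectFunction would see
    final List<Long> timedOut = new ArrayList<>(); // what the PatternTimeoutFunction would see

    // Assumes input ordered by timestamp and at most one "create" per order id.
    void run(List<Pay> input, long windowMillis) {
        Map<Long, Long> openCreates = new LinkedHashMap<>(); // order id -> create timestamp
        for (Pay event : input) {
            if (event.state.equals("create")) {
                openCreates.put(event.id, event.ts);
            } else if (event.state.equals("pay") && openCreates.containsKey(event.id)) {
                long createdAt = openCreates.remove(event.id);
                if (event.ts - createdAt < windowMillis) {
                    matched.add(event.id);
                } else {
                    timedOut.add(event.id); // the window had already expired before the payment
                }
            }
        }
        // Assumption: end of input acts like the final watermark of a bounded source
        timedOut.addAll(openCreates.keySet());
    }

    public static void main(String[] args) {
        List<Pay> data = new ArrayList<>();
        data.add(new Pay(1L, "create", 1597905234000L));
        data.add(new Pay(1L, "pay", 1597905235000L));
        data.add(new Pay(2L, "create", 1597905236000L));
        data.add(new Pay(2L, "pay", 1597905237000L));
        data.add(new Pay(3L, "create", 1597905239000L));
        TimeoutSim sim = new TimeoutSim();
        sim.run(data, 600_000L);                           // within(Time.seconds(600))
        System.out.println("matched:   " + sim.matched);  // matched:   [1, 2]
        System.out.println("timed out: " + sim.timedOut); // timed out: [3]
    }
}
```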

Complete code

```java
package com.lagou.mycep;

import org.apache.flink.api.common.eventtime.*;
import org.apache.flink.cep.CEP;
import org.apache.flink.cep.PatternSelectFunction;
import org.apache.flink.cep.PatternStream;
import org.apache.flink.cep.PatternTimeoutFunction;
import org.apache.flink.cep.pattern.Pattern;
import org.apache.flink.cep.pattern.conditions.IterativeCondition;
import org.apache.flink.streaming.api.TimeCharacteristic;
import org.apache.flink.streaming.api.datastream.DataStream;
import org.apache.flink.streaming.api.datastream.DataStreamSource;
import org.apache.flink.streaming.api.datastream.KeyedStream;
import org.apache.flink.streaming.api.datastream.SingleOutputStreamOperator;
import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;
import org.apache.flink.streaming.api.windowing.time.Time;
import org.apache.flink.util.OutputTag;

import java.util.List;
import java.util.Map;

// PayBean is a POJO in the same package (not shown in the original post) with a
// (Long id, String state, long ts) constructor and getId()/getState()/getTs() getters.
public class PayDemo {

    public static void main(String[] args) throws Exception {
        StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();
        env.setStreamTimeCharacteristic(TimeCharacteristic.EventTime);
        env.setParallelism(1);

        // Order events: (order id, state, event timestamp in ms); order 3 is never paid
        DataStreamSource<PayBean> data = env.fromElements(
                new PayBean(1L, "create", 1597905234000L),
                new PayBean(1L, "pay", 1597905235000L),
                new PayBean(2L, "create", 1597905236000L),
                new PayBean(2L, "pay", 1597905237000L),
                new PayBean(3L, "create", 1597905239000L)
        );

        // Timestamps and watermarks, allowing 500 ms of out-of-orderness
        SingleOutputStreamOperator<PayBean> watermarks = data.assignTimestampsAndWatermarks(new WatermarkStrategy<PayBean>() {
            @Override
            public WatermarkGenerator<PayBean> createWatermarkGenerator(WatermarkGeneratorSupplier.Context context) {
                return new WatermarkGenerator<PayBean>() {
                    final long maxOutOfOrderness = 500L;
                    // Start just above Long.MIN_VALUE so that the subtraction in
                    // onPeriodicEmit() cannot overflow before the first event arrives
                    long maxTimeStamp = Long.MIN_VALUE + maxOutOfOrderness + 1;

                    @Override
                    public void onEvent(PayBean event, long eventTimestamp, WatermarkOutput output) {
                        maxTimeStamp = Math.max(maxTimeStamp, event.getTs());
                    }

                    @Override
                    public void onPeriodicEmit(WatermarkOutput output) {
                        output.emitWatermark(new Watermark(maxTimeStamp - maxOutOfOrderness));
                    }
                };
            }
        }.withTimestampAssigner((element, recordTimestamp) -> element.getTs()));

        KeyedStream<PayBean, Long> keyed = watermarks.keyBy(value -> value.getId());

        // Pattern: "create" followed (relaxed contiguity) by "pay" within 10 minutes
        Pattern<PayBean, PayBean> pattern = Pattern.<PayBean>begin("start").where(new IterativeCondition<PayBean>() {
            @Override
            public boolean filter(PayBean value, Context<PayBean> ctx) throws Exception {
                return value.getState().equals("create");
            }
        })
                .followedBy("next").where(new IterativeCondition<PayBean>() {
                    @Override
                    public boolean filter(PayBean value, Context<PayBean> ctx) throws Exception {
                        return value.getState().equals("pay");
                    }
                })
                .within(Time.seconds(600));

        PatternStream<PayBean> patternStream = CEP.pattern(keyed, pattern);

        // Side output for timed-out partial matches; the trailing {} creates an
        // anonymous subclass so that Flink can capture the element type
        OutputTag<PayBean> outoftime = new OutputTag<PayBean>("outoftime") {
        };

        // select(): complete matches go to the main output, timed-out ones to the side output
        SingleOutputStreamOperator<PayBean> result = patternStream.select(outoftime, new PatternTimeoutFunction<PayBean, PayBean>() {
            @Override
            public PayBean timeout(Map<String, List<PayBean>> pattern, long timeoutTimestamp) throws Exception {
                return pattern.get("start").get(0); // the "create" event of the unpaid order
            }
        }, new PatternSelectFunction<PayBean, PayBean>() {
            @Override
            public PayBean select(Map<String, List<PayBean>> pattern) throws Exception {
                return pattern.get("start").get(0); // the "create" event of the paid order
            }
        });

        // Print the orders that were created but not paid within 10 minutes
        DataStream<PayBean> sideOutput = result.getSideOutput(outoftime);
        sideOutput.print();

        env.execute();
    }
}
```
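Both demos compute the periodic watermark as maxTimeStamp - maxOutOfOrderness. The plain-Java sketch below shows why the starting value of maxTimeStamp matters. If it starts at Long.MIN_VALUE, the subtraction overflows before the first event arrives and yields a huge positive watermark, after which the CEP operator would treat the following events as late. Starting from Long.MIN_VALUE + maxOutOfOrderness + 1 avoids this. As far as I know, Flink's built-in `WatermarkStrategy.forBoundedOutOfOrderness(Duration)` applies a similar guard, so it is an alternative to a hand-written generator. `WatermarkSketch` is a hypothetical name.

```java
// Plain-Java check of the watermark formula used in LoginDemo and PayDemo (not Flink code).
final class WatermarkSketch {
    static final long MAX_OUT_OF_ORDERNESS = 500L;

    // Watermark that onPeriodicEmit() would emit for a given maxTimeStamp
    static long watermark(long maxTimeStamp) {
        return maxTimeStamp - MAX_OUT_OF_ORDERNESS;
    }

    public static void main(String[] args) {
        // Naive start value: Long.MIN_VALUE - 500 wraps around to Long.MAX_VALUE - 499
        System.out.println(watermark(Long.MIN_VALUE) > 0);                   // true (overflow)

        // Guarded start value: the first watermark stays at the bottom of the long range
        long guardedStart = Long.MIN_VALUE + MAX_OUT_OF_ORDERNESS + 1;
        System.out.println(watermark(guardedStart) == Long.MIN_VALUE + 1);   // true

        // Once an event with ts = 1597905234000 has been seen, the watermark trails it by 500 ms
        System.out.println(watermark(Math.max(guardedStart, 1597905234000L))); // 1597905233500
    }
}
```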