MMLAB series: modifying mmsegmentation strategies, starting from U-Net

1. Reading the configuration file

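Follow the steps in the previous post to generate the configuration file, then modify it. Every type named in the configuration file refers to a module that is already registered, so each one can be swapped as needed. The model-related types (backbones, necks, heads, losses) can all be found under mmsegmentation\mmseg\models.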
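To make the role of `type` concrete, here is a minimal sketch of the registry idea: a string in the config is looked up in a table of registered classes, and the remaining keys become constructor arguments. The `Registry` class below is a toy stand-in written for illustration, not mmcv's actual implementation, and the `UNet` class is an empty placeholder.

```python
class Registry:
    """Toy registry (illustrative only, not mmcv's Registry)."""

    def __init__(self, name):
        self.name = name
        self._modules = {}

    def register_module(self):
        # Decorator that stores the class under its own name.
        def decorator(cls):
            self._modules[cls.__name__] = cls
            return cls
        return decorator

    def build(self, cfg):
        args = dict(cfg)              # copy so the caller's config is untouched
        cls_name = args.pop('type')   # 'type' selects the class
        if cls_name not in self._modules:
            raise KeyError(f'{cls_name!r} is not registered in {self.name}')
        return self._modules[cls_name](**args)  # remaining keys are kwargs


BACKBONES = Registry('backbone')  # hypothetical registry instance


@BACKBONES.register_module()
class UNet:
    """Placeholder class; it only records its arguments."""

    def __init__(self, in_channels=3, base_channels=64, num_stages=5):
        self.in_channels = in_channels
        self.base_channels = base_channels
        self.num_stages = num_stages


backbone = BACKBONES.build(dict(type='UNet', in_channels=3, base_channels=64))
print(type(backbone).__name__, backbone.base_channels)
```

Changing a module in the config therefore amounts to changing the `type` string and supplying the arguments that the new class expects.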

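Previous post: MMLAB series: using mmsegmentation (https://blog.csdn.net/qq_52053775/article/details/126796659). It covers annotating data with labelme and converting the labelme dataset format to VOC format with the conversion script that labelme provides. After conversion, JPEGImages holds the images and SegmentationClassPNG holds the labels.

As shown below, the selected U-Net model is an EncoderDecoder segmentor made up of a UNet backbone, a decode_head, and an auxiliary_head.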

norm_cfg = dict(type='SyncBN', requires_grad=True)
model = dict(
    type='EncoderDecoder',
    pretrained=None,
    backbone=dict(
        type='UNet',
        in_channels=3,
        base_channels=64,
        num_stages=5,
        strides=(1, 1, 1, 1, 1),
        enc_num_convs=(2, 2, 2, 2, 2),
        dec_num_convs=(2, 2, 2, 2),
        downsamples=(True, True, True, True),
        enc_dilations=(1, 1, 1, 1, 1),
        dec_dilations=(1, 1, 1, 1),
        with_cp=False,
        conv_cfg=None,
        norm_cfg=dict(type='SyncBN', requires_grad=True),
        act_cfg=dict(type='ReLU'),
        upsample_cfg=dict(type='InterpConv'),
        norm_eval=False),

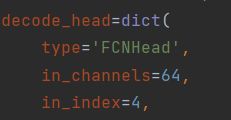

    decode_head=dict(
        type='FCNHead',
        in_channels=64,
        in_index=4,
        channels=64,
        num_convs=1,
        concat_input=False,
        dropout_ratio=0.1,
        num_classes=2,
        norm_cfg=dict(type='SyncBN', requires_grad=True),
        align_corners=False,
        loss_decode=[
            dict(
                type='CrossEntropyLoss', loss_name='loss_ce', loss_weight=1.0),
            dict(type='DiceLoss', loss_name='loss_dice', loss_weight=3.0)
        ]),
    auxiliary_head=dict(
        type='FCNHead',
        in_channels=128,
        in_index=3,
        channels=64,
        num_convs=1,
        concat_input=False,
        dropout_ratio=0.1,
        num_classes=2,
        norm_cfg=dict(type='SyncBN', requires_grad=True),
        align_corners=False,
        loss_decode=dict(
            type='CrossEntropyLoss', use_sigmoid=False, loss_weight=0.4)),
    train_cfg=dict(),
    test_cfg=dict(mode='slide', crop_size=(64, 64), stride=(42, 42)))

dataset_type = 'PascalContextDataset'
data_root = 'E:/MMLAB/mmsegmentation/data/my_cell_voc'
img_norm_cfg = dict(
    mean=[123.675, 116.28, 103.53], std=[58.395, 57.12, 57.375], to_rgb=True)
img_scale = (584, 565)
crop_size = (64, 64)
train_pipeline = [
    dict(type='LoadImageFromFile'),
    dict(type='LoadAnnotations'),
    dict(type='Resize', img_scale=(584, 565), ratio_range=(0.5, 2.0)),
    dict(type='RandomCrop', crop_size=(64, 64), cat_max_ratio=0.75),
    dict(type='RandomFlip', prob=0.5),
    dict(type='PhotoMetricDistortion'),
    dict(
        type='Normalize',
        mean=[123.675, 116.28, 103.53],
        std=[58.395, 57.12, 57.375],
        to_rgb=True),
    dict(type='Pad', size=(64, 64), pad_val=0, seg_pad_val=255),
    dict(type='DefaultFormatBundle'),
    dict(type='Collect', keys=['img', 'gt_semantic_seg'])
]
test_pipeline = [
    dict(type='LoadImageFromFile'),
    dict(
        type='MultiScaleFlipAug',
        img_scale=(584, 565),
        flip=False,
        transforms=[
            dict(type='Resize', keep_ratio=True),
            dict(type='RandomFlip'),
            dict(
                type='Normalize',
                mean=[123.675, 116.28, 103.53],
                std=[58.395, 57.12, 57.375],
                to_rgb=True),
            dict(type='ImageToTensor', keys=['img']),
            dict(type='Collect', keys=['img'])
        ])
]

data = dict(
    samples_per_gpu=3,
    workers_per_gpu=1,
    train=dict(
        type='PascalContextDataset',
        data_root='E:/MMLAB/mmsegmentation/data/my_cell_voc/',
        img_dir='JPEGImages',
        ann_dir='SegmentationClassPNG',
        split='train.txt',
        pipeline=[
            dict(type='LoadImageFromFile'),
            dict(type='LoadAnnotations'),
            dict(
                type='Resize',
                img_scale=(584, 565),
                ratio_range=(0.5, 2.0)),
            dict(
                type='RandomCrop', crop_size=(64, 64), cat_max_ratio=0.75),
            dict(type='RandomFlip', prob=0.5),
            dict(type='PhotoMetricDistortion'),
            dict(
                type='Normalize',
                mean=[123.675, 116.28, 103.53],
                std=[58.395, 57.12, 57.375],
                to_rgb=True),
            dict(type='Pad', size=(64, 64), pad_val=0, seg_pad_val=255),
            dict(type='DefaultFormatBundle'),
            dict(type='Collect', keys=['img', 'gt_semantic_seg'])
        ]),
    val=dict(
        type='PascalContextDataset',
        data_root='E:/MMLAB/mmsegmentation/data/my_cell_voc/',
        img_dir='JPEGImages',
        ann_dir='SegmentationClassPNG',
        split='val.txt',
        pipeline=[
            dict(type='LoadImageFromFile'),
            dict(
                type='MultiScaleFlipAug',
                img_scale=(584, 565),
                flip=False,
                transforms=[
                    dict(type='Resize', keep_ratio=True),
                    dict(type='RandomFlip'),
                    dict(
                        type='Normalize',
                        mean=[123.675, 116.28, 103.53],
                        std=[58.395, 57.12, 57.375],
                        to_rgb=True),
                    dict(type='ImageToTensor', keys=['img']),
                    dict(type='Collect', keys=['img'])
                ])
        ]),
    test=dict(
        type='PascalContextDataset',
        data_root='E:/MMLAB/mmsegmentation/data/my_cell_voc/',
        img_dir='JPEGImages',
        ann_dir='SegmentationClassPNG',
        split='test.txt',
        pipeline=[
            dict(type='LoadImageFromFile'),
            dict(
                type='MultiScaleFlipAug',
                img_scale=(584, 565),
                flip=False,
                transforms=[
                    dict(type='Resize', keep_ratio=True),
                    dict(type='RandomFlip'),
                    dict(
                        type='Normalize',
                        mean=[123.675, 116.28, 103.53],
                        std=[58.395, 57.12, 57.375],
                        to_rgb=True),
                    dict(type='ImageToTensor', keys=['img']),
                    dict(type='Collect', keys=['img'])
                ])
        ]))

log_config = dict(
    interval=50, hooks=[dict(type='TextLoggerHook', by_epoch=False)])
dist_params = dict(backend='nccl')
log_level = 'INFO'
load_from = None
resume_from = None
workflow = [('train', 1)]
cudnn_benchmark = True
optimizer = dict(type='SGD', lr=0.01, momentum=0.9, weight_decay=0.0005)
optimizer_config = dict()
lr_config = dict(policy='poly', power=0.9, min_lr=0.0001, by_epoch=False)
runner = dict(type='IterBasedRunner', max_iters=40000)
checkpoint_config = dict(by_epoch=False, interval=4000)
evaluation = dict(interval=4000, metric='mDice', pre_eval=True)
work_dir = './work_dirs/fcn_unet'
gpu_ids = [0]

auto_resume = False

2. Encoding layers

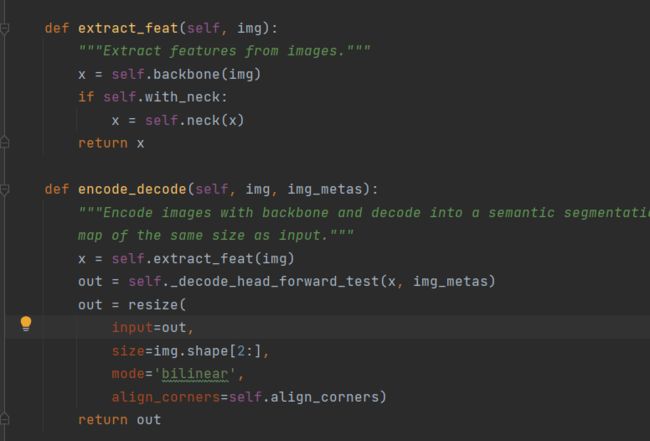

Locate the class that defines the model, in mmseg/models/segmentors/encoder_decoder.py. The whole encoder_decoder model is made up mainly of a backbone, a neck, and heads.
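The order in which these parts are called can be sketched with a toy class. This is an illustrative stand-in and not the code in encoder_decoder.py: the class `ToyEncoderDecoder` and the three `toy_*` functions are hypothetical, and strings are used in place of tensors so the data flow is easy to trace.

```python
class ToyEncoderDecoder:
    """Toy stand-in that only mirrors the order in which the parts run."""

    def __init__(self, backbone, decode_head, neck=None, auxiliary_head=None):
        self.backbone = backbone
        self.neck = neck
        self.decode_head = decode_head
        self.auxiliary_head = auxiliary_head

    def extract_feat(self, img):
        feats = self.backbone(img)      # list of multi-level feature maps
        if self.neck is not None:       # the neck is optional
            feats = self.neck(feats)
        return feats

    def forward_train(self, img):
        feats = self.extract_feat(img)
        outputs = {'decode': self.decode_head(feats)}
        if self.auxiliary_head is not None:
            # Extra supervision signal, used during training only.
            outputs['aux'] = self.auxiliary_head(feats)
        return outputs

    def forward_test(self, img):
        # Only the main decode head produces the prediction.
        return self.decode_head(self.extract_feat(img))


def toy_backbone(img):
    return [f'feat{i}({img})' for i in range(5)]


def toy_decode_head(feats):
    return f'seg<-{feats[4]}'   # picks one feature map, like in_index=4


def toy_aux_head(feats):
    return f'aux<-{feats[3]}'   # picks another one, like in_index=3


model = ToyEncoderDecoder(toy_backbone, toy_decode_head,
                          auxiliary_head=toy_aux_head)
print(model.forward_train('img'))
print(model.forward_test('img'))
```

No neck is passed here, which matches the U-Net config above; the ViT config in section 3 is the case where a neck sits between backbone and heads.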

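The chosen model is U-Net, which is fairly simple: the left side repeatedly downsamples to extract features, and the right side upsamples while fusing in the features from the same level on the left, which restores fine detail. For details, see my post:

U-net详解 (樱花的浪漫's blog on CSDN)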

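For the output head, FCNHead is specified. It can take a specific feature map as input (selected with in_index), but in_channels must then be set to the channel count of that feature map.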
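The pairing of in_index and in_channels can be checked with a few lines of arithmetic. The sketch below assumes that encoder stage i has base_channels * 2**i channels and that the backbone returns its feature maps ordered from the deepest stage to the final decoder stage; that ordering is an assumption, although it is consistent with the config above (index 4 has 64 channels, index 3 has 128). The function name `unet_output_channels` is made up for this example.

```python
def unet_output_channels(base_channels=64, num_stages=5):
    """Channel count of each returned feature map, deepest first (assumed order)."""
    # Encoder stage i doubles the width: 64, 128, 256, 512, 1024 by default.
    encoder = [base_channels * 2 ** i for i in range(num_stages)]
    # Each decoder stage brings the width back down one level, so the list of
    # outputs is the encoder widths in reverse order.
    return encoder[::-1]


channels = unet_output_channels(base_channels=64, num_stages=5)
print(channels)  # [1024, 512, 256, 128, 64]

# Head settings copied from the config above.
heads = {
    'decode_head': dict(in_index=4, in_channels=64),
    'auxiliary_head': dict(in_index=3, in_channels=128),
}
for name, head in heads.items():
    expected = channels[head['in_index']]
    status = 'ok' if expected == head['in_channels'] else 'MISMATCH'
    print(f"{name}: in_index={head['in_index']} expects {expected} channels, {status}")
```

If a head is pointed at a different in_index, its in_channels has to be changed to the matching entry of this list.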

The model also has an auxiliary output head, which provides deep supervision during training.
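How the loss weights from the config combine can be sketched as a weighted sum. The weights below are the loss_weight values from the U-Net config (1.0 for cross-entropy and 3.0 for Dice on the main head, 0.4 for cross-entropy on the auxiliary head); the raw loss values and the key names are invented purely for illustration.

```python
def total_loss(raw_losses, weights):
    """Weighted sum of the individual loss terms."""
    return sum(weights[name] * value for name, value in raw_losses.items())


# loss_weight values taken from the config above.
weights = {
    'decode.loss_ce': 1.0,
    'decode.loss_dice': 3.0,
    'aux.loss_ce': 0.4,
}

# Made-up raw loss values for a single training iteration.
raw_losses = {
    'decode.loss_ce': 0.50,
    'decode.loss_dice': 0.20,
    'aux.loss_ce': 0.60,
}

loss = total_loss(raw_losses, weights)
print(round(loss, 4))
```

The small weight on the auxiliary term means it nudges the intermediate features toward being useful for segmentation without dominating the main objective.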

3. Modifying the model

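We can modify the model by editing the generated configuration file. In the config shown below, the backbone is changed to VisionTransformer and an FPN is added as the neck. All that is required is to name modules that mmseg provides and write their parameters into the dicts. Note that adjacent modules have to connect: the channel counts (and similar parameters) that one module outputs must match what the next module expects.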
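The connection requirement can be turned into a small sanity check. The helper `check_connections` below is hypothetical (it is not part of mmseg) and only encodes the rules that follow from the ViT + FPN config in this section: one FPN input per backbone output, FPN input widths equal to embed_dims, head in_channels equal to the FPN out_channels, and in_index inside the range of FPN outputs.

```python
def check_connections(backbone, neck, heads):
    """Return a list of human-readable mismatches (empty if all parts connect)."""
    problems = []
    n_feats = len(backbone['out_indices'])
    if len(neck['in_channels']) != n_feats:
        problems.append(
            f"neck expects {len(neck['in_channels'])} inputs, "
            f"backbone returns {n_feats}")
    if any(c != backbone['embed_dims'] for c in neck['in_channels']):
        problems.append('neck in_channels must all equal backbone embed_dims')
    for name, head in heads.items():
        if head['in_channels'] != neck['out_channels']:
            problems.append(f'{name}: in_channels != neck out_channels')
        if not 0 <= head['in_index'] < neck['num_outs']:
            problems.append(f'{name}: in_index outside neck outputs')
    return problems


# Values copied from the config in this section.
backbone = dict(type='VisionTransformer', embed_dims=768,
                out_indices=(2, 3, 5, 8, 11))
neck = dict(type='FPN', in_channels=[768, 768, 768, 768, 768],
            out_channels=64, num_outs=5)
heads = {
    'decode_head': dict(in_channels=64, in_index=4),
    'auxiliary_head': dict(in_channels=64, in_index=3),
}

print(check_connections(backbone, neck, heads))  # []
```

Running a check of this kind before training catches a mismatch as a readable message instead of a shape error deep inside the first forward pass.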

For a code walkthrough of ViT, see my post:

VIT 源码详解 (樱花的浪漫's blog on CSDN)

    backbone=dict(
        type='VisionTransformer',
        img_size=(96, 96),
        patch_size=16,
        in_channels=3,
        embed_dims=768,
        num_layers=12,
        num_heads=12,
        mlp_ratio=4,
        out_indices=(2, 3, 5, 8, 11),
        qkv_bias=True,
        drop_rate=0.0,
        attn_drop_rate=0.0,
        drop_path_rate=0.0,
        with_cls_token=True,
        norm_cfg=dict(type='LN', eps=1e-06),
        act_cfg=dict(type='GELU'),
        norm_eval=False,
        interpolate_mode='bicubic'),
    neck=dict(
        type='FPN',
        in_channels=[768, 768, 768, 768, 768],
        out_channels=64,
        num_outs=5),
    decode_head=dict(
        type='FCNHead',
        in_channels=64,
        in_index=4,
        channels=64,
        num_convs=1,
        concat_input=False,
        dropout_ratio=0.1,
        num_classes=2,
        norm_cfg=dict(type='BN', requires_grad=True),
        align_corners=False,
        loss_decode=dict(type='FocalLoss', use_sigmoid=True, loss_weight=1.0)),
    auxiliary_head=dict(
        type='FCNHead',
        in_channels=64,
        in_index=3,
        channels=64,
        num_convs=1,
        concat_input=False,
        dropout_ratio=0.1,
        num_classes=2,
        norm_cfg=dict(type='BN', requires_grad=True),
        align_corners=False,
        loss_decode=dict(type='FocalLoss', use_sigmoid=True, loss_weight=0.4)),
    train_cfg=dict(),
    test_cfg=dict(mode='slide', crop_size=(96, 96), stride=(42, 42)))
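The test_cfg above uses mode='slide', so at test time the image is covered by overlapping crops of crop_size taken every stride pixels. The sketch below shows the window arithmetic as I understand it; the function `slide_windows` is written for this post and is not mmseg's own code, and the 565 x 584 image size is only an example. The last window in each direction is shifted back so that it stays inside the image.

```python
def slide_windows(img_h, img_w, crop=(96, 96), stride=(42, 42)):
    """Return (y1, x1, y2, x2) boxes of the sliding-window crops."""
    h_crop, w_crop = crop
    h_stride, w_stride = stride
    # Number of window positions along each axis (ceiling division, at least 1).
    h_grids = max(img_h - h_crop + h_stride - 1, 0) // h_stride + 1
    w_grids = max(img_w - w_crop + w_stride - 1, 0) // w_stride + 1
    windows = []
    for i in range(h_grids):
        for j in range(w_grids):
            # Clamp the far edge to the image, then step back by the crop size.
            y2 = min(i * h_stride + h_crop, img_h)
            x2 = min(j * w_stride + w_crop, img_w)
            y1 = max(y2 - h_crop, 0)
            x1 = max(x2 - w_crop, 0)
            windows.append((y1, x1, y2, x2))
    return windows


# Tall, narrow example: three overlapping crops stacked vertically.
print(slide_windows(180, 96))

# Example full-size image (hypothetical size): 13 x 13 window positions.
print(len(slide_windows(565, 584)))  # 169

# Each 96 x 96 crop is split by the ViT into 16 x 16 patches.
print((96 // 16) ** 2)  # 36 patch tokens per crop
```

Keeping crop_size equal to the backbone's img_size (96 here) means every crop has the token grid the ViT was configured for; regions where crops overlap receive more than one prediction, which then have to be merged.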