Building a Data Warehouse on Hadoop

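Why do we need a data warehouse?

In a traditional database, the stored data is mostly tailored to one application. Tables are two-dimensional: a table can have many fields laid out side by side, and data is written into it row by row. The defining trait is that two-dimensional tables are used to express multi-dimensional relationships.

This fixes both the upper and lower bounds of what the table relationships can express. Take QQ user information: reading fields such as username and introduce is a direct query against the info table. But suppose I now want to know what this user bought in a given time period, or how many times they edited their profile. Answering metrics like these means redesigning the table structure, so the database cannot meet our analysis needs.

A product mind map shows clearly which fields the business requirements call for, and that is why a database ends up being designed around business requirements.

So why not plan for this extensibility from the start? Why is the database not designed as a data warehouse to begin with?

There are two main reasons:

First, the term "data warehouse" itself suggests a very large space holding a wide mix of items: soy sauce, shower gel, shampoo, and so on. A database, by contrast, holds one category, such as soy sauce, salt, and other kitchen supplies; bath products would go into a separate database.

Second, in the early days of the internet in China, people simply built a piece of software for everyone to use, and meeting the functional requirements was enough. Today requirements are only part of the picture: user experience and many other aspects matter too, and products have to be adjusted based on these analysis metrics.

Summary:

A database is tied to the business, so it is usually designed for a single application.

A data warehouse is designed around analysis requirements, analysis dimensions, and analysis metrics.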

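What is a data warehouse?

A data warehouse (Data Warehouse), abbreviated DW or DWH, is a conceptual upgrade of the database. It can be seen as a new kind of database designed to meet new requirements, one that has to hold more data and much larger data sets. Logically, a data warehouse is no different from a database.

It is a strategic collection that supports decision-making at every level of the enterprise with all types of data. It is mainly used for data mining and data analysis, is built on the foundation of a data sandbox, and is created to eliminate information silos and support decisions.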

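Characteristics of a data warehouse

Subject-oriented

A subject is an abstraction under which the data in enterprise information systems is consolidated, classified, and analyzed. It corresponds to the analysis objects involved in one macro-level analysis area of the enterprise.

For example, shopping is a subject. It brings together user, order, payment, and logistics data, which must be classified and analyzed. The subject should describe the analysis object completely and consistently, covering every data item the object involves in a complete and unified way.

Suppose we want to measure how long a user takes from browsing to completing payment. If the shopping subject is missing payment or order data, the completeness and consistency of the object's data can no longer be guaranteed.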

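Integrated

The data in a data warehouse is extracted from the original, scattered databases.

Operational data and decision-support (DSS) analytical data differ greatly, so a great deal of data cleansing and consolidation is required.

First: the source data for each subject contains many duplicates and inconsistencies across the original scattered databases, and the data in each database is tied to a different application's logic.

Second: the aggregated data in a data warehouse cannot be obtained directly from the original database systems, so data must be unified and consolidated before it enters the warehouse (for example, resolving fields that share a name but differ in meaning, fields that differ in name but share a meaning, differing field lengths, and so on).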

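Non-updatable

Data warehouse data is mainly used for decision analysis, and the operations involved are mainly queries; under normal circumstances the data is not modified. The data reflects historical content over a long period, so changing one piece affects the derived data across the whole history.

Query volumes in a data warehouse are often very large, which raises the bar for querying: it calls for various sophisticated indexing techniques and places higher demands on the usability of the query interface and on how clearly the data is presented.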

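Changes over time

"Non-updatable" describes the application's point of view. It does not mean that warehouse data stays unchanged over its whole life cycle, from the moment it enters the warehouse until it is deleted.

The data warehouse keeps gaining new data as time passes.

The data warehouse also removes old data as time passes, because its data has a retention period too. Data in an operational database is typically kept for 60 to 90 days, while data in a data warehouse is typically kept for 5 to 10 years.

A data warehouse contains a large amount of aggregated data, much of which is time-dependent. Such data carries a time attribute that marks the historical period it belongs to.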

Choosing a data warehouse

Requirements

Candidates:

Hosted, paid

(1)Amazon Redshift

(2)Google BigQuery

(3)IBM Db2 Warehouse

(4)Microsoft Azure SQL Data Warehouse

(5)Oracle Autonomous Data Warehouse

(6)SAP Data Warehouse Cloud

(7)Snowflake

Self-hosted, paid

Teradata

Self-hosted, free

Selection criteria

(1) Cost

(2) Efficiency

(3) Ease of use

(4) Maximum scale

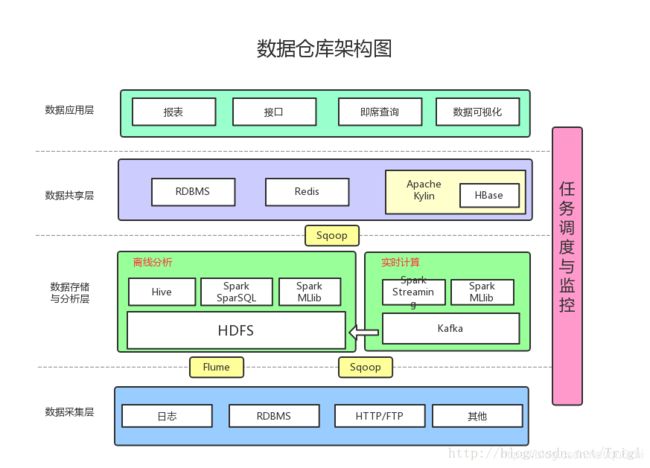

The big data warehouse architecture currently in wide use at mainstream companies

Why Hadoop?

Environment

CentOS 7 Minimal operating system information

# Show the current hostname

hostname

# Set a new hostname

hostnamectl set-hostname UXmall
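The configuration later in this article refers to the machine as uxmall (for example in hdfs://uxmall:9000/), so that name has to resolve to the machine's address. One common way is an /etc/hosts entry. The sketch below is only an illustration: add_hosts_entry is a hypothetical helper, it works on a scratch file rather than the real /etc/hosts, and 192.168.1.100 is the static address used in this article's network configuration, which may differ on your machine.

```shell
# add_hosts_entry FILE IP NAME  (hypothetical helper, not part of any tool)
# Appends "IP NAME" to FILE unless NAME is already listed as a host name.
add_hosts_entry() {
  file="$1"; ip="$2"; name="$3"
  # Skip comment lines; fields 2..NF of a hosts line are host names
  if awk -v n="$name" '!/^[ \t]*#/ { for (i = 2; i <= NF; i++) if ($i == n) found = 1 } END { exit !found }' "$file"; then
    return 0
  fi
  printf '%s %s\n' "$ip" "$name" >> "$file"
}

hosts_file="$(mktemp)"   # scratch copy; the real file is /etc/hosts
printf '127.0.0.1 localhost\n' > "$hosts_file"

add_hosts_entry "$hosts_file" 192.168.1.100 uxmall
add_hosts_entry "$hosts_file" 192.168.1.100 uxmall   # second call changes nothing
cat "$hosts_file"
```

Against the real file this would be run as root with /etc/hosts as the first argument. The duplicate check keeps the helper safe to run more than once.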

# Reboot for the change to take effect

reboot

Configure the IP address (optional)

vi /etc/sysconfig/network-scripts/ifcfg-ens33

TYPE="Ethernet"

PROXY_METHOD="none"

BROWSER_ONLY="no"

# BOOTPROTO="dhcp"

BOOTPROTO=static

DEFROUTE="yes"

IPV4_FAILURE_FATAL="no"

IPV6INIT="yes"

IPV6_AUTOCONF="yes"

IPV6_DEFROUTE="yes"

IPV6_FAILURE_FATAL="no"

IPV6_ADDR_GEN_MODE="stable-privacy"

NAME="ens33"

UUID="ced0e9c8-87c5-4550-9f73-8b519462a338"

DEVICE="ens33"

ONBOOT="yes"

IPADDR0=192.168.1.100

PREFIX0=24

GATEWAY0=192.168.1.1

NETMASK=255.255.255.0

DNS1=192.168.1.1

# Restart the network service to apply the change

service network restart

Install the JDK, a Hadoop dependency

Download page: https://www.oracle.com/java/technologies/javase-jdk8-downloads.html#/

# Downloaded package

jdk-8u251-linux-x64.rpm

# Install the package

rpm -ivh jdk-8u251-linux-x64.rpm

warning: jdk-8u251-linux-x64.rpm: Header V3 RSA/SHA256 Signature, key ID ec551f03: NOKEY

Preparing... ################################# [100%]

Updating / installing...

1:jdk1.8-2000:1.8.0_251-fcs ################################# [100%]

Unpacking JAR files...

tools.jar...

plugin.jar...

javaws.jar...

deploy.jar...

rt.jar...

jsse.jar...

charsets.jar...

localedata.jar...

# Check that the installation succeeded

java -version

java version "1.8.0_251"

Java(TM) SE Runtime Environment (build 1.8.0_251-b08)

Java HotSpot(TM) 64-Bit Server VM (build 25.251-b08, mixed mode)

Configure JAVA_HOME

Locate the JDK

# Find the JDK installation path

which java

/usr/bin/java

ls -lr /usr/bin/java

/usr/bin/java -> /etc/alternatives/java

ls -lrt /etc/alternatives/java

/etc/alternatives/java -> /usr/java/jdk1.8.0_251-amd64/jre/bin/java
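The symlink chain above can also be followed in one step with readlink -f, and the JDK directory derived from the result. The following is a minimal sketch under that assumption; java_home_from_binary is a hypothetical helper name, and it only recognises paths ending in /jre/bin/java or /bin/java.

```shell
# java_home_from_binary PATH  (hypothetical helper)
# Strips the trailing /jre/bin/java or /bin/java from a resolved java path.
java_home_from_binary() {
  path="$1"
  case "$path" in
    */jre/bin/java) printf '%s\n' "${path%/jre/bin/java}" ;;
    */bin/java)     printf '%s\n' "${path%/bin/java}" ;;
    *)              return 1 ;;
  esac
}

# Applied to the resolved path shown above
java_home_from_binary /usr/java/jdk1.8.0_251-amd64/jre/bin/java

# On a live system: resolve every symlink at once, then derive the directory
if command -v java >/dev/null 2>&1; then
  java_home_from_binary "$(readlink -f "$(command -v java)")" \
    || echo "unrecognised java layout" >&2
fi
```

For the path in this article the first call prints /usr/java/jdk1.8.0_251-amd64, which matches the directory worked out by hand.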

# So the Java installation directory is

/usr/java/jdk1.8.0_251-amd64/

Configure the environment variables

# Edit the system-wide profile

vim /etc/profile

# Append the following at the end

export JAVA_HOME=/usr/java/jdk1.8.0_251-amd64/

export JRE_HOME=$JAVA_HOME/jre

export CLASSPATH=$JAVA_HOME/lib:$JRE_HOME/lib:$CLASSPATH

export PATH=$JAVA_HOME/bin:$JRE_HOME/bin:$PATH

# Apply the changes

source /etc/profile

# Verify

echo $JAVA_HOME

/usr/java/jdk1.8.0_251-amd64/

Install Hadoop

Download URL: https://mirror.tuna.tsinghua.edu.cn/apache/hadoop/common/hadoop-3.2.1/

Install

# Create the directory

mkdir -p /var/Hadoop

cd /var/Hadoop

# Download

wget https://mirror.tuna.tsinghua.edu.cn/apache/hadoop/common/hadoop-3.2.1/hadoop-3.2.1.tar.gz
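Before extracting an archive fetched from a mirror, it can be worth comparing its SHA-512 digest with the value published alongside the release. The sketch below shows one way to do that; verify_sha512 is a hypothetical helper, and the demo runs on a scratch file with a self-computed digest standing in for the published one, since no real checksum value is quoted in this article.

```shell
# verify_sha512 FILE EXPECTED_HEX  (hypothetical helper)
# Succeeds only if FILE's SHA-512 digest equals EXPECTED_HEX (case-insensitive).
verify_sha512() {
  actual="$(sha512sum "$1" | awk '{ print $1 }')"
  expected_lc="$(printf '%s' "$2" | tr 'A-F' 'a-f')"
  [ "$actual" = "$expected_lc" ]
}

# Demo on a scratch file; "expected" stands in for the published digest
sample="$(mktemp)"
printf 'demo\n' > "$sample"
expected="$(sha512sum "$sample" | awk '{ print $1 }')"

if verify_sha512 "$sample" "$expected"; then
  echo "checksum OK"
else
  echo "checksum MISMATCH"
fi
```

For the real download, the call would take hadoop-3.2.1.tar.gz as the file and the digest copied from the release's checksum file as the second argument.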

# Extract

tar -zxvf hadoop-3.2.1.tar.gz

# Inspect the result (on a minimal install, tree may need installing first: yum install -y tree)

tree -L 3

.

├── hadoop-3.2.1

│ ├── bin

│ │ ├── container-executor

│ │ ├── hadoop

│ │ ├── hadoop.cmd

│ │ ├── hdfs

│ │ ├── hdfs.cmd

│ │ ├── mapred

│ │ ├── mapred.cmd

│ │ ├── oom-listener

│ │ ├── test-container-executor

│ │ ├── yarn

│ │ └── yarn.cmd

│ ├── etc

│ │ └── hadoop

│ ├── include

│ │ ├── hdfs.h

│ │ ├── Pipes.hh

│ │ ├── SerialUtils.hh

│ │ ├── StringUtils.hh

│ │ └── TemplateFactory.hh

│ ├── lib

│ │ └── native

│ ├── libexec

│ │ ├── hadoop-config.cmd

│ │ ├── hadoop-config.sh

│ │ ├── hadoop-functions.sh

│ │ ├── hadoop-layout.sh.example

│ │ ├── hdfs-config.cmd

│ │ ├── hdfs-config.sh

│ │ ├── mapred-config.cmd

│ │ ├── mapred-config.sh

│ │ ├── shellprofile.d

│ │ ├── tools

│ │ ├── yarn-config.cmd

│ │ └── yarn-config.sh

│ ├── LICENSE.txt

│ ├── NOTICE.txt

│ ├── README.txt

│ ├── sbin

│ │ ├── distribute-exclude.sh

│ │ ├── FederationStateStore

│ │ ├── hadoop-daemon.sh

│ │ ├── hadoop-daemons.sh

│ │ ├── httpfs.sh

│ │ ├── kms.sh

│ │ ├── mr-jobhistory-daemon.sh

│ │ ├── refresh-namenodes.sh

│ │ ├── start-all.cmd

│ │ ├── start-all.sh

│ │ ├── start-balancer.sh

│ │ ├── start-dfs.cmd

│ │ ├── start-dfs.sh

│ │ ├── start-secure-dns.sh

│ │ ├── start-yarn.cmd

│ │ ├── start-yarn.sh

│ │ ├── stop-all.cmd

│ │ ├── stop-all.sh

│ │ ├── stop-balancer.sh

│ │ ├── stop-dfs.cmd

│ │ ├── stop-dfs.sh

│ │ ├── stop-secure-dns.sh

│ │ ├── stop-yarn.cmd

│ │ ├── stop-yarn.sh

│ │ ├── workers.sh

│ │ ├── yarn-daemon.sh

│ │ └── yarn-daemons.sh

│ └── share

│ ├── doc

│ └── hadoop

Configure Hadoop

# Change to the configuration directory

cd /var/Hadoop/hadoop-3.2.1/etc/hadoop

Reference

https://hadoop.apache.org/docs/stable/

1. Configure hadoop-env.sh

vim hadoop-env.sh

# Set JAVA_HOME explicitly

export JAVA_HOME=/usr/java/jdk1.8.0_251-amd64/

2. Configure core-site.xml

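vim core-site.xml

# Inside <configuration>, add:

<property>

<name>fs.defaultFS</name>

<value>hdfs://uxmall:9000/</value>

</property>

------------------------------------

# Configure Hadoop's common directory

# This sets the working directory for data produced while Hadoop processes run; NameNode, DataNode, and the others create subdirectories under it to store their data.

# In a production system, however, NameNode, DataNode, and the other processes should each get their own directory, and that directory should be a disk mount point so more disks can be mounted to expand capacity.

<property>

<name>hadoop.tmp.dir</name>

<value>/var/Hadoop/hadoop-3.2.1/data/</value>

</property>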
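For reference, here is what a complete core-site.xml with these two properties could look like, written out with a here-document. This is a sketch, not the author's exact file: conf_dir is a hypothetical scratch directory so that nothing under /var/Hadoop/hadoop-3.2.1/etc/hadoop is overwritten, and the XML comments are added for illustration.

```shell
# Scratch directory (an assumption); the real file lives in
# /var/Hadoop/hadoop-3.2.1/etc/hadoop
conf_dir="$(mktemp -d)"

cat > "$conf_dir/core-site.xml" <<'EOF'
<?xml version="1.0" encoding="UTF-8"?>
<configuration>
  <!-- Default file system URI: the NameNode on host uxmall, port 9000 -->
  <property>
    <name>fs.defaultFS</name>
    <value>hdfs://uxmall:9000/</value>
  </property>
  <!-- Base directory for data the Hadoop daemons write locally -->
  <property>
    <name>hadoop.tmp.dir</name>
    <value>/var/Hadoop/hadoop-3.2.1/data/</value>
  </property>
</configuration>
EOF

# List the property names that were written
grep -o '<name>[^<]*</name>' "$conf_dir/core-site.xml"
```

Every property sits inside the single configuration element, which is the point the "add inside configuration" instruction is making.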

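3. Configure hdfs-site.xml

Hadoop starts even if this file is left unconfigured, but setting it up makes later troubleshooting, monitoring, and management easier.

vim hdfs-site.xml

# Configure the HDFS replication factor

# When a client stores a file in HDFS, the file is kept as multiple replicas.

# The value is usually 3, but this is a pseudo-distributed setup with a single machine, so it has to be 1.

# Inside <configuration>, add:

<property>

<name>dfs.replication</name>

<value>1</value>

</property>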

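4. Configure mapred-site.xml

vim mapred-site.xml

# Specify which resource-scheduling framework MapReduce programs run on.

# If this is not set to yarn, MapReduce programs run only locally instead of across the cluster.

# Inside <configuration>, add:

<property>

<name>mapreduce.framework.name</name>

<value>yarn</value>

</property>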

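5. Configure yarn-site.xml

vim yarn-site.xml

# Inside <configuration>, add:

<property>

<name>yarn.resourcemanager.hostname</name>

<value>uxmall</value>

</property>

------------------------------------

# Configure the worker nodes (NodeManagers) of the YARN cluster: the intermediate results produced by map are passed to reduce through the shuffle mechanism

<property>

<name>yarn.nodemanager.aux-services</name>

<value>mapreduce_shuffle</value>

</property>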
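The three remaining site files all follow the same name/value pattern, so they can be generated rather than edited by hand. The sketch below assumes a hypothetical helper, write_site_xml, and writes into a scratch directory instead of the real etc/hadoop directory. It does no XML escaping, which is fine for the plain values used here but would not be for values containing characters such as & or <.

```shell
# write_site_xml FILE NAME=VALUE...  (hypothetical helper)
# Writes a Hadoop-style configuration file with one property per NAME=VALUE.
write_site_xml() {
  out="$1"; shift
  {
    printf '<?xml version="1.0" encoding="UTF-8"?>\n<configuration>\n'
    for pair in "$@"; do
      name="${pair%%=*}"    # text before the first "="
      value="${pair#*=}"    # text after the first "="
      printf '  <property>\n    <name>%s</name>\n    <value>%s</value>\n  </property>\n' "$name" "$value"
    done
    printf '</configuration>\n'
  } > "$out"
}

conf_dir="$(mktemp -d)"   # scratch directory, an assumption for the demo

write_site_xml "$conf_dir/hdfs-site.xml"   dfs.replication=1
write_site_xml "$conf_dir/mapred-site.xml" mapreduce.framework.name=yarn
write_site_xml "$conf_dir/yarn-site.xml" \
  yarn.resourcemanager.hostname=uxmall \
  yarn.nodemanager.aux-services=mapreduce_shuffle

cat "$conf_dir/yarn-site.xml"
```

Generating the files this way keeps the property values from sections 3 to 5 in one place, which can help when the same setup is repeated on another machine.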

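Start Hadoop

Initialize

# Format the NameNode (as the warning below notes, "hdfs namenode -format" is the non-deprecated form)

cd /var/Hadoop/hadoop-3.2.1/bin

./hadoop namenode -format

WARNING: Use of this script to execute namenode is deprecated.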

WARNING: Attempting to execute replacement "hdfs namenode" instead.

2020-04-26 20:52:40,095 INFO namenode.NameNode: STARTUP_MSG:

/************************************************************

STARTUP_MSG: Starting NameNode

STARTUP_MSG: host = uxmall/172.20.10.3

STARTUP_MSG: args = [-format]

STARTUP_MSG: version = 3.2.1

STARTUP_MSG: classpath = /var/Hadoop/hadoop-3.2.1/etc/hadoop:/var/Hadoop/hadoop-3.2.1/share/hadoop/common/lib/kerby-util-1.0.1.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/common/lib/kerby-xdr-1.0.1.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/common/lib/commons-net-3.6.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/common/lib/commons-beanutils-1.9.3.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/common/lib/snappy-java-1.0.5.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/common/lib/jul-to-slf4j-1.7.25.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/common/lib/kerb-server-1.0.1.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/common/lib/commons-cli-1.2.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/common/lib/jetty-xml-9.3.24.v20180605.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/common/lib/kerb-simplekdc-1.0.1.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/common/lib/zookeeper-3.4.13.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/common/lib/jersey-json-1.19.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/common/lib/guava-27.0-jre.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/common/lib/javax.servlet-api-3.1.0.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/common/lib/kerby-asn1-1.0.1.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/common/lib/log4j-1.2.17.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/common/lib/jetty-http-9.3.24.v20180605.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/common/lib/kerb-admin-1.0.1.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/common/lib/jsch-0.1.54.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/common/lib/kerb-common-1.0.1.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/common/lib/hadoop-annotations-3.2.1.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/common/lib/animal-sniffer-annotations-1.17.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/common/lib/kerb-crypto-1.0.1.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/common/lib/woodstox-core-5.0.3.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/common/lib/kerb-core-1.0.1.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/common/lib/jackson-annotations-2.9.8.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/common/lib/jett
y-servlet-9.3.24.v20180605.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/common/lib/kerb-identity-1.0.1.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/common/lib/jsp-api-2.1.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/common/lib/kerb-client-1.0.1.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/common/lib/commons-text-1.4.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/common/lib/nimbus-jose-jwt-4.41.1.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/common/lib/asm-5.0.4.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/common/lib/jersey-server-1.19.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/common/lib/slf4j-log4j12-1.7.25.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/common/lib/jetty-security-9.3.24.v20180605.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/common/lib/jackson-jaxrs-1.9.13.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/common/lib/kerby-pkix-1.0.1.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/common/lib/kerb-util-1.0.1.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/common/lib/jcip-annotations-1.0-1.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/common/lib/avro-1.7.7.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/common/lib/htrace-core4-4.1.0-incubating.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/common/lib/commons-math3-3.1.1.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/common/lib/jettison-1.1.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/common/lib/jackson-mapper-asl-1.9.13.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/common/lib/httpcore-4.4.10.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/common/lib/paranamer-2.3.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/common/lib/commons-collections-3.2.2.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/common/lib/httpclient-4.5.6.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/common/lib/kerby-config-1.0.1.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/common/lib/dnsjava-2.1.7.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/common/lib/jetty-server-9.3.24.v20180605.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/common/lib/jaxb-impl-2.2.3-1.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/common/lib/jsr311-api-1.1.1.jar:/var/Hadoop/hadoop
-3.2.1/share/hadoop/common/lib/jetty-util-9.3.24.v20180605.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/common/lib/hadoop-auth-3.2.1.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/common/lib/commons-configuration2-2.1.1.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/common/lib/curator-recipes-2.13.0.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/common/lib/commons-lang3-3.7.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/common/lib/checker-qual-2.5.2.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/common/lib/audience-annotations-0.5.0.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/common/lib/commons-codec-1.11.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/common/lib/json-smart-2.3.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/common/lib/jackson-core-2.9.8.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/common/lib/stax2-api-3.1.4.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/common/lib/slf4j-api-1.7.25.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/common/lib/protobuf-java-2.5.0.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/common/lib/listenablefuture-9999.0-empty-to-avoid-conflict-with-guava.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/common/lib/j2objc-annotations-1.1.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/common/lib/jersey-core-1.19.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/common/lib/accessors-smart-1.2.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/common/lib/jetty-io-9.3.24.v20180605.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/common/lib/jaxb-api-2.2.11.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/common/lib/error_prone_annotations-2.2.0.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/common/lib/jsr305-3.0.0.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/common/lib/metrics-core-3.2.4.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/common/lib/jetty-webapp-9.3.24.v20180605.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/common/lib/jersey-servlet-1.19.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/common/lib/token-provider-1.0.1.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/common/lib/commons-compress-1.18.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/common/lib/curator
-framework-2.13.0.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/common/lib/jackson-xc-1.9.13.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/common/lib/gson-2.2.4.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/common/lib/failureaccess-1.0.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/common/lib/commons-io-2.5.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/common/lib/jackson-core-asl-1.9.13.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/common/lib/curator-client-2.13.0.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/common/lib/re2j-1.1.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/common/lib/jackson-databind-2.9.8.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/common/lib/commons-logging-1.1.3.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/common/lib/netty-3.10.5.Final.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/common/hadoop-kms-3.2.1.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/common/hadoop-nfs-3.2.1.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/common/hadoop-common-3.2.1-tests.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/common/hadoop-common-3.2.1.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/hdfs:/var/Hadoop/hadoop-3.2.1/share/hadoop/hdfs/lib/kerby-util-1.0.1.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/hdfs/lib/kerby-xdr-1.0.1.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/hdfs/lib/commons-net-3.6.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/hdfs/lib/commons-beanutils-1.9.3.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/hdfs/lib/snappy-java-1.0.5.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/hdfs/lib/kerb-server-1.0.1.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/hdfs/lib/commons-cli-1.2.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/hdfs/lib/jetty-xml-9.3.24.v20180605.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/hdfs/lib/kerb-simplekdc-1.0.1.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/hdfs/lib/leveldbjni-all-1.8.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/hdfs/lib/netty-all-4.0.52.Final.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/hdfs/lib/zookeeper-3.4.13.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/hdfs/lib/jersey-json-1.19.jar:/var/Hadoop/hadoop-3.2.1/share/hadoo
p/hdfs/lib/guava-27.0-jre.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/hdfs/lib/javax.servlet-api-3.1.0.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/hdfs/lib/kerby-asn1-1.0.1.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/hdfs/lib/log4j-1.2.17.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/hdfs/lib/jetty-http-9.3.24.v20180605.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/hdfs/lib/kerb-admin-1.0.1.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/hdfs/lib/jsch-0.1.54.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/hdfs/lib/kerb-common-1.0.1.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/hdfs/lib/hadoop-annotations-3.2.1.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/hdfs/lib/animal-sniffer-annotations-1.17.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/hdfs/lib/kerb-crypto-1.0.1.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/hdfs/lib/woodstox-core-5.0.3.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/hdfs/lib/kerb-core-1.0.1.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/hdfs/lib/jackson-annotations-2.9.8.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/hdfs/lib/jetty-servlet-9.3.24.v20180605.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/hdfs/lib/kerb-identity-1.0.1.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/hdfs/lib/kerb-client-1.0.1.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/hdfs/lib/commons-text-1.4.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/hdfs/lib/okhttp-2.7.5.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/hdfs/lib/nimbus-jose-jwt-4.41.1.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/hdfs/lib/asm-5.0.4.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/hdfs/lib/json-simple-1.1.1.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/hdfs/lib/jersey-server-1.19.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/hdfs/lib/jetty-security-9.3.24.v20180605.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/hdfs/lib/jackson-jaxrs-1.9.13.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/hdfs/lib/kerby-pkix-1.0.1.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/hdfs/lib/kerb-util-1.0.1.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/hdfs/lib/jcip-annotations-1.0-1.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/hdfs/lib/av
ro-1.7.7.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/hdfs/lib/htrace-core4-4.1.0-incubating.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/hdfs/lib/commons-math3-3.1.1.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/hdfs/lib/jettison-1.1.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/hdfs/lib/jackson-mapper-asl-1.9.13.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/hdfs/lib/httpcore-4.4.10.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/hdfs/lib/paranamer-2.3.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/hdfs/lib/commons-collections-3.2.2.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/hdfs/lib/httpclient-4.5.6.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/hdfs/lib/kerby-config-1.0.1.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/hdfs/lib/dnsjava-2.1.7.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/hdfs/lib/jetty-util-ajax-9.3.24.v20180605.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/hdfs/lib/jetty-server-9.3.24.v20180605.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/hdfs/lib/jaxb-impl-2.2.3-1.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/hdfs/lib/jsr311-api-1.1.1.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/hdfs/lib/jetty-util-9.3.24.v20180605.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/hdfs/lib/hadoop-auth-3.2.1.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/hdfs/lib/commons-configuration2-2.1.1.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/hdfs/lib/curator-recipes-2.13.0.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/hdfs/lib/commons-daemon-1.0.13.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/hdfs/lib/commons-lang3-3.7.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/hdfs/lib/checker-qual-2.5.2.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/hdfs/lib/audience-annotations-0.5.0.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/hdfs/lib/commons-codec-1.11.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/hdfs/lib/json-smart-2.3.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/hdfs/lib/jackson-core-2.9.8.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/hdfs/lib/stax2-api-3.1.4.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/hdfs/lib/protobuf-java-2.5.0.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/hdfs/l
ib/listenablefuture-9999.0-empty-to-avoid-conflict-with-guava.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/hdfs/lib/j2objc-annotations-1.1.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/hdfs/lib/jersey-core-1.19.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/hdfs/lib/accessors-smart-1.2.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/hdfs/lib/jetty-io-9.3.24.v20180605.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/hdfs/lib/jaxb-api-2.2.11.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/hdfs/lib/error_prone_annotations-2.2.0.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/hdfs/lib/jsr305-3.0.0.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/hdfs/lib/jetty-webapp-9.3.24.v20180605.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/hdfs/lib/jersey-servlet-1.19.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/hdfs/lib/token-provider-1.0.1.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/hdfs/lib/commons-compress-1.18.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/hdfs/lib/curator-framework-2.13.0.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/hdfs/lib/jackson-xc-1.9.13.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/hdfs/lib/gson-2.2.4.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/hdfs/lib/okio-1.6.0.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/hdfs/lib/failureaccess-1.0.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/hdfs/lib/commons-io-2.5.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/hdfs/lib/jackson-core-asl-1.9.13.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/hdfs/lib/curator-client-2.13.0.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/hdfs/lib/re2j-1.1.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/hdfs/lib/jackson-databind-2.9.8.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/hdfs/lib/commons-logging-1.1.3.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/hdfs/lib/netty-3.10.5.Final.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/hdfs/hadoop-hdfs-nfs-3.2.1.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/hdfs/hadoop-hdfs-3.2.1-tests.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/hdfs/hadoop-hdfs-client-3.2.1.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/hdfs/hadoop-hdfs-native-client-3.2.1-tests.jar:/var/Hado
op/hadoop-3.2.1/share/hadoop/hdfs/hadoop-hdfs-3.2.1.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/hdfs/hadoop-hdfs-httpfs-3.2.1.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/hdfs/hadoop-hdfs-native-client-3.2.1.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/hdfs/hadoop-hdfs-rbf-3.2.1-tests.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/hdfs/hadoop-hdfs-rbf-3.2.1.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/hdfs/hadoop-hdfs-client-3.2.1-tests.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/mapreduce/lib/junit-4.11.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/mapreduce/lib/hamcrest-core-1.3.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/mapreduce/hadoop-mapreduce-client-hs-plugins-3.2.1.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/mapreduce/hadoop-mapreduce-client-jobclient-3.2.1-tests.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/mapreduce/hadoop-mapreduce-examples-3.2.1.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/mapreduce/hadoop-mapreduce-client-hs-3.2.1.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/mapreduce/hadoop-mapreduce-client-common-3.2.1.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/mapreduce/hadoop-mapreduce-client-core-3.2.1.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/mapreduce/hadoop-mapreduce-client-jobclient-3.2.1.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/mapreduce/hadoop-mapreduce-client-app-3.2.1.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/mapreduce/hadoop-mapreduce-client-shuffle-3.2.1.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/mapreduce/hadoop-mapreduce-client-uploader-3.2.1.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/mapreduce/hadoop-mapreduce-client-nativetask-3.2.1.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/yarn:/var/Hadoop/hadoop-3.2.1/share/hadoop/yarn/lib/aopalliance-1.0.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/yarn/lib/jackson-jaxrs-base-2.9.8.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/yarn/lib/java-util-1.9.0.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/yarn/lib/snakeyaml-1.16.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/yarn/lib/HikariCP-java7-2.4.12.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/yarn/lib/bcp
kix-jdk15on-1.60.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/yarn/lib/json-io-2.5.1.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/yarn/lib/jackson-jaxrs-json-provider-2.9.8.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/yarn/lib/jersey-client-1.19.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/yarn/lib/swagger-annotations-1.5.4.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/yarn/lib/guice-servlet-4.0.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/yarn/lib/bcprov-jdk15on-1.60.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/yarn/lib/objenesis-1.0.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/yarn/lib/javax.inject-1.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/yarn/lib/jersey-guice-1.19.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/yarn/lib/geronimo-jcache_1.0_spec-1.0-alpha-1.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/yarn/lib/fst-2.50.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/yarn/lib/ehcache-3.3.1.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/yarn/lib/metrics-core-3.2.4.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/yarn/lib/mssql-jdbc-6.2.1.jre7.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/yarn/lib/guice-4.0.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/yarn/lib/jackson-module-jaxb-annotations-2.9.8.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/yarn/hadoop-yarn-server-nodemanager-3.2.1.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/yarn/hadoop-yarn-submarine-3.2.1.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/yarn/hadoop-yarn-server-sharedcachemanager-3.2.1.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/yarn/hadoop-yarn-server-timeline-pluginstorage-3.2.1.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/yarn/hadoop-yarn-common-3.2.1.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/yarn/hadoop-yarn-applications-unmanaged-am-launcher-3.2.1.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/yarn/hadoop-yarn-services-core-3.2.1.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/yarn/hadoop-yarn-server-router-3.2.1.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/yarn/hadoop-yarn-client-3.2.1.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/yarn/hadoop-yarn-server-resourcemanager-3.2.1.jar:/
var/Hadoop/hadoop-3.2.1/share/hadoop/yarn/hadoop-yarn-server-tests-3.2.1.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/yarn/hadoop-yarn-server-web-proxy-3.2.1.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/yarn/hadoop-yarn-applications-distributedshell-3.2.1.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/yarn/hadoop-yarn-services-api-3.2.1.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/yarn/hadoop-yarn-server-common-3.2.1.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/yarn/hadoop-yarn-api-3.2.1.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/yarn/hadoop-yarn-registry-3.2.1.jar:/var/Hadoop/hadoop-3.2.1/share/hadoop/yarn/hadoop-yarn-server-applicationhistoryservice-3.2.1.jar

STARTUP_MSG: build = https://gitbox.apache.org/repos/asf/hadoop.git -r b3cbbb467e22ea829b3808f4b7b01d07e0bf3842; compiled by 'rohithsharmaks' on 2019-09-10T15:56Z

STARTUP_MSG: java = 1.8.0_251

************************************************************/

2020-04-26 20:52:40,140 INFO namenode.NameNode: registered UNIX signal handlers for [TERM, HUP, INT]

2020-04-26 20:52:40,494 INFO namenode.NameNode: createNameNode [-format]

Formatting using clusterid: CID-a0fd9016-a370-409f-b90c-806b2805d80e

2020-04-26 20:52:42,748 INFO namenode.FSEditLog: Edit logging is async:true

2020-04-26 20:52:42,799 INFO namenode.FSNamesystem: KeyProvider: null

2020-04-26 20:52:42,802 INFO namenode.FSNamesystem: fsLock is fair: true

2020-04-26 20:52:42,802 INFO namenode.FSNamesystem: Detailed lock hold time metrics enabled: false

2020-04-26 20:52:42,848 INFO namenode.FSNamesystem: fsOwner = root (auth:SIMPLE)

2020-04-26 20:52:42,848 INFO namenode.FSNamesystem: supergroup = supergroup

2020-04-26 20:52:42,848 INFO namenode.FSNamesystem: isPermissionEnabled = true

2020-04-26 20:52:42,848 INFO namenode.FSNamesystem: HA Enabled: false

2020-04-26 20:52:43,035 INFO common.Util: dfs.datanode.fileio.profiling.sampling.percentage set to 0. Disabling file IO profiling

2020-04-26 20:52:43,081 INFO blockmanagement.DatanodeManager: dfs.block.invalidate.limit: configured=1000, counted=60, effected=1000

2020-04-26 20:52:43,081 INFO blockmanagement.DatanodeManager: dfs.namenode.datanode.registration.ip-hostname-check=true

2020-04-26 20:52:43,098 INFO blockmanagement.BlockManager: dfs.namenode.startup.delay.block.deletion.sec is set to 000:00:00:00.000

2020-04-26 20:52:43,099 INFO blockmanagement.BlockManager: The block deletion will start around 2020 四月 26 20:52:43

2020-04-26 20:52:43,101 INFO util.GSet: Computing capacity for map BlocksMap

2020-04-26 20:52:43,101 INFO util.GSet: VM type = 64-bit

2020-04-26 20:52:43,105 INFO util.GSet: 2.0% max memory 674.8 MB = 13.5 MB

2020-04-26 20:52:43,106 INFO util.GSet: capacity = 2^21 = 2097152 entries

2020-04-26 20:52:43,119 INFO blockmanagement.BlockManager: Storage policy satisfier is disabled

2020-04-26 20:52:43,119 INFO blockmanagement.BlockManager: dfs.block.access.token.enable = false

2020-04-26 20:52:43,131 INFO Configuration.deprecation: No unit for dfs.namenode.safemode.extension(30000) assuming MILLISECONDS

2020-04-26 20:52:43,132 INFO blockmanagement.BlockManagerSafeMode: dfs.namenode.safemode.threshold-pct = 0.9990000128746033

2020-04-26 20:52:43,132 INFO blockmanagement.BlockManagerSafeMode: dfs.namenode.safemode.min.datanodes = 0

2020-04-26 20:52:43,132 INFO blockmanagement.BlockManagerSafeMode: dfs.namenode.safemode.extension = 30000

2020-04-26 20:52:43,133 INFO blockmanagement.BlockManager: defaultReplication = 1

2020-04-26 20:52:43,133 INFO blockmanagement.BlockManager: maxReplication = 512

2020-04-26 20:52:43,133 INFO blockmanagement.BlockManager: minReplication = 1

2020-04-26 20:52:43,134 INFO blockmanagement.BlockManager: maxReplicationStreams = 2

2020-04-26 20:52:43,134 INFO blockmanagement.BlockManager: redundancyRecheckInterval = 3000ms

2020-04-26 20:52:43,134 INFO blockmanagement.BlockManager: encryptDataTransfer = false

2020-04-26 20:52:43,134 INFO blockmanagement.BlockManager: maxNumBlocksToLog = 1000

2020-04-26 20:52:43,338 INFO namenode.FSDirectory: GLOBAL serial map: bits=29 maxEntries=536870911

2020-04-26 20:52:43,338 INFO namenode.FSDirectory: USER serial map: bits=24 maxEntries=16777215

2020-04-26 20:52:43,338 INFO namenode.FSDirectory: GROUP serial map: bits=24 maxEntries=16777215

2020-04-26 20:52:43,338 INFO namenode.FSDirectory: XATTR serial map: bits=24 maxEntries=16777215

2020-04-26 20:52:43,359 INFO util.GSet: Computing capacity for map INodeMap

2020-04-26 20:52:43,359 INFO util.GSet: VM type = 64-bit

2020-04-26 20:52:43,360 INFO util.GSet: 1.0% max memory 674.8 MB = 6.7 MB

2020-04-26 20:52:43,360 INFO util.GSet: capacity = 2^20 = 1048576 entries

2020-04-26 20:52:43,364 INFO namenode.FSDirectory: ACLs enabled? false

2020-04-26 20:52:43,365 INFO namenode.FSDirectory: POSIX ACL inheritance enabled? true

2020-04-26 20:52:43,365 INFO namenode.FSDirectory: XAttrs enabled? true

2020-04-26 20:52:43,365 INFO namenode.NameNode: Caching file names occurring more than 10 times

2020-04-26 20:52:43,371 INFO snapshot.SnapshotManager: Loaded config captureOpenFiles: false, skipCaptureAccessTimeOnlyChange: false, snapshotDiffAllowSnapRootDescendant: true, maxSnapshotLimit: 65536

2020-04-26 20:52:43,374 INFO snapshot.SnapshotManager: SkipList is disabled

2020-04-26 20:52:43,392 INFO util.GSet: Computing capacity for map cachedBlocks

2020-04-26 20:52:43,392 INFO util.GSet: VM type = 64-bit

2020-04-26 20:52:43,392 INFO util.GSet: 0.25% max memory 674.8 MB = 1.7 MB

2020-04-26 20:52:43,392 INFO util.GSet: capacity = 2^18 = 262144 entries

2020-04-26 20:52:43,409 INFO metrics.TopMetrics: NNTop conf: dfs.namenode.top.window.num.buckets = 10

2020-04-26 20:52:43,409 INFO metrics.TopMetrics: NNTop conf: dfs.namenode.top.num.users = 10

2020-04-26 20:52:43,409 INFO metrics.TopMetrics: NNTop conf: dfs.namenode.top.windows.minutes = 1,5,25

2020-04-26 20:52:43,413 INFO namenode.FSNamesystem: Retry cache on namenode is enabled

2020-04-26 20:52:43,413 INFO namenode.FSNamesystem: Retry cache will use 0.03 of total heap and retry cache entry expiry time is 600000 millis

2020-04-26 20:52:43,423 INFO util.GSet: Computing capacity for map NameNodeRetryCache

2020-04-26 20:52:43,423 INFO util.GSet: VM type = 64-bit

2020-04-26 20:52:43,424 INFO util.GSet: 0.029999999329447746% max memory 674.8 MB = 207.3 KB

2020-04-26 20:52:43,424 INFO util.GSet: capacity = 2^15 = 32768 entries

Re-format filesystem in Storage Directory root= /var/Hadoop/hadoop-3.2.1/data/dfs/name; location= null ? (Y or N) Y

2020-04-26 20:53:22,882 INFO namenode.FSImage: Allocated new BlockPoolId: BP-910918937-172.20.10.3-1587948802861

2020-04-26 20:53:22,882 INFO common.Storage: Will remove files: [/var/Hadoop/hadoop-3.2.1/data/dfs/name/current/VERSION, /var/Hadoop/hadoop-3.2.1/data/dfs/name/current/seen_txid, /var/Hadoop/hadoop-3.2.1/data/dfs/name/current/fsimage_0000000000000000000.md5, /var/Hadoop/hadoop-3.2.1/data/dfs/name/current/fsimage_0000000000000000000]

2020-04-26 20:53:23,265 INFO common.Storage: Storage directory /var/Hadoop/hadoop-3.2.1/data/dfs/name has been successfully formatted.

2020-04-26 20:53:23,344 INFO namenode.FSImageFormatProtobuf: Saving image file /var/Hadoop/hadoop-3.2.1/data/dfs/name/current/fsimage.ckpt_0000000000000000000 using no compression

2020-04-26 20:53:23,550 INFO namenode.FSImageFormatProtobuf: Image file /var/Hadoop/hadoop-3.2.1/data/dfs/name/current/fsimage.ckpt_0000000000000000000 of size 399 bytes saved in 0 seconds .

2020-04-26 20:53:23,560 INFO namenode.NNStorageRetentionManager: Going to retain 1 images with txid >= 0

2020-04-26 20:53:23,568 INFO namenode.FSImage: FSImageSaver clean checkpoint: txid=0 when meet shutdown.

2020-04-26 20:53:23,569 INFO namenode.NameNode: SHUTDOWN_MSG:

/************************************************************

SHUTDOWN_MSG: Shutting down NameNode at uxmall/172.20.10.3

************************************************************/

Edit the startup script parameters

# The start and stop scripts are in /var/Hadoop/hadoop-3.2.1/sbin. Because the daemons are started as root here, add the user variables below right after the shebang line of each script.

vim start-dfs.sh

#!/usr/bin/env bash

HDFS_DATANODE_USER=root

HADOOP_SECURE_DN_USER=hdfs

HDFS_NAMENODE_USER=root

HDFS_SECONDARYNAMENODE_USER=root

vim stop-dfs.sh

#!/usr/bin/env bash

HDFS_DATANODE_USER=root

HADOOP_SECURE_DN_USER=hdfs

HDFS_NAMENODE_USER=root

HDFS_SECONDARYNAMENODE_USER=root

vim start-yarn.sh

#!/usr/bin/env bash

YARN_RESOURCEMANAGER_USER=root

HADOOP_SECURE_DN_USER=yarn

YARN_NODEMANAGER_USER=root

vim stop-yarn.sh

#!/usr/bin/env bash

YARN_RESOURCEMANAGER_USER=root

HADOOP_SECURE_DN_USER=yarn

YARN_NODEMANAGER_USER=root

Start

cd /var/Hadoop/hadoop-3.2.1/sbin

./start-dfs.sh

./start-yarn.sh
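After both scripts have run, a pseudo-distributed setup like this one would normally show five daemons in the jps listing: NameNode, DataNode, SecondaryNameNode, ResourceManager, and NodeManager. The sketch below checks a jps listing against that list. missing_daemons is a hypothetical helper, and the sample input is made up for illustration (with DataNode left out on purpose); it is not output from the author's machine.

```shell
# missing_daemons  (hypothetical helper)
# Reads `jps` output on stdin and prints each expected daemon that is absent.
missing_daemons() {
  listing="$(cat)"
  for daemon in NameNode DataNode SecondaryNameNode ResourceManager NodeManager; do
    # jps prints "<pid> <name>"; compare the whole second field so that
    # NameNode does not match SecondaryNameNode
    if ! printf '%s\n' "$listing" | awk -v d="$daemon" '$2 == d { found = 1 } END { exit !found }'; then
      printf '%s\n' "$daemon"
    fi
  done
}

# Illustrative input only: made-up PIDs, DataNode deliberately missing
sample='2101 NameNode
2398 SecondaryNameNode
2650 ResourceManager
2771 NodeManager
3010 Jps'

printf '%s\n' "$sample" | missing_daemons
```

On the real machine the call would be `jps | missing_daemons`; empty output means all five daemons are running, and any name printed points to the log file worth reading first.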

# List the daemons that started successfully

jps

[Configuration] Firewall

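# Check the status of the firewall service

systemctl status firewalld

# Check whether the firewall is running

firewall-cmd --state

# Start

service firewalld start

# Restart

service firewalld restart

# Stop

service firewalld stop

# Check whether a port is open

firewall-cmd --query-port=8080/tcp

# Open ports: 80, 8080-8088, and the NameNode web UI port

firewall-cmd --permanent --add-port=80/tcp

firewall-cmd --permanent --add-port=8080-8088/tcp

# Hadoop 3.x serves the NameNode web UI on 9870; 50070 was the Hadoop 2.x default

firewall-cmd --permanent --add-port=9870/tcp

# Remove a port

firewall-cmd --permanent --remove-port=8080/tcp

# List the ports opened by the firewall

firewall-cmd --permanent --list-ports

# Reload the firewall (needed after changing permanent rules)

firewall-cmd --reload

[Configuration] SELinux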

# Temporarily turn SELinux off (permissive mode)

setenforce 0

# Temporarily turn SELinux back on (enforcing mode)

setenforce 1

# Show the SELinux status

getenforce

# Disable SELinux at boot

# Edit /etc/selinux/config and set SELINUX to disabled.

vi /etc/selinux/config
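The same edit can be made without an interactive editor. The sketch below rewrites the SELINUX= line with sed; set_selinux_mode is a hypothetical helper, and the demo runs on a scratch file with sample contents rather than on /etc/selinux/config itself.

```shell
# set_selinux_mode FILE MODE  (hypothetical helper)
# Rewrites the SELINUX= line of FILE; SELINUXTYPE= is left untouched because
# the pattern requires "=" immediately after "SELINUX".
set_selinux_mode() {
  file="$1"; mode="$2"
  tmp="$(mktemp)"
  # Write through a temp file, then copy back so FILE keeps its permissions
  sed "s/^SELINUX=.*/SELINUX=$mode/" "$file" > "$tmp" && cat "$tmp" > "$file"
  rm -f "$tmp"
}

# Demo on a scratch file with sample contents
cfg="$(mktemp)"
printf '# sample config\nSELINUX=enforcing\nSELINUXTYPE=targeted\n' > "$cfg"

set_selinux_mode "$cfg" disabled
grep '^SELINUX' "$cfg"
```

Run as root against /etc/selinux/config, the change takes effect at the next boot; setenforce covers the time until then.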

# Show the firewall rules

firewall-cmd --list-all