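Building Classic Neural Networks Step by Step (1): AlexNet

I. Introduction to AlexNet

AlexNet is the convolutional network architecture that Alex Krizhevsky, Ilya Sutskever, and Geoffrey Hinton entered in the 2012 ImageNet competition. It won that year's ImageNet LSVRC with an accuracy far ahead of the runner-up, and it set off a new wave of interest in deep learning.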

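- Paper: http://papers.nips.cc/paper/4824-imagenet-classification-with-deep-convolutional-neural-networks.pdf

- Code for this article:

With the dataset: https://pan.baidu.com/s/1u8N_yRnxrNoIMc4aP55rcQ (extraction code: 6wfe)

Without the dataset: https://pan.baidu.com/s/1BNVj2XSajJx8u1ZlKadnmw (extraction code: xrng)

If you have the time, I strongly recommend reading the original paper yourself; it may give you a different perspective.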

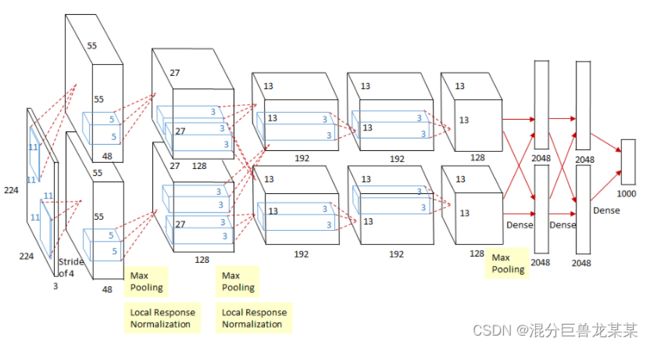

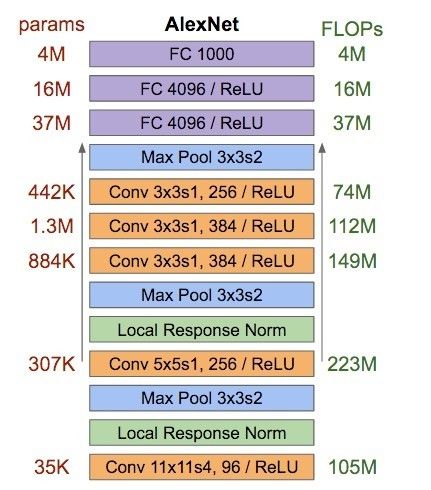

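AlexNet network structure

As shown in the figure above, the key statistics of the AlexNet architecture are:

- Convolutional layers: 5

- Fully connected layers: 3

- Pooling layers: 3

- Depth: 8 layers (the 5 convolutional and 3 fully connected layers)

- Parameters: about 60M

- Neurons: about 650k

- Output classes: 1000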
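As a rough check on the 60M figure, the parameter count can be reproduced from the layer shapes given in the paper. The sketch below assumes the two-GPU layout, in which the kernels of conv2, conv4, and conv5 see only the channels held on their own GPU; `conv_params` and `fc_params` are helper names introduced here for illustration.

```python
def conv_params(num_kernels, k, in_channels):
    """Weights plus one bias per kernel for a k x k convolution."""
    return num_kernels * (k * k * in_channels) + num_kernels


def fc_params(in_features, out_features):
    """Weights plus one bias per output unit for a fully connected layer."""
    return in_features * out_features + out_features


layers = {
    "conv1": conv_params(96, 11, 3),
    "conv2": conv_params(256, 5, 48),    # each kernel sees 48 channels (one GPU)
    "conv3": conv_params(384, 3, 256),   # conv3 sees the outputs of both GPUs
    "conv4": conv_params(384, 3, 192),
    "conv5": conv_params(256, 3, 192),
    "fc6": fc_params(6 * 6 * 256, 4096),
    "fc7": fc_params(4096, 4096),
    "fc8": fc_params(4096, 1000),
}
total = sum(layers.values())

for name, count in layers.items():
    print(f"{name}: {count:,}")
print(f"total: {total:,}")
```

Under these assumptions the total comes to roughly 61 million, and the three fully connected layers account for about 96% of it.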

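A special note: readers seeing the AlexNet diagram above for the first time may wonder why the network is split into two halves. The original image is preprocessed to 224×224×3 (the size stated in the paper) and convolved with 96 kernels of size 11×11×3 at a stride of 4. The 96 kernels are divided between two GPUs (96/2=48), so each GPU produces an output of size 55×55×48. (I do not have two GPUs, so the AlexNet model built with PyTorch later in this article is modified accordingly; this does not affect the main ideas.)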

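The first five layers: convolution

- Layer 1:

Convolution: the preprocessed input is 227×227×3. (The paper states 224×224×3, but 227 is the size for which an 11×11 kernel at stride 4 with no padding gives 55, since (227−11)/4+1=55.) It is convolved with 96 kernels of size 11×11×3 at a stride of 4; the kernels are split across the two GPUs, so the output on each GPU is 55×55×48.

ReLU: the 55×55×48 feature map is passed through the ReLU activation function to produce the activation map.

Pooling: the activated feature map goes through overlapping max pooling with a 3×3 window and a stride of 2, giving a 27×27×48 feature map. Local response normalization (LRN) is also applied in this layer; in the paper it comes right after the ReLU and before the pooling. (LRN is a technique intended to improve accuracy during training, and it was first introduced with AlexNet. However, the 2015 paper Very Deep Convolutional Networks for Large-Scale Image Recognition reports that LRN brings essentially no benefit.)

- Layer 2: similar to layer 1

The convolution takes the output of the first convolutional layer as input, pads it with 2 pixels, and filters it with 256 kernels of size 5×5×48.

Convolutional layers 3, 4, and 5 are connected directly to one another, with no pooling or normalization layers in between.

- Layer 3:

The convolution has 384 (192×2) kernels of size 3×3×256, connected to the output of the second convolutional layer, with a padding of 1.

- Layer 4:

Same as layer 3, except that each kernel is 3×3×192, because it sees only the feature maps held on its own GPU.

- Layer 5:

Unlike layers 3 and 4, this layer is followed by pooling. It has 256 kernels of size 3×3×192; everything else is the same as layer 3. The pooling is max pooling with a 3×3 window and a stride of 2, and the output feature map is 6×6×256.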
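The feature-map sizes quoted above follow from the usual output-size formula, floor((n + 2p − k) / s) + 1. The sketch below traces one spatial dimension through the five convolutional layers, assuming a 227×227 input; `out_size` is a helper name introduced here.

```python
def out_size(n, kernel, stride=1, padding=0):
    """Spatial output size of a convolution or pooling layer."""
    return (n + 2 * padding - kernel) // stride + 1


steps = [
    ("conv1", dict(kernel=11, stride=4)),
    ("pool1", dict(kernel=3, stride=2)),
    ("conv2", dict(kernel=5, padding=2)),
    ("pool2", dict(kernel=3, stride=2)),
    ("conv3", dict(kernel=3, padding=1)),
    ("conv4", dict(kernel=3, padding=1)),
    ("conv5", dict(kernel=3, padding=1)),
    ("pool5", dict(kernel=3, stride=2)),
]

size = 227
sizes = {}
for name, kwargs in steps:
    size = out_size(size, **kwargs)
    sizes[name] = size
    print(f"{name}: {size}x{size}")
```

With a 224×224 input, the first convolution needs a padding of 2 to reach the same 55×55 output (`out_size(224, 11, 4, 2)`), which is the setting used by the PyTorch model in this article.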

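The last three layers: fully connected

The first two fully connected layers have 4096 neurons each; the last one has 1000.

- Layer 6:

Convolution: because this is a fully connected layer, it can be viewed as a convolution with 4096 kernels of size 6×6×256, which produces 4096 feature maps of size 1×1. The result then goes through ReLU and Dropout.

- Layer 7:

Same as layer 6, taking the 4096 outputs of layer 6 as input.

- Layer 8:

The output of the last fully connected layer is fed to a 1000-way softmax, which produces the prediction values for the 1000 classes.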
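To make the last step concrete, here is a minimal, numerically stable softmax in plain Python. It is only a sketch: the three-element `logits` list stands in for the 1000 outputs of the final layer, and its values are made up for illustration.

```python
import math


def softmax(logits):
    """Turn raw scores into probabilities that sum to 1."""
    m = max(logits)                              # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


logits = [2.0, 1.0, 0.1]                         # made-up scores for three classes
probs = softmax(logits)
predicted = max(range(len(probs)), key=probs.__getitem__)  # index of the largest probability
print(probs, predicted)
```

The predicted class is simply the index with the largest probability, which is also the index of the largest raw score.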

Layer-by-layer network structure diagram:

Innovations of AlexNet:

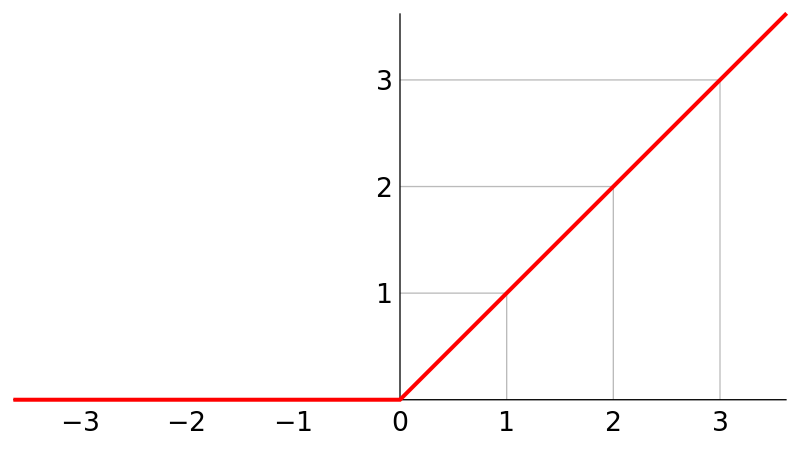

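1. ReLU activation function

Before AlexNet, neural networks generally used tanh or sigmoid as the activation function. These functions saturate, which makes training with gradient descent comparatively slow. The ReLU used by AlexNet is defined as:

$$f(x) = \max(0, x)$$

The experiments in the paper (a four-layer convolutional network on CIFAR-10) show that reaching a training error rate of 25% takes only about 5 epochs with ReLU but about 35 epochs with tanh units, so ReLU is roughly 6 times faster.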
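One way to see why a saturating unit trains more slowly is to compare derivatives for large inputs. The plain-Python sketch below evaluates ReLU alongside the derivatives of ReLU and tanh; the sample inputs are arbitrary.

```python
import math


def relu(x):
    return max(0.0, x)


def relu_grad(x):
    # Derivative is 1 for positive inputs and 0 otherwise (0 is chosen at x == 0).
    return 1.0 if x > 0 else 0.0


def tanh_grad(x):
    # d/dx tanh(x) = 1 - tanh(x)^2, which shrinks toward 0 as |x| grows.
    return 1.0 - math.tanh(x) ** 2


for x in (-3.0, 0.5, 3.0, 6.0):
    print(f"x={x:5.1f}  relu={relu(x):.1f}  relu'={relu_grad(x):.1f}  tanh'={tanh_grad(x):.6f}")
```

For positive inputs the ReLU derivative stays at 1 no matter how large the input is, while the tanh derivative becomes vanishingly small.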

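Plot of the ReLU activation function:

2. Dropout

Dropout is a regularization technique that improves a network's ability to generalize. It can be used in both convolutional and fully connected layers, but it is rarely applied to convolutional layers: dropout exists to prevent overfitting, overfitting becomes likely when a layer has many parameters, and convolutional layers have far fewer parameters than fully connected layers. Dropout is therefore mostly used in fully connected layers. The method deactivates each neuron of a fully connected layer (this model applies Dropout in the first two fully connected layers) with a given probability (for example 0.5). A deactivated neuron takes no part in the forward or backward pass, so roughly half of the neurons are inactive in any given training step.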
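A minimal sketch of dropout on a list of activations follows. It uses the "inverted" variant that `nn.Dropout` implements, where surviving activations are scaled by 1/(1−p) during training so that nothing changes at inference time; the original paper instead multiplies the outputs by 0.5 at test time. The function name and the fixed seed are illustrative choices.

```python
import random


def dropout(activations, p=0.5, training=True, rng=random):
    """Zero each activation with probability p and rescale the survivors."""
    if not training or p == 0.0:
        return list(activations)                 # identity at inference time
    keep = 1.0 - p
    return [a / keep if rng.random() < keep else 0.0 for a in activations]


rng = random.Random(0)                           # fixed seed so the run is repeatable
x = [1.0] * 10000
y = dropout(x, p=0.5, rng=rng)
dropped = sum(1 for v in y if v == 0.0)

print(dropped / len(y))                          # fraction of dropped units, close to 0.5
print(sum(y) / len(y))                           # mean stays close to 1.0 thanks to rescaling
```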

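3. Local Response Normalization

As mentioned above, this technique is controversial, and most current AlexNet implementations no longer use it, so I will not go into it here. If you are interested, see the original paper linked above and the 2015 paper "Very Deep Convolutional Networks for Large-Scale Image Recognition".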

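4. Extensive data augmentation

1. The first form of data augmentation extracts random 224×224 patches from the 256×256 original images, together with their horizontal reflections.

2. The second form of data augmentation adds a value to each of the R, G, and B channels of every pixel; the values are derived with PCA over the RGB pixel values.

3. AlexNet is trained with SGD.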
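The first augmentation can be sketched without any image library by treating an image as a list of rows. The code below is only an illustration under that assumption (a real pipeline would use a library such as torchvision); `random_crop_flip` and the dummy image are introduced here for the example.

```python
import random


def random_crop_flip(image, crop=224, rng=random):
    """Take a random crop x crop patch and mirror it horizontally half the time."""
    height, width = len(image), len(image[0])
    top = rng.randrange(height - crop + 1)
    left = rng.randrange(width - crop + 1)
    patch = [row[left:left + crop] for row in image[top:top + crop]]
    if rng.random() < 0.5:
        patch = [row[::-1] for row in patch]     # horizontal reflection
    return patch


# A dummy 256x256 single-channel "image" whose pixel value encodes its position.
image = [[r * 256 + c for c in range(256)] for r in range(256)]
patch = random_crop_flip(image, rng=random.Random(0))
print(len(patch), len(patch[0]))
```

Each call returns a different 224×224 view of the same image, so the network rarely sees exactly the same input twice.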

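The AlexNet architecture has the following characteristics:

1. For the activation function, AlexNet chose ReLU, a non-saturating nonlinearity. In terms of training time with gradient descent, ReLU is much faster than the saturating nonlinearities used in traditional neural networks (such as sigmoid and tanh).

2. AlexNet runs on two GPUs, with each GPU handling half of the network's computation.

3. Pooling uses overlapping pooling, meaning that the pooling window is larger than the stride, so neighboring windows overlap. (P.S.: the paper reports that this gives slightly better results than traditional non-overlapping pooling and makes the model slightly harder to overfit.)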
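The difference between overlapping and non-overlapping pooling is easy to see in one dimension. This sketch applies max pooling with window 3 and stride 2 (as in AlexNet) and with window 2 and stride 2 to the same made-up sequence; `max_pool_1d` is an illustrative helper.

```python
def max_pool_1d(values, size, stride):
    """Max pooling over a 1-D sequence without padding."""
    last_start = len(values) - size
    return [max(values[i:i + size]) for i in range(0, last_start + 1, stride)]


x = [1, 5, 2, 4, 7, 3, 0]
overlapping = max_pool_1d(x, size=3, stride=2)       # neighboring windows share one element
non_overlapping = max_pool_1d(x, size=2, stride=2)   # windows are disjoint
print(overlapping)
print(non_overlapping)
```

In the overlapping case the value 7 falls inside two windows and so contributes to two outputs; with disjoint windows each input can influence only one output.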

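II. AlexNet Code Implementation

A special note: all of the code below is implemented in PyTorch. If you need it in another framework, message me privately and I will provide it free of charge.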

model.py

import torch
import torch.nn as nn


class AlexNet(nn.Module):
    def __init__(self, num_classes=1000, init_weights=False):
        super(AlexNet, self).__init__()
        # Channel widths are half of the paper's, i.e. one of the two GPU branches.
        self.features = nn.Sequential(  # nn.Sequential packs the layers into one module
            nn.Conv2d(3, 48, kernel_size=11, stride=4, padding=2),  # input [3, 224, 224], output [48, 55, 55]
            nn.ReLU(inplace=True),
            nn.MaxPool2d(kernel_size=3, stride=2),  # output [48, 27, 27]
            nn.Conv2d(48, 128, kernel_size=5, padding=2),  # output [128, 27, 27]
            nn.ReLU(inplace=True),
            nn.MaxPool2d(kernel_size=3, stride=2),  # output [128, 13, 13]
            nn.Conv2d(128, 192, kernel_size=3, padding=1),  # output [192, 13, 13]
            nn.ReLU(inplace=True),
            nn.Conv2d(192, 192, kernel_size=3, padding=1),  # output [192, 13, 13]
            nn.ReLU(inplace=True),
            nn.Conv2d(192, 128, kernel_size=3, padding=1),  # output [128, 13, 13]
            nn.ReLU(inplace=True),
            nn.MaxPool2d(kernel_size=3, stride=2),  # output [128, 6, 6]
        )
        self.classifier = nn.Sequential(
            nn.Dropout(p=0.5),  # randomly deactivate units with probability 0.5
            nn.Linear(128 * 6 * 6, 2048),
            nn.ReLU(inplace=True),
            nn.Dropout(p=0.5),  # randomly deactivate units with probability 0.5
            nn.Linear(2048, 2048),
            nn.ReLU(inplace=True),
            nn.Linear(2048, num_classes),
        )
        if init_weights:
            self._initialize_weights()

    def forward(self, x):
        x = self.features(x)
        x = torch.flatten(x, start_dim=1)  # keep the batch dimension, flatten the rest
        x = self.classifier(x)
        return x

    def _initialize_weights(self):
        for m in self.modules():
            if isinstance(m, nn.Conv2d):
                # Kaiming (He) initialization
                nn.init.kaiming_normal_(m.weight, mode='fan_out', nonlinearity='relu')
                if m.bias is not None:
                    nn.init.constant_(m.bias, 0)
            elif isinstance(m, nn.Linear):
                nn.init.normal_(m.weight, 0, 0.01)
                nn.init.constant_(m.bias, 0)

The model.py file builds the AlexNet network structure. The comments explain what the code does; if you have any questions, feel free to leave a comment!
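A quick way to check the `128*6*6` input size of the first `nn.Linear` is to push a dummy batch through just the shape-changing layers. The sketch below assumes PyTorch is installed and rebuilds only the convolution and pooling layers with the same hyperparameters as `self.features` (the ReLU layers are left out because they do not change shapes); `shape_only` is a name introduced here.

```python
import torch
import torch.nn as nn

# Same kernel sizes, strides, and paddings as self.features in model.py.
shape_only = nn.Sequential(
    nn.Conv2d(3, 48, kernel_size=11, stride=4, padding=2),
    nn.MaxPool2d(kernel_size=3, stride=2),
    nn.Conv2d(48, 128, kernel_size=5, padding=2),
    nn.MaxPool2d(kernel_size=3, stride=2),
    nn.Conv2d(128, 192, kernel_size=3, padding=1),
    nn.Conv2d(192, 192, kernel_size=3, padding=1),
    nn.Conv2d(192, 128, kernel_size=3, padding=1),
    nn.MaxPool2d(kernel_size=3, stride=2),
)

with torch.no_grad():
    dummy = torch.zeros(1, 3, 224, 224)          # one fake 224x224 RGB image
    out = shape_only(dummy)
    flat = torch.flatten(out, start_dim=1)       # same flattening as in forward()

print(tuple(out.shape), tuple(flat.shape))
```

If the input resolution or any stride is changed, this kind of check shows immediately what the first fully connected layer should expect.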

train.py

import os
import sys
import json

import torch
import torch.nn as nn
from torchvision import transforms, datasets, utils
import matplotlib.pyplot as plt
import numpy as np
import torch.optim as optim
from tqdm import tqdm

from model import AlexNet


def main():
    device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")
    print("using {} device.".format(device))

    data_transform = {
        "train": transforms.Compose([transforms.RandomResizedCrop(224),
                                     transforms.RandomHorizontalFlip(),
                                     transforms.ToTensor(),
                                     transforms.Normalize((0.5, 0.5, 0.5), (0.5, 0.5, 0.5))]),
        "val": transforms.Compose([transforms.Resize((224, 224)),  # must be (224, 224), not 224
                                   transforms.ToTensor(),
                                   transforms.Normalize((0.5, 0.5, 0.5), (0.5, 0.5, 0.5))])}

    data_root = os.path.abspath(os.path.join(os.getcwd(), "./"))  # get data root path
    image_path = os.path.join(data_root, "data_set", "flower_data")  # flower data set path
    assert os.path.exists(image_path), "{} path does not exist.".format(image_path)
    train_dataset = datasets.ImageFolder(root=os.path.join(image_path, "train"),
                                         transform=data_transform["train"])
    train_num = len(train_dataset)

    # {'daisy':0, 'dandelion':1, 'roses':2, 'sunflower':3, 'tulips':4}
    flower_list = train_dataset.class_to_idx
    cla_dict = dict((val, key) for key, val in flower_list.items())
    # write dict into json file
    json_str = json.dumps(cla_dict, indent=4)
    with open('class_indices.json', 'w') as json_file:
        json_file.write(json_str)

    batch_size = 32
    nw = min([os.cpu_count(), batch_size if batch_size > 1 else 0, 8])  # number of workers
    print('Using {} dataloader workers every process'.format(nw))

    train_loader = torch.utils.data.DataLoader(train_dataset,
                                               batch_size=batch_size, shuffle=True,
                                               num_workers=nw)

    validate_dataset = datasets.ImageFolder(root=os.path.join(image_path, "val"),
                                            transform=data_transform["val"])
    val_num = len(validate_dataset)
    validate_loader = torch.utils.data.DataLoader(validate_dataset,
                                                  batch_size=4, shuffle=False,
                                                  num_workers=nw)

    print("using {} images for training, {} images for validation.".format(train_num,
                                                                           val_num))

    # Optional: preview one validation batch.
    # test_data_iter = iter(validate_loader)
    # test_image, test_label = next(test_data_iter)
    #
    # def imshow(img):
    #     img = img / 2 + 0.5  # unnormalize
    #     npimg = img.numpy()
    #     plt.imshow(np.transpose(npimg, (1, 2, 0)))
    #     plt.show()
    #
    # print(' '.join('%5s' % cla_dict[test_label[j].item()] for j in range(4)))
    # imshow(utils.make_grid(test_image))

    net = AlexNet(num_classes=5, init_weights=True)
    net.to(device)
    loss_function = nn.CrossEntropyLoss()
    # pata = list(net.parameters())
    optimizer = optim.Adam(net.parameters(), lr=0.0002)

    epochs = 10
    save_path = './AlexNet.pth'
    best_acc = 0.0
    train_steps = len(train_loader)
    for epoch in range(epochs):
        # train
        net.train()
        running_loss = 0.0
        train_bar = tqdm(train_loader, file=sys.stdout)
        for step, data in enumerate(train_bar):
            images, labels = data
            optimizer.zero_grad()
            outputs = net(images.to(device))
            loss = loss_function(outputs, labels.to(device))
            loss.backward()
            optimizer.step()

            # print statistics
            running_loss += loss.item()

            train_bar.desc = "train epoch[{}/{}] loss:{:.3f}".format(epoch + 1,
                                                                     epochs,
                                                                     loss.item())

        # validate
        net.eval()
        acc = 0.0  # accumulate accurate number / epoch
        with torch.no_grad():
            val_bar = tqdm(validate_loader, file=sys.stdout)
            for val_data in val_bar:
                val_images, val_labels = val_data
                outputs = net(val_images.to(device))
                predict_y = torch.max(outputs, dim=1)[1]
                acc += torch.eq(predict_y, val_labels.to(device)).sum().item()

        val_accurate = acc / val_num
        print('[epoch %d] train_loss: %.3f val_accuracy: %.3f' %
              (epoch + 1, running_loss / train_steps, val_accurate))

        # keep only the weights with the best validation accuracy
        if val_accurate > best_acc:
            best_acc = val_accurate
            torch.save(net.state_dict(), save_path)

    print('Finished Training')


if __name__ == '__main__':
    main()

This is the training script that produces the weights. The experiment uses flower classification, a classic beginner-friendly task.
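One detail of the class-index file is worth spelling out: JSON object keys are always strings, so the integer indices written by train.py come back as strings when the file is read. The sketch below reproduces this with an in-memory string instead of a file; the `class_to_idx` dictionary is a shortened stand-in for the one `ImageFolder` builds.

```python
import json

# Stand-in for train_dataset.class_to_idx (class name -> integer index).
class_to_idx = {"daisy": 0, "dandelion": 1, "roses": 2}

# Invert the mapping, as train.py does, so that indices map to names.
idx_to_class = {idx: name for name, idx in class_to_idx.items()}

json_str = json.dumps(idx_to_class, indent=4)
loaded = json.loads(json_str)

print(list(loaded.keys()))               # the keys are now strings, not integers
predicted_index = 2
print(loaded[str(predicted_index)])      # the lookup must use the string form
```

This is why predict.py wraps the predicted index in `str(...)` before looking up the class name.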

predict.py

import os
import json

import torch
from PIL import Image
from torchvision import transforms
import matplotlib.pyplot as plt

from model import AlexNet


def main():
    device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")

    data_transform = transforms.Compose(
        [transforms.Resize((224, 224)),
         transforms.ToTensor(),
         transforms.Normalize((0.5, 0.5, 0.5), (0.5, 0.5, 0.5))])

    # load image
    img_path = "./1.png"
    assert os.path.exists(img_path), "file: '{}' does not exist.".format(img_path)
    # PNG files may carry an alpha channel; the model expects 3 channels
    img = Image.open(img_path).convert("RGB")
    plt.imshow(img)
    # [C, H, W]
    img = data_transform(img)
    # expand batch dimension -> [N, C, H, W]
    img = torch.unsqueeze(img, dim=0)

    # read class_indict
    json_path = './class_indices.json'
    assert os.path.exists(json_path), "file: '{}' does not exist.".format(json_path)

    with open(json_path, "r") as f:
        class_indict = json.load(f)

    # create model
    model = AlexNet(num_classes=5).to(device)

    # load model weights (map_location lets GPU-trained weights load on a CPU-only machine)
    weights_path = "./AlexNet.pth"
    assert os.path.exists(weights_path), "file: '{}' does not exist.".format(weights_path)
    model.load_state_dict(torch.load(weights_path, map_location=device))

    model.eval()
    with torch.no_grad():
        # predict class
        output = torch.squeeze(model(img.to(device))).cpu()
        predict = torch.softmax(output, dim=0)
        predict_cla = torch.argmax(predict).numpy()

    print_res = "class: {} prob: {:.3}".format(class_indict[str(predict_cla)],
                                               predict[predict_cla].numpy())
    plt.title(print_res)
    for i in range(len(predict)):
        print("class: {:10} prob: {:.3}".format(class_indict[str(i)],
                                                predict[i].numpy()))
    plt.show()


if __name__ == '__main__':
    main()

This is the prediction script, used to classify a single image.
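Both scripts use `transforms.Normalize` with mean and std of 0.5. It computes (x − mean) / std per channel, so these settings map the [0, 1] range produced by `ToTensor` to [−1, 1]. The plain-Python sketch below shows the mapping and its inverse on a few made-up pixel values; the helper names are illustrative.

```python
def normalize(x, mean=0.5, std=0.5):
    """Same arithmetic that Normalize applies to each channel value."""
    return (x - mean) / std


def unnormalize(y, mean=0.5, std=0.5):
    """Inverse mapping, useful before displaying a normalized image."""
    return y * std + mean


pixels = [0.0, 0.25, 0.5, 1.0]           # values in [0, 1], as produced by ToTensor
normalized = [normalize(p) for p in pixels]
restored = [unnormalize(v) for v in normalized]
print(normalized)
print(restored)
```

The inverse is the same `img / 2 + 0.5` step that appears in the commented-out `imshow` helper in the training script. Prediction must use the same normalization as training, otherwise the model sees inputs in a range it was never trained on.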

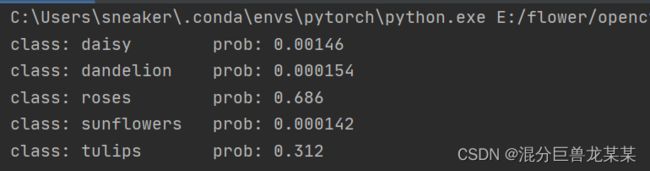

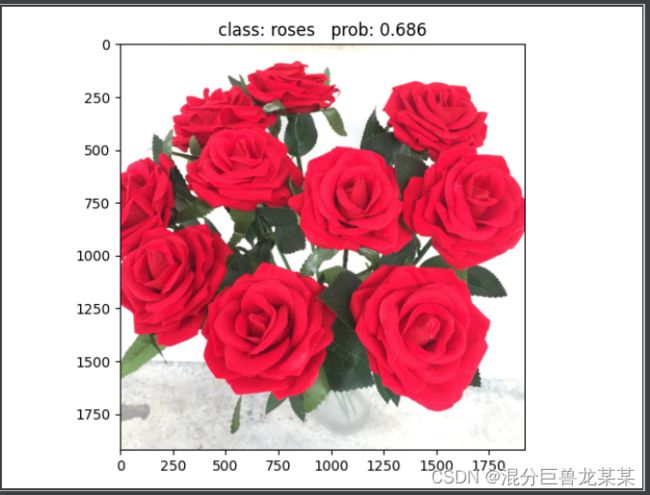

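Prediction result:

The final scores show that AlexNet's recognition performance is decent, and at the time it was far ahead of the field. With the progress of deep learning, however, AlexNet is now showing its age. Even so, studying its structure is still a rewarding exercise.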