Classic Convolutional Neural Network Models - ResNet


Overview and Key Innovations

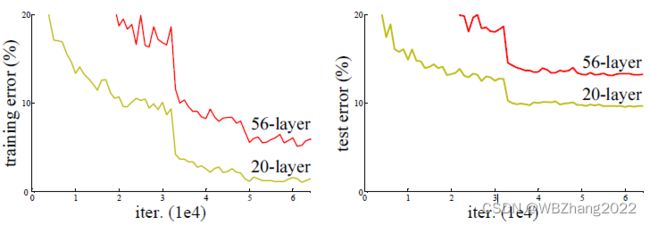

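LeNet, AlexNet, VGGNet, and InceptionNet appeared one after another. One might reimplement each of these models and keep stacking more of their modules, which in theory should raise prediction accuracy. In practice, however, the golden mean wins out. Stacking a moderate number of layers does improve accuracy to some extent, but once a model grows too deep, its accuracy starts to fall.

To see why, start from how backpropagation trains a neural network. The error gradient is computed with the chain rule as a product of per-layer terms. If every factor in that product has an absolute value less than 1, the product shrinks toward zero as the network gets deeper. This is the vanishing gradient problem: the gradients that reach the early layers become so small that those layers' parameters barely change.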
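The chain-rule product described above can be illustrated with a minimal pure-Python sketch. For illustration only, it assumes that every layer contributes the same local derivative of 0.25, which is the largest slope the sigmoid function can have. `gradient_scale` is a hypothetical helper, not part of any library.

```python
import math


def gradient_scale(layer_factors):
    """Multiply per-layer local derivatives, as the chain rule does when the
    error gradient is propagated back to the first layer."""
    return math.prod(layer_factors)


# Illustrative assumption: every layer has the same local derivative.
# 0.25 is the largest slope the sigmoid function can have.
SIGMOID_MAX_SLOPE = 0.25

for depth in (5, 10, 20):
    scale = gradient_scale([SIGMOID_MAX_SLOPE] * depth)
    print(f"depth={depth:2d}  gradient scale={scale:.3e}")

# Only the magnitude of each factor matters, not its sign:
print(abs(gradient_scale([-0.5] * 20)))  # shrinks toward 0
print(abs(gradient_scale([-1.5] * 20)))  # grows instead
```

Each extra layer divides the gradient scale by four in this setup. The last two lines show that a factor's sign does not decide whether the gradient vanishes; its magnitude does.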

【Innovations】:

- Introduces the residual module, which makes very deep network architectures practical

- Uses Batch Normalization to speed up training and drops dropout
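The following minimal sketch shows what the normalization step itself does, using training-mode Batch Normalization on a single channel in plain Python. `batch_norm` and its default arguments are hypothetical. A real layer such as Keras `BatchNormalization` also tracks moving averages of the mean and variance for use at inference time, and this sketch leaves that out.

```python
import math


def batch_norm(batch, gamma=1.0, beta=0.0, eps=1e-5):
    """Training-mode batch normalization for one feature (channel):
    normalize over the mini-batch, then scale by gamma and shift by beta."""
    n = len(batch)
    mean = sum(batch) / n
    var = sum((v - mean) ** 2 for v in batch) / n  # biased batch variance
    return [gamma * (v - mean) / math.sqrt(var + eps) + beta for v in batch]


activations = [2.0, 4.0, 6.0, 8.0]
normalized = batch_norm(activations)
print([round(v, 3) for v in normalized])
```

After normalization, the activations have roughly zero mean and unit variance. `gamma` and `beta` are the learnable scale and shift that let the network undo the normalization where that helps.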

Model Architecture

Residual Module

【Design Idea】:

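- In LeNet, AlexNet, and VGGNet, the forward pass follows a single path all the way to the classifier. As these models get deeper, gradients vanish and prediction accuracy drops. A residual network, by contrast, is a convolutional neural network built with the residual block as its basic unit, as shown in the figure.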

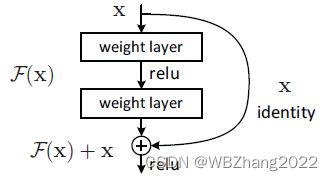

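- Inside a residual block, the forward pass splits into two paths. The first path goes through several convolutional layers. The second path bypasses the first entirely and is added to the feature map output by the first path.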

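- Note in particular that there are two kinds of residual block:
  - In the first kind, the feature map is the same size at the input and the output of the first path, and the block computes $F(X) \leftarrow F(X) + X$.
  - In the second kind, the feature map size changes between the input and the output of the first path, and the block computes $F(X) \leftarrow F(X) + W_x \cdot X$. Here $W_x$ is also a trainable parameter. It brings the shortcut input $X$ to the same size as the output of the first path, so that the two can be added.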

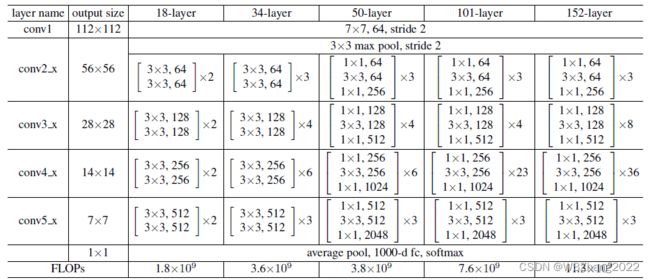
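The shape-tracking sketch below shows when the second kind of block is needed. It assumes Keras-style `padding='same'` convolutions, where each spatial size becomes ceil(size / stride). It also models $W_x$ as a 1x1 convolution with the block's stride, which is what the implementation later in this article uses. All function names are hypothetical.

```python
import math


def same_conv_output_shape(shape, filters, stride):
    """Output (height, width, channels) of a Conv2D with padding='same'."""
    h, w, _ = shape
    return (math.ceil(h / stride), math.ceil(w / stride), filters)


def main_path_shape(input_shape, filters, stride):
    # First 3x3 conv carries the stride, second 3x3 conv uses stride 1.
    hidden = same_conv_output_shape(input_shape, filters, stride)
    return same_conv_output_shape(hidden, filters, 1)


def shortcut_shape(input_shape, filters, stride, projection):
    if projection:
        # W_x: assumed 1x1 conv with the same stride and `filters` channels
        return same_conv_output_shape(input_shape, filters, stride)
    return tuple(input_shape)  # identity shortcut: X itself


def can_add(input_shape, filters, stride, projection):
    """True if F(X) and the shortcut have the same shape and can be summed."""
    return (main_path_shape(input_shape, filters, stride)
            == shortcut_shape(input_shape, filters, stride, projection))


print(can_add((32, 32, 64), 64, 1, projection=False))   # True: first kind
print(can_add((32, 32, 64), 128, 2, projection=False))  # False: shapes differ
print(can_add((32, 32, 64), 128, 2, projection=True))   # True: second kind
```

An identity shortcut only works when the stride is 1 and the channel count is unchanged. Any downsampling or widening of the first path requires the $W_x$ projection.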

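Classic ResNet Models

The classic ResNet model ResNet34 is built from both kinds of residual block. Solid lines denote the first kind of residual block and dashed lines denote the second kind.

Other classic ResNet models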
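The number in each model name counts the model's weighted layers. The sketch below reproduces that count from the per-stage block configurations in the original ResNet paper. In that paper, ResNet50/101/152 use a three-layer "bottleneck" block instead of the two-layer block described above. The count assumes that the 1x1 shortcut projections are not included. The dictionary and function names are hypothetical.

```python
# Residual blocks per stage and main-path conv layers per block for the
# standard ImageNet ResNet variants (stage configurations from the ResNet paper).
RESNET_CONFIGS = {
    "ResNet18": ([2, 2, 2, 2], 2),   # basic block: two 3x3 convs
    "ResNet34": ([3, 4, 6, 3], 2),
    "ResNet50": ([3, 4, 6, 3], 3),   # bottleneck block: 1x1, 3x3, 1x1 convs
    "ResNet101": ([3, 4, 23, 3], 3),
    "ResNet152": ([3, 8, 36, 3], 3),
}


def weighted_depth(blocks_per_stage, convs_per_block):
    """Stem conv + every main-path conv in every block + final FC layer.
    Shortcut projections (W_x) are not counted, matching the model names."""
    return 1 + sum(blocks_per_stage) * convs_per_block + 1


for name, (stages, convs) in RESNET_CONFIGS.items():
    print(f"{name}: {weighted_depth(stages, convs)} weighted layers")
```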

Model Implementation

Residual Module

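import tensorflow as tf
from tensorflow.keras import Model
from tensorflow.keras.layers import Activation, BatchNormalization, Conv2D

# Build the residual block. There are two kinds: in one, the first path keeps the
# input and output feature maps the same size (solid lines in the structure
# diagram); in the other, the sizes differ (dashed lines).
class ResnetBlock(Model):

    def __init__(self, filters, strides=1, residual_path=False):
        super(ResnetBlock, self).__init__()
        self.filters = filters
        self.strides = strides
        self.residual_path = residual_path

        # First path F(x): 3x3 conv -> BN -> ReLU -> 3x3 conv -> BN
        self.c1 = Conv2D(filters, (3, 3), strides=strides, padding='same', use_bias=False)
        self.b1 = BatchNormalization()
        self.a1 = Activation('relu')

        self.c2 = Conv2D(filters, (3, 3), strides=1, padding='same', use_bias=False)
        self.b2 = BatchNormalization()

        # When residual_path is True, downsample the input with a 1x1 convolution
        # (W_x) so that x has the same shape as F(x) and the two can be added
        if residual_path:
            self.down_c1 = Conv2D(filters, (1, 1), strides=strides, padding='same', use_bias=False)
            self.down_b1 = BatchNormalization()

        self.a2 = Activation('relu')

    def call(self, inputs):
        # By default the residual is the input itself, i.e. residual = x
        residual = inputs

        # Pass the input through conv, BN and activation layers to compute F(x)
        x = self.c1(inputs)
        x = self.b1(x)
        x = self.a1(x)

        x = self.c2(x)
        y = self.b2(x)

        if self.residual_path:
            residual = self.down_c1(inputs)
            residual = self.down_b1(residual)

        # The output is the sum of the two paths, F(x) + x or F(x) + W_x * x,
        # passed through a final activation
        out = self.a2(y + residual)
        return out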
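The toy sketch below is a dependency-free illustration of what `call()` computes. It repeats the same steps on a plain Python vector, with several simplifying assumptions: dense matrix products stand in for the 3x3 and 1x1 convolutions, Batch Normalization is omitted, and all helper names are hypothetical.

```python
def matvec(weights, vector):
    """Dense layer without bias: one output per row of `weights`."""
    return [sum(w * v for w, v in zip(row, vector)) for row in weights]


def relu(vector):
    return [max(0.0, v) for v in vector]


def residual_block(x, w1, w2, w_x=None):
    """Mirror of ResnetBlock.call on a vector (BN omitted):
    F(x) = w2 @ relu(w1 @ x); out = relu(F(x) + shortcut),
    where the shortcut is x itself or the projection w_x @ x."""
    f_x = matvec(w2, relu(matvec(w1, x)))
    shortcut = x if w_x is None else matvec(w_x, x)
    return relu([a + b for a, b in zip(f_x, shortcut)])


x = [1.0, -2.0, 3.0]
zeros = [[0.0] * 3 for _ in range(3)]
# With all of F's weights at zero, only the shortcut path carries the signal.
print(residual_block(x, zeros, zeros))
```

With F's weights at zero, the block outputs relu(x). Its input normally comes from a preceding ReLU and is non-negative, so in that case the block passes it through unchanged. The block therefore only has to learn the difference F(x) on top of the identity.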

Building the ResNet

Taking the simplest variant, ResNet18, as an example, the ResNet model is implemented with the TensorFlow 2.0 framework.

import tensorflow as tf
from tensorflow.keras import Model
from tensorflow.keras.layers import Activation, BatchNormalization, Conv2D

# Requires the ResnetBlock class defined above


class ResNet18(Model):

    def __init__(self, block_list, initial_filters=64):  # block_list gives the number of residual blocks in each stage
        super(ResNet18, self).__init__()
        self.num_blocks = len(block_list)
        self.block_list = block_list
        self.out_filters = initial_filters

        # Stem: 3x3 conv -> BN -> ReLU
        self.c1 = Conv2D(self.out_filters, (3, 3), strides=1, padding='same', use_bias=False)
        self.b1 = BatchNormalization()
        self.a1 = Activation('relu')

        self.blocks = tf.keras.models.Sequential()
        # Build the ResNet body
        for block_id in range(len(block_list)):  # index of the stage
            for layer_id in range(block_list[block_id]):  # index of the residual block within the stage
                if block_id != 0 and layer_id == 0:  # downsample the input of every stage except the first
                    block = ResnetBlock(self.out_filters, strides=2, residual_path=True)
                else:
                    block = ResnetBlock(self.out_filters, residual_path=False)
                self.blocks.add(block)
            self.out_filters *= 2  # the next stage uses twice as many filters

        self.p1 = tf.keras.layers.GlobalAveragePooling2D()
        self.f1 = tf.keras.layers.Dense(10, activation='softmax', kernel_regularizer=tf.keras.regularizers.l2())

    def call(self, inputs):
        x = self.c1(inputs)
        x = self.b1(x)
        x = self.a1(x)
        x = self.blocks(x)
        x = self.p1(x)
        y = self.f1(x)
        return y


# ResNet18: four stages with two residual blocks each
model = ResNet18([2, 2, 2, 2])
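The construction loop can be checked without running TensorFlow by replaying it on shapes only, as in the sketch below. It assumes a 32x32x3 input and Keras `padding='same'` semantics. CIFAR-10 would be one such input and would match the 10-unit output layer, though the article does not name the dataset. `trace_resnet18_shapes` is a hypothetical helper.

```python
import math


def trace_resnet18_shapes(input_shape=(32, 32, 3), block_list=(2, 2, 2, 2),
                          initial_filters=64):
    """Replay the loop in ResNet18.__init__ and return the output shape
    (height, width, channels) of every ResnetBlock, assuming padding='same'."""
    h, w, _ = input_shape        # the 3x3, stride-1 stem keeps h and w
    out_filters = initial_filters
    shapes = []
    for block_id, num_blocks in enumerate(block_list):
        for layer_id in range(num_blocks):
            # Same rule as the model: the first block of every stage except
            # the first uses stride 2 and a projection shortcut.
            stride = 2 if block_id != 0 and layer_id == 0 else 1
            h, w = math.ceil(h / stride), math.ceil(w / stride)
            shapes.append((h, w, out_filters))
        out_filters *= 2
    return shapes


for shape in trace_resnet18_shapes():
    print(shape)
```

For `[2, 2, 2, 2]`, the trace gives 32x32 with 64 channels in the first stage, then 16x16x128, 8x8x256, and 4x4x512. `GlobalAveragePooling2D` then reduces the last map to a 512-dimensional vector for the Dense layer. The name comes from counting weighted layers: the stem convolution, plus 8 x 2 convolutions inside the blocks, plus the Dense layer gives 18.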