TensorFlow 1.1: Building a Deep Convolutional Neural Network for Object Recognition

Environment: TensorFlow 1.1, Python 3

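#coding:utf-8
"""
Python 3
TensorFlow 1.1
matplotlib 2.0.2

The CIFAR-10 dataset contains 50,000 training images and 10,000 test images.
This script uses the MATLAB (.mat) version of the dataset:
data_batch_1: 10,000 training samples
data_batch_2: 10,000 training samples
data_batch_3: 10,000 training samples
data_batch_4: 10,000 training samples
data_batch_5: 10,000 training samples
test_batch: 10,000 test samples
"""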

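import tensorflow as tf
import scipy.io as sio
import numpy as np
import matplotlib.pyplot as plt

learning_rate = 0.0001
batch_size = 128

# Read one CIFAR-10 .mat batch file and return (images, labels)
def read_data(filename):
    with open(filename, 'rb') as f:
        mat = sio.loadmat(f)
    # Each row of 'data' stores one image channel by channel (3 x 32 x 32),
    # so reshape to (N, 3, 32, 32) and transpose to NHWC order (N, 32, 32, 3)
    images = np.array(mat['data']).reshape(10000, 3, 32, 32).transpose(0, 2, 3, 1)
    labels = np.array(mat['labels']).reshape(10000, 1)
    return images, labels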

# Reshape to (N, 32, 32, 3) and scale pixel values from [0, 255] to float32 in [0, 1]
def to4d(img):
    return img.reshape(img.shape[0], 32, 32, 3).astype(np.float32) / 255

# Convert integer class labels to one-hot vectors
def label_to_one_hot(labels_dense, num_classes=10):
    num_labels = labels_dense.shape[0]
    # Flat index at which each row starts in the (num_labels, num_classes) matrix
    index_offset = np.arange(num_labels) * num_classes
    labels_one_hot = np.zeros((num_labels, num_classes))
    labels_one_hot.flat[index_offset + labels_dense.ravel()] = 1
    return labels_one_hot

train_total_data, train_total_labels = read_data('/home/tuoxin/mytensorflow/my_project/project3/cifar-10-batches-mat/data_batch_1.mat')

# Append data_batch_2 to data_batch_5 to build the full 50,000-image training set
for batch_num in range(2, 6):
    train_data, train_labels = read_data('/home/tuoxin/mytensorflow/my_project/project3/cifar-10-batches-mat/data_batch_' + str(batch_num) + '.mat')
    train_total_data = np.vstack((train_total_data, train_data))
    train_total_labels = np.vstack((train_total_labels, train_labels))

#train_data,train_labels = read_data('/home/tuoxin/mytensorflow/my_project/project3/cifar-10-batches-py/data_batch_0')

train_data = to4d(train_total_data)
train_labels = label_to_one_hot(train_total_labels, 10)

test_data, test_labels = read_data('/home/tuoxin/mytensorflow/my_project/project3/cifar-10-batches-mat/test_batch.mat')
test_data = to4d(test_data)
test_labels = label_to_one_hot(test_labels, 10)

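# Show the first training image together with its class name
# (standard CIFAR-10 class order for label indices 0-9)
classes = ['airplane', 'automobile', 'bird', 'cat', 'deer', 'dog', 'frog', 'horse', 'ship', 'truck']
plt.imshow(train_data[0])
plt.title('the object is: ' + classes[int(train_total_labels[0][0])], fontdict={'size': 16, 'color': 'c'})
plt.show()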

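# Input placeholders: images, one-hot labels and the two dropout keep probabilities
xs = tf.placeholder(tf.float32, [None, 32, 32, 3])
ys = tf.placeholder(tf.float32, [None, 10])
keep_prob1 = tf.placeholder(tf.float32)  # keep probability after the pooling layers
keep_prob2 = tf.placeholder(tf.float32)  # keep probability after the dense layer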

# Build the network
conv1 = tf.layers.conv2d(inputs=xs, filters=32, kernel_size=3, strides=1, padding='same', activation=tf.nn.relu)
# [None,32,32,3] --->>> [None,32,32,32]
conv2 = tf.layers.conv2d(conv1, filters=32, kernel_size=3, strides=1, padding='valid', activation=tf.nn.relu)
# [None,32,32,32] --->>> [None,30,30,32]
pool1 = tf.layers.max_pooling2d(conv2, pool_size=2, strides=2)
# [None,30,30,32] --->>> [None,15,15,32]
dropout1 = tf.nn.dropout(pool1, keep_prob1)
conv3 = tf.layers.conv2d(dropout1, filters=64, kernel_size=3, strides=1, padding='same', activation=tf.nn.relu)
# [None,15,15,32] --->>> [None,15,15,64]
conv4 = tf.layers.conv2d(conv3, filters=64, kernel_size=3, strides=1, padding='valid', activation=tf.nn.relu)
# [None,15,15,64] --->>> [None,13,13,64]
pool2 = tf.layers.max_pooling2d(conv4, pool_size=2, strides=2)
# [None,13,13,64] --->>> [None,6,6,64]
# tf.nn.dropout takes a keep probability, like dropout1 and dropout3;
# tf.layers.dropout expects a drop rate and does nothing unless training=True
dropout2 = tf.nn.dropout(pool2, keep_prob1)
flat = tf.reshape(dropout2, [-1, 6*6*64])
dense = tf.layers.dense(flat, 512, tf.nn.relu)
dropout3 = tf.nn.dropout(dense, keep_prob2)
output = tf.layers.dense(dropout3, 10)  # logits for the 10 classes

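# Loss: softmax cross-entropy between the logits and the one-hot labels
loss = tf.losses.softmax_cross_entropy(onehot_labels=ys, logits=output)
train = tf.train.AdamOptimizer(learning_rate).minimize(loss)

# Accuracy of the batch that is fed in: fraction of predictions that match the labels.
# tf.metrics.accuracy would return (acc, update_op) and accumulate over every call,
# which reports a running average instead of the current accuracy.
correct = tf.equal(tf.argmax(ys, axis=1), tf.argmax(output, axis=1))
accuracy = tf.reduce_mean(tf.cast(correct, tf.float32))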

with tf.Session() as sess:

init = tf.group(tf.global_variables_initializer(),tf.local_variables_initializer())

sess.run(init)

for step in range(1000):

i = 0

while i < len(train_data):

#按批进行训练

start = i

end = i + batch_size

batch_x = np.array(train_data[start:end])

batch_y = np.array(train_labels[start:end])

_,c = sess.run([train,loss],feed_dict={xs:batch_x,ys:batch_y,keep_prob1:0.75,keep_prob2:0.5})

i += batch_size

if step % 1 ==0:

acc = sess.run(accuracy,feed_dict={xs:test_data,ys:test_labels,keep_prob1:0.75,keep_prob2:0.5})

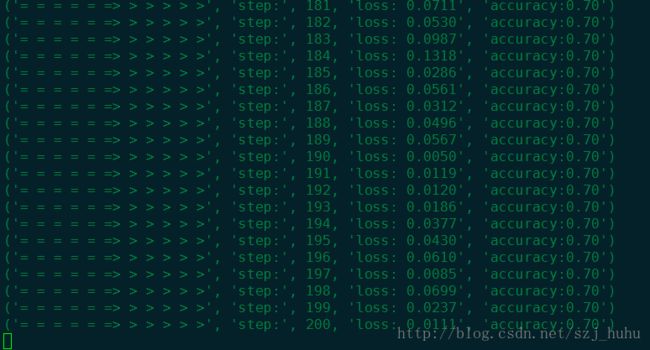

print('= = = = = => > > > > >','step:',step,'loss: %.4f' %c,'accuracy:%.2f' %acc)

结果:

Dataset: http://pan.baidu.com/s/1miQdmD6 (password: qmbm)
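The reshape-and-transpose in `read_data` is the easiest step to get wrong. According to the CIFAR-10 description, each 3072-value row stores all red values first, then all green, then all blue, each in row-major order; the author's code relies on the .mat rows using the same layout. The sketch below uses a fake 2x2 image and a hypothetical helper `rows_to_nhwc` to show why `reshape(N, 3, H, W)` must be followed by `transpose(0, 2, 3, 1)`, and what goes wrong without it:

```python
import numpy as np

def rows_to_nhwc(rows, height, width):
    """Hypothetical helper: (N, 3*H*W) channel-major rows -> (N, H, W, 3) images."""
    n = rows.shape[0]
    chw = rows.reshape(n, 3, height, width)  # all red values, then green, then blue
    return chw.transpose(0, 2, 3, 1)         # move the channel axis last (NHWC)

# One fake 2x2 image: red = 0..3, green = 10..13, blue = 20..23.
row = np.array([[0, 1, 2, 3, 10, 11, 12, 13, 20, 21, 22, 23]], dtype=np.uint8)

img = rows_to_nhwc(row, 2, 2)
print(img.shape)              # (1, 2, 2, 3)
print(img[0, 0, 1].tolist())  # [1, 11, 21]: R, G, B of the pixel at row 0, column 1

# Reshaping straight to (N, H, W, 3) mixes values from different channels and pixels.
wrong = row.reshape(1, 2, 2, 3)
print(wrong[0, 0, 1].tolist())  # [3, 10, 11]
```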
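`label_to_one_hot` writes a single 1 per row through the flattened view of the zero matrix. Row `i` starts at flat offset `i * num_classes`, so adding the label gives the position of that row's 1. The sketch below repeats the function and checks it against the equivalent identity-matrix lookup `np.eye(num_classes)[labels]`; the comparison is only a sanity check, not part of the original script:

```python
import numpy as np

def label_to_one_hot(labels_dense, num_classes=10):
    num_labels = labels_dense.shape[0]
    index_offset = np.arange(num_labels) * num_classes  # flat index where each row starts
    labels_one_hot = np.zeros((num_labels, num_classes))
    labels_one_hot.flat[index_offset + labels_dense.ravel()] = 1
    return labels_one_hot

labels = np.array([[3], [0], [9]], dtype=np.uint8)  # shape (N, 1), like read_data returns
one_hot = label_to_one_hot(labels)

print(one_hot.argmax(axis=1).tolist())  # [3, 0, 9]
# Picking rows of a 10x10 identity matrix gives the same encoding.
print(np.array_equal(one_hot, np.eye(10)[labels.ravel()]))  # True
```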
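The shape comments next to each layer follow TensorFlow's padding rules. For input size n, kernel size k and stride s, `padding='same'` gives an output size of ceil(n / s) and `padding='valid'` gives ceil((n - k + 1) / s). `tf.layers.max_pooling2d` defaults to `'valid'`. The pure-Python check below uses a hypothetical helper `conv_out` to replay the layer stack and confirm where the `6*6*64` in the `tf.reshape` call comes from:

```python
import math

def conv_out(size, kernel, stride, padding):
    """Spatial output size of a TensorFlow conv/pool layer on a square input."""
    if padding == 'same':
        return math.ceil(size / stride)
    if padding == 'valid':
        return math.ceil((size - kernel + 1) / stride)
    raise ValueError('unknown padding: ' + padding)

# (layer name, kernel, stride, padding) in the order the network stacks them.
layers = [
    ('conv1', 3, 1, 'same'),
    ('conv2', 3, 1, 'valid'),
    ('pool1', 2, 2, 'valid'),
    ('conv3', 3, 1, 'same'),
    ('conv4', 3, 1, 'valid'),
    ('pool2', 2, 2, 'valid'),
]

size = 32
sizes = []
for name, k, s, pad in layers:
    size = conv_out(size, k, s, pad)
    sizes.append(size)
    print(name, size)

print('flatten length:', size * size * 64)  # 6 * 6 * 64 = 2304
```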
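The `keep_prob1` and `keep_prob2` placeholders are passed to `tf.nn.dropout`. That function zeroes each unit with probability `1 - keep_prob` and multiplies the surviving units by `1 / keep_prob`, so the expected activation is unchanged. With a keep probability of 1.0, dropout becomes the identity, which is what evaluation on the test set needs. Any smaller value randomly drops test-time activations. The NumPy function `inverted_dropout` below is a hypothetical illustration of that behaviour, not TensorFlow's implementation:

```python
import numpy as np

def inverted_dropout(x, keep_prob, rng):
    """NumPy sketch of the computation tf.nn.dropout(x, keep_prob) performs."""
    mask = rng.random(x.shape) < keep_prob      # keep each unit with probability keep_prob
    return np.where(mask, x / keep_prob, 0.0)   # rescale survivors so the mean is preserved

rng = np.random.default_rng(0)
x = np.ones(100000)

train_out = inverted_dropout(x, 0.5, rng)
print(train_out.mean())                   # close to 1.0
print(np.unique(train_out).tolist())      # [0.0, 2.0]: each unit is dropped or doubled

test_out = inverted_dropout(x, 1.0, rng)  # keep probability 1.0 at evaluation time
print(np.array_equal(test_out, x))        # True: nothing is dropped or rescaled
```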
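`tf.losses.softmax_cross_entropy` applies a softmax to the 10 logits in `output` and compares the resulting probabilities with the one-hot `ys`. With its default weight of 1, it averages the per-example losses over the batch. The NumPy sketch below reproduces that formula for illustration only; it leaves out TensorFlow's optional `weights` and `label_smoothing` arguments:

```python
import numpy as np

def softmax_cross_entropy(onehot_labels, logits):
    """Mean softmax cross-entropy over a batch (sketch with all weights equal to 1)."""
    # Subtract each row's max before exponentiating to avoid overflow.
    shifted = logits - logits.max(axis=1, keepdims=True)
    log_probs = shifted - np.log(np.exp(shifted).sum(axis=1, keepdims=True))
    per_example = -(onehot_labels * log_probs).sum(axis=1)
    return per_example.mean()

logits = np.array([[2.0, 0.0, 0.0],
                   [0.0, 0.0, 0.0]])
labels = np.array([[1.0, 0.0, 0.0],
                   [0.0, 1.0, 0.0]])

# Equal logits give every class probability 1/3, so that row's loss is log(3).
print(softmax_cross_entropy(labels[1:], logits[1:]))  # about 1.0986
print(softmax_cross_entropy(labels, logits))          # mean of both rows, about 0.669
```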
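`tf.metrics.accuracy` is a streaming metric. It keeps a running `total` and `count` in local variables, and each run of its update op first adds the current batch and then returns `total / count`. Suppose it is used for the per-epoch test report and its local variables are never re-initialised. In that case, the value printed after epoch k averages all k evaluations and lags behind the model's current accuracy. The pure-Python class below is a hypothetical analogue that only shows the arithmetic; it is not TensorFlow code:

```python
class StreamingAccuracy:
    """Hypothetical analogue of the total/count variables behind tf.metrics.accuracy."""

    def __init__(self):
        self.total = 0  # correct predictions seen so far
        self.count = 0  # predictions seen so far

    def update(self, labels, predictions):
        # Like update_op: accumulate this batch, then return the running ratio.
        self.total += sum(int(y == p) for y, p in zip(labels, predictions))
        self.count += len(labels)
        return self.total / self.count

def batch_accuracy(labels, predictions):
    # Per-call accuracy, as computed by reduce_mean(cast(equal(...), float32)).
    return sum(int(y == p) for y, p in zip(labels, predictions)) / len(labels)

labels = [0, 1, 2, 3]
metric = StreamingAccuracy()
first = metric.update(labels, [0, 0, 0, 0])    # epoch 1: 1 of 4 correct
second = metric.update(labels, [0, 1, 2, 3])   # epoch 2: 4 of 4 correct
current = batch_accuracy(labels, [0, 1, 2, 3])
print(first, second, current)  # 0.25 0.625 1.0
```

The streaming value after the second epoch is (1 + 4) / 8, although the model is correct on every example at that point.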
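The training loop slices `train_data` in a fixed order, so every epoch sees exactly the same batches. A common variation, which the original does not use, is to reshuffle the sample indices at the start of each epoch. The same generator with `shuffle=False` can also split the 10,000 test images into chunks if feeding them in a single `sess.run` call runs out of memory. `iterate_minibatches` is a hypothetical helper, sketched here with toy arrays:

```python
import numpy as np

def iterate_minibatches(data, labels, batch_size, shuffle=True, rng=None):
    """Yield (batch_x, batch_y) pairs that cover every sample exactly once."""
    indices = np.arange(len(data))
    if shuffle:
        rng = rng if rng is not None else np.random.default_rng()
        rng.shuffle(indices)  # new sample order for this epoch
    for start in range(0, len(data), batch_size):
        idx = indices[start:start + batch_size]  # the last batch may be smaller
        yield data[idx], labels[idx]

# Toy stand-ins for train_data / train_labels.
data = np.arange(10).reshape(10, 1)
labels = np.arange(10)
rng = np.random.default_rng(42)

sizes, seen = [], []
for bx, by in iterate_minibatches(data, labels, batch_size=4, rng=rng):
    assert (bx[:, 0] == by).all()  # images and labels stay paired after shuffling
    sizes.append(len(bx))
    seen.extend(by.tolist())

print(sizes)         # [4, 4, 2]
print(sorted(seen))  # each of 0..9 exactly once
```

Inside the training loop, `for batch_x, batch_y in iterate_minibatches(train_data, train_labels, batch_size):` could replace the manual `while i < len(train_data)` slicing. When evaluating in chunks, weight each chunk's accuracy by its size before averaging to get the accuracy over the whole test set.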