Machine Learning with MATLAB Code: LSTM vs. BiLSTM Prediction Comparison (Part 15)


- Code
- Data
- Results

Code

1. Main script

clc;

clear;

close all;

%% 导入数据

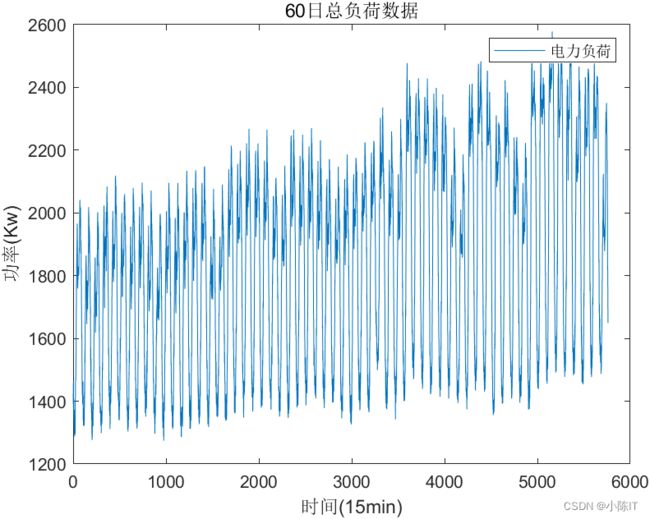

load DATA; % 导入60天的负荷数据

figure(1);

plot(fuhe);

legend('电力负荷');

xlabel('时间(15min)');

ylabel('功率(Kw)');

title('60日总负荷数据');

%% 数据归一化处理

% 归一化到0-1之间

Temp = fuhe;

[~,PS] = mapminmax(fuhe,0,1);

Temp = mapminmax('apply',Temp,PS);
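With output range [0, 1], mapminmax maps each row x linearly via y = (x - xmin)/(xmax - xmin), and mapminmax('reverse', y, PS) inverts it with the xmin and xmax stored in PS. The sketch below reproduces that mapping in base MATLAB on a made-up row vector; to01 and from01 are hypothetical names, and unlike mapminmax the sketch assumes xmax > xmin.

```matlab
% Hypothetical helpers reproducing mapminmax(x,0,1) and its reverse for a row vector x
% (assumes max(x) > min(x); mapminmax also handles constant rows, this sketch does not)
x = [120 180 150 200];                       % toy load values in kW
xmin = min(x);
xmax = max(x);
to01   = @(v) (v - xmin) ./ (xmax - xmin);   % forward: [xmin, xmax] -> [0, 1]
from01 = @(y) y .* (xmax - xmin) + xmin;     % reverse: [0, 1] -> [xmin, xmax]

y = to01(x)           % -> [0 0.75 0.375 1]
x_back = from01(y)    % -> [120 180 150 200], the original values
```

In the script, PS plays the role of xmin and xmax, which is why the same PS must be passed when de-normalizing the predictions.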

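```matlab
%% Build inputs/outputs with a sliding time window
Windows = 8;   % window length: 8 past points (2 hours) per input
T = 96;        % forecast horizon: the last day, 96 points at 15-min resolution

% Pre-allocate the sample sets
X_Train = cell(size(Temp,2)-T-Windows,1);  % training inputs
Y_Train = cell(size(Temp,2)-T-Windows,1);  % training targets
X_Test  = cell(T,1);                       % test inputs
Y_Test  = cell(T,1);                       % test targets

% Training samples: points i..i+Windows-1 predict point i+Windows
for i = 1:size(Temp,2)-T-Windows
    X_Train{i} = Temp(:,i:i+Windows-1)';   % Windows-by-1: the window enters as 8 features at one time step
    Y_Train{i} = Temp(:,i+Windows);        % 1-by-1 target
end

% Test samples: one-step-ahead predictions for each of the last T points,
% always using the actual previous values as input
for i = 1:T
    X_Test{i} = Temp(:,end-T+i-Windows:end-T+i-1)';
    Y_Test{i} = Temp(:,end-T+i);
end
```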
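The window indexing is easy to get off by one. Running the same two loops on a hypothetical 12-point series (Windows = 3, T = 2) shows exactly which points each sample uses. Run it in a fresh workspace, since it reuses the script's variable names.

```matlab
% Toy check of the window indexing on a short hypothetical series
Temp = 1:12;      % stands in for the normalized load (1-by-12 row vector)
Windows = 3;      % toy window length (the script uses 8)
T = 2;            % toy horizon (the script uses 96)

nTrain = size(Temp,2) - T - Windows;   % 12 - 2 - 3 = 7 training samples
X_Train = cell(nTrain,1); Y_Train = cell(nTrain,1);
for i = 1:nTrain
    X_Train{i} = Temp(:,i:i+Windows-1)';
    Y_Train{i} = Temp(:,i+Windows);
end

X_Test = cell(T,1); Y_Test = cell(T,1);
for i = 1:T
    X_Test{i} = Temp(:,end-T+i-Windows:end-T+i-1)';
    Y_Test{i} = Temp(:,end-T+i);
end

disp([X_Train{end}' Y_Train{end}])   % 7 8 9 10: last training target is point 10
disp([X_Test{1}' Y_Test{1}])         % 8 9 10 11: first test target is point 11
disp([X_Test{end}' Y_Test{end}])     % 9 10 11 12
```

Training targets stop at point N - T and the test targets are exactly the last T points, so no target is used for both training and testing.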

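```matlab
%% Define the networks
numFeatures = Windows;   % input features per time step (equals the window length)
numResponses = 1;        % output features
numHiddenUnits = 80;     % hidden units in the (Bi)LSTM layer
```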
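To see what numFeatures = 8 and numHiddenUnits = 80 imply for model size, the arithmetic below counts learnable parameters. It assumes the weight layout documented for lstmLayer (input weights 4H-by-D, recurrent weights 4H-by-H, bias 4H); bilstmLayer keeps one such set per direction and outputs 2H values, which also doubles the input width of the fully connected layer.

```matlab
% Learnable-parameter count for the two layer stacks
% (sequence input, dropout and regression layers have no learnable parameters)
D = 8;     % numFeatures (= Windows)
H = 80;    % numHiddenUnits
R = 1;     % numResponses

lstmParams   = 4*H*D + 4*H*H + 4*H;   % input weights + recurrent weights + bias
bilstmParams = 2 * lstmParams;        % one set per direction
fcAfterLstm   = R*H + R;              % fullyConnectedLayer on an H-wide input
fcAfterBilstm = R*(2*H) + R;          % BiLSTM output is 2H wide

totalLstm   = lstmParams + fcAfterLstm        % 28480 + 81  = 28561
totalBilstm = bilstmParams + fcAfterBilstm    % 56960 + 161 = 57121
```

So the BiLSTM has roughly twice as many parameters, which is worth keeping in mind when comparing the two RMSE values.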

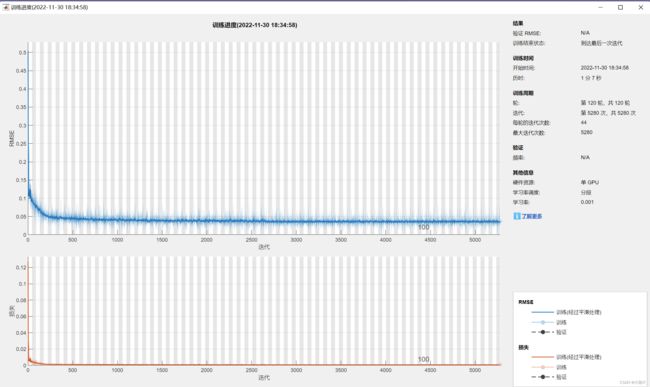

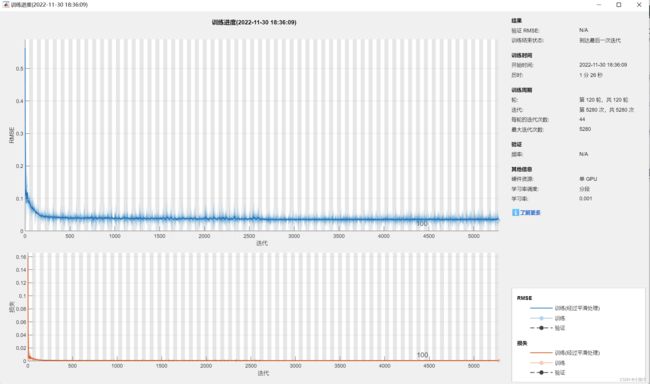

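```matlab
Train_number = 120;   % number of training epochs (MaxEpochs)
dorp_rate = 0.2;      % dropout probability: 20% of the dropout layer's inputs are zeroed during training (reduces overfitting)
[layer_lstm,layer_bilstm,options] = Net_definition(numFeatures,numResponses,numHiddenUnits,Train_number,dorp_rate);  % network definition function (part 2)
```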
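As the MATLAB documentation describes dropoutLayer(p), each input element is set to zero with probability p during training and the surviving elements are scaled by 1/(1-p); at prediction time the layer passes data through unchanged. The sketch below mimics that "inverted dropout" rule on a toy activation vector; the seed and vector size are arbitrary illustration choices.

```matlab
% Inverted dropout on a toy activation vector, mimicking dropoutLayer(p) in training mode
rng(0);                          % arbitrary seed, for repeatability
p = 0.2;                         % same value as dorp_rate
a = ones(1, 10000);              % toy activations
mask = rand(size(a)) >= p;       % keep each element with probability 1 - p
aDrop = a .* mask / (1 - p);     % kept elements are scaled up by 1/(1-p)

fracDropped = mean(~mask)        % close to 0.2
meanAfter = mean(aDrop)          % close to 1: the expected activation is preserved
```

The rescaling is what lets the trained network be used for prediction without any dropout-specific correction.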

```matlab
%% Train the networks
net_lstm   = trainNetwork(X_Train,Y_Train,layer_lstm,options);
net_bilstm = trainNetwork(X_Train,Y_Train,layer_bilstm,options);

%% Test the networks
YPred_lstm   = predict(net_lstm,X_Test);     % LSTM predictions (cell array)
YPred_bilstm = predict(net_bilstm,X_Test);   % Bi-LSTM predictions (cell array)

% Reverse the normalization to get back to kW
True = []; Predict_lstm = []; Predict_bilstm = [];
for i = 1:T
    True           = [True,mapminmax('reverse',Y_Test{i},PS)];
    Predict_lstm   = [Predict_lstm,mapminmax('reverse',YPred_lstm{i},PS)];
    Predict_bilstm = [Predict_bilstm,mapminmax('reverse',YPred_bilstm{i},PS)];
end
Predict_lstm   = double(Predict_lstm);     % network outputs are single precision
Predict_bilstm = double(Predict_bilstm);
```

```matlab
%% Error analysis (RMSE in kW)
RMSE_lstm   = sqrt(mean((True-Predict_lstm).^2));
RMSE_bilstm = sqrt(mean((True-Predict_bilstm).^2));
fprintf('LSTM prediction error (RMSE): %.4f\n', RMSE_lstm);
fprintf('Bi-LSTM prediction error (RMSE): %.4f\n', RMSE_bilstm);
```
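Because RMSE is computed after the normalization is reversed, it is in kW. Spelled out, RMSE = sqrt((1/T) * sum over t of (y_t - yhat_t)^2) over the T = 96 test points; squaring makes one large miss count more than several small ones. A toy check with made-up values:

```matlab
% RMSE on a hypothetical 4-point example
y    = [100 104 98 101];   % toy actual load (kW)
yhat = [ 97 108 98 101];   % toy forecast (kW): errors are 3, -4, 0, 0
err  = y - yhat;           % same sign convention as True - Predict_lstm
rmse = sqrt(mean(err.^2))  % sqrt((9 + 16 + 0 + 0) / 4) = sqrt(6.25) = 2.5
```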

%% 结果绘制

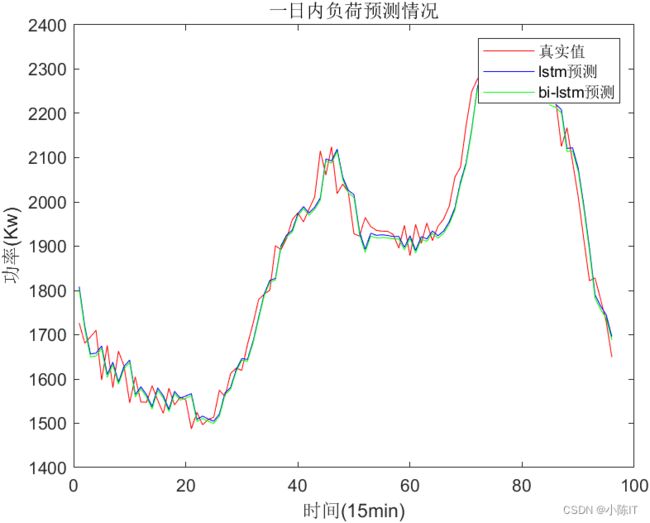

figure(2);

plot(True,'r-');

hold on

plot(Predict_lstm,'b-');

plot(Predict_bilstm,'g-');

hold off

legend('真实值','lstm预测','bi-lstm预测');

xlabel('时间(15min)');

ylabel('功率(Kw)');

title('一日内负荷预测情况');

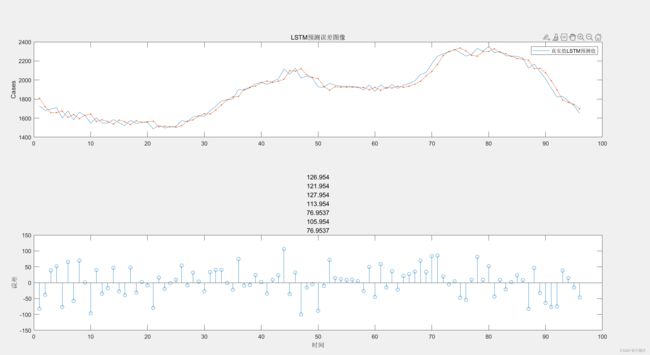

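```matlab
% Figure 3: LSTM forecast vs. actual (top) and pointwise error (bottom)
figure(3)
subplot(2,1,1)
plot(True)
hold on
plot(Predict_lstm,'.-')
hold off
legend('Actual','LSTM forecast')   % two separate labels; ['a' 'b'] would merge them into one
ylabel('Power (kW)')
title('LSTM prediction error')
subplot(2,1,2)
stem(True - Predict_lstm)
xlabel('Time (15-min steps)')
ylabel('Error (kW)')
title(sprintf('RMSE = %.4f kW', RMSE_lstm))   % 'text' + number would add numerically, not concatenate
```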

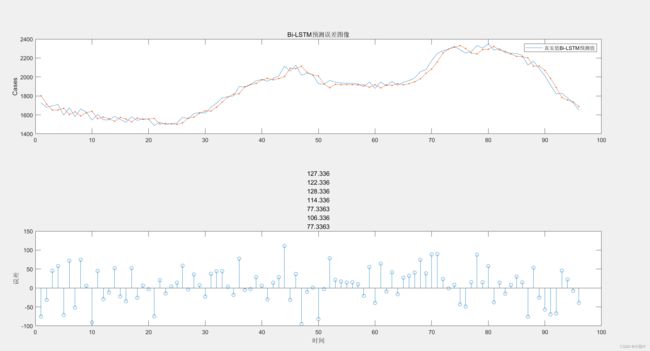

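```matlab
% Figure 4: Bi-LSTM forecast vs. actual (top) and pointwise error (bottom)
figure(4)
subplot(2,1,1)
plot(True)
hold on
plot(Predict_bilstm,'.-')
hold off
legend('Actual','Bi-LSTM forecast')
ylabel('Power (kW)')
title('Bi-LSTM prediction error')
subplot(2,1,2)
stem(True - Predict_bilstm)
xlabel('Time (15-min steps)')
ylabel('Error (kW)')
title(sprintf('RMSE = %.4f kW', RMSE_bilstm))
```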

2. Network definition function (Net_definition.m)

```matlab
function [layer_lstm,layer_bilstm,options] = Net_definition(numFeatures,numResponses,numHiddenUnits,Train_number,dorp_rate)
% Builds the LSTM and Bi-LSTM layer arrays and the training options they share.

%% LSTM
layer_lstm = [ ...
    sequenceInputLayer(numFeatures)
    lstmLayer(numHiddenUnits,'OutputMode','sequence')
    dropoutLayer(dorp_rate)
    fullyConnectedLayer(numResponses)
    regressionLayer];

%% Bi-LSTM: same stack, but the recurrent layer reads the sequence in both directions
layer_bilstm = [ ...
    sequenceInputLayer(numFeatures)
    bilstmLayer(numHiddenUnits,'OutputMode','sequence')
    dropoutLayer(dorp_rate)
    fullyConnectedLayer(numResponses)
    regressionLayer];

%% Training options (shared by both networks)
options = trainingOptions('adam', ...
    'MaxEpochs',Train_number, ...
    'GradientThreshold',1, ...                 % clip each parameter's gradient if its L2 norm exceeds 1
    'InitialLearnRate',0.005, ...
    'LearnRateSchedule','piecewise', ...
    'LearnRateDropPeriod',Train_number/2, ...  % must be a positive integer, so Train_number should be even
    'LearnRateDropFactor',0.2, ...             % multiply the learning rate by 0.2 at each drop
    'Verbose',0, ...
    'Plots','training-progress');
end
```
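With 'LearnRateSchedule','piecewise', trainingOptions multiplies the learning rate by LearnRateDropFactor each time LearnRateDropPeriod epochs have passed. For the settings above (initial rate 0.005, period Train_number/2 = 60, factor 0.2), that reading gives 0.005 for epochs 1 to 60 and 0.001 for epochs 61 to 120. The sketch below recomputes the per-epoch rate under that assumption; it is an illustration, not code taken from trainingOptions.

```matlab
% Per-epoch learning rate under a piecewise schedule (illustrative re-computation)
Train_number = 120;              % MaxEpochs, as in the script
lr0 = 0.005;                     % InitialLearnRate
dropPeriod = Train_number/2;     % LearnRateDropPeriod = 60
dropFactor = 0.2;                % LearnRateDropFactor

epochs = 1:Train_number;
lr = lr0 * dropFactor .^ floor((epochs - 1) / dropPeriod);   % one drop after every dropPeriod epochs

fprintf('epoch 1: %g, epoch 60: %g, epoch 61: %g, epoch 120: %g\n', ...
    lr(1), lr(60), lr(61), lr(120));
```

With only one drop in 120 epochs, most of the fine-tuning happens in the second half of training at the lower rate.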