Study notes on register_backward_hook(hook), register_forward_hook(hook), and register_forward_pre_hook(hook)

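Reference: register_backward_hook(hook)

Reference: register_forward_hook(hook)

Reference: register_forward_pre_hook(hook)

Reference: Problem with backward hook function #598

Reference: [中文字幕] 深入理解 PyTorch 中的 Hook 机制 (a Chinese-subtitled video, "Understanding the Hook Mechanism in PyTorch in Depth")

The warning section draws on the following link: Problem with backward hook function #598

Code demonstrating the behavior described in the warning: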

    import torch
    import torch.nn as nn


    def backward_hook(module, grad_input, grad_output):
        # grad_output: gradient of the loss with respect to `out`.
        # grad_input: gradients of the loss with respect to (2*x + 3*y) as a
        # whole and (5*z) as a whole, so the two entries are negatives of
        # each other.
        print("Grad Input:")
        print(grad_input)
        print("Grad Output:")
        print(grad_output)
        print("Modified Grad Input:")
        # Uncomment the two offsets to see modified gradients reach x, y and z.
        new_grad_input_0 = grad_input[0]  # + 888888.0
        new_grad_input_1 = grad_input[1]  # - 777777.0
        new_grad_input = (new_grad_input_0, new_grad_input_1)
        print(new_grad_input)
        return new_grad_input


    class Model4cxq(nn.Module):
        def __init__(self):
            super(Model4cxq, self).__init__()
            self.register_backward_hook(backward_hook)

        def forward(self, x, y, z):
            # Autograd evaluates this as (2*x + 3*y) - (5*z), i.e. a
            # subtraction of two tensors. The hook therefore receives only
            # two gradients, not one each for x, y and z. To get those three,
            # register hooks on the tensors themselves.
            return 2*x + 3*y - 5*z  # variant to try: 2*x - 5*z + 3*y


    x = torch.tensor([2.0, 3.0], requires_grad=True)
    y = torch.tensor([6.0, 4.0], requires_grad=True)
    z = torch.tensor([1.0, 5.0], requires_grad=True)

    criterion = nn.MSELoss()
    model = Model4cxq()
    out = model(x, y, z)
    target = torch.tensor([9.0, -1.0], requires_grad=True)
    loss = criterion(out, target)
    print('loss =', loss)
    loss.backward()

    print('backward() ended'.center(50, '-'))
    print('out.grad:', out.grad)  # None: `out` is not a leaf tensor
    print('x.grad:', x.grad)
    print('y.grad:', y.grad)
    print('z.grad:', z.grad)
    print('target.grad:', target.grad)

Console output (the first run is the case where the commented-out `+ 888888.0` and `- 777777.0` in backward_hook are enabled):


    loss = tensor(50., grad_fn=<MeanBackward0>)
    Grad Input:
    (tensor([ 8., -6.]), tensor([-8., 6.]))
    Grad Output:
    (tensor([ 8., -6.]),)
    Modified Grad Input:
    (tensor([888896., 888882.]), tensor([-777785., -777771.]))
    -----------------backward() ended-----------------
    out.grad: None
    x.grad: tensor([1777792., 1777764.])
    y.grad: tensor([2666688., 2666646.])
    z.grad: tensor([-3888925., -3888855.])
    target.grad: tensor([-8., 6.])

Second run (both offsets left commented out, as in the listing above):

    loss = tensor(50., grad_fn=<MeanBackward0>)
    Grad Input:
    (tensor([ 8., -6.]), tensor([-8., 6.]))
    Grad Output:
    (tensor([ 8., -6.]),)
    Modified Grad Input:
    (tensor([ 8., -6.]), tensor([-8., 6.]))
    -----------------backward() ended-----------------
    out.grad: None
    x.grad: tensor([ 16., -12.])
    y.grad: tensor([ 24., -18.])
    z.grad: tensor([-40., 30.])
    target.grad: tensor([-8., 6.])
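Why the hook sees two gradients rather than three can be checked by looking at the autograd graph. The following is a minimal sketch, not part of the original notes: it rebuilds the same expression outside any module and inspects the node that produced `out`. The variable names `last_op` and `operand_names` are chosen here for illustration.

```python
import torch

x = torch.tensor([2.0, 3.0], requires_grad=True)
y = torch.tensor([6.0, 4.0], requires_grad=True)
z = torch.tensor([1.0, 5.0], requires_grad=True)

out = 2*x + 3*y - 5*z

# The node that produced `out` corresponds to the last operation in forward().
last_op = out.grad_fn
print(last_op.name())

# Each entry of next_functions is (node, index) for one operand of that
# operation; the subtraction has two operands, (2*x + 3*y) and (5*z).
operand_names = [node.name() for node, _ in last_op.next_functions]
print(operand_names)
```

The last node should be a subtraction with two operands, which matches the two entries of grad_input printed by the hook above.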


    import torch
    import torch.nn as nn


    def backward_hook(module, grad_input, grad_output):
        # grad_output: gradient of the loss with respect to `out`.
        # grad_input: gradients of the loss with respect to (2*x) as a whole
        # and (3*y) as a whole, so the two entries are equal.
        print("Grad Input:")
        print(grad_input)
        print("Grad Output:")
        print(grad_output)
        print("Modified Grad Input:")
        # Comment out the two offsets to pass the gradients through unchanged.
        new_grad_input_0 = grad_input[0] + 1000.0
        new_grad_input_1 = grad_input[1] - 2000.0
        new_grad_input = (new_grad_input_0, new_grad_input_1)
        print(new_grad_input)
        return new_grad_input


    class Model4cxq(nn.Module):
        def __init__(self):
            super(Model4cxq, self).__init__()
            self.register_backward_hook(backward_hook)

        def forward(self, x, y):
            # Autograd evaluates this as (2*x) + (3*y), an addition of two
            # tensors. The hook receives two gradients: one with respect to
            # (2*x) as a whole and one with respect to (3*y) as a whole.
            # To get the gradients with respect to x and y themselves,
            # register hooks on the tensors.
            return 2*x + 3*y


    x = torch.tensor([2.0, 3.0], requires_grad=True)
    y = torch.tensor([6.0, 4.0], requires_grad=True)

    criterion = nn.MSELoss()
    model = Model4cxq()
    out = model(x, y)
    target = torch.tensor([14.0, 12.0], requires_grad=True)
    loss = criterion(out, target)
    print('loss =', loss)
    loss.backward()

    print('backward() ended'.center(50, '-'))
    print('out.grad:', out.grad)  # None: `out` is not a leaf tensor
    print('x.grad:', x.grad)
    print('y.grad:', y.grad)
    print('target.grad:', target.grad)

Console output (the first run is with `+ 1000.0` and `- 2000.0` commented out in backward_hook):


    loss = tensor(50., grad_fn=<MeanBackward0>)
    Grad Input:
    (tensor([8., 6.]), tensor([8., 6.]))
    Grad Output:
    (tensor([8., 6.]),)
    Modified Grad Input:
    (tensor([8., 6.]), tensor([8., 6.]))
    -----------------backward() ended-----------------
    out.grad: None
    x.grad: tensor([16., 12.])
    y.grad: tensor([24., 18.])
    target.grad: tensor([-8., -6.])

Second run (with `+ 1000.0` and `- 2000.0` enabled, as in the listing above):

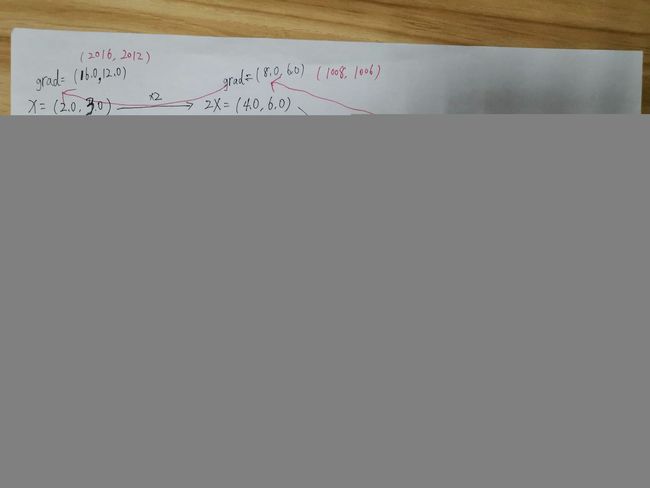

    loss = tensor(50., grad_fn=<MeanBackward0>)
    Grad Input:
    (tensor([8., 6.]), tensor([8., 6.]))
    Grad Output:
    (tensor([8., 6.]),)
    Modified Grad Input:
    (tensor([1008., 1006.]), tensor([-1992., -1994.]))
    -----------------backward() ended-----------------
    out.grad: None
    x.grad: tensor([2016., 2012.])
    y.grad: tensor([-5976., -5982.])
    target.grad: tensor([-8., -6.])
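For comparison, newer PyTorch releases provide `register_full_backward_hook`, and `register_backward_hook` is deprecated in its favor. The sketch below assumes PyTorch 1.8 or later, which is newer than the environment used for the runs above (its name suggests 1.2.0). It reuses the three-input expression from the first demonstration; `ThreeInputModel`, `record_grads` and `captured` are hypothetical names introduced here. With the full hook, grad_input is expected to hold one gradient per module input.

```python
import torch
import torch.nn as nn

captured = {}


def record_grads(module, grad_input, grad_output):
    # Only record the gradients; returning None leaves them unchanged.
    captured["grad_input"] = grad_input
    captured["grad_output"] = grad_output


class ThreeInputModel(nn.Module):
    def forward(self, x, y, z):
        return 2*x + 3*y - 5*z


x = torch.tensor([2.0, 3.0], requires_grad=True)
y = torch.tensor([6.0, 4.0], requires_grad=True)
z = torch.tensor([1.0, 5.0], requires_grad=True)

model = ThreeInputModel()
# Assumption: requires PyTorch >= 1.8.
handle = model.register_full_backward_hook(record_grads)

out = model(x, y, z)
loss = nn.MSELoss()(out, torch.tensor([9.0, -1.0]))
loss.backward()
handle.remove()

print(len(captured["grad_input"]))  # one entry per module input
for grad, leaf in zip(captured["grad_input"], (x, y, z)):
    print(grad, leaf.grad)  # hook gradient next to the accumulated .grad
```

If the hook behaves as described, each entry of grad_input should match the `.grad` of the corresponding input tensor.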


Documentation excerpt:

register_backward_hook(hook)

Registers a backward hook on the module.

The hook will be called every time the gradients with respect to module inputs are computed. The hook should have the following signature:

hook(module, grad_input, grad_output) -> Tensor or None

The grad_input and grad_output may be tuples if the module has multiple inputs or outputs. The hook should not modify its arguments, but it can optionally return a new gradient with respect to input that will be used in place of grad_input in subsequent computations.

Returns

a handle that can be used to remove the added hook by calling handle.remove()

Return type

torch.utils.hooks.RemovableHandle

Warning

The current implementation will not have the presented behavior for complex Module that perform many operations. In some failure cases, grad_input and grad_output will only contain the gradients for a subset of the inputs and outputs. For such Module, you should use torch.Tensor.register_hook() directly on a specific input or output to get the required gradients.

The two demonstrations above are examples of this: grad_input holds the gradients for the operands of the module's last operation rather than for x, y and z.
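The workaround named in the warning can be sketched as follows. This is an illustrative example rather than code from the original notes: it registers `torch.Tensor.register_hook` on each input and on the output of the same three-input expression, and stores the gradients in a dictionary. The helper `save_grad` and the dictionary `grads` are hypothetical names.

```python
import torch
import torch.nn.functional as F

x = torch.tensor([2.0, 3.0], requires_grad=True)
y = torch.tensor([6.0, 4.0], requires_grad=True)
z = torch.tensor([1.0, 5.0], requires_grad=True)

grads = {}


def save_grad(name):
    # Build a tensor hook that stores the incoming gradient under `name`.
    def hook(grad):
        grads[name] = grad.clone()
    return hook


out = 2*x + 3*y - 5*z

# Tensor hooks fire when the gradient for that specific tensor is computed.
out.register_hook(save_grad("out"))
x.register_hook(save_grad("x"))
y.register_hook(save_grad("y"))
z.register_hook(save_grad("z"))

loss = F.mse_loss(out, torch.tensor([9.0, -1.0]))
loss.backward()

for name in ("out", "x", "y", "z"):
    print(name, grads[name])
# out tensor([ 8., -6.])
# x tensor([ 16., -12.])
# y tensor([ 24., -18.])
# z tensor([-40.,  30.])
```

Unlike `out.grad`, which is None for a non-leaf tensor, the hook on `out` gives direct access to the gradient of the loss with respect to the output.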

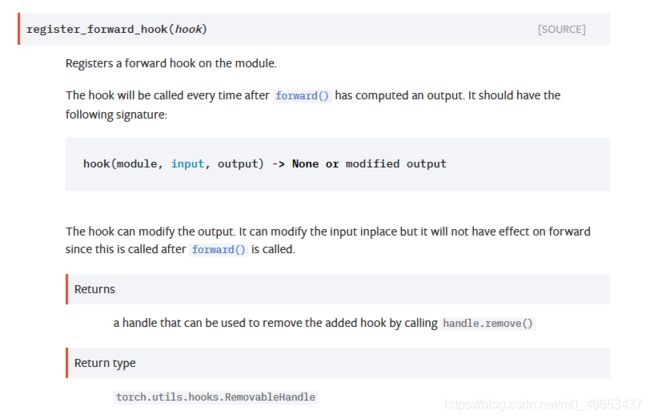

Documentation excerpt:

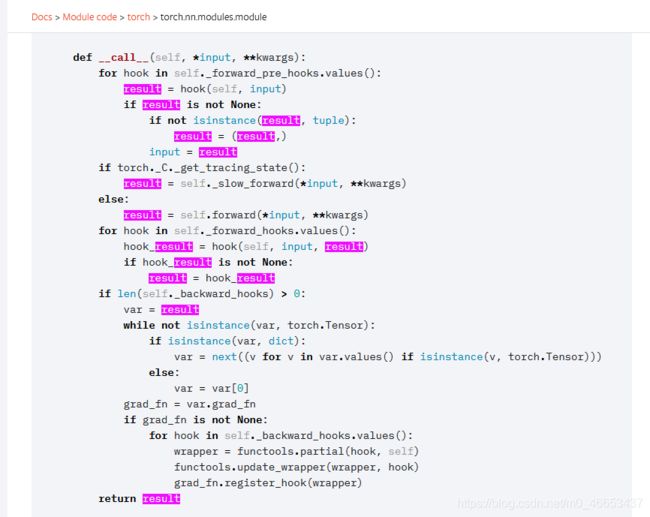

register_forward_hook(hook)

Registers a forward hook on the module.

The hook will be called every time after forward() has computed an output. It should have the following signature:

hook(module, input, output) -> None or modified output

The hook can modify the output. It can modify the input inplace but it will not have effect on forward since this is called after forward() is called.
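A small sketch of a forward hook that both records and replaces the output may help here. It is an illustration under assumed names (`save_and_double`, `features`), using an `nn.Linear` layer chosen only for convenience.

```python
import torch
import torch.nn as nn

features = {}


def save_and_double(module, input, output):
    # `input` is a tuple of the positional arguments passed to forward().
    features["input_shape"] = input[0].shape
    features["raw"] = output.detach()
    # Returning a value replaces the module's output.
    return output * 2


layer = nn.Linear(3, 2)
handle = layer.register_forward_hook(save_and_double)

result = layer(torch.ones(1, 3))

# The caller sees the value returned by the hook, not the raw output.
print(torch.allclose(result, features["raw"] * 2))  # True
handle.remove()
```

Returning None from the hook instead would leave the output untouched, which is the usual choice when the hook is only used to capture intermediate features.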

Returns

a handle that can be used to remove the added hook by calling handle.remove()

Return type

torch.utils.hooks.RemovableHandle
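The handle works the same way for all three registration methods. As a minimal sketch (the names `count_call` and `calls` are made up for this example), a forward hook stops firing once its handle is removed:

```python
import torch
import torch.nn as nn

calls = []


def count_call(module, input, output):
    # Record one entry per forward pass in which the hook fired.
    calls.append(tuple(output.shape))


layer = nn.Linear(4, 2)
handle = layer.register_forward_hook(count_call)

layer(torch.randn(3, 4))  # hook fires
handle.remove()
layer(torch.randn(3, 4))  # hook has been removed and does not fire

print(len(calls))  # 1
```

Keeping the handle around and removing it when done avoids hooks piling up on a module that is reused across experiments.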

Documentation excerpt:

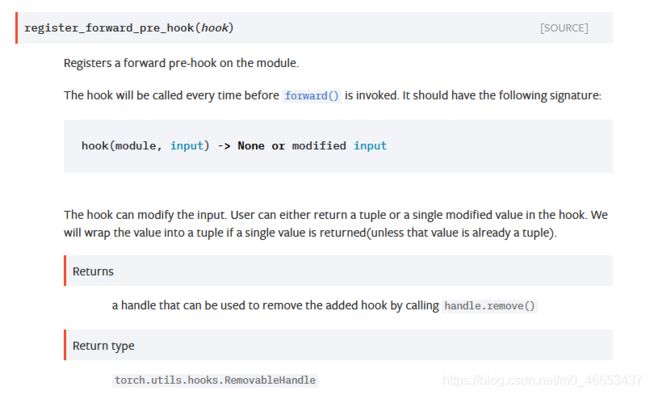

register_forward_pre_hook(hook)

Registers a forward pre-hook on the module.

The hook will be called every time before forward() is invoked. It should have the following signature:

hook(module, input) -> None or modified input

The hook can modify the input. User can either return a tuple or a single modified value in the hook. We will wrap the value into a tuple if a single value is returned (unless that value is already a tuple).
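The tuple-wrapping rule can be illustrated with a short sketch. It assumes `nn.Identity` as a stand-in module so that the effect of the pre-hook is directly visible in the output; `scale_input` is a hypothetical hook name.

```python
import torch
import torch.nn as nn


def scale_input(module, input):
    # `input` always arrives as a tuple of positional arguments.
    (x,) = input
    # A single tensor is returned here; it is wrapped into a tuple
    # before being passed on to forward().
    return x * 10


layer = nn.Identity()
handle = layer.register_forward_pre_hook(scale_input)

out = layer(torch.tensor([1.0, 2.0]))
print(out)  # tensor([10., 20.])
handle.remove()
```

For a module whose forward() takes several arguments, the hook would return a tuple with one entry per argument instead.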

Returns

a handle that can be used to remove the added hook by calling handle.remove()

Return type

torch.utils.hooks.RemovableHandle