[Object Detection] Faster R-CNN Code Implementation (2)

文章目录

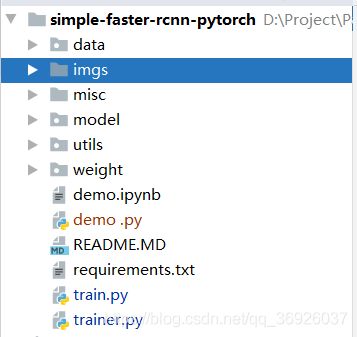

- 一、项目总览

- 二、model文件夹

-

- 2.1 faster_rcnn.py

- 2.1 faster_rcnn_vgg16.py

- 2.3 region_proposal_network.py

- 2.4 bbbox_tools.py

- 2.5 creator_tools.py

1. Project overview

2. The model folder

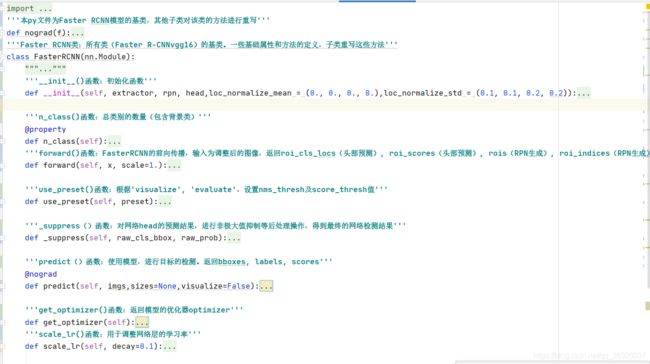

2.1 faster_rcnn.py

This file builds the base class of the Faster R-CNN model. Subclasses override its methods, or add new ones, to define a concrete model.

from __future__ import absolute_import

from __future__ import division

import torch as t

import numpy as np

from utils import array_tool as at

from model.utils.bbox_tools import loc2bbox

from torchvision.ops import nms

from torch import nn

from data.dataset import preprocess

from torch.nn import functional as F

from utils.config import opt

'''Base class of the Faster R-CNN model; subclasses override its methods.'''

def nograd(f):
    # Decorator: run f with gradient tracking disabled.
    def new_f(*args, **kwargs):
        with t.no_grad():
            return f(*args, **kwargs)
    return new_f

'''FasterRCNN: base class for all variants (for example the VGG-16 model). It defines the common attributes and methods that subclasses override.'''

class FasterRCNN(nn.Module):
    """
    Faster R-CNN consists of three stages:
    1. Feature extraction: compute a shared feature map from the input image.
    2. Region Proposal Network: use the shared feature map to propose RoIs.
    3. Localization and classification head: use the shared feature map and the proposed RoIs to classify each object and refine its box.

    Args:
        extractor (nn.Module): takes a BCHW image tensor and returns a feature map.
        rpn (nn.Module): a module with the same interface as `model.region_proposal_network.RegionProposalNetwork`.
        head (nn.Module): takes the BCHW feature map, the RoIs, and the batch index of each RoI; returns class-dependent box offsets and class scores.
        loc_normalize_mean (tuple of four floats): mean of the box-offset estimates.
        loc_normalize_std (tuple of four floats): standard deviation of the box-offset estimates.
    """

    def __init__(self, extractor, rpn, head,
                 loc_normalize_mean=(0., 0., 0., 0.),
                 loc_normalize_std=(0.1, 0.1, 0.2, 0.2)):
        super(FasterRCNN, self).__init__()
        # (1) feature extractor
        self.extractor = extractor
        # (2) region proposal network
        self.rpn = rpn
        # (3) head network
        self.head = head
        # (4) mean and standard deviation of the box offsets
        self.loc_normalize_mean = loc_normalize_mean  # default (0., 0., 0., 0.)
        self.loc_normalize_std = loc_normalize_std  # default (0.1, 0.1, 0.2, 0.2)
        # (5) set nms_thresh and score_thresh
        self.use_preset('evaluate')

    @property
    def n_class(self):
        # Total number of classes, including the background class.
        return self.head.n_class

    def forward(self, x, scale=1.):
        """Forward pass of Faster R-CNN on the resized images.

        Notation: `N` is the batch size, `L` is the number of foreground classes (so there are `L + 1` classes with the background), and `R'` is the total number of RoIs over the batch, where `R_i` is the number of RoIs from image `i`.

        Args:
            x (Tensor): 4D image batch of shape (N, C, H, W).
            scale (float): scaling applied to the raw image during preprocessing. The RPN uses it to set the size threshold for small objects, which are rejected regardless of their confidence.

        Returns:
            A tuple of four values:
            roi_cls_locs: offsets and scales for the candidate RoIs (predicted by the head), shape (R', (L + 1) * 4).
            roi_scores: class predictions for the candidate RoIs (predicted by the head), shape (R', L + 1).
            rois: RoIs proposed by the RPN, shape (R', 4).
            roi_indices: batch index of each RoI, shape (R',).
        """
        img_size = x.shape[2:]  # (H, W) of the resized input; x has shape (N, C, H, W)
        # (1) extract the feature map of the resized image
        h = self.extractor(x)
        # (2) the RPN proposes RoIs from the feature map, the input size, and the scale
        rpn_locs, rpn_scores, rois, roi_indices, anchor = self.rpn(h, img_size, scale)
        # (3) the head predicts roi_cls_locs and roi_scores from the feature map, the RoIs (y, x, y, x), and their batch indices
        roi_cls_locs, roi_scores = self.head(h, rois, roi_indices)
        return roi_cls_locs, roi_scores, rois, roi_indices

    def use_preset(self, preset):
        """Set nms_thresh and score_thresh for prediction.

        Args:
            preset: one of {'visualize', 'evaluate'}.
        """
        if preset == 'visualize':
            self.nms_thresh = 0.3
            self.score_thresh = 0.7
        elif preset == 'evaluate':
            self.nms_thresh = 0.3
            self.score_thresh = 0.05
        else:
            raise ValueError('preset must be visualize or evaluate')

    def _suppress(self, raw_cls_bbox, raw_prob):
        # Post-process the head predictions: per-class score thresholding and
        # non-maximum suppression give the final detections.
        bbox = list()
        label = list()
        score = list()
        for l in range(1, self.n_class):  # skip class 0, the background
            cls_bbox_l = raw_cls_bbox.reshape((-1, self.n_class, 4))[:, l, :]
            prob_l = raw_prob[:, l]
            mask = prob_l > self.score_thresh
            cls_bbox_l = cls_bbox_l[mask]
            prob_l = prob_l[mask]
            keep = nms(cls_bbox_l, prob_l, self.nms_thresh)
            bbox.append(cls_bbox_l[keep].cpu().numpy())
            # The labels are in [0, self.n_class - 2].
            label.append((l - 1) * np.ones((len(keep),)))
            score.append(prob_l[keep].cpu().numpy())
        bbox = np.concatenate(bbox, axis=0).astype(np.float32)
        label = np.concatenate(label, axis=0).astype(np.int32)
        score = np.concatenate(score, axis=0).astype(np.float32)
        return bbox, label, score

    @nograd
    def predict(self, imgs, sizes=None, visualize=False):
        """Detect objects in images. Returns bboxes, labels, scores.

        Args:
            imgs (iterable of numpy.ndarray): images in CHW, RGB format with values in the range 0-255.

        Returns:
            A tuple of three lists, `(bboxes, labels, scores)`:
            bboxes: list of float arrays of shape (R, 4), where R is the number of boxes in one image; each box is (y_min, x_min, y_max, x_max).
            labels: list of integer arrays of shape (R,); each value is the class of a box, in [0, L - 1], where L is the number of foreground classes.
            scores: list of float arrays of shape (R,); each value is the confidence of a prediction.
        """
        # (1) switch the model to evaluation mode (gradients are disabled by @nograd)
        self.eval()
        # (2) load and prepare the images
        if visualize:
            self.use_preset('visualize')  # set nms_thresh and score_thresh
            prepared_imgs = list()
            sizes = list()
            for img in imgs:
                size = img.shape[1:]  # (H, W) of the original image
                img = preprocess(at.tonumpy(img))  # resize the image and normalize its values
                prepared_imgs.append(img)  # keep the prepared image
                sizes.append(size)  # keep the original size
        else:
            prepared_imgs = imgs
        # (3) run detection
        bboxes = list()
        labels = list()
        scores = list()
        for img, size in zip(prepared_imgs, sizes):
            img = at.totensor(img[None]).float()
            # 1. Scale factor between the resized input and the original image.
            # ProposalCreator uses it when it filters the RoIs.
            scale = img.shape[3] / size[1]
            # 2. Forward pass: returns roi_cls_locs, roi_scores, rois, roi_indices.
            roi_cls_loc, roi_scores, rois, _ = self(img, scale=scale)
            # We are assuming that batch size is 1.
            roi_score = roi_scores.data
            roi_cls_loc = roi_cls_loc.data
            roi = at.totensor(rois) / scale
            # The predictions refer to the resized network input; convert them
            # to boxes in the coordinates of the original image.
            mean = t.Tensor(self.loc_normalize_mean).cuda().repeat(self.n_class)[None]
            std = t.Tensor(self.loc_normalize_std).cuda().repeat(self.n_class)[None]
            roi_cls_loc = (roi_cls_loc * std + mean)
            roi_cls_loc = roi_cls_loc.view(-1, self.n_class, 4)
            roi = roi.view(-1, 1, 4).expand_as(roi_cls_loc)
            # 3. Decode roi_cls_loc into coordinates (y, x, y, x): roi is the
            # reference box and roi_cls_loc is the offset relative to it.
            cls_bbox = loc2bbox(at.tonumpy(roi).reshape((-1, 4)), at.tonumpy(roi_cls_loc).reshape((-1, 4)))
            cls_bbox = at.totensor(cls_bbox)
            cls_bbox = cls_bbox.view(-1, self.n_class * 4)
            # 4. Clip the boxes to the image.
            cls_bbox[:, 0::2] = (cls_bbox[:, 0::2]).clamp(min=0, max=size[0])
            cls_bbox[:, 1::2] = (cls_bbox[:, 1::2]).clamp(min=0, max=size[1])
            prob = (F.softmax(at.totensor(roi_score), dim=1))
            # 5. Everything above only prepares the head output;
            # _suppress produces the final detections.
            bbox, label, score = self._suppress(cls_bbox, prob)
            bboxes.append(bbox)
            labels.append(label)
            scores.append(score)
        self.use_preset('evaluate')
        self.train()
        return bboxes, labels, scores

    def get_optimizer(self):
        """Build and return the optimizer. Override this method to use a custom optimizer."""
        lr = opt.lr
        params = []
        # Learning rate and weight decay per parameter:
        # biases use twice the learning rate and no weight decay.
        for key, value in dict(self.named_parameters()).items():
            if value.requires_grad:
                if 'bias' in key:
                    params += [{'params': [value], 'lr': lr * 2, 'weight_decay': 0}]
                else:
                    params += [{'params': [value], 'lr': lr, 'weight_decay': opt.weight_decay}]
        # Optimization algorithm
        if opt.use_adam:
            self.optimizer = t.optim.Adam(params)
        else:
            self.optimizer = t.optim.SGD(params, momentum=0.9)
        return self.optimizer

    def scale_lr(self, decay=0.1):
        # Multiply the learning rate of every parameter group by `decay`.
        for param_group in self.optimizer.param_groups:
            param_group['lr'] *= decay
        return self.optimizer
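The per-class filtering done in `_suppress` can be illustrated without PyTorch. The following is a minimal sketch, not the code used above: `iou` and `suppress_one_class` are hypothetical helper names, and the greedy loop is a plain-Python stand-in that assumes the same convention as `torchvision.ops.nms`, namely that a box is dropped when its IoU with an already kept, higher-scoring box exceeds the threshold.

```python
def iou(a, b):
    """IoU of two boxes given as (y_min, x_min, y_max, x_max)."""
    ih = min(a[2], b[2]) - max(a[0], b[0])
    iw = min(a[3], b[3]) - max(a[1], b[1])
    inter = ih * iw if ih > 0 and iw > 0 else 0.0
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    return inter / (area_a + area_b - inter)


def suppress_one_class(boxes, probs, score_thresh=0.05, nms_thresh=0.3):
    """Drop low scores, then greedily keep the best remaining box."""
    # Candidates above the score threshold, best score first.
    cand = [i for i, p in enumerate(probs) if p > score_thresh]
    cand.sort(key=lambda i: probs[i], reverse=True)
    keep = []
    for i in cand:
        # Keep box i only if it does not overlap a kept box too much.
        if all(iou(boxes[i], boxes[k]) <= nms_thresh for k in keep):
            keep.append(i)
    return keep


boxes = [(0, 0, 10, 10), (1, 1, 11, 11), (20, 20, 30, 30), (0, 0, 9, 9)]
probs = [0.9, 0.8, 0.6, 0.01]
keep = suppress_one_class(boxes, probs)
print(keep)  # [0, 2]
```

Box 1 overlaps box 0 with an IoU of 81/119 (about 0.68), so it is suppressed; box 3 never reaches NMS because its score is below the 'evaluate' threshold of 0.05.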

2.2 faster_rcnn_vgg16.py

This file defines the FasterRCNNVGG16 class, a subclass of FasterRCNN.

from __future__ import absolute_import

import torch as t

import torch

from torch import nn

# from torchvision.models import vgg16

from torchvision.ops import RoIPool

from model.region_proposal_network import RegionProposalNetwork

from model.faster_rcnn import FasterRCNN

from utils import array_tool as at

from utils.config import opt

'''Faster R-CNN based on VGG-16. The class in this file is a subclass of FasterRCNN.'''

# ---------- Added code: called from decom_vgg16() ----------
cfgs = {
    'A': [64, 'M', 128, 'M', 256, 256, 'M', 512, 512, 'M', 512, 512, 'M'],
    'B': [64, 64, 'M', 128, 128, 'M', 256, 256, 'M', 512, 512, 'M', 512, 512, 'M'],
    'D': [64, 64, 'M', 128, 128, 'M', 256, 256, 256, 'M', 512, 512, 512, 'M', 512, 512, 512, 'M'],
    'E': [64, 64, 'M', 128, 128, 'M', 256, 256, 256, 256, 'M', 512, 512, 512, 512, 'M', 512, 512, 512, 512, 'M'],
}

from torchvision.models import VGG

def make_layers(cfg, batch_norm=False):
    # Build the convolutional part of VGG from a config list:
    # 'M' is a max-pool, a number is the output channels of a 3x3 conv.
    layers = []
    in_channels = 3
    for v in cfg:
        if v == 'M':
            layers += [nn.MaxPool2d(kernel_size=2, stride=2)]
        else:
            conv2d = nn.Conv2d(in_channels, v, kernel_size=3, padding=1)
            if batch_norm:
                layers += [conv2d, nn.BatchNorm2d(v), nn.ReLU(inplace=True)]
            else:
                layers += [conv2d, nn.ReLU(inplace=True)]
            in_channels = v
    return nn.Sequential(*layers)

def vgg16(pretrained=False, progress=True, **kwargs):
    return _vgg('vgg16', 'D', False, pretrained, progress, **kwargs)

def _vgg(arch, cfg, batch_norm, pretrained, progress, **kwargs):
    if pretrained:
        kwargs['init_weights'] = False
    model = VGG(make_layers(cfgs[cfg], batch_norm=batch_norm), **kwargs)
    if pretrained:
        # The weights are read from a local file; change this path for your machine.
        model.load_state_dict(torch.load(r'D:\Project\PaperProject\simple-faster-rcnn-pytorch\weight\vgg16-397923af.pth'))
    return model
# ---------- End of added code ----------

'''FasterRCNNVGG16: subclass of FasterRCNN that builds the VGG-16 based network.'''

class FasterRCNNVGG16(FasterRCNN):
    """Faster R-CNN based on VGG-16; a subclass of FasterRCNN."""

    feat_stride = 16  # downsampling stride of the VGG-16 conv5 output

    def __init__(self, n_fg_class=20, ratios=[0.5, 1, 2], anchor_scales=[8, 16, 32]):
        '''
        n_fg_class (int): number of foreground classes in the dataset (the background is excluded; the head is built with n_fg_class + 1 classes).
        ratios (list of floats): height-to-width ratios of the anchors (generate_anchor_base uses h = base * scale * sqrt(ratio)).
        anchor_scales (list of numbers): areas of the anchors. Each area is the square of an element of `anchor_scales` times the area of the reference window.
        '''
        # (1) backbone: feature extractor and classifier taken from the adapted VGG-16
        extractor, classifier = decom_vgg16()
        # (2) RPN: returns rpn_locs (offsets (t_y, t_x, t_h, t_w) relative to the anchors),
        # rpn_scores (foreground scores), rois (filtered proposals in (y, x, y, x) format),
        # the batch index of each RoI, and the anchors
        rpn = RegionProposalNetwork(512, 512, ratios=ratios, anchor_scales=anchor_scales, feat_stride=self.feat_stride,)
        # (3) head: predicts roi_cls_locs (4 box offsets per class) and roi_scores (one score per class)
        head = VGG16RoIHead(n_class=n_fg_class + 1, roi_size=7, spatial_scale=(1. / self.feat_stride), classifier=classifier)
        super(FasterRCNNVGG16, self).__init__(extractor, rpn, head,)

'''decom_vgg16(): build the VGG-16 feature extractor of Faster R-CNN. Returns nn.Sequential(*features), classifier.'''

def decom_vgg16():
    # (1) load the pretrained model structure and weights
    if opt.caffe_pretrain:
        model = vgg16(pretrained=False)
        if not opt.load_path:
            model.load_state_dict(t.load(opt.caffe_pretrain_path))
    else:
        model = vgg16(not opt.load_path)  # build VGG-16 and load its weights when no checkpoint path is set
    # (2) adapt the pretrained model to this project.
    # VGG-16 has two parts: features and classifier.
    # 1. features: keep the first 30 layers, which drops the final max-pool
    features = list(model.features)[:30]
    # 2. classifier: remove the last classification layer and, optionally, the dropout layers
    classifier = model.classifier
    classifier = list(classifier)
    del classifier[6]
    if not opt.use_drop:  # remove the dropout layers
        del classifier[5]
        del classifier[2]
    classifier = nn.Sequential(*classifier)
    # (3) freeze the first 10 layers of features (the first four conv layers)
    for layer in features[:10]:
        for p in layer.parameters():
            p.requires_grad = False
    return nn.Sequential(*features), classifier
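The slice `[:30]` and the value `feat_stride = 16` are linked, and a little counting makes the link visible. The sketch below is only an illustration: `expand` is a hypothetical helper that lists layer names in the same order `make_layers` would create them for config 'D', without building any PyTorch modules.

```python
CFG_D = [64, 64, 'M', 128, 128, 'M', 256, 256, 256, 'M',
         512, 512, 512, 'M', 512, 512, 512, 'M']


def expand(cfg):
    """List layer names in the order make_layers() creates them (no batch norm)."""
    layers = []
    for v in cfg:
        if v == 'M':
            layers.append('pool')
        else:
            layers += ['conv%d' % v, 'relu']
    return layers


layers = expand(CFG_D)
kept = layers[:30]                      # what decom_vgg16() keeps
stride = 2 ** kept.count('pool')        # each 2x2 max-pool halves H and W
frozen_convs = [n for n in kept[:10] if n.startswith('conv')]

print(len(layers), len(kept), stride)   # 31 30 16
print(frozen_convs)                     # ['conv64', 'conv64', 'conv128', 'conv128']
```

Config 'D' has 13 conv layers (26 entries with their ReLUs) and 5 pooling layers, 31 in total. Dropping the last pooling layer leaves 4, which gives the stride of 16, and the first 10 entries cover exactly the first four conv layers that are frozen.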

'''VGG16RoIHead: the head of Faster R-CNN. It predicts roi_cls_locs (4 box offsets per class) and roi_scores (one score per class).'''

class VGG16RoIHead(nn.Module):
    """Outputs locations and classes from the feature map of the given RoIs.

    Args:
        n_class (int): total number of classes, including the background.
        roi_size (int): height and width of the feature map after RoI pooling.
        spatial_scale (float): scale applied to the RoI coordinates.
        classifier (nn.Module): the two linear layers ported from VGG-16.
    """

    def __init__(self, n_class, roi_size, spatial_scale, classifier):
        super(VGG16RoIHead, self).__init__()
        # classifier layers taken from the feature extraction network
        self.classifier = classifier
        # localization and classification layers (linear), with initialized weights
        self.cls_loc = nn.Linear(4096, n_class * 4)
        self.score = nn.Linear(4096, n_class)
        normal_init(self.cls_loc, 0, 0.001)
        normal_init(self.score, 0, 0.01)
        # number of classes (with background), RoI size after pooling, RoI scale
        self.n_class = n_class
        self.roi_size = roi_size
        self.spatial_scale = spatial_scale
        # RoI pooling layer
        self.roi = RoIPool((self.roi_size, self.roi_size), self.spatial_scale)

    def forward(self, x, rois, roi_indices):
        """Forward pass for a batch of `N` images.

        Args:
            x (Tensor): feature map of the resized images.
            rois (Tensor): RoI coordinates for all images in the batch, shape (R', 4); `R_i` is the number of RoIs from image i.
            roi_indices (Tensor): 1D array of shape (R',); each value tells which image of the batch the RoI belongs to.
        """
        # (1) concatenate roi_indices and rois; the RoIs are in (y, x, y, x) order
        # at.totensor() converts other data types to a tensor and moves it to CUDA
        roi_indices = at.totensor(roi_indices).float()
        rois = at.totensor(rois).float()
        indices_and_rois = t.cat([roi_indices[:, None], rois], dim=1)
        # (2) convert the RoIs from (y, x, y, x) to (x, y, x, y)
        xy_indices_and_rois = indices_and_rois[:, [0, 2, 1, 4, 3]]
        indices_and_rois = xy_indices_and_rois.contiguous()
        # (3) RoI pooling, then flatten each pooled feature map
        pool = self.roi(x, indices_and_rois)
        pool = pool.view(pool.size(0), -1)
        # (4) apply the localization and classification layers to the RoI features
        fc7 = self.classifier(pool)
        roi_cls_locs = self.cls_loc(fc7)
        roi_scores = self.score(fc7)
        return roi_cls_locs, roi_scores

'''normal_init(): initialize the weights of a layer.'''

def normal_init(m, mean, stddev, truncated=False):
    # m is a layer with weight and bias parameters
    if truncated:
        m.weight.data.normal_().fmod_(2).mul_(stddev).add_(mean)  # not a perfect approximation
    else:
        m.weight.data.normal_(mean, stddev)
        m.bias.data.zero_()
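The column reordering in `VGG16RoIHead.forward` is easy to get wrong, so here is a small plain-Python sketch of it. The helper names `to_roipool_rows` and `to_feature_coords` are hypothetical, and the second helper only multiplies by `spatial_scale`; it ignores any rounding that the pooling layer applies internally, so treat it as an approximation of where an RoI lands on the feature map.

```python
def to_roipool_rows(rois_yx, roi_indices):
    """(y_min, x_min, y_max, x_max) RoIs -> (batch_idx, x_min, y_min, x_max, y_max) rows.

    Equivalent to concatenating the index column and then selecting
    columns [0, 2, 1, 4, 3], as the forward pass above does.
    """
    rows = []
    for idx, (y1, x1, y2, x2) in zip(roi_indices, rois_yx):
        rows.append((float(idx), x1, y1, x2, y2))
    return rows


def to_feature_coords(row, spatial_scale=1.0 / 16):
    """Scale the box part of a row from image pixels to feature-map cells."""
    idx, x1, y1, x2, y2 = row
    return (idx, x1 * spatial_scale, y1 * spatial_scale,
            x2 * spatial_scale, y2 * spatial_scale)


rois = [(16.0, 32.0, 80.0, 96.0)]   # one RoI in (y, x, y, x) order
rows = to_roipool_rows(rois, [0])
print(rows)                          # [(0.0, 32.0, 16.0, 96.0, 80.0)]
print(to_feature_coords(rows[0]))    # (0.0, 2.0, 1.0, 6.0, 5.0)
```

With a stride of 16, the 64 x 64 pixel RoI covers a 4 x 4 region of the feature map, which the pooling layer then resamples to the fixed 7 x 7 size.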

2.3 region_proposal_network.py

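This file builds the RPN of Faster R-CNN. The RPN returns rpn_locs (offsets (t_y, t_x, t_h, t_w) relative to the anchor boxes), rpn_scores (foreground scores), rois (the filtered proposals, in (y, x, y, x) format), the batch index of each RoI, and the anchor boxes.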

import numpy as np

from torch.nn import functional as F

import torch as t

from torch import nn

from model.utils.bbox_tools import generate_anchor_base

from model.utils.creator_tool import ProposalCreator

'''RegionProposalNetwork: builds the RPN. See forward() for the five values it returns.'''

class RegionProposalNetwork(nn.Module):
    """Uses features extracted from an image to propose class-agnostic bounding boxes around "objects".

    Args:
        in_channels (int): number of input channels.
        mid_channels (int): number of channels of the intermediate tensor.
        ratios (list of floats): height-to-width ratios of the anchors (generate_anchor_base uses h = base * scale * sqrt(ratio)).
        anchor_scales (list of numbers): areas of the anchors. Each area is the square of an element of `anchor_scales` times the area of the reference window.
        feat_stride (int): downsampling stride of the features extracted from the image.
        proposal_creator_params (dict): key-value parameters for
            :class:`model.utils.creator_tools.ProposalCreator`.
    """

    def __init__(self, in_channels=512, mid_channels=512, ratios=[0.5, 1, 2],
                 anchor_scales=[8, 16, 32], feat_stride=16, proposal_creator_params=dict()):
        super(RegionProposalNetwork, self).__init__()
        # A set of base anchors (y_min, x_min, y_max, x_max), 9 with the defaults.
        # Sliding this set over the feature map produces all anchor boxes.
        self.anchor_base = generate_anchor_base(anchor_scales=anchor_scales, ratios=ratios)
        self.feat_stride = feat_stride
        # Produces the RoIs (y, x, y, x) fed to the head: the RPN boxes that
        # remain after filtering steps such as NMS.
        self.proposal_layer = ProposalCreator(self, **proposal_creator_params)
        # Build the RPN layers; weights are initialized with normal_init.
        n_anchor = self.anchor_base.shape[0]  # number of base anchors per location
        self.conv1 = nn.Conv2d(in_channels, mid_channels, 3, 1, 1)
        self.score = nn.Conv2d(mid_channels, n_anchor * 2, 1, 1, 0)
        self.loc = nn.Conv2d(mid_channels, n_anchor * 4, 1, 1, 0)
        normal_init(self.conv1, 0, 0.01)
        normal_init(self.score, 0, 0.01)
        normal_init(self.loc, 0, 0.01)

    def forward(self, x, img_size, scale=1.):
        """Forward pass of the RPN on the feature map from the extractor.

        Args:
            x (Tensor): feature map of shape (N, C, H, W), where `N` is the batch size, `C` the number of input channels, and `H`, `W` the height and width of the feature map.
            img_size (tuple of ints): (height, width) of the network input image.
            scale (float): scaling of the network input relative to the original image.

        Returns:
            A tuple of five values:
            rpn_locs: predicted offsets relative to the anchor boxes, shape (N, H W A, 4), where `A` is the number of anchors per feature-map cell.
            rpn_scores: foreground scores of the anchor boxes, shape (N, H W A, 2).
            rois: RoI coordinates (y, x, y, x), shape (R', 4); `R_i` is the number of RoIs from image i.
            roi_indices: batch index of each RoI, shape (R',).
            anchor: anchor box coordinates (y, x, y, x), shape (H W A, 4).
        """
        # batch size and the height and width of the feature map
        n, _, hh, ww = x.shape
        # (1) enumerate the anchor boxes (y, x, y, x) for this feature map
        anchor = _enumerate_shifted_anchor(np.array(self.anchor_base), self.feat_stride, hh, ww)
        # (2) RPN predictions: rpn_locs (n, -1, 4) and rpn_scores (n, -1, 2)
        n_anchor = anchor.shape[0] // (hh * ww)
        # 1. box predictions, as offsets (t_y, t_x, t_h, t_w) relative to the anchors
        h = F.relu(self.conv1(x))
        rpn_locs = self.loc(h)
        rpn_locs = rpn_locs.permute(0, 2, 3, 1).contiguous().view(n, -1, 4)
        # 2. foreground scores, obtained with a softmax over the two scores of each anchor
        rpn_scores = self.score(h)
        rpn_scores = rpn_scores.permute(0, 2, 3, 1).contiguous()
        rpn_softmax_scores = F.softmax(rpn_scores.view(n, hh, ww, n_anchor, 2), dim=4)
        rpn_fg_scores = rpn_softmax_scores[:, :, :, :, 1].contiguous()
        rpn_fg_scores = rpn_fg_scores.view(n, -1)
        rpn_scores = rpn_scores.view(n, -1, 2)
        rois = list()
        roi_indices = list()
        for i in range(n):  # loop over the images in the batch
            # (3) RoIs (y, x, y, x) for image i: the RPN boxes left after NMS and other filtering.
            # Inputs: predicted offsets, foreground scores, anchors, resized image size, scale factor.
            roi = self.proposal_layer(rpn_locs[i].cpu().data.numpy(), rpn_fg_scores[i].cpu().data.numpy(), anchor, img_size, scale=scale)
            # (4) collect the RoIs of image i and record i as their batch index
            batch_index = i * np.ones((len(roi),), dtype=np.int32)
            rois.append(roi)
            roi_indices.append(batch_index)
        rois = np.concatenate(rois, axis=0)
        roi_indices = np.concatenate(roi_indices, axis=0)
        # (5) return the offsets, the scores, the RoIs, their batch indices, and the anchors
        return rpn_locs, rpn_scores, rois, roi_indices, anchor

'''_enumerate_shifted_anchor(): use the set of base anchors to produce the anchor boxes for the whole feature map.'''

def _enumerate_shifted_anchor(anchor_base, feat_stride, height, width):
    # Add the A base anchors (1, A, 4) to the K cell shifts (K, 1, 4) to get the
    # shifted anchors (K, A, 4), then reshape them to (K * A, 4).
    import numpy as xp
    # (1) one shift per feature-map cell: height * width cells, feat_stride pixels apart
    shift_y = xp.arange(0, height * feat_stride, feat_stride)
    shift_x = xp.arange(0, width * feat_stride, feat_stride)
    shift_x, shift_y = xp.meshgrid(shift_x, shift_y)
    shift = xp.stack((shift_y.ravel(), shift_x.ravel(), shift_y.ravel(), shift_x.ravel()), axis=1)
    # (2) assign the base anchors to every cell to get all anchor boxes of the feature map
    A = anchor_base.shape[0]
    K = shift.shape[0]
    anchor = anchor_base.reshape((1, A, 4)) + shift.reshape((1, K, 4)).transpose((1, 0, 2))
    anchor = anchor.reshape((K * A, 4)).astype(np.float32)
    return anchor

'''Same purpose as above, but the shifts are created with torch.arange. forward() in this file calls the NumPy version.'''

def _enumerate_shifted_anchor_torch(anchor_base, feat_stride, height, width):
    import torch as t
    shift_y = t.arange(0, height * feat_stride, feat_stride)
    shift_x = t.arange(0, width * feat_stride, feat_stride)
    shift_x, shift_y = np.meshgrid(shift_x, shift_y)
    shift = np.stack((shift_y.ravel(), shift_x.ravel(), shift_y.ravel(), shift_x.ravel()), axis=1)
    A = anchor_base.shape[0]
    K = shift.shape[0]
    anchor = anchor_base.reshape((1, A, 4)) + shift.reshape((1, K, 4)).transpose((1, 0, 2))
    anchor = anchor.reshape((K * A, 4)).astype(np.float32)
    return anchor

'''normal_init(): weight initialization.'''

def normal_init(m, mean, stddev, truncated=False):
    # m is a network layer
    if truncated:
        m.weight.data.normal_().fmod_(2).mul_(stddev).add_(mean)  # not a perfect approximation
    else:
        m.weight.data.normal_(mean, stddev)
        m.bias.data.zero_()
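The broadcasting in `_enumerate_shifted_anchor` is compact, so it may help to see the same enumeration written as explicit loops. This is a plain-Python sketch under the assumption that the output order is cell-major (rows of the feature map first, then columns, then the base anchors), which is what the `(K, A, 4)` reshape above produces; `enumerate_shifted_anchors` is a hypothetical name.

```python
def enumerate_shifted_anchors(anchor_base, feat_stride, height, width):
    """Shift every base anchor to every feature-map cell.

    anchor_base: list of (y_min, x_min, y_max, x_max) tuples.
    Returns height * width * len(anchor_base) anchors.
    """
    anchors = []
    for gy in range(height):            # rows of the feature map
        for gx in range(width):         # columns of the feature map
            sy, sx = gy * feat_stride, gx * feat_stride
            for (y1, x1, y2, x2) in anchor_base:
                anchors.append((y1 + sy, x1 + sx, y2 + sy, x2 + sx))
    return anchors


base = [(-8, -8, 24, 24)]               # a single illustrative base anchor
anchors = enumerate_shifted_anchors(base, feat_stride=16, height=2, width=3)
print(len(anchors))                     # 6
print(anchors[1])                       # (-8, 8, 24, 40): one cell to the right
print(anchors[3])                       # (8, -8, 40, 24): one cell down
```

For scale, a feature map of 37 x 50 cells with 9 base anchors yields 37 * 50 * 9 = 16650 anchors.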

2.4 bbox_tools.py

import numpy as np

import numpy as xp

import six

'''loc2bbox(): decode the offsets (t_y, t_x, t_h, t_w) that the network predicts relative to source boxes into box coordinates (y, x, y, x). The result has the same shape as `loc`.'''

def loc2bbox(src_bbox, loc):
    """Decode bounding boxes from offsets and scales.

    Given the predicted offsets `t_y, t_x, t_h, t_w` and a source box with center `p_y, p_x` and size `p_h, p_w`, the decoded box is computed as:

    `\\hat{g}_y = p_h t_y + p_y`
    `\\hat{g}_x = p_w t_x + p_x`
    `\\hat{g}_h = p_h \\exp(t_h)`
    `\\hat{g}_w = p_w \\exp(t_w)`

    Args:
        src_bbox (array): source box coordinates (anchor boxes or RoIs), shape (R, 4), in the order `p_{ymin}, p_{xmin}, p_{ymax}, p_{xmax}`.
        loc (array): predicted offsets relative to the source boxes, in the order `t_y, t_x, t_h, t_w`. It has the same number of rows as `src_bbox`.

    Returns: array of decoded box coordinates, shape (R, 4).
    """
    if src_bbox.shape[0] == 0:
        return xp.zeros((0, 4), dtype=loc.dtype)
    src_bbox = src_bbox.astype(src_bbox.dtype, copy=False)
    # (1) height, width, and center of the source boxes
    src_height = src_bbox[:, 2] - src_bbox[:, 0]
    src_width = src_bbox[:, 3] - src_bbox[:, 1]
    src_ctr_y = src_bbox[:, 0] + 0.5 * src_height
    src_ctr_x = src_bbox[:, 1] + 0.5 * src_width
    # (2) predicted offsets dy, dx, dh, dw
    dy = loc[:, 0::4]
    dx = loc[:, 1::4]
    dh = loc[:, 2::4]
    dw = loc[:, 3::4]
    # (3) decode: center and size first, then the corners (y, x, y, x)
    ctr_y = dy * src_height[:, xp.newaxis] + src_ctr_y[:, xp.newaxis]
    ctr_x = dx * src_width[:, xp.newaxis] + src_ctr_x[:, xp.newaxis]
    h = xp.exp(dh) * src_height[:, xp.newaxis]
    w = xp.exp(dw) * src_width[:, xp.newaxis]
    dst_bbox = xp.zeros(loc.shape, dtype=loc.dtype)
    dst_bbox[:, 0::4] = ctr_y - 0.5 * h
    dst_bbox[:, 1::4] = ctr_x - 0.5 * w
    dst_bbox[:, 2::4] = ctr_y + 0.5 * h
    dst_bbox[:, 3::4] = ctr_x + 0.5 * w
    return dst_bbox

'''bbox2loc(): compute the offsets (t_y, t_x, t_h, t_w) that map source boxes (for example anchors) onto their ground-truth boxes.'''

def bbox2loc(src_bbox, dst_bbox):
    """Encode source boxes and target boxes as offsets and scales.

    With a source box given by its center and size `p_y, p_x, p_h, p_w` and a ground-truth box given by `g_y, g_x, g_h, g_w`, the offsets and scales `t_y, t_x, t_h, t_w` are:

    `t_y = \\frac{(g_y - p_y)} {p_h}`
    `t_x = \\frac{(g_x - p_x)} {p_w}`
    `t_h = \\log(\\frac{g_h} {p_h})`
    `t_w = \\log(\\frac{g_w} {p_w})`

    Args:
        src_bbox (array): source boxes, shape (R, 4), where R is the number of boxes, in the order `p_{ymin}, p_{xmin}, p_{ymax}, p_{xmax}`.
        dst_bbox (array): ground-truth boxes, shape (R, 4), in the order `g_{ymin}, g_{xmin}, g_{ymax}, g_{xmax}`.

    Returns: offsets of the source boxes relative to the ground-truth boxes, shape (R, 4), in the order `t_y, t_x, t_h, t_w`.
    """
    # (1) convert the source boxes from (y_min, x_min, y_max, x_max) to center and size
    height = src_bbox[:, 2] - src_bbox[:, 0]
    width = src_bbox[:, 3] - src_bbox[:, 1]
    ctr_y = src_bbox[:, 0] + 0.5 * height
    ctr_x = src_bbox[:, 1] + 0.5 * width
    # (2) convert the ground-truth boxes in the same way
    base_height = dst_bbox[:, 2] - dst_bbox[:, 0]
    base_width = dst_bbox[:, 3] - dst_bbox[:, 1]
    base_ctr_y = dst_bbox[:, 0] + 0.5 * base_height
    base_ctr_x = dst_bbox[:, 1] + 0.5 * base_width
    # (3) compute the offsets (t_y, t_x, t_h, t_w); eps guards against division by zero
    eps = xp.finfo(height.dtype).eps
    height = xp.maximum(height, eps)
    width = xp.maximum(width, eps)
    dy = (base_ctr_y - ctr_y) / height
    dx = (base_ctr_x - ctr_x) / width
    dh = xp.log(base_height / height)
    dw = xp.log(base_width / width)
    loc = xp.vstack((dy, dx, dh, dw)).transpose()
    return loc
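`bbox2loc` and `loc2bbox` are inverses of each other, which a single box makes easy to check by hand. The sketch below re-implements both formulas for one box in plain Python; `encode` and `decode` are hypothetical names used only for this illustration, and the box values are made up.

```python
import math


def to_center(box):
    """(y_min, x_min, y_max, x_max) -> (center_y, center_x, height, width)."""
    h, w = box[2] - box[0], box[3] - box[1]
    return box[0] + 0.5 * h, box[1] + 0.5 * w, h, w


def encode(src, dst):
    """Offsets (t_y, t_x, t_h, t_w) that map box `src` onto box `dst`."""
    py, px, ph, pw = to_center(src)
    gy, gx, gh, gw = to_center(dst)
    return ((gy - py) / ph, (gx - px) / pw, math.log(gh / ph), math.log(gw / pw))


def decode(src, loc):
    """Apply offsets `loc` to box `src` and return corner coordinates."""
    py, px, ph, pw = to_center(src)
    ty, tx, th, tw = loc
    cy, cx = ph * ty + py, pw * tx + px
    h, w = ph * math.exp(th), pw * math.exp(tw)
    return (cy - 0.5 * h, cx - 0.5 * w, cy + 0.5 * h, cx + 0.5 * w)


anchor = (0.0, 0.0, 10.0, 20.0)   # center (5, 10), height 10, width 20
gt = (2.0, 4.0, 12.0, 14.0)       # center (7, 9), height 10, width 10
loc = encode(anchor, gt)
# t_y = 2/10 = 0.2, t_x = -1/20 = -0.05, t_h = log(1) = 0, t_w = log(0.5)
restored = decode(anchor, loc)    # equals gt up to floating-point rounding
```

Note that the offsets are divided by the size of the source box, so the same pixel shift produces a smaller target for a larger anchor.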

'''bbox_iou(): compute the IoU matrix between two sets of bounding boxes.'''

def bbox_iou(bbox_a, bbox_b):
    """
    Args:
        bbox_a (array): shape (N, 4), where N is the number of boxes.
        bbox_b (array): shape (K, 4), where K is the number of boxes.

    Returns: array of shape (N, K).
    """
    if bbox_a.shape[1] != 4 or bbox_b.shape[1] != 4:
        raise IndexError
    # top-left and bottom-right corners of the intersections
    tl = xp.maximum(bbox_a[:, None, :2], bbox_b[:, :2])
    br = xp.minimum(bbox_a[:, None, 2:], bbox_b[:, 2:])
    # intersection area (zero when the boxes do not overlap) and the area of each box
    area_i = xp.prod(br - tl, axis=2) * (tl < br).all(axis=2)
    area_a = xp.prod(bbox_a[:, 2:] - bbox_a[:, :2], axis=1)
    area_b = xp.prod(bbox_b[:, 2:] - bbox_b[:, :2], axis=1)
    # IoU matrix
    return area_i / (area_a[:, None] + area_b - area_i)

def __test():

pass

if __name__ == '__main__':

__test()

'''generate_anchor_base(): build the set of base anchors (y_min, x_min, y_max, x_max). Sliding this set over the feature map produces the anchor boxes.'''

def generate_anchor_base(base_size=16, ratios=[0.5, 1, 2], anchor_scales=[8, 16, 32]):
    """Generate anchors that are scaled and reshaped by the given scales and aspect ratios.

    For example, with scale `8` and ratio `0.25`, the width and height of the base window are stretched by `8`; to get the ratio, the height is then halved and the width doubled.

    Args:
        base_size (number): width and height of the reference window.
        ratios (list of floats): height-to-width ratios of the anchors.
        anchor_scales (list of numbers): areas of the anchors. Each area is the square of an element of `anchor_scales` times the area of the reference window.

    Returns: ~numpy.ndarray of shape (R, 4); each anchor is `(y_{min}, x_{min}, y_{max}, x_{max})`.
    """
    # (1) center of the reference window
    py = base_size / 2.  # 8
    px = base_size / 2.  # 8
    # (2) one row per anchor at each location (the size of the base set)
    anchor_base = np.zeros((len(ratios) * len(anchor_scales), 4), dtype=np.float32)  # shape (9, 4) with the defaults
    # (3) coordinates of each anchor; all anchors are centered on (py, px)
    for i in six.moves.range(len(ratios)):
        for j in six.moves.range(len(anchor_scales)):
            # Height and width of the anchor. For ratio 0.5 and scale 8:
            # h = 16 * 8 * sqrt(0.5) = 90.5, w = 16 * 8 * sqrt(2) = 181.0 (rounded)
            h = base_size * anchor_scales[j] * np.sqrt(ratios[i])
            w = base_size * anchor_scales[j] * np.sqrt(1. / ratios[i])
            # anchor coordinates (y_min, x_min, y_max, x_max)
            index = i * len(anchor_scales) + j
            anchor_base[index, 0] = py - h / 2.
            anchor_base[index, 1] = px - w / 2.
            anchor_base[index, 2] = py + h / 2.
            anchor_base[index, 3] = px + w / 2.
    return anchor_base

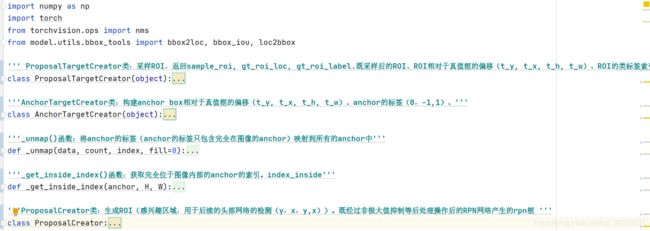
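The arithmetic behind the nine base anchors can be checked with a few lines of plain Python. This sketch mirrors the formulas of `generate_anchor_base` with the `math` module instead of NumPy; the function name `anchor_base_sizes` is hypothetical.

```python
import math


def anchor_base_sizes(base_size=16, ratios=(0.5, 1, 2), anchor_scales=(8, 16, 32)):
    """Return (y_min, x_min, y_max, x_max) for every ratio/scale pair."""
    cy = cx = base_size / 2.0           # all anchors share this center
    anchors = []
    for r in ratios:                    # same loop order as generate_anchor_base
        for s in anchor_scales:
            h = base_size * s * math.sqrt(r)
            w = base_size * s * math.sqrt(1.0 / r)
            anchors.append((cy - h / 2, cx - w / 2, cy + h / 2, cx + w / 2))
    return anchors


anchors = anchor_base_sizes()
y1, x1, y2, x2 = anchors[0]             # ratio 0.5, scale 8
print(round(y2 - y1, 2), round(x2 - x1, 2))   # 90.51 181.02
print(anchors[3])                       # ratio 1, scale 8: (-56.0, -56.0, 72.0, 72.0)
```

For a fixed scale `s`, the area `h * w` is always `(base_size * s) ** 2` because the two square roots cancel; the ratio only redistributes that area between height and width.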

2.5 creator_tools.py

import numpy as np

import torch

from torchvision.ops import nms

from model.utils.bbox_tools import bbox2loc, bbox_iou, loc2bbox

'''ProposalTargetCreator: samples RoIs and returns sample_roi, gt_roi_loc, gt_roi_label, that is, the sampled RoIs, their offsets (t_y, t_x, t_h, t_w) relative to the matched ground-truth boxes, and their class labels.'''

class ProposalTargetCreator(object):
    """Assigns ground-truth boxes to RoIs.

    Args:
        n_sample (int): number of RoIs to sample.
        pos_ratio (float): fraction of the sampled RoIs that are labeled as foreground.
        pos_iou_thresh (float): IoU threshold at or above which an RoI is treated as foreground.
        neg_iou_thresh_hi (float): an RoI is treated as background if its IoU is in [`neg_iou_thresh_lo`, `neg_iou_thresh_hi`).
        neg_iou_thresh_lo (float): lower bound of the same interval.
    """

    def __init__(self, n_sample=128, pos_ratio=0.25, pos_iou_thresh=0.5, neg_iou_thresh_hi=0.5, neg_iou_thresh_lo=0.0):
        # number of RoIs kept after sampling, and the thresholds
        self.n_sample = n_sample
        self.pos_ratio = pos_ratio
        self.pos_iou_thresh = pos_iou_thresh
        self.neg_iou_thresh_hi = neg_iou_thresh_hi
        self.neg_iou_thresh_lo = neg_iou_thresh_lo  # NOTE: default 0.1 in py-faster-rcnn

    def __call__(self, roi, bbox, label, loc_normalize_mean=(0., 0., 0., 0.), loc_normalize_std=(0.1, 0.1, 0.2, 0.2)):
        """Assigns ground truth to sampled proposals.

        Args:
            roi (array): RoIs, shape (R, 4).
            bbox (array): ground-truth box coordinates, shape (R', 4).
            label (array): ground-truth labels, shape (R',), with values in [0, L - 1], where L is the number of foreground classes.
            loc_normalize_mean (tuple of four floats): mean used to normalize the box offsets.
            loc_normalize_std (tuple of four floats): standard deviation used to normalize the box offsets.

        Returns: (array, array, array):
            sample_roi: the sampled RoIs, shape (S, 4), where S is the number of sampled RoIs (at most n_sample).
            gt_roi_loc: offsets and scales of the sampled RoIs relative to their ground-truth boxes, shape (S, 4).
            gt_roi_label: labels of the sampled RoIs, shape (S,), with values in [0, L]; 0 is the background.
        """
        # (1) IoU matrix between the RoIs and the ground-truth boxes
        n_bbox, _ = bbox.shape  # number of ground-truth boxes
        roi = np.concatenate((roi, bbox), axis=0)  # the ground-truth boxes are added to the candidate RoIs
        pos_roi_per_image = np.round(self.n_sample * self.pos_ratio)
        iou = bbox_iou(roi, bbox)
        # (2) for each RoI: index of the matched ground-truth box, the IoU with it, and the class label
        gt_assignment = iou.argmax(axis=1)  # index of the row maximum
        max_iou = iou.max(axis=1)  # row maximum
        # Offset range of classes from [0, n_fg_class - 1] to [1, n_fg_class].
        gt_roi_label = label[gt_assignment] + 1  # 0 is the background
        # (3) sample foreground RoIs: IoU >= pos_iou_thresh, at most pos_roi_per_image of them
        pos_index = np.where(max_iou >= self.pos_iou_thresh)[0]
        pos_roi_per_this_image = int(min(pos_roi_per_image, pos_index.size))
        if pos_index.size > 0:
            pos_index = np.random.choice(pos_index, size=pos_roi_per_this_image, replace=False)
        # (4) sample background RoIs: IoU in [neg_iou_thresh_lo, neg_iou_thresh_hi), filling the remaining slots
        neg_index = np.where((max_iou < self.neg_iou_thresh_hi) & (max_iou >= self.neg_iou_thresh_lo))[0]
        neg_roi_per_this_image = self.n_sample - pos_roi_per_this_image
        neg_roi_per_this_image = int(min(neg_roi_per_this_image, neg_index.size))
        if neg_index.size > 0:
            neg_index = np.random.choice(neg_index, size=neg_roi_per_this_image, replace=False)
        # (5) indices of all sampled RoIs: foreground first, then background
        keep_index = np.append(pos_index, neg_index)
        # (6) coordinates and labels of the sampled RoIs
        gt_roi_label = gt_roi_label[keep_index]
        gt_roi_label[pos_roi_per_this_image:] = 0  # background RoIs get label 0
        sample_roi = roi[keep_index]
        # (7) Compute offsets and scales to match sampled RoIs to the GTs, then normalize them.
        gt_roi_loc = bbox2loc(sample_roi, bbox[gt_assignment[keep_index]])
        gt_roi_loc = ((gt_roi_loc - np.array(loc_normalize_mean, np.float32)) / np.array(loc_normalize_std, np.float32))
        return sample_roi, gt_roi_loc, gt_roi_label
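The interplay of `n_sample`, `pos_ratio`, and the two IoU intervals determines how many foreground and background RoIs survive. The following sketch reproduces only that bookkeeping with the standard `random` module; `sample_rois` is a hypothetical helper, the IoU values are made up, and a seeded `random.Random` stands in for `np.random.choice`.

```python
import random


def sample_rois(max_iou, n_sample=128, pos_ratio=0.25,
                pos_iou_thresh=0.5, neg_hi=0.5, neg_lo=0.0, rng=None):
    """Return (pos_index, neg_index) sampled from per-RoI maximum IoUs."""
    if rng is None:
        rng = random.Random(0)
    # Foreground candidates: IoU at or above the positive threshold.
    pos = [i for i, v in enumerate(max_iou) if v >= pos_iou_thresh]
    # Background candidates: IoU in [neg_lo, neg_hi).
    neg = [i for i, v in enumerate(max_iou) if neg_lo <= v < neg_hi]
    n_pos = min(int(round(n_sample * pos_ratio)), len(pos))
    n_neg = min(n_sample - n_pos, len(neg))   # background fills what is left
    return rng.sample(pos, n_pos), rng.sample(neg, n_neg)


# 10 RoIs overlap a ground-truth box well, 290 do not.
max_iou = [0.8] * 10 + [0.2] * 290
pos_index, neg_index = sample_rois(max_iou)
print(len(pos_index), len(neg_index))   # 10 118
```

The foreground quota is 32 (a quarter of 128), but only 10 candidates exist, so the background quota grows to 118 and the total stays at 128.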

'''AnchorTargetCreator: computes, for each anchor box, the offsets (t_y, t_x, t_h, t_w) relative to its matched ground-truth box and a label (1, 0, or -1).'''

class AnchorTargetCreator(object):
    """Assigns ground-truth bounding boxes to anchors, for training the RPN.

    The offsets and scales that match anchors to ground-truth boxes are computed with the encoding scheme of `model.utils.bbox_tools.bbox2loc`.
    """

    def __init__(self, n_sample=256, pos_iou_thresh=0.7, neg_iou_thresh=0.3, pos_ratio=0.5):
        '''
        n_sample (int): The number of regions to produce.
        pos_iou_thresh (float): anchors with an IoU at or above this threshold are positive.
        neg_iou_thresh (float): anchors with an IoU below this threshold are negative.
        pos_ratio (float): fraction of positive anchors among the sampled anchors.
        '''
        self.n_sample = n_sample
        self.pos_iou_thresh = pos_iou_thresh
        self.neg_iou_thresh = neg_iou_thresh
        self.pos_ratio = pos_ratio

    def __call__(self, bbox, anchor, img_size):
        """Assign ground-truth boxes to a sampled subset of the anchors.

        Args:
            bbox (array): ground-truth box coordinates, shape (R, 4), where `R` is the number of ground-truth boxes.
            anchor (array): coordinates of all anchor boxes in the image, shape (S, 4), where `S` is the number of anchors.
            img_size (tuple of ints): (height, width) of the resized image.

        Returns: (array, array):
            loc: offsets and scales of the anchor boxes relative to the ground-truth boxes, shape (S, 4).
            label: anchor labels (1=positive, 0=negative, -1=ignore), shape (S,).
        """
        # (1) size of the resized image and the number of anchor boxes
        img_H, img_W = img_size
        n_anchor = len(anchor)
        # (2) keep only the anchors that lie completely inside the image
        inside_index = _get_inside_index(anchor, img_H, img_W)
        anchor = anchor[inside_index]
        # (3) for each anchor: index of the ground-truth box with the highest IoU, and the label (1=positive, 0=negative, -1=ignore)
        argmax_ious, label = self._create_label(inside_index, anchor, bbox)
        # (4) offsets (t_y, t_x, t_h, t_w) relative to the matched ground-truth boxes; both sets of boxes are in (y, x, y, x) format
        loc = bbox2loc(anchor, bbox[argmax_ious])
        # (5) map labels and offsets back to the full set of anchors: they were computed only for
        # anchors inside the image, and anchors that reach outside it get label -1 and offset 0
        label = _unmap(label, n_anchor, inside_index, fill=-1)
        loc = _unmap(loc, n_anchor, inside_index, fill=0)
        return loc, label

'''_create_label()函数:返回每个anchor box最大iou对应的真实框索引、每个anchor box的标签数组label(1=positive, 0=negative, -1=ignore)、'''

def _create_label(self, inside_index, anchor, bbox):

'''

inside_index:完全位于图像内部的anchor box的索引

anchor:完全位于图像内部的anchor box(anchor的坐标)

bbox:真值框

'''

'''(1)创建anchor的lable数组:数组初始值为-1'''

label = np.empty((len(inside_index),), dtype=np.int32)

label.fill(-1)

'''(2)每个anchor box最大iou对应的真实框索引(argmax_ious)、每个anchor box最大iou值(max_ious)、每个真值框最大iou的anchor box索引'''

argmax_ious, max_ious, gt_argmax_ious = self._calc_ious(anchor, bbox, inside_index)

'''(3)给anchor box设置标签(1=positive, 0=negative, -1=ignore):根据max_iou是否高于阈值'''

# 负标签:首先分配负标签,以便正标签可以掩盖它们

label[max_ious < self.neg_iou_thresh] = 0

#正标签:

#1.最大iou值对应的anchor设为正anchor

label[gt_argmax_ious] = 1

# 2.iou值高于阈值的anchor设为正anchor

label[max_ious >= self.pos_iou_thresh] = 1

'''(4)采样正anchor box:没有被采样的正anchor box标签label相应位置设置为-1'''

#需要保留的正anchor的数量

n_pos = int(self.pos_ratio * self.n_sample)

#正anchor的索引

pos_index = np.where(label == 1)[0]

if len(pos_index) > n_pos:

disable_index = np.random.choice(pos_index, size=(len(pos_index) - n_pos), replace=False)

label[disable_index] = -1

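'''(5) Subsample the negative anchor boxes: negatives that are not sampled have their label reset to -1'''

# Number of negative anchors to keep (fills the rest of the sample)

n_neg = self.n_sample - np.sum(label == 1)

# Indices of the negative anchors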

neg_index = np.where(label == 0)[0]

if len(neg_index) > n_neg:

disable_index = np.random.choice(

neg_index, size=(len(neg_index) - n_neg), replace=False)

label[disable_index] = -1

'''Returns: for each anchor box, the index of its highest-IoU ground-truth box, and the label array marking anchors as positive, negative or ignored'''

return argmax_ious, label

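'''_calc_ious(): returns, for each anchor box, the index of its highest-IoU ground-truth box (argmax_ious) and that IoU value (max_ious), plus the indices of the anchors that achieve the highest IoU for some ground-truth box (gt_argmax_ious)'''

def _calc_ious(self, anchor, bbox, inside_index):

'''Compute the IoU statistics used for label assignment.'''

'''1. IoU matrix between anchors and ground-truth boxes, shape (number of anchors, number of ground-truth boxes)'''

ious = bbox_iou(anchor, bbox)

'''2. For each anchor box, the index of the ground-truth box with the highest IoU'''

argmax_ious = ious.argmax(axis=1)

'''3. For each anchor box, its highest IoU value'''

max_ious = ious[np.arange(len(inside_index)), argmax_ious]  # IoU between each anchor and its best-matching ground-truth box

'''4. For each ground-truth box, the anchor box(es) with the highest IoU'''

gt_argmax_ious = ious.argmax(axis=0)

gt_max_ious = ious[gt_argmax_ious, np.arange(ious.shape[1])]

gt_argmax_ious = np.where(ious == gt_max_ious)[0]  # indices of every anchor that ties for the highest IoU with some ground-truth box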

return argmax_ious, max_ious, gt_argmax_ious
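The labelling rule built on these IoU statistics can be tried on a toy example. The sketch below is self-contained: `iou_matrix` and `assign_labels` are hypothetical helpers written for illustration (the first stands in for `bbox_iou`), and the thresholds 0.7 and 0.3 are assumed values, since the constructor defaults are not shown in this section. Subsampling is left out.

```python
import numpy as np

def iou_matrix(a, b):
    """IoU of every box in a (N, 4) against every box in b (K, 4); boxes are (y1, x1, y2, x2)."""
    tl = np.maximum(a[:, None, :2], b[None, :, :2])   # top-left of each intersection
    br = np.minimum(a[:, None, 2:], b[None, :, 2:])   # bottom-right of each intersection
    inter = np.prod(br - tl, axis=2) * (tl < br).all(axis=2)  # zero when boxes do not overlap
    area_a = np.prod(a[:, 2:] - a[:, :2], axis=1)
    area_b = np.prod(b[:, 2:] - b[:, :2], axis=1)
    return inter / (area_a[:, None] + area_b[None, :] - inter)

def assign_labels(ious, pos_iou_thresh=0.7, neg_iou_thresh=0.3):
    """Label anchors 1 / 0 / -1 following the order used in _create_label."""
    label = np.full(ious.shape[0], -1, dtype=np.int32)
    argmax_ious = ious.argmax(axis=1)
    max_ious = ious[np.arange(ious.shape[0]), argmax_ious]
    gt_argmax_ious = np.where(ious == ious.max(axis=0))[0]
    label[max_ious < neg_iou_thresh] = 0    # negatives first
    label[gt_argmax_ious] = 1               # best anchor per ground-truth box
    label[max_ious >= pos_iou_thresh] = 1   # anchors above the positive threshold
    return argmax_ious, label

anchors = np.array([[0, 0, 10, 10],     # identical to gt 0
                    [20, 20, 30, 30],   # overlaps nothing
                    [0, 5, 10, 15],     # IoU 1/3 with gt 0
                    [40, 42, 50, 52]],  # IoU 2/3 with gt 1
                   dtype=np.float32)
gt = np.array([[0, 0, 10, 10], [40, 40, 50, 50]], dtype=np.float32)

argmax_ious, label = assign_labels(iou_matrix(anchors, gt))
print(label)
```

The last anchor never reaches the positive threshold, yet it is labelled positive because it is the best match for the second ground-truth box. The third anchor falls between the two thresholds and is ignored.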

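'''_unmap(): maps data computed only for the anchors entirely inside the image (labels or offsets) back onto the full set of anchors'''

def _unmap(data, count, index, fill=0):

# Unmap a subset of items (data) back to the original set of items (of size count)

'''Args: data for the anchors entirely inside the image, total number of anchors, indices of the anchors entirely inside the image, and the fill value used for all other anchors'''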

if len(data.shape) == 1:

ret = np.empty((count,), dtype=data.dtype)

ret.fill(fill)

ret[index] = data

else:

ret = np.empty((count,) + data.shape[1:], dtype=data.dtype)

ret.fill(fill)

ret[index, :] = data

return ret
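A short usage sketch may make the unmapping concrete. `unmap` below is a compact, hypothetical rewrite using `np.full`, intended to behave like `_unmap` for both 1-D labels and 2-D offsets; the sample arrays are made up.

```python
import numpy as np

def unmap(data, count, index, fill=0):
    """Scatter data (rows for a subset of anchors) into an array covering all count anchors."""
    ret = np.full((count,) + data.shape[1:], fill, dtype=data.dtype)
    ret[index] = data
    return ret

inside_index = np.array([1, 3])                       # anchors 1 and 3 are inside the image
label_inside = np.array([1, 0], dtype=np.int32)       # their labels
loc_inside = np.array([[0.1, 0.2, 0.3, 0.4],
                       [0.5, 0.6, 0.7, 0.8]], dtype=np.float32)

label_all = unmap(label_inside, 5, inside_index, fill=-1)  # other anchors become -1 (ignore)
loc_all = unmap(loc_inside, 5, inside_index, fill=0)       # other anchors get zero offsets
```

With five anchors in total, anchors 0, 2 and 4 end up with label -1 and all-zero offsets, so they contribute nothing to the RPN loss.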

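'''_get_inside_index(): returns the indices of the anchor boxes that lie entirely inside the image (index_inside)'''

def _get_inside_index(anchor, H, W):

# Compute the indices of the anchors that lie entirely inside an image of size (H, W).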

index_inside = np.where(

(anchor[:, 0] >= 0) &

(anchor[:, 1] >= 0) &

(anchor[:, 2] <= H) &

(anchor[:, 3] <= W)

)[0]

return index_inside
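As a quick check of the inside test, the sketch below applies the same four comparisons to a few made-up anchors on a 600 x 800 image. `get_inside_index` is a local copy written for this example; boxes are assumed to be (y1, x1, y2, x2).

```python
import numpy as np

def get_inside_index(anchor, H, W):
    """Indices of anchors (y1, x1, y2, x2) that lie entirely inside an H x W image."""
    return np.where(
        (anchor[:, 0] >= 0) &
        (anchor[:, 1] >= 0) &
        (anchor[:, 2] <= H) &
        (anchor[:, 3] <= W)
    )[0]

anchor = np.array([[0, 0, 16, 16],          # inside
                   [-8, 0, 8, 16],          # sticks out at the top
                   [584, 784, 600, 800],    # touches the bottom-right corner, still inside
                   [590, 790, 606, 806]],   # sticks out at the bottom-right
                  dtype=np.float32)

inside_index = get_inside_index(anchor, 600, 800)
```

Boxes that touch the border exactly are kept because the comparisons are non-strict.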

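'''ProposalCreator class: turns the RPN outputs into RoIs (regions of interest, in (y, x, y, x) form) for the detection head, i.e. the RPN boxes that remain after post-processing such as non-maximum suppression'''

class ProposalCreator:

"""Generates proposal boxes for a feature map.

`__call__` outputs object detection proposals (RoIs) by applying the predicted offsets to the anchors.

This class takes parameters that control how many bounding boxes are passed to NMS and how many are kept afterwards. If a parameter is negative, all the supplied boxes are used or all the boxes returned by NMS are kept.

"""

'''__init__(): stores the NMS threshold and the related box-count and size parameters'''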

def __init__(self,parent_model,nms_thresh=0.7, n_train_pre_nms=12000, n_train_post_nms=2000,n_test_pre_nms=6000,n_test_post_nms=300,min_size=16):

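'''

parent_model: the model that owns this object; its `training` flag selects between the training and test settings.

nms_thresh (float): IoU threshold used by NMS.

n_train_pre_nms (int): in training mode, the number of top-scoring bounding boxes kept before NMS.

n_train_post_nms (int): in training mode, the number of top-scoring bounding boxes kept after NMS.

n_test_pre_nms (int): in test mode, the number of top-scoring bounding boxes kept before NMS.

n_test_post_nms (int): in test mode, the number of top-scoring bounding boxes kept after NMS.

force_cpu_nms (bool): `True` applies NMS on the CPU; `False` picks the NMS mode from the input type. (This argument does not appear in the `__init__` signature above.)

min_size (int): size threshold below which bounding boxes are discarded.

'''

'''(1) Parent model and NMS threshold'''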

self.parent_model = parent_model

self.nms_thresh = nms_thresh

'''(2) Number of bounding boxes kept before and after NMS in each mode'''

self.n_train_pre_nms = n_train_pre_nms

self.n_train_post_nms = n_train_post_nms

self.n_test_pre_nms = n_test_pre_nms

self.n_test_post_nms = n_test_post_nms

self.min_size = min_size

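'''__call__(): returns the RoIs fed to the detection head; they are the RPN predictions that survive filtering'''

def __call__(self, loc, score , anchor, img_size, scale=1.):  # RPN location predictions, RPN foreground scores, anchor boxes, size of the resized image, image scale factor

"""

Args:

loc (array): predicted offsets relative to the anchor boxes, shape `(R, 4)`, as (t_y, t_x, t_h, t_w). R is the total number of anchors = feature map height * width * anchors per location (9).

score (array): predicted foreground probability of each anchor, shape `(R,)`.

anchor (array): anchor box coordinates, shape `(R, 4)`.

img_size (tuple of ints): (height, width) of the resized image.

scale (float): scale factor applied to the image after it was read from file.

Returns: array of proposal box coordinates, shape `(S, 4)`. `S` is at most n_test_post_nms in test mode and at most n_train_post_nms in training mode.

"""

# In test mode, i.e. after faster_rcnn.eval(), parent_model.training is False

'''(1) Pick the settings for the current mode: how many boxes to keep before and after NMS'''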

if self.parent_model.training:

n_pre_nms = self.n_train_pre_nms

n_post_nms = self.n_train_post_nms

else:

n_pre_nms = self.n_test_pre_nms

n_post_nms = self.n_test_post_nms

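'''(2) Decode the predicted boxes into (y, x, y, x) coordinates: the RPN predicts offsets relative to the anchor boxes, so they are decoded and then clipped to the image boundary'''

roi = loc2bbox(anchor, loc)  # loc holds the RPN-predicted offsets (t_y, t_x, t_h, t_w) relative to the anchor boxes

# Clip the decoded box coordinates to the image

# np.clip(array, a_min, a_max) limits every element to [a_min, a_max]: values above a_max become a_max, values below a_min become a_min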

roi[:, slice(0, 4, 2)] = np.clip(roi[:, slice(0, 4, 2)], 0, img_size[0])

roi[:, slice(1, 4, 2)] = np.clip(roi[:, slice(1, 4, 2)], 0, img_size[1])

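'''(3) Drop RPN boxes whose height or width is below the threshold, keeping the surviving boxes (roi) and their foreground scores (score)'''

# Remove predicted boxes whose height or width is below the threshold. scale is the factor by which the original image was resized.

min_size = self.min_size * scale

# Heights and widths of the predicted boxes: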

hs = roi[:, 2] - roi[:, 0]

ws = roi[:, 3] - roi[:, 1]

keep = np.where((hs >= min_size) & (ws >= min_size))[0]

roi = roi[keep, :]

score = score[keep]

# Sort all (proposal, score) pairs by score from highest to lowest.

# Take top pre_nms_topN (e.g. 6000).

'''(4) Before NMS, keep the n_pre_nms RPN boxes with the highest foreground scores'''

# Sort by foreground score in descending order and keep the first n_pre_nms boxes

order = score.ravel().argsort()[::-1]

if n_pre_nms > 0:

order = order[:n_pre_nms]

roi = roi[order, :]

score = score[order]

# Apply nms (e.g. threshold = 0.7).

# Take after_nms_topN (e.g. 300).

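'''(5) Non-maximum suppression'''

# torchvision's nms documents boxes as (x1, y1, x2, y2); IoU is unchanged when both axes are swapped consistently, so (y, x, y, x) boxes give the same result. Note that .cuda() requires a GPU.

keep = nms( torch.from_numpy(roi).cuda(),torch.from_numpy(score).cuda(),self.nms_thresh)

'''(6) Keep at most n_post_nms RPN boxes after NMS: these are the final RoIs'''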

if n_post_nms > 0:

keep = keep[:n_post_nms]

roi = roi[keep.cpu().numpy()]

return roi  # RoIs passed to the detection head, in (y, x, y, x) form
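To see what the suppression step does without a GPU, here is a minimal pure-NumPy sketch of greedy NMS. It is a stand-in for `torchvision.ops.nms`, not the routine the class calls; `nms_numpy` and the sample boxes are hypothetical, and boxes are assumed to be (y1, x1, y2, x2).

```python
import numpy as np

def nms_numpy(boxes, scores, iou_thresh):
    """Greedy NMS: returns indices of kept boxes, highest score first."""
    order = scores.argsort()[::-1]                  # box indices sorted by descending score
    areas = (boxes[:, 2] - boxes[:, 0]) * (boxes[:, 3] - boxes[:, 1])
    keep = []
    while order.size > 0:
        i = order[0]                                # best remaining box
        keep.append(int(i))
        rest = order[1:]
        tl = np.maximum(boxes[i, :2], boxes[rest, :2])
        br = np.minimum(boxes[i, 2:], boxes[rest, 2:])
        wh = np.clip(br - tl, 0, None)              # zero size when there is no overlap
        inter = wh[:, 0] * wh[:, 1]
        iou = inter / (areas[i] + areas[rest] - inter)
        order = rest[iou <= iou_thresh]             # drop boxes overlapping the kept one too much
    return np.array(keep, dtype=np.int64)

roi = np.array([[0, 0, 10, 10],
                [0, 1, 10, 11],      # IoU 90/110 with the first box
                [50, 50, 60, 60]],
               dtype=np.float32)
score = np.array([0.9, 0.8, 0.7], dtype=np.float32)

keep = nms_numpy(roi, score, iou_thresh=0.7)
roi_kept = roi[keep]
```

The second box overlaps the first with an IoU of about 0.82, above the 0.7 threshold, so only the first and third boxes survive. Truncating `keep` to the first `n_post_nms` entries then gives the final RoIs, as in step (6).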