No-Nonsense PyTorch: TensorBoard Explained

TensorBoard

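- I. Introduction to TensorBoard

- II. Getting to Know SummaryWriter

- III. Image Visualization

- IV. Visualizing Convolution Kernels, Feature Maps, and the Data Flow

  - 4.1 Kernel Visualization

  - 4.2 Feature Map Visualization

  - 4.3 Data Flow Visualization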

I. Introduction to TensorBoard

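TensorBoard: a powerful visualization tool from TensorFlow, which PyTorch can also write to through torch.utils.tensorboard

How it works

- Record the data you want to visualize (i.e. the quantities you want to monitor) in the Python script: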

import numpy as np

from torch.utils.tensorboard import SummaryWriter

# SummaryWriter最根本的类,创建一个writer

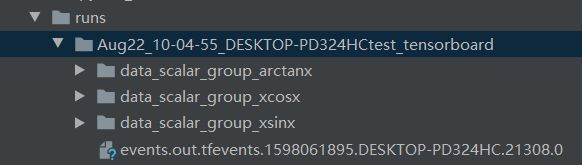

writer = SummaryWriter(comment='test_tensorboard')

# writer 记录需要可视化的数据

for x in range(100):

writer.add_scalar('y=2x', x * 2, x)

# 记录一个标量,参数:名称、Y轴、X轴

writer.add_scalar('y=pow(2, x)', 2 ** x, x)

writer.add_scalars('data/scalar_group', {"xsinx": x * np.sin(x),

"xcosx": x * np.cos(x),

"arctanx": np.arctan(x)}, x)

writer.close()

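- Read the event files with the tensorboard tool in a terminal: change into the folder that contains runs, run tensorboard --logdir=./runs, and the command prints the URL and port to open.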

- TensorBoard renders the visualizations in the browser: open http://localhost:6006/, the default address, to view them.
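The write, event file, read cycle above can also be checked without the web UI. The following is a minimal sketch, assuming the tensorboard package is installed: it logs a few scalars into a temporary folder (instead of ./runs) and reads them back with TensorBoard's event-file reader `EventAccumulator`. The helper name `log_and_read` is hypothetical.

```python
import tempfile

from tensorboard.backend.event_processing.event_accumulator import EventAccumulator
from torch.utils.tensorboard import SummaryWriter


def log_and_read(log_dir, n_steps=5):
    """Write y = 2x for x in [0, n_steps) and read it back from the event file."""
    writer = SummaryWriter(log_dir=log_dir)
    for x in range(n_steps):
        writer.add_scalar("y=2x", x * 2, x)  # tag, y value, x value (global_step)
    writer.close()                           # flush everything to disk

    acc = EventAccumulator(log_dir)          # reader for the event files in log_dir
    acc.Reload()                             # parse the files now
    return [(e.step, e.value) for e in acc.Scalars("y=2x")]


if __name__ == "__main__":
    print(log_and_read(tempfile.mkdtemp()))
```

Pointing `tensorboard --logdir` at the same temporary folder would show the same curve in the browser.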

Installation note

After pip install tensorboard, you may run into this error:

ModuleNotFoundError: No module named 'past'

Fix it with pip install future (the future package provides the past module).

II. Getting to Know SummaryWriter

SummaryWriter

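Purpose: provides a high-level interface for creating event files

Main attributes:

• log_dir: output folder for the event files

• comment: suffix added to the folder name when log_dir is not specified

• filename_suffix: suffix added to the event file name

If log_dir is set, comment has no effect.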

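```python
from torch.utils.tensorboard import SummaryWriter

log_dir = "./train_log/test_log_dir"
# log_dir is set, so comment='_scalars' is ignored; the event file name ends with "12345678"
writer = SummaryWriter(log_dir=log_dir, comment='_scalars', filename_suffix="12345678")
# Without log_dir, the folder would be runs/<date-time>_<hostname>_scalars:
# writer = SummaryWriter(comment='_scalars', filename_suffix="12345678")
writer.close()
```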
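A small sketch to check these rules on disk, assuming a temporary folder in place of ./train_log/test_log_dir; the helper `make_writer_and_list` is hypothetical. When log_dir is given, `get_logdir()` returns it unchanged (no comment appended), and the event file name ends with filename_suffix.

```python
import os
import tempfile

from torch.utils.tensorboard import SummaryWriter


def make_writer_and_list(log_dir, comment="_scalars", suffix="12345678"):
    """Create a writer with an explicit log_dir; return (logdir, event file names)."""
    writer = SummaryWriter(log_dir=log_dir, comment=comment, filename_suffix=suffix)
    writer.add_scalar("dummy", 1.0, 0)
    writer.close()
    files = sorted(f for f in os.listdir(writer.get_logdir()) if "tfevents" in f)
    return writer.get_logdir(), files


if __name__ == "__main__":
    target = os.path.join(tempfile.mkdtemp(), "test_log_dir")
    logdir, files = make_writer_and_list(target)
    print(logdir == target)  # comment is not appended when log_dir is given
    print(files)             # one events.out.tfevents.* file whose name ends with 12345678
```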

- add_scalar()

Purpose: record a scalar

• tag: label of the chart, its unique identifier

• scalar_value: the scalar to record

• global_step: the x-axis value (step)

- add_scalars()

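Purpose: plot several curves in one chart

• main_tag: tag of the chart

• tag_scalar_dict: dict whose keys are the tags of the individual curves and whose values are their values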
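The difference between the two calls is visible in the log folder. This sketch (hypothetical helper `log_curves`, temporary folder) logs one curve with add_scalar and two curves with add_scalars. In the current torch.utils.tensorboard implementation, add_scalars writes each curve into its own sub-folder named after main_tag (with / replaced by _) plus the dict key, which is why the curves appear as separate runs in the UI; treat that naming as an implementation detail, not a guaranteed API.

```python
import os
import tempfile

import numpy as np
from torch.utils.tensorboard import SummaryWriter


def log_curves(log_dir, n_steps=10):
    """Log one curve with add_scalar and two curves in one chart with add_scalars."""
    writer = SummaryWriter(log_dir=log_dir)
    for step in range(n_steps):
        # add_scalar: one tag -> one curve
        writer.add_scalar("y=2x", step * 2, step)
        # add_scalars: main_tag + {curve name: value} -> several curves in one chart
        writer.add_scalars("data/scalar_group",
                           {"xsinx": step * np.sin(step), "xcosx": step * np.cos(step)},
                           step)
    writer.close()  # also closes the per-curve writers created by add_scalars
    # Sub-folders created by add_scalars, one per curve
    return sorted(d for d in os.listdir(log_dir)
                  if os.path.isdir(os.path.join(log_dir, d)))


if __name__ == "__main__":
    print(log_curves(tempfile.mkdtemp()))
```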

- add_histogram()

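Purpose: record a histogram and a multi-quantile line chart (shown in the Histograms and Distributions tabs)

• tag: label of the chart, its unique identifier

• values: the values to compute the statistics over

• global_step: the step; in the histogram view the steps are stacked along the y (depth) axis

• bins: how the bins are chosen ("tensorflow" by default; NumPy options such as "auto" or "fd" also work)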

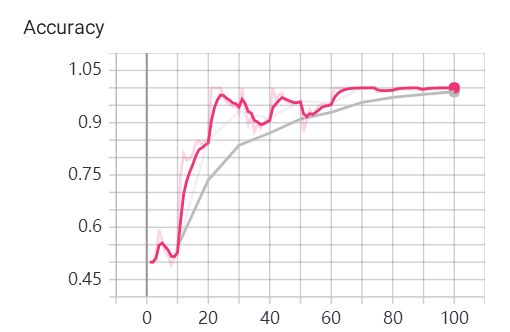

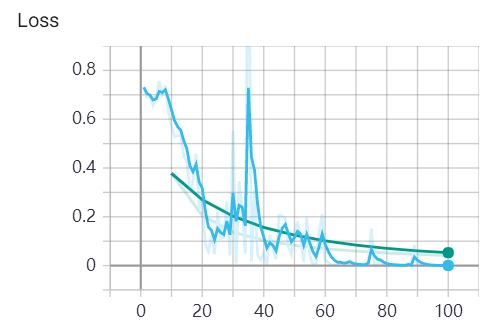
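A sketch of add_histogram with two binning choices, assuming a temporary log folder and the hypothetical helper `log_histograms`. It logs a normal distribution whose mean moves right by 1 at each step and reads the stored histograms back; `size_guidance={"histograms": 0}` asks `EventAccumulator` to keep every histogram event, since by default it keeps only a small sample per tag.

```python
import tempfile

import torch
from tensorboard.backend.event_processing.event_accumulator import EventAccumulator
from torch.utils.tensorboard import SummaryWriter


def log_histograms(log_dir, n_steps=3):
    """Log a drifting normal distribution; return (step, stored histogram) pairs."""
    torch.manual_seed(0)
    writer = SummaryWriter(log_dir=log_dir)
    for step in range(n_steps):
        values = torch.randn(1000) + step  # the distribution drifts right over the steps
        writer.add_histogram("weights", values, step, bins="tensorflow")
        writer.add_histogram("weights_auto", values, step, bins="auto")  # NumPy-style bins
    writer.close()

    # size_guidance 0 = keep every histogram event for each tag
    acc = EventAccumulator(log_dir, size_guidance={"histograms": 0})
    acc.Reload()
    return [(e.step, e.histogram_value) for e in acc.Histograms("weights")]


if __name__ == "__main__":
    for step, h in log_histograms(tempfile.mkdtemp()):
        print(step, h.num, round(h.min, 2), round(h.max, 2))
```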

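Recording accuracy and loss, plus parameter values and gradients (an excerpt from a training loop; net, loss, predicted, labels, iter_count and epoch come from the surrounding training code):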

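```python
# Inside the iteration loop, after computing outputs, loss and predicted:
total += labels.size(0)
correct += (predicted == labels).sum().item()  # .item() also works for GPU tensors

# Record the data; it is saved to the event file
writer.add_scalars("Loss", {"Train": loss.item()}, iter_count)
writer.add_scalars("Accuracy", {"Train": correct / total}, iter_count)

# Once per epoch (after the iteration loop): record gradients and weights
for name, param in net.named_parameters():
    if param.grad is not None:  # frozen or unused parameters have no gradient
        writer.add_histogram(name + '_grad', param.grad, epoch)
    writer.add_histogram(name + '_data', param, epoch)
```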
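For reference, here is a self-contained version of such a loop on synthetic data. The two-layer model, the random inputs and labels, and the helper name `train_and_log` are hypothetical stand-ins for the tutorial's net and data loader; the logging calls mirror the excerpt above.

```python
import tempfile

import torch
import torch.nn as nn
from torch.utils.tensorboard import SummaryWriter


def train_and_log(log_dir, epochs=2, iters_per_epoch=5):
    """Tiny synthetic training loop logging loss/accuracy and weight/grad histograms."""
    torch.manual_seed(0)
    net = nn.Sequential(nn.Linear(4, 8), nn.ReLU(), nn.Linear(8, 2))  # hypothetical model
    optimizer = torch.optim.SGD(net.parameters(), lr=0.1)
    criterion = nn.CrossEntropyLoss()
    writer = SummaryWriter(log_dir=log_dir)

    iter_count = 0
    for epoch in range(epochs):
        correct, total = 0, 0
        for _ in range(iters_per_epoch):
            inputs = torch.randn(16, 4)          # synthetic batch
            labels = (inputs[:, 0] > 0).long()   # synthetic labels
            outputs = net(inputs)
            loss = criterion(outputs, labels)
            optimizer.zero_grad()
            loss.backward()
            optimizer.step()

            predicted = outputs.argmax(dim=1)
            total += labels.size(0)
            correct += (predicted == labels).sum().item()
            iter_count += 1
            writer.add_scalars("Loss", {"Train": loss.item()}, iter_count)
            writer.add_scalars("Accuracy", {"Train": correct / total}, iter_count)

        # Once per epoch: gradients and weights of every parameter
        for name, param in net.named_parameters():
            writer.add_histogram(name + "_grad", param.grad, epoch)
            writer.add_histogram(name + "_data", param, epoch)
    writer.close()
    return [name for name, _ in net.named_parameters()]


if __name__ == "__main__":
    print(train_and_log(tempfile.mkdtemp()))
```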

III. Image Visualization

- add_image()

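Purpose: record an image

• tag: label of the image, its unique identifier

• img_tensor: image data; mind the value range: float tensors are expected in [0, 1] (they are multiplied by 255 for display) and uint8 tensors in [0, 255]

• global_step: the x-axis value (step)

• dataformats: data layout, such as CHW (the default), HWC or HW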
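A sketch of the three dataformats, assuming a temporary log folder and a hypothetical helper `log_images`: the same 32x64 image is logged as a float CHW tensor in [0, 1], a uint8 HWC tensor in [0, 255], and a single-channel HW tensor, and the stored width and height are read back.

```python
import tempfile

import torch
from tensorboard.backend.event_processing.event_accumulator import EventAccumulator
from torch.utils.tensorboard import SummaryWriter


def log_images(log_dir):
    """Log one 32x64 image in three layouts; return {tag: (width, height)}."""
    torch.manual_seed(0)
    writer = SummaryWriter(log_dir=log_dir)
    chw_float = torch.rand(3, 32, 64)                               # float in [0, 1], CHW
    hwc_uint8 = (chw_float.permute(1, 2, 0) * 255).to(torch.uint8)  # uint8 in [0, 255], HWC
    hw_gray = chw_float.mean(dim=0)                                 # single channel, HW
    writer.add_image("chw_float", chw_float, 0)                     # dataformats="CHW" by default
    writer.add_image("hwc_uint8", hwc_uint8, 0, dataformats="HWC")
    writer.add_image("hw_gray", hw_gray, 0, dataformats="HW")
    writer.close()

    acc = EventAccumulator(log_dir)
    acc.Reload()
    return {tag: (acc.Images(tag)[0].width, acc.Images(tag)[0].height)
            for tag in acc.Tags()["images"]}


if __name__ == "__main__":
    print(log_images(tempfile.mkdtemp()))
```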

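Images logged this way are shown one at a time rather than together: you have to drag the step slider with the mouse to browse them. To see a whole batch at once, torchvision can combine the images into a single grid image: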

torchvision.utils.make_grid

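Purpose: build a grid image

• tensor: image data in BCHW format

• nrow: number of images per row (the number of rows is computed automatically)

• padding: spacing between images, in pixels

• normalize: whether to min-max scale the pixel values to [0, 1]

• range: min and max used for normalization (called value_range in newer torchvision versions)

• scale_each: whether to normalize each image separately instead of the whole batch together

• pad_value: pixel value used for the padding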

```python
import os

import torchvision.transforms as transforms
import torchvision.utils as vutils
from torch.utils.data import DataLoader
from torch.utils.tensorboard import SummaryWriter

# RMBDataset is the custom Dataset (RMB banknote images) used in this tutorial series;
# it is not part of PyTorch or torchvision, so import it from your own project code.

writer = SummaryWriter(comment='test_your_comment', filename_suffix="_test_your_filename_suffix")

split_dir = os.path.join("..", "..", "data", "rmb_split")
train_dir = os.path.join(split_dir, "train")
# train_dir = "path to your training data"

transform_compose = transforms.Compose([transforms.Resize((32, 64)), transforms.ToTensor()])
train_data = RMBDataset(data_dir=train_dir, transform=transform_compose)
train_loader = DataLoader(dataset=train_data, batch_size=16, shuffle=True)
data_batch, label_batch = next(iter(train_loader))  # take one batch

# img_grid = vutils.make_grid(data_batch, nrow=4, normalize=True, scale_each=True)
img_grid = vutils.make_grid(data_batch, nrow=4, normalize=False, scale_each=False)

writer.add_image("input img", img_grid, 0)
writer.close()
```

IV. Visualizing Convolution Kernels, Feature Maps, and the Data Flow

4.1 Kernel Visualization

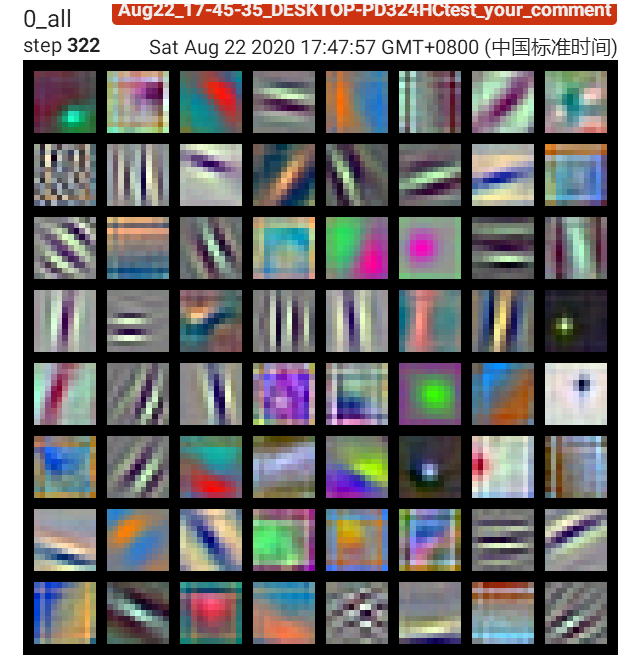

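Take AlexNet as an example, starting from the first kernel of its first convolutional layer; with vis_max = 1, the loop below visualizes the kernels of the first two convolutional layers: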

```python
import torch.nn as nn
import torchvision.models as models
import torchvision.utils as vutils
from torch.utils.tensorboard import SummaryWriter

writer = SummaryWriter(comment='test_your_comment', filename_suffix="_test_your_filename_suffix")

# AlexNet pretrained on ImageNet (torchvision >= 0.13; older versions use pretrained=True)
alexnet = models.alexnet(weights=models.AlexNet_Weights.DEFAULT)

kernel_num = -1  # index of the current conv layer
vis_max = 1      # index of the last conv layer to visualize

for sub_module in alexnet.modules():
    if isinstance(sub_module, nn.Conv2d):
        kernel_num += 1
        if kernel_num > vis_max:
            break
        kernels = sub_module.weight.detach()           # kernel parameters, no autograd needed
        c_out, c_in, k_h, k_w = tuple(kernels.shape)  # 4D: (out, in, height, width)

        # One image per output channel; each input-channel slice becomes a tile
        for o_idx in range(c_out):
            kernel_idx = kernels[o_idx, :, :, :].unsqueeze(1)  # make_grid needs BCHW: add a C dim
            kernel_grid = vutils.make_grid(kernel_idx, normalize=True, scale_each=True, nrow=c_in)
            writer.add_image('{}_Convlayer_split_in_channel'.format(kernel_num), kernel_grid, global_step=o_idx)

        # All kernels at once, reading each group of 3 channels as RGB
        kernel_all = kernels.view(-1, 3, k_h, k_w)  # (N, 3, h, w)
        kernel_grid = vutils.make_grid(kernel_all, normalize=True, scale_each=True, nrow=8)  # (c, h, w)
        writer.add_image('{}_all'.format(kernel_num), kernel_grid, global_step=322)

        print("{}_convlayer shape:{}".format(kernel_num, tuple(kernels.shape)))

writer.close()
```

4.2 Feature Map Visualization

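```python
import torch
import torchvision.models as models
import torchvision.transforms as transforms
import torchvision.utils as vutils
from PIL import Image
from torch.utils.tensorboard import SummaryWriter

writer = SummaryWriter(comment='test_your_comment', filename_suffix="_test_your_filename_suffix")

# Data
path_img = "./lena.png"  # your path to image
# Note: these are CIFAR-10 statistics; the ImageNet values
# ([0.485, 0.456, 0.406], [0.229, 0.224, 0.225]) match the pretrained AlexNet more closely
normMean = [0.49139968, 0.48215827, 0.44653124]
normStd = [0.24703233, 0.24348505, 0.26158768]
norm_transform = transforms.Normalize(normMean, normStd)
img_transforms = transforms.Compose([
    transforms.Resize((224, 224)),
    transforms.ToTensor(),
    norm_transform])

# Load the image
img_pil = Image.open(path_img).convert('RGB')
img_tensor = img_transforms(img_pil)
img_tensor.unsqueeze_(0)  # chw --> bchw

# Model (torchvision >= 0.13; older versions use pretrained=True)
alexnet = models.alexnet(weights=models.AlexNet_Weights.DEFAULT)

# Forward pass through the first conv layer only
convlayer1 = alexnet.features[0]
with torch.no_grad():
    fmap_1 = convlayer1(img_tensor)

# Preprocess: treat each of the 64 channels as a separate 1-channel image
fmap_1.transpose_(0, 1)  # bchw=(1, 64, 55, 55) --> (64, 1, 55, 55)
fmap_1_grid = vutils.make_grid(fmap_1, normalize=True, scale_each=True, nrow=8)

writer.add_image('feature map in conv1', fmap_1_grid, global_step=322)
writer.close()
```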

4.3 Data Flow Visualization

- add_graph()

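Purpose: visualize the model's computational graph

• model: the model; must be an nn.Module

• input_to_model: input data passed to the model

• verbose: whether to print the graph structure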

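```python
import torch
from torch.utils.tensorboard import SummaryWriter

# LeNet is the model class defined in this tutorial series (not part of PyTorch);
# import it from your own code

writer = SummaryWriter(comment='test_your_comment', filename_suffix="_test_your_filename_suffix")

# Model and a fake input image
fake_img = torch.randn(1, 3, 32, 32)
lenet = LeNet(classes=2)

writer.add_graph(lenet, fake_img)
writer.close()
```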

This produces the computational graph of LeNet.
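The tutorial's LeNet class is not shown here, so this sketch defines a hypothetical LeNet-style stand-in (`LeNetSketch`, for 3x32x32 inputs) and checks that add_graph wrote a graph into the event file by reading it back with `EventAccumulator`; the helper name `log_graph` is also hypothetical.

```python
import tempfile

import torch
import torch.nn as nn
import torch.nn.functional as F
from tensorboard.backend.event_processing.event_accumulator import EventAccumulator
from torch.utils.tensorboard import SummaryWriter


class LeNetSketch(nn.Module):
    """Hypothetical LeNet-style stand-in for the tutorial's LeNet (3x32x32 input)."""

    def __init__(self, classes=2):
        super().__init__()
        self.conv1 = nn.Conv2d(3, 6, 5)
        self.conv2 = nn.Conv2d(6, 16, 5)
        self.fc1 = nn.Linear(16 * 5 * 5, 120)
        self.fc2 = nn.Linear(120, 84)
        self.fc3 = nn.Linear(84, classes)

    def forward(self, x):
        x = F.max_pool2d(F.relu(self.conv1(x)), 2)  # 32 -> 28 -> 14
        x = F.max_pool2d(F.relu(self.conv2(x)), 2)  # 14 -> 10 -> 5
        x = x.flatten(1)
        x = F.relu(self.fc1(x))
        x = F.relu(self.fc2(x))
        return self.fc3(x)


def log_graph(log_dir):
    """Trace the model once with a fake image and store its graph in the event file."""
    writer = SummaryWriter(log_dir=log_dir)
    writer.add_graph(LeNetSketch(classes=2), torch.randn(1, 3, 32, 32))
    writer.close()
    acc = EventAccumulator(log_dir)
    acc.Reload()
    return acc.Tags()["graph"]  # True once a graph has been written


if __name__ == "__main__":
    print(log_graph(tempfile.mkdtemp()))
```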

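Inspecting a model through this graph is fairly involved and mostly worth it for in-depth debugging; for a quick overview of a model, torchsummary is enough.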

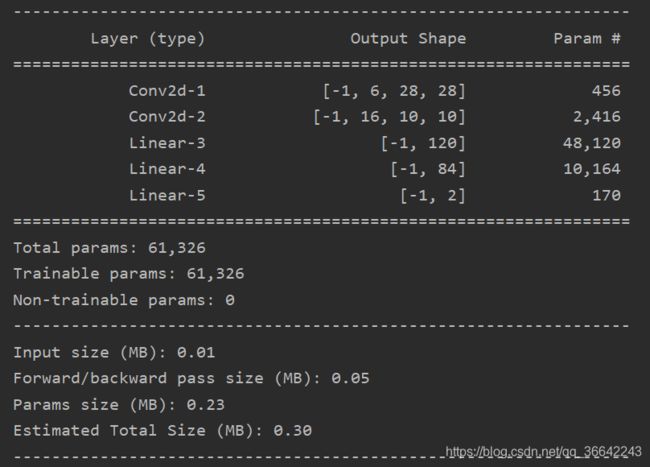

torchsummary

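Purpose: show model information for easier debugging

• model: the PyTorch model

• input_size: size of the model input, without the batch dimension

• batch_size: batch size shown in the output

• device: "cuda" or "cpu" (default "cuda")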

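```python
# pip install torchsummary
from torchsummary import summary

# summary() prints the table itself (and returns None), so no print() is needed
summary(lenet, (3, 32, 32), device="cpu")
```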
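If torchsummary is not installed, a rough substitute can be assembled from forward hooks. This is a minimal sketch, not torchsummary's implementation: the helper `layer_summary` and the nn.Sequential LeNet-style model used to try it are hypothetical. It prints each leaf layer's output shape and parameter count, then the total number of parameters.

```python
import torch
import torch.nn as nn


def layer_summary(model, input_size, batch_size=1):
    """Print each leaf layer's output shape and parameter count; return (rows, total)."""
    rows, handles = [], []
    for name, module in model.named_modules():
        if len(list(module.children())) == 0:  # leaf layers only
            def hook(mod, inputs, output, name=name):
                n_params = sum(p.numel() for p in mod.parameters())
                rows.append((name, type(mod).__name__, tuple(output.shape), n_params))
            handles.append(module.register_forward_hook(hook))
    with torch.no_grad():
        model(torch.zeros(batch_size, *input_size))  # dummy input, like torchsummary
    for h in handles:
        h.remove()
    for name, kind, shape, n_params in rows:
        print("{:<4} {:<10} {:<18} {:>8}".format(name, kind, str(shape), n_params))
    total = sum(p.numel() for p in model.parameters())
    print("Total params:", total)
    return rows, total


if __name__ == "__main__":
    lenet_seq = nn.Sequential(
        nn.Conv2d(3, 6, 5), nn.ReLU(), nn.MaxPool2d(2),
        nn.Conv2d(6, 16, 5), nn.ReLU(), nn.MaxPool2d(2),
        nn.Flatten(), nn.Linear(16 * 5 * 5, 120), nn.ReLU(),
        nn.Linear(120, 84), nn.ReLU(), nn.Linear(84, 2))
    layer_summary(lenet_seq, (3, 32, 32))
```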