【参文】应用强化学习的文章

文章目录

-

- 一、DQN框架的

-

- 1.1 Human-level control through deep reinforcement learning

- 1.2 Hybrid reward architecture for reinforcement learning

- 二、DDPG框架的

-

- 2.1 A Deep Reinforcement Learning Framework for Rebalancing Dockless Bike Sharing Systems

- 2.3

一、DQN框架的

1.1 Human-level control through deep reinforcement learning

简介:DQN算法

原文

-

摘要

强化学习理论提供了一个规范性的解释,它深深植根于动物行为的心理学和神经科学观点,解释了个体如何优化对环境的控制。然而,为了在接近现实世界复杂性的情况下成功地使用强化学习,智能体面临着一项艰巨的任务:他们必须从高维感官输入中获得对环境的有效表征,并利用这些信息将过去的经验推广到新的情况。值得注意的是,人类和其他动物似乎通过强化学习和分层感官处理系统的和谐结合来解决这个问题,前者通过大量神经数据证明,多巴胺能神经元发出的相位信号与时间差强化学习算法之间存在显著的相似性。虽然强化学习代理在许多领域都取得了一些成功,但它们的适用性以前仅限于可以手工制作有用功能的领域,或者具有完全观察到的低维状态空间的领域。在这里,我们利用训练深度神经网络的最新进展来开发一种新型人工智能体,称为深度Q网络,它可以使用端到端强化学习直接从高维感官输入中学习成功的策略。我们在经典Atari 2600游戏的挑战领域测试了这个代理。我们证明,仅接收像素和游戏分数作为输入的deep Q-network agent能够超越所有以前算法的性能,并在一组49个游戏中达到与专业人类游戏测试人员相当的水平,使用相同的算法、网络架构和超参数。这项工作弥合了高维感官输入和动作之间的鸿沟,创造了第一个能够学习在各种挑战性任务中脱颖而出的人工智能体。 -

abstract

The theory of reinforcement learning provides a normative account, deeply rooted in psychological and neuroscientific perspectives on animal behaviour, of how agents may optimize their control of an environment. To use reinforcement learning successfully in situations approaching real-world complexity, however, agents are confronted with a difficult task: they must derive efficient representations of the environment from high-dimensional sensory inputs, and use these to generalize past experience to new situations. Remarkably, humans and other animals seem to solve this problem through a harmonious combination of reinforcement learning and hierarchical sensory processing systems, the former evidenced by a wealth of neural data revealing notable parallels between the phasic signals emitted by dopaminergic neurons and temporal difference reinforcement learning algorithms. While reinforcement learning agents have achieved some successes in a variety of domains, their applicability has previously been limited to domains in which useful features can be handcrafted, or to domains with fully observed, low-dimensional state spaces. Here we use recent advances in training deep neural networks to develop a novel artificial agent, termed a deep Q-network, that can learn successful policies directly from high-dimensional sensory inputs using end-to-end reinforcement learning. We tested this agent on the challenging domain of classic Atari 2600 games. We demonstrate that the deep Q-network agent, receiving only the pixels and the game score as inputs, was able to surpass the performance of all previous algorithms and achieve a level comparable to that of a professional human games tester across a set of 49 games, using the same algorithm, network architecture and hyperparameters. This work bridges the divide between high-dimensional sensory inputs and actions, resulting in the first artificial agent that is capable of learning to excel at a diverse array of challenging tasks. -

bib

@article{2015Human,

title={Human-level control through deep reinforcement learning},

author={ Volodymyr, Mnih and Koray, Kavukcuoglu and David, Silver and Rusu, Andrei A, and Joel, Veness and Bellemare, Marc G, and Alex, Graves and Martin, Riedmiller and Fidjeland, Andreas K, and Georg, Ostrovski and },

journal={Nature},

volume={518},

number={7540},

pages={529-33页},

year={2015},

}

1.2 Hybrid reward architecture for reinforcement learning

文章翻译

文章链接

-

摘要:

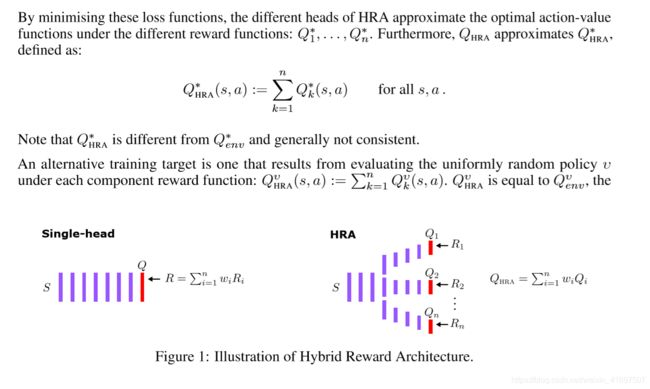

强化学习(RL)的主要挑战之一是泛化。在典型的深度RL方法中,这是通过使用深度网络用低维表示逼近最优值函数来实现的。虽然这种方法在许多领域都很有效,但在最优值函数无法轻松简化为低维表示的领域,学习可能非常缓慢且不稳定。本文提出了一种新的方法,称为混合奖励体系结构(HRA),有助于解决这些具有挑战性的领域。HRA将一个分解的奖励函数作为输入,并为每个成分的奖励函数学习一个单独的值函数。因为每个组件通常只依赖于所有特征的子集,所以整体值函数更平滑,可以通过低维表示更容易近似,从而实现更有效的学习。我们在一个玩具问题和Atari游戏《吃豆人女士》中演示了HRA,在该游戏中,HRA的表现超过了人类。 -

abstract:

One of the main challenges in reinforcement learning (RL) is generalisation. In typical deep RL methods this is achieved by approximating the optimal value function with a low-dimensional representation using a deep network. While this approach works well in many domains, in domains where the optimal value function cannot easily be reduced to a low-dimensional representation, learning can be very slow and unstable. This paper contributes towards tackling such challenging domains, by proposing a new method, called Hybrid Reward Architecture (HRA). HRA takes as input a decomposed reward function and learns a separate value function for each component reward function. Because each component typically only depends on a subset of all features, the overall value function is much smoother and can be easier approximated by a low-dimensional representation, enabling more effective learning. We demonstrate HRA on a toy-problem and the Atari game Ms. Pac-Man, where HRA achieves above-human performance. -

bib

@misc{0HYBRID,

title={HYBRID REWARD ARCHITECTURE FOR REINFORCEMENT LEARNING},

author={ Van Seijen, Harm Hendrik and Fatemi Booshehri, Seyed Mehdi and Laroche, Romain Michel Henri and Romoff, Joshua Samuel },

url = {https://arxiv.org/pdf/1706.04208.pdf},

}

二、DDPG框架的

2.1 A Deep Reinforcement Learning Framework for Rebalancing Dockless Bike Sharing Systems

原文解析

- 摘要

自行车共享为旅行提供了一种环保的方式,并在世界各地蓬勃发展。然而,由于用户出行模式的高度相似性,自行车不平衡问题不断发生,尤其是对于无停靠自行车共享系统,对服务质量和公司收入造成重大影响。因此,如何有效地解决这种不平衡已经成为自行车共享运营商的一项关键任务。在本文中,我们提出了一个新的深度强化学习框架来激励用户重新平衡这样的系统。我们将问题建模为一个马尔可夫决策过程,并考虑了空间和时间特征。我们开发了一种新的深度强化学习算法,称为分层强化定价(Hierarchical Reinforcement Pricing)(HRP),它建立在深度确定性策略梯度算法( Deep Deterministic Policy Gradient algorithm.)(DDPG)的基础上。与通常忽略空间信息并严重依赖精确预测的现有方法不同,HRP使用带有嵌入式本地化模块的分治结构(divide-and-conquer structure) 来捕获空间和时间依赖性。我们进行了广泛的实验来评估HRP,基于中国主要的无码头自行车共享公司Mobike数据集。结果表明,HRP的性能接近24时隙前瞻优化,在服务水平和自行车分配方面都优于最先进的方法。当应用于看不见的区域时,它也能很好地传输.

- abstract

Bike sharing provides an environment-friendly way for traveling and is booming all over the world. Yet, due to the high similarity of user travel patterns, the bike imbalance problem constantly occurs, especially for dockless bike sharing systems, causing significant impact on service quality and company revenue. Thus, it has become a critical task for bike sharing operators to resolve such imbalance efficiently. In this paper, we propose a novel deep reinforcement learning framework for incentivizing users to rebalance such systems. We model the problem as a Markov decision process and take both spatial and temporal features into consideration. We develop a novel deep reinforcement learning algorithm called Hierarchical Reinforcement Pricing (HRP),which builds upon the Deep Deterministic Policy Gradient algorithm. Different from existing methods that often ignore spatial information and rely heavily on accurate prediction, HRP captures both spatial and temporal dependencies using a divide-and-conquer structure with an embedded localized module. We conduct extensive experiments to evaluate HRP, based on a dataset from Mobike, a major Chinese dockless bike sharing company. Results show that HRP performs close to the 24-timeslot look-ahead optimization, and outperforms state-of-the-art methods in both service level and bike distribution. It also transfers well when applied to unseen areas. - model图片

- bib

@article{2019A,

title={A Deep Reinforcement Learning Framework for Rebalancing Dockless Bike Sharing Systems},

author={ Pan, L. and Cai, Q. and Fang, Z. and Tang, P. and Huang, L. },

journal={Proceedings of the AAAI Conference on Artificial Intelligence},

volume={33},

pages={1393-1400},

year={2019},

doi = {https://doi.org/10.1609/aaai.v33i01.33011393},

}

2.3

V ( S , A ) ≅ ∑ i = 1 N v ( s i , a i ) + ∑ i = 1 N f ( N ( s i , a i ) ) (1) V(S,A) \cong \sum_{i=1}^N v(s_i,a_i) +\sum_{i=1}^Nf(N(s_i,a_i)) \tag{1} V(S,A)≅i=1∑Nv(si,ai)+i=1∑Nf(N(si,ai))(1)

- bib

@inproceedings{DBLP:conf/mdm/Duan019,

author = {Yubin Duan and

Jie Wu},

title = {Optimizing Rebalance Scheme for Dock-Less Bike Sharing Systems with

Adaptive User Incentive},

booktitle = {20th {IEEE} International Conference on Mobile Data Management, {MDM}

2019, Hong Kong, SAR, China, June 10-13, 2019},

pages = {176--181},

publisher = {{IEEE}},

year = {2019},

url = {https://doi.org/10.1109/MDM.2019.00-59},

doi = {10.1109/MDM.2019.00-59},

timestamp = {Wed, 16 Oct 2019 14:14:52 +0200},

biburl = {https://dblp.org/rec/conf/mdm/Duan019.bib},

bibsource = {dblp computer science bibliography, https://dblp.org}

}