EleutherAI GPT-Neo: The Poor Man's Hope

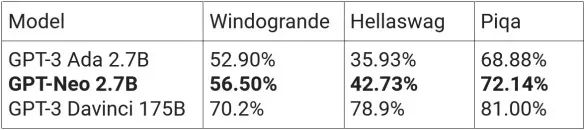

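In my previous blog post, "Fine-tuning a GPT-3", I fine-tuned a GPT-3 model through OpenAI's API. In practice it was simply too expensive: generating 10,000 samples cost more than 60 US dollars. That hurt, so a poor man has to find a poor man's solution, and my good friend EleutherAI's GPT-Neo came to mind. EleutherAI has two projects on GitHub, GPT-Neo and GPT-NeoX. The figure above is taken from the article "高仿也赛高?GPT-Neo真好用" ("Is a good imitation just as great? GPT-Neo works really well").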

In that earlier post I fine-tuned OpenAI's davinci model. From that standpoint, and going by the description of gpt-neox on GitHub, GPT-NeoX would be the best choice:

We aim to make this repo a centralized and accessible place to gather techniques for training large-scale autoregressive language models, and accelerate research into large-scale training. Additionally, we hope to train and open source a 175B parameter GPT-3 replication along the way. Please note, however, that this is a research codebase that is primarily designed for performance over ease of use. We endeavour to make it as easy to use as is feasible, but if there's anything in the readme that is unclear or you think you've found a bug, please open an issue.

That model is simply too big, though. I'm afraid my 3090 cannot carry it, so GPT-Neo will have to do.
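A rough back-of-the-envelope check shows why a 20B-parameter model is out of reach for a single 3090. The sketch below counts only the memory for the weights themselves. Its inputs are assumptions rather than figures from this post: 2 bytes per parameter (fp16), nominal parameter counts taken from the model names, and the 24 GB of VRAM on an RTX 3090.

```python
# Rough estimate of the GPU memory needed just to hold model weights.
# Assumptions (not from the original post): fp16 weights at 2 bytes per
# parameter, nominal parameter counts, and 24 GB of VRAM on an RTX 3090.

GIB = 1024 ** 3
VRAM_3090_GIB = 24


def weight_memory_gib(num_params: float, bytes_per_param: int = 2) -> float:
    """Memory for the weights alone, ignoring activations, gradients and optimizer state."""
    return num_params * bytes_per_param / GIB


models = {
    "gpt-neo-1.3B": 1.3e9,   # nominal size from the model name
    "gpt-neox-20b": 20e9,    # nominal size from the model name
}

for name, n in models.items():
    need = weight_memory_gib(n)
    verdict = "fits" if need < VRAM_3090_GIB else "does not fit"
    print(f"{name}: ~{need:.1f} GiB of fp16 weights, {verdict} in {VRAM_3090_GIB} GiB")
```

Fine-tuning needs noticeably more than this, because gradients and optimizer state are stored for every trainable parameter. Passing `bytes_per_param=4` gives the estimate for fp32 weights.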

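What remains is preparing the data (the same JSON as before) and writing a few lines of simple fine-tuning code. I pieced the script together from snippets found online and debugged it into the code below, which is for reference only. Fine-tuning gpt-neo-1.3B on 1,000 examples (train_data_prepared.jsonl) for 10 epochs did not give good enough results. In the end I trained for one epoch on the 9,000-plus examples obtained from OpenAI, and the results came close to those of the fine-tuned OpenAI davinci model.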
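Before the full script, here is a small standalone sketch of the data-preparation step on its own. It turns one `|`-separated line into a prompt/completion pair with the same separators and the same word-count filter as the script. The function name `parse_line` and the sample lines are hypothetical, and reading the three fields as prompt, instruction and completion is only a guess based on the file name.

```python
# Standalone sketch of the data-preparation step: turn one "|"-separated line
# into a (prompt, completion) pair using the same separators as the script.
# parse_line and the sample lines are hypothetical, chosen for illustration.
from typing import Optional, Tuple

PROMPT_END = "\n##\n"   # marks the end of the prompt
FIELD_SEP = "\n%%\n"    # separates the two fields inside the completion
STOP = "\nEND"          # marks the end of the completion


def parse_line(line: str, max_words: int = 45) -> Optional[Tuple[str, str]]:
    """Return (prompt, completion), or None if the line is malformed or too long."""
    items = line.strip().split("|")
    if len(items) != 3:
        return None
    prompt = items[0] + PROMPT_END
    completion = items[1] + FIELD_SEP + items[2] + STOP
    # Same crude length check as the script: count space-separated words.
    if len(prompt.split(" ")) + len(completion.split(" ")) > max_words:
        return None
    return prompt, completion


print(parse_line("a dog|describe the scene|a dog runs across the park"))
print(parse_line("only two|fields"))
```

Note that the filter counts space-separated words, not tokenizer tokens, so an example that passes the 45-word check can still be truncated at the 50-token `max_length` used later.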

    import json

    import torch
    from torch.utils.data import Dataset, random_split
    from transformers import GPT2Tokenizer, TrainingArguments, Trainer, AutoModelForCausalLM  # , GPTNeoXTokenizerFast

    torch.manual_seed(42)

    modelname = "EleutherAI/gpt-neo-1.3B"

    # GPT-Neo uses the GPT-2 tokenizer; register explicit BOS/EOS/PAD tokens.
    tokenizer = GPT2Tokenizer.from_pretrained(modelname, bos_token='<|startoftext|>',
                                              eos_token='<|endoftext|>', pad_token='<|pad|>')
    #special_tokens_dict = {'sep_token': '\n##\n', 'unk_token': '\n%%\n'}
    #num_added_toks = tokenizer.add_special_tokens(special_tokens_dict)
    #model = GPTNeoXForCausalLM.from_pretrained("EleutherAI/gpt-neox-20b")
    #tokenizer = GPTNeoXTokenizerFast.from_pretrained("EleutherAI/gpt-neox-20b")

    model = AutoModelForCausalLM.from_pretrained(modelname).cuda()

    # Freeze the token embeddings, the position embeddings and the first
    # 16 transformer blocks; only the remaining blocks are fine-tuned.
    for param in model.transformer.wte.parameters():
        param.requires_grad = False
    for param in model.transformer.wpe.parameters():
        param.requires_grad = False
    for param in model.transformer.h[:16].parameters():
        param.requires_grad = False

    # The tokenizer gained new special tokens, so the embedding matrix must grow to match.
    model.resize_token_embeddings(len(tokenizer))

    prompts = []
    completions = []

    # Earlier experiment, kept disabled: 1,000 examples in prompt/completion JSONL format.
    '''
    with open("train_data_prepared.jsonl", "r", encoding="utf-8") as f:
        lines = [line.strip() for line in f]
    for line in lines:
        data = json.loads(line)
        if len(data['prompt'].split(' ')) + len(data['completion'].split(' ')) > 45:
            continue
        prompts.append(data['prompt'])
        completions.append(data['completion'])
    '''

    # Current data: one example per line, three fields separated by "|".
    with open("prompt_instrcut_completion.txt", "r", encoding="utf-8") as f:
        lines = [line.strip() for line in f]

    for line in lines:
        items = line.split('|')
        if len(items) != 3:
            continue  # skip malformed lines
        prompt = items[0] + "\n##\n"
        completion = items[1] + "\n%%\n" + items[2] + "\nEND"
        # Crude length filter on space-separated words (not tokens).
        if len(prompt.split(' ')) + len(completion.split(' ')) > 45:
            continue
        prompts.append(prompt)
        completions.append(completion)

    max_length = 50
    print("prompts length", len(prompts), "max length", max_length)


    class InstructDataset(Dataset):
        """Tokenizes each prompt/completion pair into one fixed-length training sequence."""

        def __init__(self, prompts, completions, tokenizer, max_length):
            self.input_ids = []
            self.attn_masks = []
            for prompt, completion in zip(prompts, completions):
                encodings_dict = tokenizer(
                    f'<|startoftext|>prompt:{prompt}\ncompletion:{completion}<|endoftext|>',
                    truncation=True, max_length=max_length, padding="max_length")
                self.input_ids.append(torch.tensor(encodings_dict['input_ids']))
                self.attn_masks.append(torch.tensor(encodings_dict['attention_mask']))

        def __len__(self):
            return len(self.input_ids)

        def __getitem__(self, idx):
            return self.input_ids[idx], self.attn_masks[idx]


    dataset = InstructDataset(prompts, completions, tokenizer, max_length=max_length)

    # 90% of the examples for training, the rest for validation.
    train_size = int(0.9 * len(dataset))
    train_dataset, val_dataset = random_split(dataset, [train_size, len(dataset) - train_size])

    training_args = TrainingArguments(output_dir='./results', num_train_epochs=1, logging_steps=400, save_steps=500,
                                      per_device_train_batch_size=2, per_device_eval_batch_size=2,
                                      warmup_steps=100, weight_decay=0.01, logging_dir='./logs')


    def collate(batch):
        """Stack (input_ids, attention_mask) pairs; for causal LM the labels are the input ids."""
        input_ids = torch.stack([item[0] for item in batch])
        attention_mask = torch.stack([item[1] for item in batch])
        return {'input_ids': input_ids, 'attention_mask': attention_mask, 'labels': input_ids}


    Trainer(model=model, args=training_args, train_dataset=train_dataset,
            eval_dataset=val_dataset, data_collator=collate).train()

    # Sample 20 continuations for a test prompt.
    # Note: this prompt follows the original post and omits the "prompt:" / "completion:"
    # prefixes that the training sequences contain.
    model.eval()
    generated = tokenizer("<|startoftext|>a dog\n##\n", return_tensors="pt").input_ids.cuda()

    # frequency_penalty is an OpenAI API parameter, not a Hugging Face generate() argument,
    # so it is omitted here; repetition_penalty is the closest built-in option.
    sample_outputs = model.generate(generated, do_sample=True, top_k=50,
                                    max_length=50, top_p=0.95, temperature=0.7, num_return_sequences=20)

    for i, sample_output in enumerate(sample_outputs):
        print(f"{i}: {tokenizer.decode(sample_output, skip_special_tokens=True)}")

Reference:

Guide to fine-tuning Text Generation models: GPT-2, GPT-Neo and T5
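As a footnote to the sampling arguments used in the script above (`temperature=0.7`, `top_k=50`, `top_p=0.95`), the following toy sketch shows what these three knobs do to a next-token distribution. It is a simplified pure-Python illustration of the idea, not the Hugging Face implementation; the function names and the five-word vocabulary with its logits are made up for the example.

```python
# Toy illustration of temperature, top-k and top-p (nucleus) filtering.
# The vocabulary, logits and function names are hypothetical.
import math
import random


def softmax(logits):
    """Convert a {token: logit} dict into a {token: probability} dict."""
    m = max(logits.values())
    exps = {t: math.exp(v - m) for t, v in logits.items()}
    z = sum(exps.values())
    return {t: e / z for t, e in exps.items()}


def filter_candidates(logits, temperature=0.7, top_k=50, top_p=0.95):
    """Return the renormalised distribution left after temperature, top-k and top-p."""
    # 1. Temperature: divide the logits; values below 1 sharpen the distribution.
    scaled = {t: v / temperature for t, v in logits.items()}
    # 2. Top-k: keep only the k highest-scoring tokens.
    ranked = sorted(scaled.items(), key=lambda kv: kv[1], reverse=True)[:top_k]
    probs = softmax(dict(ranked))
    # 3. Top-p: keep the smallest prefix whose cumulative probability reaches top_p.
    kept, cumulative = {}, 0.0
    for token, _ in ranked:
        kept[token] = probs[token]
        cumulative += probs[token]
        if cumulative >= top_p:
            break
    total = sum(kept.values())
    return {t: p / total for t, p in kept.items()}


def sample(dist, rng):
    """Draw one token from a {token: probability} dict."""
    tokens = list(dist)
    return rng.choices(tokens, weights=[dist[t] for t in tokens], k=1)[0]


toy_logits = {"runs": 3.0, "barks": 2.5, "sleeps": 1.0, "flies": -1.0, "sings": -2.0}
dist = filter_candidates(toy_logits, temperature=0.7, top_k=3, top_p=0.95)
print(dist)
print(sample(dist, random.Random(42)))
```

Lowering `top_k` or `top_p` shrinks the candidate set and makes the output more conservative, while raising `temperature` flattens the distribution and makes the output more varied.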