Flink_Flink 集群搭建

本文主要讲解下 flink 如何搭建 :

单节点集群

standalone 集群

yarnsession 集群

最近学习了下 Flink ,看了许多天的书,一上手搭建集群遇到了许多问题。 我在这里整理下集群搭建所遇到的问题。

单节点集群

单节点集群,其实不难。主要我是虚拟机器,内存很小,所以我们要调整 task-manager 的内存参数。

task-manager 的内存分配管理 与 参数配置 是一个大问题,我专门写了一篇文章 :

https://blog.csdn.net/u010003835/article/details/106294342

由于我搭建的环境是虚拟机,所以需要调整集群中节点的内存

调整的内存大小如下:

jobmanager.heap.size: 64m

taskmanager.memory.process.size: 512m

taskmanager.memory.framework.heap.size: 64m

taskmanager.memory.framework.off-heap.size: 64m

taskmanager.memory.jvm-metaspace.size: 64m

taskmanager.memory.jvm-overhead.fraction: 0.2

taskmanager.memory.jvm-overhead.min: 16m

taskmanager.memory.jvm-overhead.max: 64m

taskmanager.memory.network.fraction: 0.1

taskmanager.memory.network.min: 1mb

taskmanager.memory.network.max: 256mb

内存调整为如上大小后,我成功启动了单节点集群

详细配置 :

################################################################################

# Licensed to the Apache Software Foundation (ASF) under one

# or more contributor license agreements. See the NOTICE file

# distributed with this work for additional information

# regarding copyright ownership. The ASF licenses this file

# to you under the Apache License, Version 2.0 (the

# "License"); you may not use this file except in compliance

# with the License. You may obtain a copy of the License at

#

# http://www.apache.org/licenses/LICENSE-2.0

#

# Unless required by applicable law or agreed to in writing, software

# distributed under the License is distributed on an "AS IS" BASIS,

# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

# See the License for the specific language governing permissions and

# limitations under the License.

################################################################################

#=================================================================

#=================================================================

env.yarn.conf.dir: /etc/hadoop/conf.cloudera.yarn

env.hadoop.conf.dir: /etc/hadoop/conf.cloudera.hdfs

#==============================================================================

# Common

#==============================================================================

# The external address of the host on which the JobManager runs and can be

# reached by the TaskManagers and any clients which want to connect. This setting

# is only used in Standalone mode and may be overwritten on the JobManager side

# by specifying the --host parameter of the bin/jobmanager.sh executable.

# In high availability mode, if you use the bin/start-cluster.sh script and setup

# the conf/masters file, this will be taken care of automatically. Yarn/Mesos

# automatically configure the host name based on the hostname of the node where the

# JobManager runs.

jobmanager.rpc.address: cdh-manager

# The RPC port where the JobManager is reachable.

jobmanager.rpc.port: 6123

# The heap size for the JobManager JVM

jobmanager.heap.size: 64m

# The total process memory size for the TaskManager.

#

# Note this accounts for all memory usage within the TaskManager process, including JVM metaspace and other overhead.

taskmanager.memory.process.size: 512m

taskmanager.memory.framework.heap.size: 64m

taskmanager.memory.framework.off-heap.size: 64m

taskmanager.memory.jvm-metaspace.size: 64m

taskmanager.memory.jvm-overhead.fraction: 0.2

taskmanager.memory.jvm-overhead.min: 16m

taskmanager.memory.jvm-overhead.max: 64m

# To exclude JVM metaspace and overhead, please, use total Flink memory size instead of 'taskmanager.memory.process.size'.

# It is not recommended to set both 'taskmanager.memory.process.size' and Flink memory.

#

# taskmanager.memory.flink.size: 1280m

# The number of task slots that each TaskManager offers. Each slot runs one parallel pipeline.

taskmanager.numberOfTaskSlots: 1

# The parallelism used for programs that did not specify and other parallelism.

parallelism.default: 1

# The default file system scheme and authority.

#

# By default file paths without scheme are interpreted relative to the local

# root file system 'file:///'. Use this to override the default and interpret

# relative paths relative to a different file system,

# for example 'hdfs://mynamenode:12345'

#

# fs.default-scheme

#==============================================================================

# High Availability

#==============================================================================

# The high-availability mode. Possible options are 'NONE' or 'zookeeper'.

#

# high-availability: zookeeper

# The path where metadata for master recovery is persisted. While ZooKeeper stores

# the small ground truth for checkpoint and leader election, this location stores

# the larger objects, like persisted dataflow graphs.

#

# Must be a durable file system that is accessible from all nodes

# (like HDFS, S3, Ceph, nfs, ...)

#

# high-availability.storageDir: hdfs:///flink/ha/

# The list of ZooKeeper quorum peers that coordinate the high-availability

# setup. This must be a list of the form:

# "host1:clientPort,host2:clientPort,..." (default clientPort: 2181)

#

# high-availability.zookeeper.quorum: localhost:2181

# ACL options are based on https://zookeeper.apache.org/doc/r3.1.2/zookeeperProgrammers.html#sc_BuiltinACLSchemes

# It can be either "creator" (ZOO_CREATE_ALL_ACL) or "open" (ZOO_OPEN_ACL_UNSAFE)

# The default value is "open" and it can be changed to "creator" if ZK security is enabled

#

# high-availability.zookeeper.client.acl: open

#==============================================================================

# Fault tolerance and checkpointing

#==============================================================================

# The backend that will be used to store operator state checkpoints if

# checkpointing is enabled.

#

# Supported backends are 'jobmanager', 'filesystem', 'rocksdb', or the

# .

#

# state.backend: filesystem

# Directory for checkpoints filesystem, when using any of the default bundled

# state backends.

#

# state.checkpoints.dir: hdfs://namenode-host:port/flink-checkpoints

# Default target directory for savepoints, optional.

#

# state.savepoints.dir: hdfs://namenode-host:port/flink-checkpoints

# Flag to enable/disable incremental checkpoints for backends that

# support incremental checkpoints (like the RocksDB state backend).

#

# state.backend.incremental: false

# The failover strategy, i.e., how the job computation recovers from task failures.

# Only restart tasks that may have been affected by the task failure, which typically includes

# downstream tasks and potentially upstream tasks if their produced data is no longer available for consumption.

jobmanager.execution.failover-strategy: region

#==============================================================================

# Rest & web frontend

#==============================================================================

# The port to which the REST client connects to. If rest.bind-port has

# not been specified, then the server will bind to this port as well.

#

#rest.port: 8081

# The address to which the REST client will connect to

#

#rest.address: 0.0.0.0

# Port range for the REST and web server to bind to.

#

#rest.bind-port: 8080-8090

# The address that the REST & web server binds to

#

#rest.bind-address: 0.0.0.0

# Flag to specify whether job submission is enabled from the web-based

# runtime monitor. Uncomment to disable.

#web.submit.enable: false

#==============================================================================

# Advanced

#==============================================================================

# Override the directories for temporary files. If not specified, the

# system-specific Java temporary directory (java.io.tmpdir property) is taken.

#

# For framework setups on Yarn or Mesos, Flink will automatically pick up the

# containers' temp directories without any need for configuration.

#

# Add a delimited list for multiple directories, using the system directory

# delimiter (colon ':' on unix) or a comma, e.g.:

# /data1/tmp:/data2/tmp:/data3/tmp

#

# Note: Each directory entry is read from and written to by a different I/O

# thread. You can include the same directory multiple times in order to create

# multiple I/O threads against that directory. This is for example relevant for

# high-throughput RAIDs.

#

io.tmp.dirs: /tmp

# The classloading resolve order. Possible values are 'child-first' (Flink's default)

# and 'parent-first' (Java's default).

#

# Child first classloading allows users to use different dependency/library

# versions in their application than those in the classpath. Switching back

# to 'parent-first' may help with debugging dependency issues.

#

# classloader.resolve-order: child-first

# The amount of memory going to the network stack. These numbers usually need

# no tuning. Adjusting them may be necessary in case of an "Insufficient number

# of network buffers" error. The default min is 64MB, the default max is 1GB.

#

# taskmanager.memory.network.fraction: 0.1

# taskmanager.memory.network.min: 64mb

# taskmanager.memory.network.max: 1gb

taskmanager.memory.network.fraction: 0.1

taskmanager.memory.network.min: 1mb

taskmanager.memory.network.max: 256mb

#==============================================================================

# Flink Cluster Security Configuration

#==============================================================================

# Kerberos authentication for various components - Hadoop, ZooKeeper, and connectors -

# may be enabled in four steps:

# 1. configure the local krb5.conf file

# 2. provide Kerberos credentials (either a keytab or a ticket cache w/ kinit)

# 3. make the credentials available to various JAAS login contexts

# 4. configure the connector to use JAAS/SASL

# The below configure how Kerberos credentials are provided. A keytab will be used instead of

# a ticket cache if the keytab path and principal are set.

# security.kerberos.login.use-ticket-cache: true

# security.kerberos.login.keytab: /path/to/kerberos/keytab

# security.kerberos.login.principal: flink-user

# The configuration below defines which JAAS login contexts

# security.kerberos.login.contexts: Client,KafkaClient

#==============================================================================

# ZK Security Configuration

#==============================================================================

# Below configurations are applicable if ZK ensemble is configured for security

# Override below configuration to provide custom ZK service name if configured

# zookeeper.sasl.service-name: zookeeper

# The configuration below must match one of the values set in "security.kerberos.login.contexts"

# zookeeper.sasl.login-context-name: Client

#==============================================================================

# HistoryServer

#==============================================================================

# The HistoryServer is started and stopped via bin/historyserver.sh (start|stop)

# Directory to upload completed jobs to. Add this directory to the list of

# monitored directories of the HistoryServer as well (see below).

#jobmanager.archive.fs.dir: hdfs:///completed-jobs/

# The address under which the web-based HistoryServer listens.

#historyserver.web.address: 0.0.0.0

# The port under which the web-based HistoryServer listens.

#historyserver.web.port: 8082

# Comma separated list of directories to monitor for completed jobs.

#historyserver.archive.fs.dir: hdfs:///completed-jobs/

# Interval in milliseconds for refreshing the monitored directories.

#historyserver.archive.fs.refresh-interval: 10000

standalone 集群

修改 masters , slaves 文件中的内容

standalone 主要是进程运行在本地,这个时候除了修改配置文件,我们还需要修改 conf 目录下的 masters slaves 文件。

masters 文件内容如下:

cdh-manager:8081

slaves 文件内容如下:

cdh-node1

cdh-node2

masters 与 slaves 需要同步到各个节点上。

调整 conf/flink-conf.yaml 中的配置项 :

调整 conf/flink-conf.yaml 中的配置项 :

jobmanager.rpc.address

表示 Flink Cluster 集群的 JobManager RPC 通信地址,一般需要配置指定的 JobManager 的IP 地址,默认 localhost 不适合多节点集群模式。

jobmanager.heap.mb

对JobManager 的JVM堆内存大小进行配置,默认为1024M, 可以根据集群规模适当增加

taskmanager.heap.mb

对TaskManager 的JVM 堆内存大小进行配置,默认为 1024M, 可根据数据计算规模以及状态大小进行调整。

taskmanager.numberOfTaskSlots

配置每个TaskManager能够贡献出来的Slot数量,根据TaskManager 所在机器能供给Flink 的CPU 数量决定。

parallelism.default

Flink 任务默认并行度,与整个集群的CPU 数量有关,增加 parallelism 可以提高任务并行的计算的实例数,提升数据处理效率,但也会占用更多Slot.

taskmanager.tmp.dirs

集群临时文件夹地址,Flink 会将中间计算数据放置在相应路径中。

默认配置项会在集群启动的时候加载到Flink 集群中,当用户提交任务时,可以通过 -D 符号来动态设置系统参数,此时 flink-conf.yaml 配置文件中的参数就会被覆盖掉,例如使用 -Dfs.overwrite-files=true 动态参数

执行启动脚本 :

然后, 执行下 bin 目录下的 start_cluster.sh 即可。

相关节点

NodeManger 进程

16988 org.apache.flink.runtime.entrypoint.StandaloneSessionClusterEntrypoint

TaskManager 进程

17329 org.apache.flink.runtime.taskexecutor.TaskManagerRunner

YARN 集群

flink on yarn 分为两种方式

1)Yarn Session Model

2) Single Job Model

Yarn Session Model

这种模式中Flink会向Hadoop Yarn 申请足够多的资源,并在 Yarn 上启动长时间运行的 Flink Session 集群,用户可以通过 RestAPI 或 Web页面 将Flink 任务提交到 Flink Session 集群上运行。y

基本配置

1) 依赖包

首先是我们启动这样的任务,需要yarn 环境的基础依赖包。我们可以从官网上下载,并放到 lib 目录下。

参考文章 : https://blog.csdn.net/Alex_Sheng_Sea/article/details/102607937

如果我们不下载 Hadoop 相关的包,会出现如下报错 :

NoClassDefFoundError: org/apache/hadoop/yarn/exceptions/YarnException

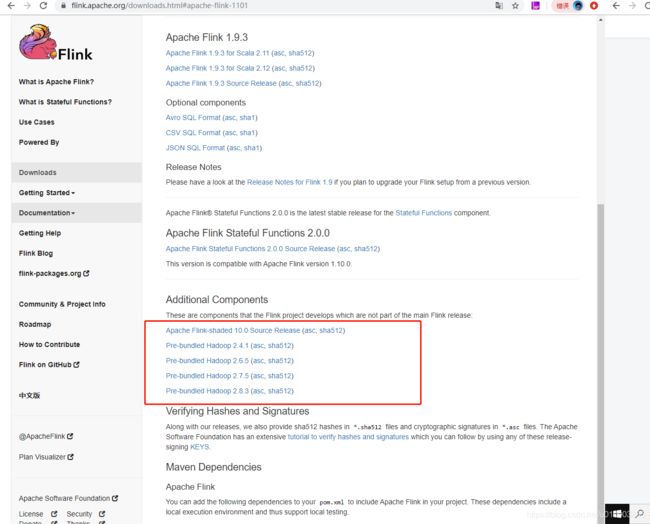

相关包 Hadoop下载地址 : 根据hadoop 版本选择合适的包 !!

https://flink.apache.org/downloads.html#apache-flink-1101

2) 环境配置

为了能找到hadoop 中相关的配置,我这里将相关的配置写到了 flink-conf.yaml 中

env.yarn.conf.dir: /etc/hadoop/conf.cloudera.yarn

env.hadoop.conf.dir: /etc/hadoop/conf.cloudera.hdfs

=====================

集群启动相关参数

启动 yarn-session 之前,我们看看都可以调整那些参数 :

Usage:

Optional

-at,--applicationType

-D

-d,--detached If present, runs the job in detached mode

-h,--help Help for the Yarn session CLI.

-id,--applicationId

-j,--jar

-jm,--jobManagerMemory

-m,--jobmanager

-nl,--nodeLabel

-nm,--name

-q,--query Display available YARN resources (memory, cores)

-qu,--queue

-s,--slots

-t,--ship

-tm,--taskManagerMemory

-yd,--yarndetached If present, runs the job in detached mode (deprecated; use non-YARN specific option instead)

-z,--zookeeperNamespace

根据以上参数,我们启动一个 On Yarn Session 集群 :

./yarn-session.sh -d -n 4 -jm 64m -tm 512m -s 4

-n 4 启动4个Yarn Container

-jm 参数配置JobManager 的JVM内存大小

-tm 参数配置TaskManager 内存大小

-s 集群总共启动16个slots 来提供应用启动task 实例

注意后台启动参数 -d 要写在第一位

-d,--detached If present, runs the job in detached mode

Single Job Model

Single Job Model 主要是通过 flink 指令进行提交,相关的参数

Action "run" compiles and runs a program.

Syntax: run [OPTIONS]

"run" action options:

-c,--class Class with the program entry point

("main()" method). Only needed if the

JAR file does not specify the class in

its manifest.

-C,--classpath Adds a URL to each user code

classloader on all nodes in the

cluster. The paths must specify a

protocol (e.g. file://) and be

accessible on all nodes (e.g. by means

of a NFS share). You can use this

option multiple times for specifying

more than one URL. The protocol must

be supported by the {@link

java.net.URLClassLoader}.

-d,--detached If present, runs the job in detached

mode

-n,--allowNonRestoredState Allow to skip savepoint state that

cannot be restored. You need to allow

this if you removed an operator from

your program that was part of the

program when the savepoint was

triggered.

-p,--parallelism The parallelism with which to run the

program. Optional flag to override the

default value specified in the

configuration.

-py,--python Python script with the program entry

point. The dependent resources can be

configured with the `--pyFiles`

option.

-pyarch,--pyArchives Add python archive files for job. The

archive files will be extracted to the

working directory of python UDF

worker. Currently only zip-format is

supported. For each archive file, a

target directory be specified. If the

target directory name is specified,

the archive file will be extracted to

a name can directory with the

specified name. Otherwise, the archive

file will be extracted to a directory

with the same name of the archive

file. The files uploaded via this

option are accessible via relative

path. '#' could be used as the

separator of the archive file path and

the target directory name. Comma (',')

could be used as the separator to

specify multiple archive files. This

option can be used to upload the

virtual environment, the data files

used in Python UDF (e.g.: --pyArchives

file:///tmp/py37.zip,file:///tmp/data.

zip#data --pyExecutable

py37.zip/py37/bin/python). The data

files could be accessed in Python UDF,

e.g.: f = open('data/data.txt', 'r').

-pyexec,--pyExecutable Specify the path of the python

interpreter used to execute the python

UDF worker (e.g.: --pyExecutable

/usr/local/bin/python3). The python

UDF worker depends on Python 3.5+,

Apache Beam (version == 2.15.0), Pip

(version >= 7.1.0) and SetupTools

(version >= 37.0.0). Please ensure

that the specified environment meets

the above requirements.

-pyfs,--pyFiles Attach custom python files for job.

These files will be added to the

PYTHONPATH of both the local client

and the remote python UDF worker. The

standard python resource file suffixes

such as .py/.egg/.zip or directory are

all supported. Comma (',') could be

used as the separator to specify

multiple files (e.g.: --pyFiles

file:///tmp/myresource.zip,hdfs:///$na

menode_address/myresource2.zip).

-pym,--pyModule Python module with the program entry

point. This option must be used in

conjunction with `--pyFiles`.

-pyreq,--pyRequirements Specify a requirements.txt file which

defines the third-party dependencies.

These dependencies will be installed

and added to the PYTHONPATH of the

python UDF worker. A directory which

contains the installation packages of

these dependencies could be specified

optionally. Use '#' as the separator

if the optional parameter exists

(e.g.: --pyRequirements

file:///tmp/requirements.txt#file:///t

mp/cached_dir).

-s,--fromSavepoint Path to a savepoint to restore the job

from (for example

hdfs:///flink/savepoint-1537).

-sae,--shutdownOnAttachedExit If the job is submitted in attached

mode, perform a best-effort cluster

shutdown when the CLI is terminated

abruptly, e.g., in response to a user

interrupt, such as typing Ctrl + C.

Options for executor mode:

-D Generic configuration options for

execution/deployment and for the configured executor.

The available options can be found at

https://ci.apache.org/projects/flink/flink-docs-stabl

e/ops/config.html

-e,--executor The name of the executor to be used for executing the

given job, which is equivalent to the

"execution.target" config option. The currently

available executors are: "remote", "local",

"kubernetes-session", "yarn-per-job", "yarn-session".

Options for yarn-cluster mode:

-d,--detached If present, runs the job in detached

mode

-m,--jobmanager Address of the JobManager (master) to

which to connect. Use this flag to

connect to a different JobManager than

the one specified in the

configuration.

-yat,--yarnapplicationType Set a custom application type for the

application on YARN

-yD use value for given property

-yd,--yarndetached If present, runs the job in detached

mode (deprecated; use non-YARN

specific option instead)

-yh,--yarnhelp Help for the Yarn session CLI.

-yid,--yarnapplicationId Attach to running YARN session

-yj,--yarnjar Path to Flink jar file

-yjm,--yarnjobManagerMemory Memory for JobManager Container with

optional unit (default: MB)

-ynl,--yarnnodeLabel Specify YARN node label for the YARN

application

-ynm,--yarnname Set a custom name for the application

on YARN

-yq,--yarnquery Display available YARN resources

(memory, cores)

-yqu,--yarnqueue Specify YARN queue.

-ys,--yarnslots Number of slots per TaskManager

-yt,--yarnship Ship files in the specified directory

(t for transfer)

-ytm,--yarntaskManagerMemory Memory per TaskManager Container with

optional unit (default: MB)

-yz,--yarnzookeeperNamespace Namespace to create the Zookeeper

sub-paths for high availability mode

-z,--zookeeperNamespace Namespace to create the Zookeeper

sub-paths for high availability mode

Options for default mode:

-m,--jobmanager Address of the JobManager (master) to which

to connect. Use this flag to connect to a

different JobManager than the one specified

in the configuration.

-z,--zookeeperNamespace Namespace to create the Zookeeper sub-paths

for high availability mode

Yarn Single Job 方式 :

./flink run -m yarn-cluster -yn 2 ./***.jar

-yn 表示任务需要的 taskmanger 数量 (1.10 已经取消了)