tensorflow dataset基础之——dataset api的使用

目录

1. 使用from_tensor_slices生成datasets

2. repeat epoch & get batch

3. interleave

4. 用元组初始化一个dataset

5.用字典初始化一个dataset

6.实战之——通过filename得到datasets,并解析

6.1 将一系列文件中的内容读取出来,形成一个dataset(使用interleave api进行操作)

6.2.解析csv文件.

6.3 构造函数,通过filename得到datasets,并解析

7. 实战之——使用手动生成的训练集,验证集,测试集完成模型的训练和推理

1. 使用from_tensor_slices生成datasets

The simplest way to create a dataset is to create it from a python list or numpy array

import tensorflow as tf

import numpy as np

dataset = tf.data.Dataset.from_tensor_slices(np.arange(10))

print(dataset)2. repeat epoch & get batch

(1) repeat epoch.(每个epoch即遍历一次数据).

(2) get batch.

dataset = dataset.repeat(3).batch(7)

for item in dataset:

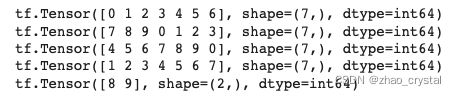

print(item)输出:

从上述的结果可以看到,数据总共遍历了3次,每个batch取7个样本

3. interleave

interleave: 对现有datasets中的每个元素做处理,产生新的结果,interleave将新的结果合并,并产生新的数据集

case:

存储一系列文件的文件名, 用interleave做变化, 遍历文件名数据集中的所有元素,把文件名对应的文件内容读取出来,

————>形成新的数据集合————>interleave把新的数据集合并。

# interleave

# case:文件 dataset ——> 具体数据集

# tf.data.Dataset.interleave() is a generalization of flat_map

# since flat_map produces the same output as tf.data.Dataset.interleave(cycle_length=1)

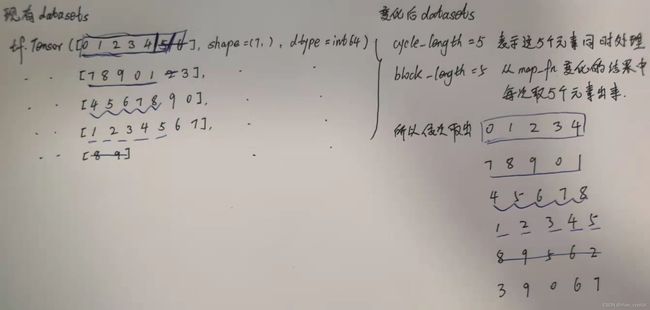

dataset2 = dataset.interleave(

lambda v: tf.data.Dataset.from_tensor_slices(v), # map_fn

cycle_length = 5, # 并行的,即同时处理datasets中的多少个元素

block_length = 5 # 从上面变换的结果中,每次取多少个元素出来

)

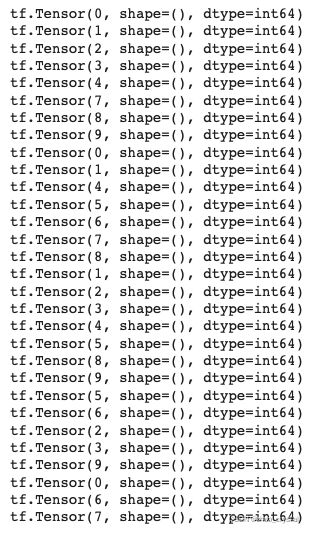

for item in dataset2:

print(item)输出:

输出为什么是这样呢?请看如下解释:

4. 用元组初始化一个dataset

用元组初始化一个datasets(将样本和标签绑定在一起)

x = np.array([[1, 2], [3, 4], [5, 6]])

y = np.array(['cat', 'dog', 'fox'])

dataset3 = tf.data.Dataset.from_tensor_slices((x, y))

print(dataset3)

for item_x, item_y in dataset3:

print(item_x, item_y)5.用字典初始化一个dataset

用字典初始化一个datasets(将样本的标签绑定在一起)

dataset4 = tf.data.Dataset.from_tensor_slices({"feature": x, "label": y})

for item in dataset4:

print(item["feature"].numpy(), item["label"].numpy())6.实战之——通过filename得到datasets,并解析

(1)filename ——>dataset.

(2) read_file ——> dataset ——> datasets ——> merge.

(3) parse csv.

import os

pwd = os.getcwd()

print(pwd)

file_list = os.listdir(pwd)

print(file_list)准备:利用california_housing的数据,得到 train_data, valid_data, test_data.

from tensorflow import keras

from sklearn.datasets import fetch_california_housing

from sklearn.model_selection import train_test_split

housing = fetch_california_housing()

x_train_all, x_test, y_train_all, y_test = train_test_split(

housing.data, housing.target, random_state = 7)

x_train, x_valid, y_train, y_valid = train_test_split(

x_train_all, y_train_all, random_state = 11)将train_data, valid_data, test_data 分成几份存到相应的文件夹中

output_dir = '/content/drive/MyDrive/data/generate_csv'

if not os.path.exists(output_dir):

os.mkdir(output_dir)

def save_to_csv(output_dir, data, name_prefix, header=None, n_parts=10):

output_dir = os.path.join(output_dir, name_prefix)

if not os.path.exists(output_dir):

os.mkdir(output_dir)

path_format = os.path.join(output_dir, "{}_{:02d}.csv")

filenames = []

data_result = np.array_split(np.arange(len(data)), n_parts)

for file_idx, row_indices in enumerate(data_result):

part_csv = path_format.format(name_prefix, file_idx)

filenames.append(part_csv)

with open(part_csv, "wt", encoding="utf-8") as f:

if header is not None:

f.write(header + "\n")

for row_index in row_indices:

f.write(",".join([repr(col) for col in data[row_index]]))

f.write('\n')

return filenamestrain_data = np.c_[x_train, y_train]

valid_data = np.c_[x_valid, y_valid]

test_data = np.c_[x_test, y_test]

header_cols = housing.feature_names + ["MidianHouseValue"]

header_str= ",".join(header_cols)

train_filenames = save_to_csv(output_dir, train_data, "train",

header_str, n_parts=20)

valid_filenames = save_to_csv(output_dir, valid_data, "valid",

header_str, n_parts=10)

test_filenames = save_to_csv(output_dir, test_data, "test",

header_str, n_parts=10)6.1 将一系列文件中的内容读取出来,形成一个dataset(使用interleave api进行操作)

# 1. filename ——> dataset

# 2. read file ——> dataset ——> datasets ——> merge

filename_dataset = tf.data.Dataset.list_files(train_filenames)

for filename in filename_dataset:

print(filename)n_readers = 5

dataset = filename_dataset.interleave(

lambda filename: tf.data.TextLineDataset(filename).skip(1), # skip(1) 表示跳过文件的第一行,这里的第一行为表头

cycle_length = n_readers

)

print(dataset)

for line in dataset.take(15): # dataset.take(15)表示只读取前面15个元素

print(line.numpy())6.2.解析csv文件.

parse csv 使用tf.io.decode_csv

sample_str = '1, 2, 3, 4, 5'

record_defaults = [tf.constant(0, dtype=tf.int32)] * 5

parsed_fields = tf.io.decode_csv(sample_str, record_defaults)

print(parsed_fields)record_defaults_1 = [

tf.constant(0, dtype=tf.int32),

0,

np.nan,

"hello",

tf.constant([])

]

parsed_fields_1 = tf.io.decode_csv(sample_str, record_defaults_1)

print(parsed_fields_1)解析dataset中的某一行特征

def parse_csv_line(line, n_fields):

defs = [tf.constant(np.nan)] * n_fields

parse_fields = tf.io.decode_csv(line, record_defaults=defs)

x = tf.stack(parse_fields[0:-1])

y = tf.stack(parse_fields[-1:])

return x, y

parse_csv_line(b'2.5885,28.0,6.267910447761194,1.3723880597014926,3470.0,2.58955223880597,33.84,-116.53,1.59', n_fields=9)6.3 构造函数,通过filename得到datasets,并解析

一个函数,实现如下3个功能

(1)filename ——>dataset.

(2) read_file ——> dataset ——> datasets ——> merge.

(3) parse csv.

import pprint

import functools

# 使用functools.partial,把一个函数的某些参数给固定住(当然,也可以简单设定parse_csv_line中,n_fields=9)

parse_csv_line_9 = functools.partial(parse_csv_line, n_fields = 9)

def csv_reader_dataset(filenames, n_readers=5, batch_size=32,

n_parse_threads=5, shuffle_buffer_size=10000):

filename_dataset = tf.data.Dataset.list_files(filenames)

filename_dataset = filename_dataset.repeat()

dataset = filename_dataset.interleave(

lambda filename: tf.data.TextLineDataset(filename).skip(1),

cycle_length = n_readers

)

dataset.shuffle(shuffle_buffer_size)

dataset = dataset.map(parse_csv_line_9,

num_parallel_calls = n_parse_threads)

dataset = dataset.batch(batch_size)

return dataset

train_set = csv_reader_dataset(train_filenames, batch_size=3)

for x_batch, y_batch in train_set.take(2):

print("x:")

pprint.pprint(x_batch)

print("y:")

pprint.pprint(y_batch)7. 实战之——使用手动生成的训练集,验证集,测试集完成模型的训练和推理

batch_size = 32

train_set = csv_reader_dataset(train_filenames, batch_size = batch_size)

valid_set = csv_reader_dataset(valid_filenames, batch_size = batch_size)

test_set = csv_reader_dataset(test_filenames, batch_size = batch_size)model = keras.models.Sequential([

keras.layers.Dense(30, activation='relu',

input_shape=x_train.shape[1:]),

keras.layers.Dense(15, activation='relu'),

keras.layers.Dense(1),

])

model.compile(loss=keras.losses.MeanSquaredError(),

optimizer=keras.optimizers.Adam(learning_rate=1e-3),

metrics=["accuracy"])

callbacks = [keras.callbacks.EarlyStopping(

patience=5, min_delta=1e-2)]

history = model.fit(train_set,

validation_data = valid_set,

steps_per_epoch = 11160 // batch_size, # 11160 为训练集的样本数

validation_steps = 3870 // batch_size, # 3870 为验证集的样本数

epochs = 100,

callbacks = callbacks)model.evaluate(test_set, steps = 5160 // batch_size) # 5160表示测试集的总样本的个数