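I wanted to train on a YOLO dataset with the GPU, but it kept using the CPU; after fixing the runtime environment there was still an error: Memory Management and PYTORCH_CUDA_ALLOC_CONF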

1. First, here is my computer's configuration: press Win+R to open cmd, enter dxdiag, and the DirectX Diagnostic Tool shows it.

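This only shows that the machine does have a graphics card installed. Setting up the Anaconda environment, creating the interpreter, and installing PyTorch are all covered by guides online, so I will not list those steps here.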

2. Run train.py and see what happens

C:\Users\admin\.conda\envs\yolov7_ch\python.exe C:\dev\yolov7\train.py

YOLOR v0.1-116-g8c0bf3f torch 1.13.1+cu116 CUDA:0 (NVIDIA GeForce RTX 3080, 10239.5MB)

Namespace(adam=False, artifact_alias='latest', batch_size=16, bbox_interval=-1, bucket='', cache_images=False, cfg='', data='data/coco.yaml', device='', entity=None, epochs=300, evolve=False, exist_ok=False, freeze=[0], global_rank=-1, hyp='data/hyp.scratch.p5.yaml', image_weights=False, img_size=[640, 640], label_smoothing=0.0, linear_lr=False, local_rank=-1, multi_scale=False, name='exp', noautoanchor=False, nosave=False, notest=False, project='runs/train', quad=False, rect=False, resume=False, save_dir='runs\\train\\exp27', save_period=-1, single_cls=False, sync_bn=False, total_batch_size=16, upload_dataset=False, v5_metric=False, weights='yolov7.pt', workers=1, world_size=1)

tensorboard: Start with 'tensorboard --logdir runs/train', view at http://localhost:6006/

hyperparameters: lr0=0.01, lrf=0.1, momentum=0.937, weight_decay=0.0005, warmup_epochs=3.0, warmup_momentum=0.8, warmup_bias_lr=0.1, box=0.05, cls=0.3, cls_pw=1.0, obj=0.7, obj_pw=1.0, iou_t=0.2, anchor_t=4.0, fl_gamma=0.0, hsv_h=0.015, hsv_s=0.7, hsv_v=0.4, degrees=0.0, translate=0.2, scale=0.9, shear=0.0, perspective=0.0, flipud=0.0, fliplr=0.5, mosaic=1.0, mixup=0.15, copy_paste=0.0, paste_in=0.15, loss_ota=1

wandb: Install Weights & Biases for YOLOR logging with 'pip install wandb' (recommended)

Overriding model.yaml nc=80 with nc=1

from n params module arguments

0 -1 1 928 models.common.Conv [3, 32, 3, 1]

1 -1 1 18560 models.common.Conv [32, 64, 3, 2]

2 -1 1 36992 models.common.Conv [64, 64, 3, 1]

3 -1 1 73984 models.common.Conv [64, 128, 3, 2]

4 -1 1 8320 models.common.Conv [128, 64, 1, 1]

5 -2 1 8320 models.common.Conv [128, 64, 1, 1]

6 -1 1 36992 models.common.Conv [64, 64, 3, 1]

7 -1 1 36992 models.common.Conv [64, 64, 3, 1]

8 -1 1 36992 models.common.Conv [64, 64, 3, 1]

9 -1 1 36992 models.common.Conv [64, 64, 3, 1]

10 [-1, -3, -5, -6] 1 0 models.common.Concat [1]

11 -1 1 66048 models.common.Conv [256, 256, 1, 1]

12 -1 1 0 models.common.MP []

13 -1 1 33024 models.common.Conv [256, 128, 1, 1]

14 -3 1 33024 models.common.Conv [256, 128, 1, 1]

15 -1 1 147712 models.common.Conv [128, 128, 3, 2]

16 [-1, -3] 1 0 models.common.Concat [1]

17 -1 1 33024 models.common.Conv [256, 128, 1, 1]

18 -2 1 33024 models.common.Conv [256, 128, 1, 1]

19 -1 1 147712 models.common.Conv [128, 128, 3, 1]

20 -1 1 147712 models.common.Conv [128, 128, 3, 1]

21 -1 1 147712 models.common.Conv [128, 128, 3, 1]

22 -1 1 147712 models.common.Conv [128, 128, 3, 1]

23 [-1, -3, -5, -6] 1 0 models.common.Concat [1]

24 -1 1 263168 models.common.Conv [512, 512, 1, 1]

25 -1 1 0 models.common.MP []

26 -1 1 131584 models.common.Conv [512, 256, 1, 1]

27 -3 1 131584 models.common.Conv [512, 256, 1, 1]

28 -1 1 590336 models.common.Conv [256, 256, 3, 2]

29 [-1, -3] 1 0 models.common.Concat [1]

30 -1 1 131584 models.common.Conv [512, 256, 1, 1]

31 -2 1 131584 models.common.Conv [512, 256, 1, 1]

32 -1 1 590336 models.common.Conv [256, 256, 3, 1]

33 -1 1 590336 models.common.Conv [256, 256, 3, 1]

34 -1 1 590336 models.common.Conv [256, 256, 3, 1]

35 -1 1 590336 models.common.Conv [256, 256, 3, 1]

36 [-1, -3, -5, -6] 1 0 models.common.Concat [1]

37 -1 1 1050624 models.common.Conv [1024, 1024, 1, 1]

38 -1 1 0 models.common.MP []

39 -1 1 525312 models.common.Conv [1024, 512, 1, 1]

40 -3 1 525312 models.common.Conv [1024, 512, 1, 1]

41 -1 1 2360320 models.common.Conv [512, 512, 3, 2]

42 [-1, -3] 1 0 models.common.Concat [1]

43 -1 1 262656 models.common.Conv [1024, 256, 1, 1]

44 -2 1 262656 models.common.Conv [1024, 256, 1, 1]

45 -1 1 590336 models.common.Conv [256, 256, 3, 1]

46 -1 1 590336 models.common.Conv [256, 256, 3, 1]

47 -1 1 590336 models.common.Conv [256, 256, 3, 1]

48 -1 1 590336 models.common.Conv [256, 256, 3, 1]

49 [-1, -3, -5, -6] 1 0 models.common.Concat [1]

50 -1 1 1050624 models.common.Conv [1024, 1024, 1, 1]

51 -1 1 7609344 models.common.SPPCSPC [1024, 512, 1]

52 -1 1 131584 models.common.Conv [512, 256, 1, 1]

53 -1 1 0 torch.nn.modules.upsampling.Upsample [None, 2, 'nearest']

54 37 1 262656 models.common.Conv [1024, 256, 1, 1]

55 [-1, -2] 1 0 models.common.Concat [1]

56 -1 1 131584 models.common.Conv [512, 256, 1, 1]

57 -2 1 131584 models.common.Conv [512, 256, 1, 1]

58 -1 1 295168 models.common.Conv [256, 128, 3, 1]

59 -1 1 147712 models.common.Conv [128, 128, 3, 1]

60 -1 1 147712 models.common.Conv [128, 128, 3, 1]

61 -1 1 147712 models.common.Conv [128, 128, 3, 1]

62[-1, -2, -3, -4, -5, -6] 1 0 models.common.Concat [1]

63 -1 1 262656 models.common.Conv [1024, 256, 1, 1]

64 -1 1 33024 models.common.Conv [256, 128, 1, 1]

65 -1 1 0 torch.nn.modules.upsampling.Upsample [None, 2, 'nearest']

66 24 1 65792 models.common.Conv [512, 128, 1, 1]

67 [-1, -2] 1 0 models.common.Concat [1]

68 -1 1 33024 models.common.Conv [256, 128, 1, 1]

69 -2 1 33024 models.common.Conv [256, 128, 1, 1]

70 -1 1 73856 models.common.Conv [128, 64, 3, 1]

71 -1 1 36992 models.common.Conv [64, 64, 3, 1]

72 -1 1 36992 models.common.Conv [64, 64, 3, 1]

73 -1 1 36992 models.common.Conv [64, 64, 3, 1]

74[-1, -2, -3, -4, -5, -6] 1 0 models.common.Concat [1]

75 -1 1 65792 models.common.Conv [512, 128, 1, 1]

76 -1 1 0 models.common.MP []

77 -1 1 16640 models.common.Conv [128, 128, 1, 1]

78 -3 1 16640 models.common.Conv [128, 128, 1, 1]

79 -1 1 147712 models.common.Conv [128, 128, 3, 2]

80 [-1, -3, 63] 1 0 models.common.Concat [1]

81 -1 1 131584 models.common.Conv [512, 256, 1, 1]

82 -2 1 131584 models.common.Conv [512, 256, 1, 1]

83 -1 1 295168 models.common.Conv [256, 128, 3, 1]

84 -1 1 147712 models.common.Conv [128, 128, 3, 1]

85 -1 1 147712 models.common.Conv [128, 128, 3, 1]

86 -1 1 147712 models.common.Conv [128, 128, 3, 1]

87[-1, -2, -3, -4, -5, -6] 1 0 models.common.Concat [1]

88 -1 1 262656 models.common.Conv [1024, 256, 1, 1]

89 -1 1 0 models.common.MP []

90 -1 1 66048 models.common.Conv [256, 256, 1, 1]

91 -3 1 66048 models.common.Conv [256, 256, 1, 1]

92 -1 1 590336 models.common.Conv [256, 256, 3, 2]

93 [-1, -3, 51] 1 0 models.common.Concat [1]

94 -1 1 525312 models.common.Conv [1024, 512, 1, 1]

95 -2 1 525312 models.common.Conv [1024, 512, 1, 1]

96 -1 1 1180160 models.common.Conv [512, 256, 3, 1]

97 -1 1 590336 models.common.Conv [256, 256, 3, 1]

98 -1 1 590336 models.common.Conv [256, 256, 3, 1]

99 -1 1 590336 models.common.Conv [256, 256, 3, 1]

100[-1, -2, -3, -4, -5, -6] 1 0 models.common.Concat [1]

101 -1 1 1049600 models.common.Conv [2048, 512, 1, 1]

102 75 1 328704 models.common.RepConv [128, 256, 3, 1]

103 88 1 1312768 models.common.RepConv [256, 512, 3, 1]

104 101 1 5246976 models.common.RepConv [512, 1024, 3, 1]

105 [102, 103, 104] 1 32310 models.yolo.Detect [1, [[12, 16, 19, 36, 40, 28], [36, 75, 76, 55, 72, 146], [142, 110, 192, 243, 459, 401]], [256, 512, 1024]]

Model Summary: 407 layers, 37194710 parameters, 37194710 gradients

Transferred 554/560 items from yolov7.pt

Scaled weight_decay = 0.0005

Optimizer groups: 95 .bias, 95 conv.weight, 92 other

train: Scanning 'C:\img\yolo\people\labels\train.cache' images and labels... 10000 found, 0 missing, 0 empty, 0 corrupted: 100%|██████████| 10000/10000 [00:00

train(hyp, opt, device, tb_writer)

File "C:\dev\yolov7\train.py", line 362, in train

pred = model(imgs) # forward

File "C:\Users\admin\.conda\envs\yolov7_ch\lib\site-packages\torch\nn\modules\module.py", line 1194, in _call_impl

return forward_call(*input, **kwargs)

File "C:\dev\yolov7\models\yolo.py", line 599, in forward

return self.forward_once(x, profile) # single-scale inference, train

File "C:\dev\yolov7\models\yolo.py", line 625, in forward_once

x = m(x) # run

File "C:\Users\admin\.conda\envs\yolov7_ch\lib\site-packages\torch\nn\modules\module.py", line 1194, in _call_impl

return forward_call(*input, **kwargs)

File "C:\dev\yolov7\models\common.py", line 507, in forward

return self.act(self.rbr_dense(inputs) + self.rbr_1x1(inputs) + id_out)

torch.cuda.OutOfMemoryError: CUDA out of memory. Tried to allocate 26.00 MiB (GPU 0; 10.00 GiB total capacity; 9.25 GiB already allocated; 0 bytes free; 9.30 GiB reserved in total by PyTorch) If reserved memory is >> allocated memory try setting max_split_size_mb to avoid fragmentation. See documentation for Memory Management and PYTORCH_CUDA_ALLOC_CONF

Process finished with exit code 1

At this point there is an error, but the log shows that the GPU was detected and is being used:

YOLOR v0.1-116-g8c0bf3f torch 1.13.1+cu116 CUDA:0 (NVIDIA GeForce RTX 3080, 10239.5MB)

torch.cuda.OutOfMemoryError: CUDA out of memory. Tried to allocate 26.00 MiB (GPU 0; 10.00 GiB total capacity; 9.25 GiB already allocated; 0 bytes free; 9.30 GiB reserved in total by PyTorch) If reserved memory is >> allocated memory try setting max_split_size_mb to avoid fragmentation. See documentation for Memory Management and PYTORCH_CUDA_ALLOC_CONF

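Is this problem related to GPU memory? Judging by the message, yes:

Error: CUDA is out of memory. PyTorch tried to allocate 26.00 MiB on GPU 0 (10.00 GiB total capacity), with 9.25 GiB already allocated, 0 bytes free, and 9.30 GiB reserved in total by PyTorch. If reserved memory is much larger than allocated memory, the message suggests setting max_split_size_mb to avoid fragmentation; see the PyTorch documentation on Memory Management and PYTORCH_CUDA_ALLOC_CONF.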
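To make the numbers in that message easier to compare, here is a minimal sketch (not part of the original troubleshooting) that parses the error text with the standard library only. The name parse_oom and the unit table are illustrative assumptions; only the message string comes from the log above.

```python
import re

# Error text copied from the traceback above.
OOM_MESSAGE = (
    "CUDA out of memory. Tried to allocate 26.00 MiB (GPU 0; 10.00 GiB total capacity; "
    "9.25 GiB already allocated; 0 bytes free; 9.30 GiB reserved in total by PyTorch)"
)

# Conversion factors from the units used in the message to MiB.
UNIT_TO_MIB = {"bytes": 1 / (1024 * 1024), "KiB": 1 / 1024, "MiB": 1.0, "GiB": 1024.0}

# One pattern per figure in the message (hypothetical helper, written for this message format).
PATTERNS = {
    "requested": r"Tried to allocate ([\d.]+) (\w+)",
    "total": r"([\d.]+) (\w+) total capacity",
    "allocated": r"([\d.]+) (\w+) already allocated",
    "free": r"([\d.]+) (\w+) free",
    "reserved": r"([\d.]+) (\w+) reserved",
}


def parse_oom(message):
    """Return the sizes named in the OOM message, converted to MiB."""
    sizes = {}
    for name, pattern in PATTERNS.items():
        match = re.search(pattern, message)
        if match is None:
            raise ValueError(f"could not find '{name}' in the message")
        value, unit = match.groups()
        sizes[name] = float(value) * UNIT_TO_MIB[unit]
    return sizes


sizes = parse_oom(OOM_MESSAGE)
# Gap between what PyTorch has reserved and what is actually allocated.
slack_mib = sizes["reserved"] - sizes["allocated"]
print(f"reserved - allocated = {slack_mib:.1f} MiB, requested = {sizes['requested']:.1f} MiB")
```

Here reserved memory exceeds allocated memory by only about 51 MiB, so by the message's own criterion fragmentation is unlikely to be the main cause; the 10 GiB card is simply close to full. In that situation a commonly tried remedy is lowering the batch_size or img_size values shown in the Namespace line, although this post does not record whether that was done.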

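3. Try adjusting the disk-backed virtual memory; it made little difference.

After changing the settings as shown in the screenshot, a restart is required. The change turned out not to help, and the same error was reported. That is consistent with the error itself: the page file extends system RAM, whereas the message is about memory on the GPU.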

4. Check which module packages are installed; this is the output of pip list:

(yolov7_ch) C:\dev\yolov7>pip list

Package Version

----------------------- ------------

absl-py 1.4.0

backcall 0.2.0

brotlipy 0.7.0

cachetools 5.3.0

certifi 2022.12.7

cffi 1.15.1

charset-normalizer 2.0.4

colorama 0.4.6

cryptography 38.0.4

cv 1.0.0

cycler 0.11.0

decorator 5.1.1

fonttools 4.38.0

google-auth 2.16.1

google-auth-oauthlib 0.4.6

grpcio 1.51.3

idna 3.4

importlib-metadata 6.0.0

ipython 7.31.1

jedi 0.18.1

kiwisolver 1.4.4

Markdown 3.4.1

MarkupSafe 2.1.2

matplotlib 3.5.3

matplotlib-inline 0.1.6

numpy 1.21.6

oauthlib 3.2.2

opencv-contrib-python 4.7.0.72

opencv-python 4.7.0.72

packaging 23.0

panda 0.3.1

pandas 1.3.5

parso 0.8.3

pickleshare 0.7.5

Pillow 9.3.0

pip 22.3.1

prompt-toolkit 3.0.36

protobuf 3.20.3

psutil 5.9.0

pyasn1 0.4.8

pyasn1-modules 0.2.8

pycparser 2.21

Pygments 2.11.2

pyOpenSSL 22.0.0

pyparsing 3.0.9

PySocks 1.7.1

python-dateutil 2.8.2

pytz 2022.7.1

PyYAML 6.0

requests 2.28.1

requests-oauthlib 1.3.1

rsa 4.9

scipy 1.7.3

seaborn 0.12.2

setuptools 65.6.3

six 1.16.0

tensorboard 2.11.2

tensorboard-data-server 0.6.1

tensorboard-plugin-wit 1.8.1

torch 1.13.1+cu116

torchaudio 0.13.1+cu116

torchvision 0.14.1+cu116

tqdm 4.64.1

traitlets 5.7.1

typing_extensions 4.5.0

urllib3 1.26.14

wcwidth 0.2.5

Werkzeug 2.2.3

wheel 0.38.4

win-inet-pton 1.1.0

wincertstore 0.2

zipp 3.14.0

(yolov7_ch) C:\dev\yolov7>
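One thing worth reading off this list is whether the three PyTorch packages were built against the same CUDA version. The sketch below is only an illustration using the version strings shown above; cuda_tag is a hypothetical helper, not a pip or PyTorch function.

```python
def cuda_tag(version):
    """Return the build label after '+', e.g. 'cu116', or None if there is none."""
    _, sep, local = version.partition("+")
    return local if sep else None


# Version strings copied from the pip list output above.
installed = {
    "torch": "1.13.1+cu116",
    "torchaudio": "0.13.1+cu116",
    "torchvision": "0.14.1+cu116",
}

tags = {name: cuda_tag(version) for name, version in installed.items()}
# Consistent means every package carries a build label and all labels are equal.
consistent = None not in tags.values() and len(set(tags.values())) == 1
print(tags, "consistent:", consistent)
```

All three packages carry the cu116 label here, which matches the torch 1.13.1+cu116 banner printed at the start of the training log.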

5. The output of conda list:

(yolov7_ch) C:\dev\yolov7>conda list

# packages in environment at C:\Users\admin\.conda\envs\yolov7_ch:

#

# Name Version Build Channel

absl-py 1.4.0 pypi_0 pypi

backcall 0.2.0 pyhd3eb1b0_0 https://mirrors.tuna.tsinghua.edu.cn/anaconda/pkgs/main

brotlipy 0.7.0 py37h2bbff1b_1003 https://mirrors.tuna.tsinghua.edu.cn/anaconda/pkgs/main

ca-certificates 2023.01.10 haa95532_0 https://mirrors.tuna.tsinghua.edu.cn/anaconda/pkgs/main

cachetools 5.3.0 pypi_0 pypi

certifi 2022.12.7 py37haa95532_0 https://mirrors.tuna.tsinghua.edu.cn/anaconda/pkgs/main

cffi 1.15.1 py37h2bbff1b_3 https://mirrors.tuna.tsinghua.edu.cn/anaconda/pkgs/main

charset-normalizer 2.0.4 pyhd3eb1b0_0 https://mirrors.tuna.tsinghua.edu.cn/anaconda/pkgs/main

colorama 0.4.6 py37haa95532_0 https://mirrors.tuna.tsinghua.edu.cn/anaconda/pkgs/main

cryptography 38.0.4 py37h21b164f_0 https://mirrors.tuna.tsinghua.edu.cn/anaconda/pkgs/main

cv 1.0.0 pypi_0 pypi

cycler 0.11.0 pypi_0 pypi

decorator 5.1.1 pyhd3eb1b0_0 https://mirrors.tuna.tsinghua.edu.cn/anaconda/pkgs/main

fonttools 4.38.0 pypi_0 pypi

freetype 2.12.1 ha860e81_0 https://mirrors.tuna.tsinghua.edu.cn/anaconda/pkgs/main

google-auth 2.16.1 pypi_0 pypi

google-auth-oauthlib 0.4.6 pypi_0 pypi

grpcio 1.51.3 pypi_0 pypi

idna 3.4 py37haa95532_0 https://mirrors.tuna.tsinghua.edu.cn/anaconda/pkgs/main

importlib-metadata 6.0.0 pypi_0 pypi

ipython 7.31.1 py37haa95532_1 https://mirrors.tuna.tsinghua.edu.cn/anaconda/pkgs/main

jedi 0.18.1 py37haa95532_1 https://mirrors.tuna.tsinghua.edu.cn/anaconda/pkgs/main

jpeg 9e h2bbff1b_0 https://mirrors.tuna.tsinghua.edu.cn/anaconda/pkgs/main

kiwisolver 1.4.4 pypi_0 pypi

lerc 3.0 hd77b12b_0 https://mirrors.tuna.tsinghua.edu.cn/anaconda/pkgs/main

libdeflate 1.8 h2bbff1b_5 https://mirrors.tuna.tsinghua.edu.cn/anaconda/pkgs/main

libpng 1.6.37 h2a8f88b_0 https://mirrors.tuna.tsinghua.edu.cn/anaconda/pkgs/main

libprotobuf 3.20.3 h23ce68f_0 https://mirrors.tuna.tsinghua.edu.cn/anaconda/pkgs/main

libtiff 4.5.0 h6c2663c_1 https://mirrors.tuna.tsinghua.edu.cn/anaconda/pkgs/main

libwebp 1.2.4 h2bbff1b_0 https://mirrors.tuna.tsinghua.edu.cn/anaconda/pkgs/main

libwebp-base 1.2.4 h2bbff1b_0 https://mirrors.tuna.tsinghua.edu.cn/anaconda/pkgs/main

lz4-c 1.9.4 h2bbff1b_0 https://mirrors.tuna.tsinghua.edu.cn/anaconda/pkgs/main

markdown 3.4.1 pypi_0 pypi

markupsafe 2.1.2 pypi_0 pypi

matplotlib 3.5.3 pypi_0 pypi

matplotlib-inline 0.1.6 py37haa95532_0 https://mirrors.tuna.tsinghua.edu.cn/anaconda/pkgs/main

numpy 1.21.6 pypi_0 pypi

oauthlib 3.2.2 pypi_0 pypi

opencv-contrib-python 4.7.0.72 pypi_0 pypi

opencv-python 4.7.0.72 pypi_0 pypi

openssl 1.1.1t h2bbff1b_0 https://mirrors.tuna.tsinghua.edu.cn/anaconda/pkgs/main

packaging 23.0 pypi_0 pypi

panda 0.3.1 pypi_0 pypi

pandas 1.3.5 pypi_0 pypi

parso 0.8.3 pyhd3eb1b0_0 https://mirrors.tuna.tsinghua.edu.cn/anaconda/pkgs/main

pickleshare 0.7.5 pyhd3eb1b0_1003 https://mirrors.tuna.tsinghua.edu.cn/anaconda/pkgs/main

pillow 9.3.0 py37hd77b12b_2 https://mirrors.tuna.tsinghua.edu.cn/anaconda/pkgs/main

pip 22.3.1 py37haa95532_0 https://mirrors.tuna.tsinghua.edu.cn/anaconda/pkgs/main

prompt-toolkit 3.0.36 py37haa95532_0 https://mirrors.tuna.tsinghua.edu.cn/anaconda/pkgs/main

protobuf 3.20.3 py37hd77b12b_0 https://mirrors.tuna.tsinghua.edu.cn/anaconda/pkgs/main

psutil 5.9.0 py37h2bbff1b_0 https://mirrors.tuna.tsinghua.edu.cn/anaconda/pkgs/main

pyasn1 0.4.8 pypi_0 pypi

pyasn1-modules 0.2.8 pypi_0 pypi

pycparser 2.21 pyhd3eb1b0_0 https://mirrors.tuna.tsinghua.edu.cn/anaconda/pkgs/main

pygments 2.11.2 pyhd3eb1b0_0 https://mirrors.tuna.tsinghua.edu.cn/anaconda/pkgs/main

pyopenssl 22.0.0 pyhd3eb1b0_0 https://mirrors.tuna.tsinghua.edu.cn/anaconda/pkgs/main

pyparsing 3.0.9 pypi_0 pypi

pysocks 1.7.1 py37_1 https://mirrors.tuna.tsinghua.edu.cn/anaconda/pkgs/main

python 3.7.16 h6244533_0 https://mirrors.tuna.tsinghua.edu.cn/anaconda/pkgs/main

python-dateutil 2.8.2 pypi_0 pypi

pytz 2022.7.1 pypi_0 pypi

pyyaml 6.0 py37h2bbff1b_1 https://mirrors.tuna.tsinghua.edu.cn/anaconda/pkgs/main

requests 2.28.1 py37haa95532_0 https://mirrors.tuna.tsinghua.edu.cn/anaconda/pkgs/main

requests-oauthlib 1.3.1 pypi_0 pypi

rsa 4.9 pypi_0 pypi

scipy 1.7.3 pypi_0 pypi

seaborn 0.12.2 pypi_0 pypi

setuptools 65.6.3 py37haa95532_0 https://mirrors.tuna.tsinghua.edu.cn/anaconda/pkgs/main

six 1.16.0 pypi_0 pypi

sqlite 3.40.1 h2bbff1b_0 https://mirrors.tuna.tsinghua.edu.cn/anaconda/pkgs/main

tensorboard 2.11.2 pypi_0 pypi

tensorboard-data-server 0.6.1 pypi_0 pypi

tensorboard-plugin-wit 1.8.1 pypi_0 pypi

tk 8.6.12 h2bbff1b_0 https://mirrors.tuna.tsinghua.edu.cn/anaconda/pkgs/main

torch 1.13.1+cu116 pypi_0 pypi

torchaudio 0.13.1+cu116 pypi_0 pypi

torchvision 0.14.1+cu116 pypi_0 pypi

tqdm 4.64.1 py37haa95532_0 https://mirrors.tuna.tsinghua.edu.cn/anaconda/pkgs/main

traitlets 5.7.1 py37haa95532_0 https://mirrors.tuna.tsinghua.edu.cn/anaconda/pkgs/main

typing-extensions 4.5.0 pypi_0 pypi

urllib3 1.26.14 py37haa95532_0 https://mirrors.tuna.tsinghua.edu.cn/anaconda/pkgs/main

vc 14.2 h21ff451_1 https://mirrors.tuna.tsinghua.edu.cn/anaconda/pkgs/main

vs2015_runtime 14.27.29016 h5e58377_2 https://mirrors.tuna.tsinghua.edu.cn/anaconda/pkgs/main

wcwidth 0.2.5 pyhd3eb1b0_0 https://mirrors.tuna.tsinghua.edu.cn/anaconda/pkgs/main

werkzeug 2.2.3 pypi_0 pypi

wheel 0.38.4 py37haa95532_0 https://mirrors.tuna.tsinghua.edu.cn/anaconda/pkgs/main

win_inet_pton 1.1.0 py37haa95532_0 https://mirrors.tuna.tsinghua.edu.cn/anaconda/pkgs/main

wincertstore 0.2 py37haa95532_2 https://mirrors.tuna.tsinghua.edu.cn/anaconda/pkgs/main

xz 5.2.10 h8cc25b3_1 https://mirrors.tuna.tsinghua.edu.cn/anaconda/pkgs/main

yaml 0.2.5 he774522_0 https://mirrors.tuna.tsinghua.edu.cn/anaconda/pkgs/main

zipp 3.14.0 pypi_0 pypi

zlib 1.2.13 h8cc25b3_0 https://mirrors.tuna.tsinghua.edu.cn/anaconda/pkgs/main

zstd 1.5.2 h19a0ad4_0 https://mirrors.tuna.tsinghua.edu.cn/anaconda/pkgs/main

(yolov7_ch) C:\dev\yolov7>

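While tuning, a new problem appeared at runtime. The fix suggested online is to set num_workers to 0. I did not know where that parameter lives, but the related setting is in the code below.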

if __name__ == '__main__':
    parser = argparse.ArgumentParser()
    # ......
    parser.add_argument('--workers', type=int, default=8, help='maximum number of dataloader workers')

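The default here is 8, and running train.py with it produces the error

'[Errno 32] Broken pipe'. This happens because the machine cannot keep up with that many dataloader workers.

I changed default=8 to default=0, but the run still failed with an error.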
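Editing the default in train.py is one way to change the value; argparse also allows the same option to be overridden on the command line. Below is a minimal standalone sketch that uses only the --workers line quoted above. build_parser is a hypothetical helper for the illustration, not a function from train.py.

```python
import argparse


def build_parser():
    """Build a parser containing only the --workers option quoted from train.py."""
    parser = argparse.ArgumentParser()
    parser.add_argument('--workers', type=int, default=8,
                        help='maximum number of dataloader workers')
    return parser


# No arguments: the default from add_argument is used.
default_opt = build_parser().parse_args([])
# Explicit override, as if '--workers 0' had been typed on the command line.
override_opt = build_parser().parse_args(['--workers', '0'])

print(default_opt.workers, override_opt.workers)
```

With the real script the equivalent would presumably be running train.py with --workers 0 appended, which leaves the source file untouched.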

Here is a test program: create train_test.py and run the code below, and at the same time use nvidia-smi to check whether it is running on the GPU, and on which one.

import torch
from torchvision.models import resnet152

if __name__ == '__main__':
    # Although cuda:0 is set here, the GPU actually used is number 1
    # is_available must be called: the bare function object is always truthy
    device = torch.device('cuda:0' if torch.cuda.is_available() else 'cpu')
    print(f'Current device: {device}')
    model = resnet152(num_classes=10)
    model.to(device)
    # Run 1000 forward passes through ResNet-152 with a batch size of 16
    for i in range(1000):
        X = torch.randn(16, 3, 224, 224).to(device)
        y = model(X)
        print(f'id:{i+1:3d}:{y}')

The program above confirmed that the code really does run on the GPU. The device set here is cuda:0.

I found the fix for the remaining error in this blog post:

YOLOv7 error: RuntimeError: indices should be either on cpu or on the same device as the indexed tensor (cpu), from Ggggm_28's blog on CSDN: https://blog.csdn.net/Ggggm_28/article/details/129005769

In yolov7/utils/loss.py, append .to(torch.device('cuda:0')) to the relevant expressions, as follows:

if (anchor_matching_gt > 1).sum() > 0:

_, cost_argmin = torch.min(cost[:, anchor_matching_gt > 1], dim=0)

matching_matrix[:, anchor_matching_gt > 1] *= 0.0

matching_matrix[cost_argmin, anchor_matching_gt > 1] = 1.0

fg_mask_inboxes = matching_matrix.sum(0) > 0.0

fg_mask_inboxes = fg_mask_inboxes.to(torch.device('cuda:0'))

matched_gt_inds = matching_matrix[:,

fg_mask_inboxes.to(torch.device('cuda:0'))].argmax(0)

from_which_layer = from_which_layer[fg_mask_inboxes.to(torch.device('cuda:0'))]

all_b = all_b[fg_mask_inboxes.to(torch.device('cuda:0'))]

all_a = all_a[fg_mask_inboxes.to(torch.device('cuda:0'))]

all_gj = all_gj[fg_mask_inboxes.to(torch.device('cuda:0'))]

all_gi = all_gi[fg_mask_inboxes.to(torch.device('cuda:0'))]

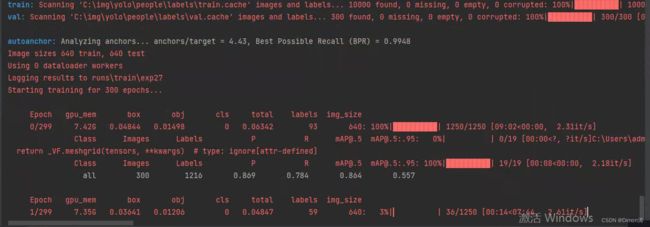

all_anch = all_anch[fg_mask_inboxes.to(torch.device('cuda:0'))]终于跑成功了,我用1万张样本数据,验证样本为300张,训练人形,看看效果怎么样。
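The edit to loss.py hard-codes cuda:0, which ties the file to one specific GPU. As a hedged alternative (not what this post or the linked blog applied), the mask can be moved to whatever device the indexed tensor already lives on, which satisfies the rule stated in the error message. The tensors below are tiny made-up stand-ins for the real variables, index_on_same_device is a hypothetical helper, and the sketch also runs on a CPU-only machine.

```python
import torch

# Made-up stand-ins for the real tensors in loss.py (2 ground truths x 3 anchors).
matching_matrix = torch.tensor([[1.0, 0.0, 0.0],
                                [0.0, 0.0, 1.0]])
all_b = torch.tensor([10, 11, 12])

# Boolean mask of anchors matched to at least one ground truth.
fg_mask_inboxes = matching_matrix.sum(0) > 0.0


def index_on_same_device(tensor, mask):
    """Index `tensor` with `mask` after moving the mask to the tensor's device."""
    return tensor[mask.to(tensor.device)]


# Column selection uses the same idea: the mask follows the indexed tensor.
matched_gt_inds = matching_matrix[:, fg_mask_inboxes.to(matching_matrix.device)].argmax(0)
all_b = index_on_same_device(all_b, fg_mask_inboxes)

print(matched_gt_inds.tolist(), all_b.tolist())
```

Because the target device is read from the tensor being indexed, the same lines work whether training runs on cuda:0, on another GPU, or on the CPU.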

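I spent a long time debugging all sorts of errors. Honestly, my own skills were not enough; some problems I looked up online or asked other people about. Version matching between modules matters a great deal when setting up the environment: the environment I first created with Python 3.9 kept failing with errors I could never resolve, whereas the one I recreated with Python 3.7 ran without problems after several attempts, and the issues that did come up were resolved one by one as described above.

I hope this serves as a useful reference for others on the same learning path.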