Building a computation graph with onnx.helper

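ONNX treats each layer of a network, that is, each operator, as a node, and uses these nodes to build a graph, which represents the whole network. An ONNX model can be generated with onnx.helper.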

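The steps are as follows:

Step 1: build the node list, creating each operator node with onnx.helper.make_node.

Step 2: build the initializer list, using onnx.helper.make_tensor to create the tensors (such as weights and biases) that initialize the operator nodes.

Step 3: build the input and output lists, creating each entry with onnx.helper.make_value_info.

Step 4: build the computation graph by passing the node list, the initializer list, and the input and output lists to onnx.helper.make_graph.

Step 5: build the model with onnx.helper.make_model.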

Note: together, the onnx.helper functions make_tensor, make_tensor_value_info, make_attribute, make_node, make_graph, and make_model are enough to build a complete ONNX model.

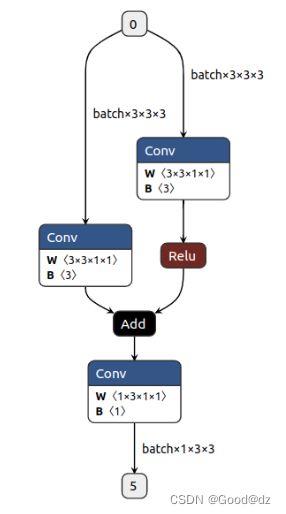

Example: build a simple model from the Conv, Relu, and Add operators; its structure is shown in the diagram.

The code is as follows:

    import torch
    import torch.nn as nn
    import onnx
    import onnx.helper as helper
    import numpy as np

    # Reference:
    # https://github.com/shouxieai/learning-cuda-trt/blob/main/tensorrt-basic-1.4-onnx-editor/create-onnx.py

    # Define the network structure
    class Model(nn.Module):
        def __init__(self):
            super().__init__()
            self.conv1 = nn.Conv2d(3, 3, 1, 1)
            self.relu1 = nn.ReLU()
            self.conv2 = nn.Conv2d(3, 1, 1, 1)
            self.conv_right = nn.Conv2d(3, 3, 1, 1)

        def forward(self, x):
            r = self.conv_right(x)
            x = self.conv1(x)
            x = self.relu1(x)
            x = self.conv2(x + r)
            return x

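    def hook_forward(fn):
        # Usage: @hook_forward("torch.nn.Conv2d.forward") replaces
        # torch.nn.Conv2d.forward with a wrapper that also calls the decorated function.
        fnnames = fn.split(".")
        fn_module = eval(".".join(fnnames[:-1]))
        fn_name = fnnames[-1]
        oldfn = getattr(fn_module, fn_name)

        def make_hook(bind_fn):
            ilayer = 0

            def myforward(self, x):
                global all_tensors
                nonlocal ilayer
                y = oldfn(self, x)
                bind_fn(self, ilayer, x, y)
                # Keep references so torch does not free and reuse these tensors
                # (id() values are only unique among live objects).
                all_tensors.extend([x, y])
                ilayer += 1
                return y

            setattr(fn_module, fn_name, myforward)
            # Return the callback so the decorated name is not rebound to None.
            return bind_fn

        return make_hook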

    @hook_forward("torch.nn.Conv2d.forward")
    def symbolic_conv2d(self, ilayer, x, y):
        print(f"{type(self)} -> Input {get_obj_idd(x)}, Output {get_obj_idd(y)}")

        inputs = [
            get_obj_idd(x),
            append_initializer(self.weight.data, f"conv{ilayer}.weight"),
            append_initializer(self.bias.data, f"conv{ilayer}.bias"),
        ]

        nodes.append(
            helper.make_node(
                "Conv", inputs, [get_obj_idd(y)], f"conv{ilayer}",
                kernel_shape=self.kernel_size,
                group=self.groups,
                # ONNX expects pads as [begin_h, begin_w, end_h, end_w].
                pads=list(self.padding) * 2,
                dilations=self.dilation,
                strides=self.stride,
            )
        )

    @hook_forward("torch.nn.ReLU.forward")
    def symbolic_relu(self, ilayer, x, y):
        print(f"{type(self)} -> Input {get_obj_idd(x)}, Output {get_obj_idd(y)}")

        nodes.append(
            helper.make_node(
                "Relu", [get_obj_idd(x)], [get_obj_idd(y)], f"relu{ilayer}"
            )
        )

    @hook_forward("torch.Tensor.__add__")
    def symbolic_add(a, ilayer, b, y):
        print(f"Add -> Input {get_obj_idd(a)} + {get_obj_idd(b)}, Output {get_obj_idd(y)}")

        nodes.append(
            helper.make_node(
                "Add", [get_obj_idd(a), get_obj_idd(b)], [get_obj_idd(y)], f"add{ilayer}"
            )
        )

    def append_initializer(value, name):
        initializers.append(
            helper.make_tensor(
                name=name,
                data_type=helper.TensorProto.DataType.FLOAT,
                dims=list(value.shape),
                vals=value.data.numpy().astype(np.float32).tobytes(),
                raw=True
            )
        )
        return name

    def get_obj_idd(obj):
        global objmap

        idd = id(obj)
        if idd not in objmap:
            objmap[idd] = str(len(objmap))
        return objmap[idd]

    all_tensors = []
    objmap = {}
    nodes = []
    initializers = []

    torch.manual_seed(31)
    x = torch.full((1, 3, 3, 3), 0.55)
    model = Model().eval()
    y = model(x)

    inputs = [
        helper.make_value_info(
            name="0",  # id that get_obj_idd assigned to the model input x
            type_proto=helper.make_tensor_type_proto(
                elem_type=helper.TensorProto.DataType.FLOAT,
                shape=["batch", x.size(1), x.size(2), x.size(3)]
            )
        )
    ]

    outputs = [
        helper.make_value_info(
            name="5",  # id that get_obj_idd assigned to the final output y
            type_proto=helper.make_tensor_type_proto(
                elem_type=helper.TensorProto.DataType.FLOAT,
                shape=["batch", y.size(1), y.size(2), y.size(3)]
            )
        )
    ]

    graph = helper.make_graph(
        name="mymodel",
        inputs=inputs,
        outputs=outputs,
        nodes=nodes,
        initializer=initializers
    )

    # If the domain name is not ai.onnx, Netron renders the model differently:
    # with any other name, every operator box is drawn in the same color.
    opset = [
        helper.make_operatorsetid("ai.onnx", 11)
    ]

    # The producer fields are set mainly to match what PyTorch would export.
    model = helper.make_model(graph, opset_imports=opset, producer_name="pytorch", producer_version="1.9")
    onnx.save_model(model, "custom.onnx")

    print(y)
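
The `hook_forward` decorator in the listing works by replacing a method on a class with a wrapper that calls the original method and then reports the input and output to a callback. A dependency-free sketch of that pattern follows. `Doubler`, `hook_method`, and `record` are hypothetical names introduced for illustration, and the class is passed directly instead of being resolved from a string with `eval`.

```python
calls = []  # records (call index, input, output) for every hooked call


class Doubler:
    """Hypothetical stand-in for a layer such as torch.nn.Conv2d."""

    def forward(self, x):
        return x * 2


def hook_method(cls, method_name):
    """Return a decorator that wraps cls.method_name and reports each call."""
    original = getattr(cls, method_name)

    def make_hook(callback):
        index = 0  # counts calls, like ilayer in the listing

        def wrapper(self, x):
            nonlocal index
            y = original(self, x)        # run the unmodified method
            callback(self, index, x, y)  # report input and output
            index += 1
            return y

        setattr(cls, method_name, wrapper)
        return callback

    return make_hook


@hook_method(Doubler, "forward")
def record(self, index, x, y):
    calls.append((index, x, y))


layer = Doubler()
result = layer.forward(3)
layer.forward(5)
print(result, calls)  # 6 [(0, 3, 6), (1, 5, 10)]
```

In the listing the callback appends an ONNX node instead of a tuple, so running the PyTorch model once records the whole graph in call order.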

Reference: https://github.com/shouxieai/learning-cuda-trt/blob/main/tensorrt-basic-1.4-onnx-editor/create-onnx.py