Contents

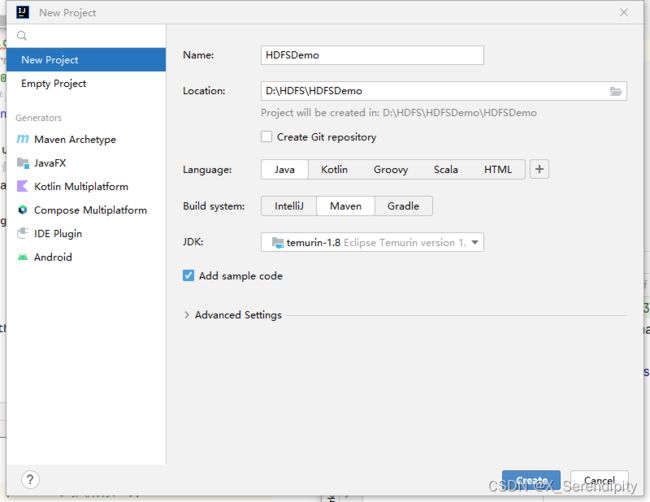

- I. Create a Maven Project

- II. Add Dependencies

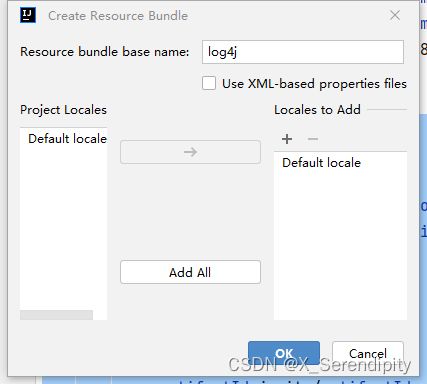

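- III. Create the Logging Properties File

- IV. Create a File on HDFS

- V. Write to an HDFS File

  - 1. Write data directly to an HDFS file

  - 2. Write a local file to an HDFS file

- VI. Read an HDFS File

  - 1. Read an HDFS file and print it to the console

  - 2. Read an HDFS file and save it as a local file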

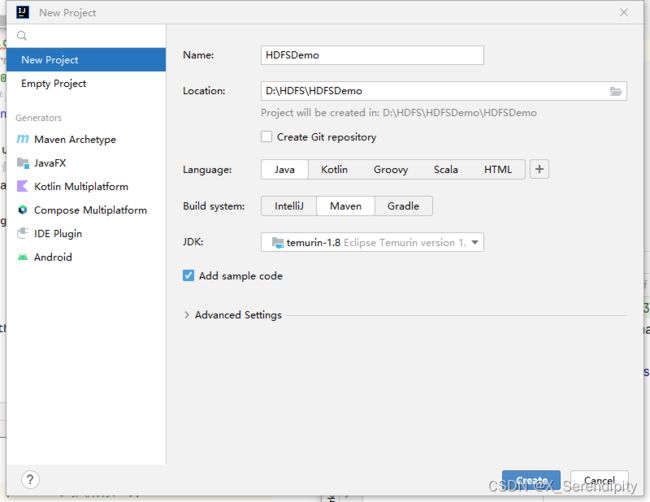

I. Create a Maven Project

II. Add Dependencies

- Add the hadoop and junit dependencies to the pom.xml file

    <dependencies>
        <!-- Hadoop client -->
        <dependency>
            <groupId>org.apache.hadoop</groupId>
            <artifactId>hadoop-client</artifactId>
            <version>3.3.4</version>
        </dependency>
        <!-- Unit testing framework -->
        <dependency>
            <groupId>junit</groupId>
            <artifactId>junit</artifactId>
            <version>4.13.2</version>
        </dependency>
    </dependencies>

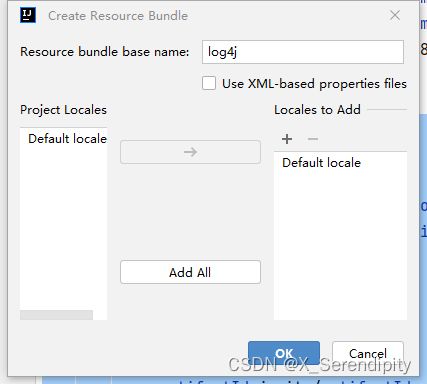

III. Create the Logging Properties File

- Create a log4j.properties file in the resources directory

- Code

    # Root logger: the log level comes first, followed by the appender names
    log4j.rootLogger=INFO, stdout, logfile
    log4j.appender.stdout=org.apache.log4j.ConsoleAppender
    log4j.appender.stdout.layout=org.apache.log4j.PatternLayout
    log4j.appender.stdout.layout.ConversionPattern=%d %p [%c] - %m%n
    log4j.appender.logfile=org.apache.log4j.FileAppender
    log4j.appender.logfile.File=target/hdfs.log
    log4j.appender.logfile.layout=org.apache.log4j.PatternLayout
    log4j.appender.logfile.layout.ConversionPattern=%d %p [%c] - %m%n

IV. Create a File on HDFS

- Create the file hadoop2.txt in the /ied01 directory

- Create the net.xxr.hdfs package, and create the CreateFileOnHDFS class in it

- Write the create1() method

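    package net.xxr.hdfs;

    import org.apache.hadoop.conf.Configuration;
    import org.apache.hadoop.fs.FileSystem;
    import org.apache.hadoop.fs.Path;
    import org.junit.Test;

    import java.net.URI;

    public class CreateFileOnHDFS {
        @Test // required so that JUnit can run this method
        public void create1() throws Exception {
            // Create the configuration object
            Configuration conf = new Configuration();
            // Address of the NameNode
            String uri = "hdfs://master:9000";
            // Obtain a file system handle for that address
            FileSystem fs = FileSystem.get(new URI(uri), conf);
            // Path of the file to create
            Path path = new Path(uri + "/ied01/hadoop2.txt");
            // Create an empty file; returns false if it could not be created
            boolean result = fs.createNewFile(path);
            if (result) {
                System.out.println("File [" + path + "] created successfully!");
            } else {
                System.out.println("File [" + path + "] could not be created!");
            }
        }
    }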
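To make the address handling above more concrete: `FileSystem.get(URI, conf)` picks the file system from the scheme and authority of the URI, and the listing builds the file's full location by appending an absolute path to that address. The stand-alone sketch below uses only `java.net.URI` to show what those pieces are. The class name `HdfsUriSketch` and the helper `fullUri` are hypothetical names introduced for illustration; the host `master`, port `9000`, and file path come from the listing.

```java
import java.net.URI;

// Stand-alone sketch: inspects the parts of the HDFS address used in the listing.
class HdfsUriSketch {
    // Joins the NameNode address and an absolute file path by plain
    // string concatenation, as the listing does.
    static URI fullUri(String nameNode, String filePath) {
        return URI.create(nameNode + filePath);
    }

    public static void main(String[] args) {
        URI u = fullUri("hdfs://master:9000", "/ied01/hadoop2.txt");
        System.out.println("scheme = " + u.getScheme()); // hdfs
        System.out.println("host   = " + u.getHost());   // master
        System.out.println("port   = " + u.getPort());   // 9000
        System.out.println("path   = " + u.getPath());   // /ied01/hadoop2.txt
    }
}
```

Because the scheme, host, and port are already fixed by the address passed to `FileSystem.get`, only the path component changes from one example to the next.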

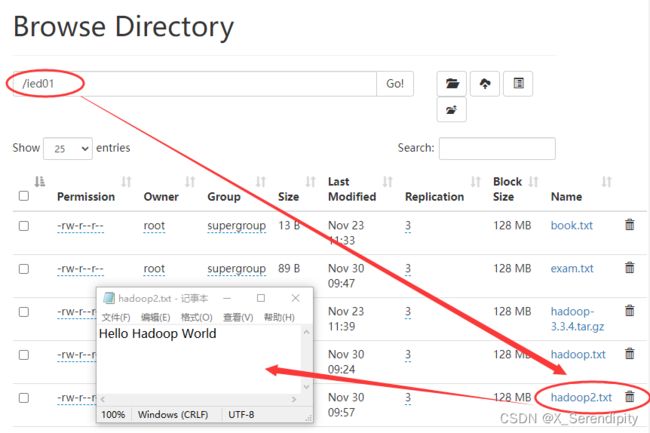

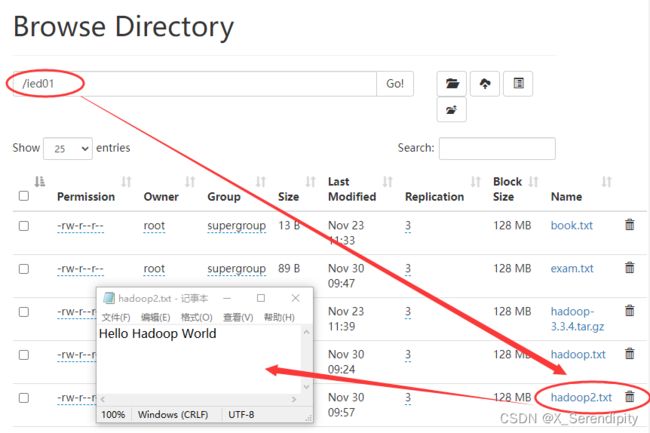

- Result

- View the file in the HDFS cluster Web UI

- Write the create2() method, which first checks whether the file already exists

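        @Test
        public void create2() throws Exception {
            // Create the configuration object
            Configuration conf = new Configuration();
            // Address of the NameNode
            String uri = "hdfs://master:9000";
            // Obtain a file system handle for that address
            FileSystem fs = FileSystem.get(new URI(uri), conf);
            // Path of the file to create
            Path path = new Path(uri + "/ied01/hadoop2.txt");
            // Only try to create the file when it does not exist yet
            if (fs.exists(path)) {
                System.out.println("File [" + path + "] already exists!");
            } else {
                boolean result = fs.createNewFile(path);
                if (result) {
                    System.out.println("File [" + path + "] created successfully!");
                } else {
                    System.out.println("File [" + path + "] could not be created!");
                }
            }
        }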

- Result
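The check-then-create flow of create2() can be tried without a cluster. The sketch below is a local analogue, not Hadoop code: it uses `java.io.File`, whose `createNewFile()` also reports an already existing file by returning false, much as the Javadoc of Hadoop's `FileSystem.createNewFile` describes. The explicit `exists()` check is what lets the two cases produce different messages. The class name `CreateIfAbsentSketch`, the helper `createIfAbsent`, and the status strings are hypothetical.

```java
import java.io.File;
import java.io.IOException;
import java.nio.file.Files;

// Local analogue of the exists-then-create flow, using java.io.File.
class CreateIfAbsentSketch {
    // Returns "exists", "created" or "failed" depending on what happened.
    static String createIfAbsent(File file) {
        if (file.exists()) {
            return "exists";
        }
        try {
            // createNewFile() returns false when the file already exists
            return file.createNewFile() ? "created" : "failed";
        } catch (IOException e) {
            return "failed";
        }
    }

    public static void main(String[] args) throws IOException {
        // A temporary directory stands in for /ied01 (an assumption of this sketch)
        File dir = Files.createTempDirectory("ied01").toFile();
        File file = new File(dir, "hadoop2.txt");
        System.out.println(createIfAbsent(file)); // first call creates the file
        System.out.println(createIfAbsent(file)); // second call finds it
        file.delete();
        dir.delete();
    }
}
```

Note that the check and the creation are two separate steps, so another client could create the file in between; for a tutorial run by a single user this does not matter.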

V. Write to an HDFS File

- Create the WriteFileOnHDFS class in the net.xxr.hdfs package

1. Write data directly to an HDFS file

    package net.xxr.hdfs;

    import org.apache.hadoop.conf.Configuration;
    import org.apache.hadoop.fs.FSDataOutputStream;
    import org.apache.hadoop.fs.FileSystem;
    import org.apache.hadoop.fs.Path;
    import org.junit.Test;

    import java.net.URI;

    public class WriteFileOnHDFS {
        @Test
        public void write1() throws Exception {
            // Create the configuration object
            Configuration conf = new Configuration();
            // Have the client connect to DataNodes by hostname
            conf.set("dfs.client.use.datanode.hostname", "true");
            // Address of the NameNode
            String uri = "hdfs://master:9000";
            // Obtain a file system handle, acting as user "root"
            FileSystem fs = FileSystem.get(new URI(uri), conf, "root");
            // Path of the file to write
            Path path = new Path(uri + "/ied01/hadoop2.txt");
            // Open an output stream to the file (overwrites existing content)
            FSDataOutputStream out = fs.create(path);
            // Write the data
            out.write("Hello Hadoop World".getBytes());
            // Release resources
            out.close();
            fs.close();
            System.out.println("File [" + path + "] written successfully");
        }
    }

- Result

- View the file in the HDFS cluster Web UI
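One detail of write1() that may matter once the text is not plain ASCII: `String.getBytes()` without an argument encodes with the platform default charset, so the bytes stored on HDFS can differ between machines. A minimal sketch of naming the charset explicitly follows. It is an optional variation, not part of the original listing, and the class name `ExplicitCharsetSketch` and its helpers are hypothetical.

```java
import java.nio.charset.StandardCharsets;

// Sketch: encode and decode text with an explicitly named charset.
class ExplicitCharsetSketch {
    // Encodes text as UTF-8 regardless of the platform default.
    static byte[] encode(String text) {
        return text.getBytes(StandardCharsets.UTF_8);
    }

    // Decodes UTF-8 bytes back into a string.
    static String decode(byte[] bytes) {
        return new String(bytes, StandardCharsets.UTF_8);
    }

    public static void main(String[] args) {
        // ASCII text: one byte per character
        System.out.println(encode("Hello Hadoop World").length); // 18
        // Two CJK characters (written as escapes): three bytes each in UTF-8
        System.out.println(encode("\u4e16\u754c").length);       // 6
    }
}
```

In write1() this would amount to passing `StandardCharsets.UTF_8` to `getBytes`, and using the same charset when the file is read back.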

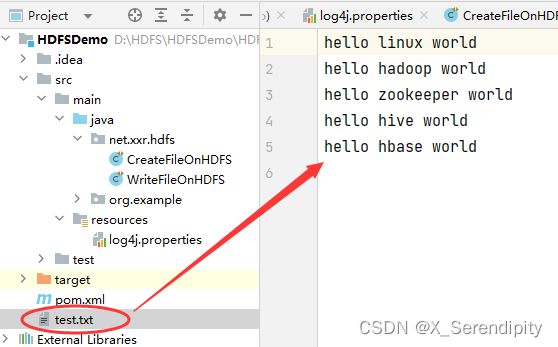

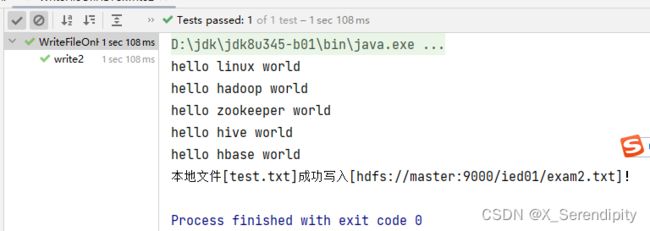

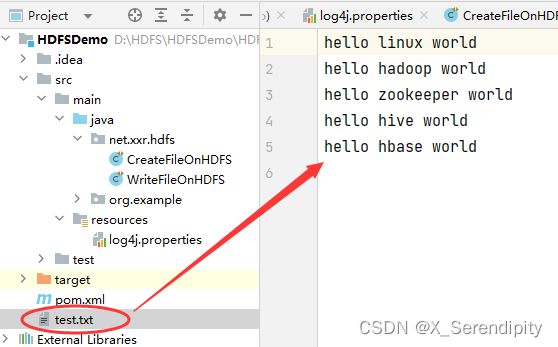

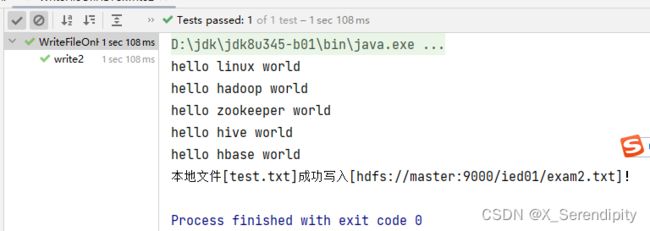

2. Write a local file to an HDFS file

- Create a text file named test.txt in the project root directory

- Write the write2() method

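        // Additional imports needed in WriteFileOnHDFS:
        // java.io.BufferedReader and java.io.FileReader
        @Test
        public void write2() throws Exception {
            // Create the configuration object
            Configuration conf = new Configuration();
            // Have the client connect to DataNodes by hostname
            conf.set("dfs.client.use.datanode.hostname", "true");
            // Address of the NameNode
            String uri = "hdfs://master:9000";
            // Obtain a file system handle, acting as user "root"
            FileSystem fs = FileSystem.get(new URI(uri), conf, "root");
            // Path of the target file on HDFS
            Path path = new Path(uri + "/ied01/exam2.txt");
            // Open an output stream to the HDFS file
            FSDataOutputStream out = fs.create(path);
            // Open the local file for line-by-line reading
            FileReader fr = new FileReader("test.txt");
            BufferedReader br = new BufferedReader(fr);
            String nextLine = "";
            while ((nextLine = br.readLine()) != null) {
                // Echo the line to the console
                System.out.println(nextLine);
                // readLine() strips the line terminator, so add it back
                out.write((nextLine + "\n").getBytes());
            }
            // Release resources
            out.close();
            br.close();
            fr.close();
            fs.close();
            System.out.println("Local file [test.txt] written to [" + path + "]!");
        }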

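- Result

- In effect, this method copies (uploads) a local file to HDFS. Is there a simpler way to do it? Yes: the utility class IOUtils can handle the stream copying

- Write the write2_() method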

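        // Additional imports needed in WriteFileOnHDFS:
        // java.io.FileInputStream and org.apache.hadoop.io.IOUtils
        @Test
        public void write2_() throws Exception {
            // Create the configuration object
            Configuration conf = new Configuration();
            // Have the client connect to DataNodes by hostname
            conf.set("dfs.client.use.datanode.hostname", "true");
            // Address of the NameNode
            String uri = "hdfs://master:9000";
            // Obtain a file system handle, acting as user "root"
            FileSystem fs = FileSystem.get(new URI(uri), conf, "root");
            // Path of the target file on HDFS
            Path path = new Path(uri + "/ied01/test2.txt");
            // Open an output stream to the HDFS file
            FSDataOutputStream out = fs.create(path);
            // Open an input stream on the local file
            FileInputStream in = new FileInputStream("test.txt");
            // Copy all bytes from the local file to the HDFS file
            IOUtils.copyBytes(in, out, conf);
            // Release resources
            in.close();
            out.close();
            fs.close();
            System.out.println("Local file [test.txt] written to [" + path + "]!");
        }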

- Result

- View the contents of /ied01/test2.txt
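Returning to the line-by-line approach of write2(): `BufferedReader.readLine()` returns each line without its line terminator, so a loop that writes only the returned string joins all lines together in the target file. The sketch below demonstrates the effect with in-memory streams, so it runs without a cluster. The class name `LineCopySketch` and the `keepBreaks` flag are hypothetical and exist only to show both behaviours side by side.

```java
import java.io.BufferedReader;
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.StringReader;
import java.io.UncheckedIOException;
import java.nio.charset.StandardCharsets;

// Sketch: line-by-line copy with and without restoring line breaks.
class LineCopySketch {
    // Copies text line by line; appends '\n' after each line only
    // when keepBreaks is true.
    static String copyLines(String text, boolean keepBreaks) {
        ByteArrayOutputStream out = new ByteArrayOutputStream();
        try (BufferedReader br = new BufferedReader(new StringReader(text))) {
            String line;
            while ((line = br.readLine()) != null) {
                out.write(line.getBytes(StandardCharsets.UTF_8));
                if (keepBreaks) {
                    out.write('\n');
                }
            }
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return new String(out.toByteArray(), StandardCharsets.UTF_8);
    }

    public static void main(String[] args) {
        String text = "line one\nline two\n";
        System.out.println(copyLines(text, false)); // line oneline two
        System.out.print(copyLines(text, true));    // two separate lines
    }
}
```

A byte-level copy such as the one in write2_() avoids the issue altogether, because it never splits the input into lines.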

VI. Read an HDFS File

- Create the ReadFileOnHDFS class in the net.xxr.hdfs package

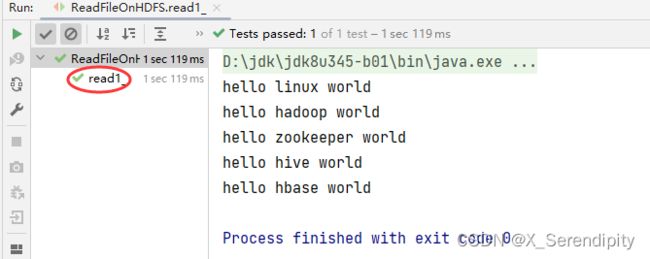

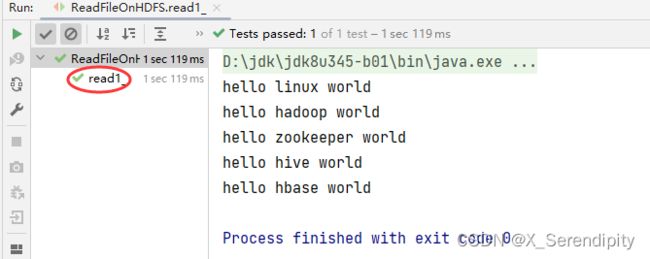

1. Read an HDFS file and print it to the console

    package net.xxr.hdfs;

    import org.apache.hadoop.conf.Configuration;
    import org.apache.hadoop.fs.FSDataInputStream;
    import org.apache.hadoop.fs.FileSystem;
    import org.apache.hadoop.fs.Path;
    import org.junit.Test;

    import java.io.BufferedReader;
    import java.io.InputStreamReader;
    import java.net.URI;

    public class ReadFileOnHDFS {
        @Test
        public void read1() throws Exception {
            // Create the configuration object
            Configuration conf = new Configuration();
            // Have the client connect to DataNodes by hostname
            conf.set("dfs.client.use.datanode.hostname", "true");
            // Address of the NameNode
            String uri = "hdfs://master:9000";
            // Obtain a file system handle, acting as user "root"
            FileSystem fs = FileSystem.get(new URI(uri), conf, "root");
            // Path of the file to read
            Path path = new Path(uri + "/ied01/test2.txt");
            // Open an input stream on the HDFS file
            FSDataInputStream in = fs.open(path);
            // Wrap it so the file can be read line by line
            BufferedReader br = new BufferedReader(new InputStreamReader(in));
            String nextLine = "";
            while ((nextLine = br.readLine()) != null) {
                // Print each line to the console
                System.out.println(nextLine);
            }
            // Release resources
            br.close();
            in.close();
            fs.close();
        }
    }

- Result

- Simplify the code with the IOUtils class

- Write the read1_() test method
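The listing for read1_() is not reproduced in this section. Presumably it replaces the readLine() loop of read1() with a single call such as `IOUtils.copyBytes(in, System.out, 4096, false)`; that four-argument overload exists in `org.apache.hadoop.io.IOUtils`, but whether the author used exactly this call is an assumption. The stand-alone sketch below mimics the idea with plain JDK streams so it can be run without a cluster. The class name `CopyToConsoleSketch` and the helper `copy` are hypothetical and are not Hadoop's implementation.

```java
import java.io.ByteArrayInputStream;
import java.io.IOException;
import java.io.InputStream;
import java.io.OutputStream;
import java.io.UncheckedIOException;
import java.nio.charset.StandardCharsets;

// Sketch: buffered stream copy with an optional close, in the spirit of
// a copyBytes(in, out, bufferSize, close) helper.
class CopyToConsoleSketch {
    // Copies all bytes from in to out through a fixed-size buffer and
    // returns the number of bytes copied. When close is false, both
    // streams stay open for the caller.
    static long copy(InputStream in, OutputStream out, int bufferSize, boolean close) {
        byte[] buffer = new byte[bufferSize];
        long total = 0;
        try {
            int n;
            while ((n = in.read(buffer)) != -1) {
                out.write(buffer, 0, n);
                total += n;
            }
            out.flush();
            if (close) {
                in.close();
                out.close();
            }
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return total;
    }

    public static void main(String[] args) {
        // An in-memory stream stands in for the HDFS input stream
        InputStream in = new ByteArrayInputStream(
                "Hello Hadoop World\n".getBytes(StandardCharsets.UTF_8));
        // close = false keeps System.out usable after the copy
        copy(in, System.out, 4096, false);
        System.out.println("console is still open");
    }
}
```

Passing false for the close flag matters when the target is the console: closing `System.out` would silence every later print statement in the same JVM.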

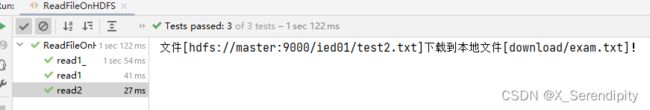

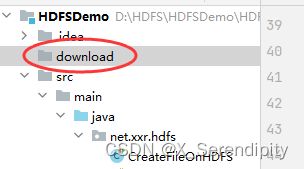

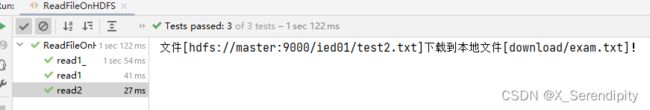

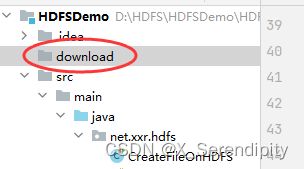

2. Read an HDFS file and save it as a local file

- Task: download /ied01/test2.txt into the download directory under the project

- Create the download directory

- Write the read2() method

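        // Additional imports needed in ReadFileOnHDFS:
        // java.io.FileOutputStream and org.apache.hadoop.io.IOUtils
        @Test
        public void read2() throws Exception {
            // Create the configuration object
            Configuration conf = new Configuration();
            // Have the client connect to DataNodes by hostname
            conf.set("dfs.client.use.datanode.hostname", "true");
            // Address of the NameNode
            String uri = "hdfs://master:9000";
            // Obtain a file system handle, acting as user "root"
            FileSystem fs = FileSystem.get(new URI(uri), conf, "root");
            // Path of the file to download
            Path path = new Path(uri + "/ied01/test2.txt");
            // Open an input stream on the HDFS file
            FSDataInputStream in = fs.open(path);
            // Open an output stream on the local target file
            FileOutputStream out = new FileOutputStream("download/exam.txt");
            // Copy all bytes from the HDFS file to the local file
            IOUtils.copyBytes(in, out, conf);
            // Release resources
            in.close();
            out.close();
            fs.close();
            System.out.println("File [" + path + "] downloaded to local file [download/exam.txt]!");
        }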

- Result
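The download step depends on the download directory already existing: `new FileOutputStream("download/exam.txt")` throws a FileNotFoundException when the parent directory is missing. As an optional alternative to creating the directory by hand, the target's parent directories can be created in code before the stream is opened. The following is a minimal local sketch of that idea; the class name `DownloadTargetSketch` and the helper `save` are hypothetical, and a byte array stands in for the data read from HDFS.

```java
import java.io.FileOutputStream;
import java.io.IOException;
import java.io.OutputStream;
import java.io.UncheckedIOException;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;

// Sketch: make sure the target directory exists before writing a file.
class DownloadTargetSketch {
    // Creates any missing parent directories, writes data to target,
    // and returns the target path.
    static Path save(Path target, byte[] data) {
        try {
            Path parent = target.toAbsolutePath().getParent();
            if (parent != null) {
                // No-op when the directory already exists
                Files.createDirectories(parent);
            }
            try (OutputStream out = new FileOutputStream(target.toFile())) {
                out.write(data);
            }
            return target;
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    public static void main(String[] args) throws IOException {
        // A temporary directory stands in for the project root
        Path root = Files.createTempDirectory("project");
        Path target = root.resolve("download").resolve("exam.txt");
        save(target, "Hello Hadoop World".getBytes(StandardCharsets.UTF_8));
        System.out.println(Files.exists(target)); // true
    }
}
```

In read2() the same effect could be had by calling `Files.createDirectories` on the download directory before constructing the FileOutputStream.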