1. Preface

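The previous post, "[mydocker]---网络虚拟设备veth bridge iptables" (virtual network devices: veth, bridge, iptables), tested several of the technologies that Docker networking relies on. This post builds on them to explain Docker's four network models.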

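The four container network modes:

bridge mode, host mode, container mode, and none mode.

The mode is selected with the --net option when starting a container; the default is bridge mode.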
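As a rough illustration of how --net selects the mode, the sketch below only builds a docker run command line for each of the four modes and prints it instead of running it. The helper name net_mode_cmd and the demo-* container names are made up for this example; busybox and top are the image and command used later in this post.

```shell
# net_mode_cmd NAME MODE: print (not run) a docker run command for a mode.
# MODE is one of: bridge, host, none, container:<name-or-id>.
# The function name and the demo-* names are hypothetical.
net_mode_cmd() {
    case $2 in
        bridge|host|none|container:?*)
            echo "docker run -d --name $1 --net $2 busybox top" ;;
        *)
            echo "unknown network mode: $2" >&2
            return 1 ;;
    esac
}

net_mode_cmd demo-bridge bridge
net_mode_cmd demo-host host
net_mode_cmd demo-none none
net_mode_cmd demo-shared container:demo-bridge
```

In container mode the new container joins the network namespace of an existing container, which is why the mode value carries that container's name or ID.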

1.1 Preparation

Create a bridge, br0, for the tests that follow.

root@nicktming:~# ifconfig

docker0 Link encap:Ethernet HWaddr 56:84:7a:fe:97:99

inet addr:172.17.42.1 Bcast:0.0.0.0 Mask:255.255.0.0

UP BROADCAST MULTICAST MTU:1500 Metric:1

RX packets:0 errors:0 dropped:0 overruns:0 frame:0

TX packets:0 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:0

RX bytes:0 (0.0 B) TX bytes:0 (0.0 B)

eth0 Link encap:Ethernet HWaddr 52:54:00:58:9a:c3

inet addr:172.19.16.7 Bcast:172.19.31.255 Mask:255.255.240.0

UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1

RX packets:8386208 errors:0 dropped:0 overruns:0 frame:0

TX packets:6894776 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:1000

RX bytes:4214874094 (4.2 GB) TX bytes:4314912989 (4.3 GB)

lo Link encap:Local Loopback

inet addr:127.0.0.1 Mask:255.0.0.0

UP LOOPBACK RUNNING MTU:65536 Metric:1

RX packets:58 errors:0 dropped:0 overruns:0 frame:0

TX packets:58 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:0

RX bytes:5452 (5.4 KB) TX bytes:5452 (5.4 KB)

// Create the bridge br0

root@nicktming:~# ip link add name br0 type bridge

root@nicktming:~# ip link set br0 up

root@nicktming:~# ip addr add 192.168.2.1/24 dev br0

root@nicktming:~# route -n

Kernel IP routing table

Destination Gateway Genmask Flags Metric Ref Use Iface

0.0.0.0 172.19.16.1 0.0.0.0 UG 0 0 0 eth0

172.17.0.0 0.0.0.0 255.255.0.0 U 0 0 0 docker0

172.19.16.0 0.0.0.0 255.255.240.0 U 0 0 0 eth0

192.168.2.0 0.0.0.0 255.255.255.0 U 0 0 0 br0

root@nicktming:~# ifconfig

br0 Link encap:Ethernet HWaddr 12:72:4e:b9:6d:f6

inet addr:192.168.2.1 Bcast:0.0.0.0 Mask:255.255.255.0

UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1

RX packets:0 errors:0 dropped:0 overruns:0 frame:0

TX packets:0 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:0

RX bytes:0 (0.0 B) TX bytes:0 (0.0 B)

docker0 Link encap:Ethernet HWaddr 56:84:7a:fe:97:99

inet addr:172.17.42.1 Bcast:0.0.0.0 Mask:255.255.0.0

UP BROADCAST MULTICAST MTU:1500 Metric:1

RX packets:0 errors:0 dropped:0 overruns:0 frame:0

TX packets:0 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:0

RX bytes:0 (0.0 B) TX bytes:0 (0.0 B)

eth0 Link encap:Ethernet HWaddr 52:54:00:58:9a:c3

inet addr:172.19.16.7 Bcast:172.19.31.255 Mask:255.255.240.0

UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1

RX packets:8399220 errors:0 dropped:0 overruns:0 frame:0

TX packets:6908349 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:1000

RX bytes:4216650910 (4.2 GB) TX bytes:4317543341 (4.3 GB)

lo Link encap:Local Loopback

inet addr:127.0.0.1 Mask:255.0.0.0

UP LOOPBACK RUNNING MTU:65536 Metric:1

RX packets:58 errors:0 dropped:0 overruns:0 frame:0

TX packets:58 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:0

RX bytes:5452 (5.4 KB) TX bytes:5452 (5.4 KB)

root@nicktming:~#

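Because docker is already installed, the host has a bridge named docker0 with address 172.17.42.1 and netmask 255.255.0.0, so its address in CIDR notation is 172.17.42.1/16 (the network is 172.17.0.0/16, as the routing table above shows).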
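The step from a dotted netmask to a CIDR prefix length can be sketched in plain shell. The function name mask_to_prefix is hypothetical, and the sketch assumes a POSIX sh or bash; it counts bits per octet and does not check that the mask bits are contiguous.

```shell
# mask_to_prefix NETMASK: print the CIDR prefix length of a dotted netmask.
# Hypothetical helper; accepts only valid mask octets, but does not verify
# that the octets form one contiguous run of 1 bits.
mask_to_prefix() {
    prefix=0
    old_ifs=$IFS
    IFS=.
    set -- $1          # split the mask into its four octets
    IFS=$old_ifs
    for octet in "$@"; do
        case $octet in
            255) prefix=$((prefix + 8)) ;;
            254) prefix=$((prefix + 7)) ;;
            252) prefix=$((prefix + 6)) ;;
            248) prefix=$((prefix + 5)) ;;
            240) prefix=$((prefix + 4)) ;;
            224) prefix=$((prefix + 3)) ;;
            192) prefix=$((prefix + 2)) ;;
            128) prefix=$((prefix + 1)) ;;
            0) ;;
            *) echo "invalid netmask octet: $octet" >&2; return 1 ;;
        esac
    done
    echo "$prefix"
}

mask_to_prefix 255.255.0.0      # docker0 mask, prints 16
mask_to_prefix 255.255.240.0    # eth0 mask, prints 20
```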

The host must also have ip_forward enabled: echo 1 > /proc/sys/net/ipv4/ip_forward.
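A small sketch of checking the setting before changing it. The function names are hypothetical; the optional file argument exists only so the check can be tried against a scratch file, and enabling forwarding requires root.

```shell
# ip_forward_enabled [FILE]: succeed if FILE contains exactly 1.
# FILE defaults to the kernel's ip_forward switch.
ip_forward_enabled() {
    file=${1:-/proc/sys/net/ipv4/ip_forward}
    [ "$(cat "$file" 2>/dev/null)" = "1" ]
}

# enable_ip_forward: turn forwarding on only if it is currently off.
# Needs root; the change does not survive a reboot.
enable_ip_forward() {
    if ip_forward_enabled; then
        echo "ip_forward already enabled"
    else
        sysctl -w net.ipv4.ip_forward=1
    fi
}
```

sysctl -w net.ipv4.ip_forward=1 has the same effect as the echo above; neither persists across reboots unless the setting is also written to the sysctl configuration.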

// Add an iptables rule

root@nicktming:~# iptables -t nat -vnL POSTROUTING

Chain POSTROUTING (policy ACCEPT 3404 packets, 208K bytes)

pkts bytes target prot opt in out source destination

7 474 MASQUERADE all -- * !docker0 172.17.0.0/16 0.0.0.0/0

root@nicktming:~# iptables -t nat -A POSTROUTING -s 192.168.2.0/24 -o eth0 -j MASQUERADE

root@nicktming:~# iptables -t nat -vnL POSTROUTING

Chain POSTROUTING (policy ACCEPT 1 packets, 60 bytes)

pkts bytes target prot opt in out source destination

7 474 MASQUERADE all -- * !docker0 172.17.0.0/16 0.0.0.0/0

0 0 MASQUERADE all -- * eth0 192.168.2.0/24 0.0.0.0/0

root@nicktming:~#
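Running the -A command twice appends the rule twice. A hedged sketch of an idempotent variant follows, using iptables -C to test whether the rule already exists. The function names are hypothetical, and ensure_masquerade needs root.

```shell
# masq_rule SUBNET OUT_IF: print the rule specification used in this post.
masq_rule() {
    echo "-s $1 -o $2 -j MASQUERADE"
}

# ensure_masquerade SUBNET OUT_IF: append the rule only if it is missing.
# Needs root. iptables -C exits non-zero when no matching rule exists.
ensure_masquerade() {
    rule=$(masq_rule "$1" "$2")
    # $rule is left unquoted on purpose so it splits into separate arguments.
    iptables -t nat -C POSTROUTING $rule 2>/dev/null ||
        iptables -t nat -A POSTROUTING $rule
}
```

For the bridge created above the call would be ensure_masquerade 192.168.2.0/24 eth0.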

2 bridge mode

2.1 Docker bridge model

Create two containers and test them.

root@nicktming:~# docker run -d --name container01 busybox top

3b5d2352935e6572268f4ad94525ad11300bf5760cae2613bbcd230482884631

root@nicktming:~# docker run -d --name container02 busybox top

6998224ba1cb16b29cc06d43ebd1f172916e26b4ecee6817340b5b4f0ec528fc

root@nicktming:~#

root@nicktming:~# docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

6998224ba1cb busybox:latest "top" 3 seconds ago Up 2 seconds container02

3b5d2352935e busybox:latest "top" 7 seconds ago Up 6 seconds container01

root@nicktming:~# docker exec container01 ifconfig

eth0 Link encap:Ethernet HWaddr 02:42:AC:11:00:03

inet addr:172.17.0.3 Bcast:0.0.0.0 Mask:255.255.0.0

inet6 addr: fe80::42:acff:fe11:3/64 Scope:Link

UP BROADCAST RUNNING MTU:1500 Metric:1

RX packets:8 errors:0 dropped:0 overruns:0 frame:0

TX packets:8 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:0

RX bytes:648 (648.0 B) TX bytes:648 (648.0 B)

lo Link encap:Local Loopback

inet addr:127.0.0.1 Mask:255.0.0.0

inet6 addr: ::1/128 Scope:Host

UP LOOPBACK RUNNING MTU:65536 Metric:1

RX packets:0 errors:0 dropped:0 overruns:0 frame:0

TX packets:0 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:0

RX bytes:0 (0.0 B) TX bytes:0 (0.0 B)

root@nicktming:~#

root@nicktming:~# docker exec container02 ifconfig

eth0 Link encap:Ethernet HWaddr 02:42:AC:11:00:04

inet addr:172.17.0.4 Bcast:0.0.0.0 Mask:255.255.0.0

inet6 addr: fe80::42:acff:fe11:4/64 Scope:Link

UP BROADCAST RUNNING MTU:1500 Metric:1

RX packets:2 errors:0 dropped:0 overruns:0 frame:0

TX packets:8 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:0

RX bytes:140 (140.0 B) TX bytes:648 (648.0 B)

lo Link encap:Local Loopback

inet addr:127.0.0.1 Mask:255.0.0.0

inet6 addr: ::1/128 Scope:Host

UP LOOPBACK RUNNING MTU:65536 Metric:1

RX packets:0 errors:0 dropped:0 overruns:0 frame:0

TX packets:0 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:0

RX bytes:0 (0.0 B) TX bytes:0 (0.0 B)

root@nicktming:~#

root@nicktming:~# ip link

1: lo: mtu 65536 qdisc noqueue state UNKNOWN mode DEFAULT group default

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

2: eth0: mtu 1500 qdisc pfifo_fast state UP mode DEFAULT group default qlen 1000

link/ether 52:54:00:58:9a:c3 brd ff:ff:ff:ff:ff:ff

3: docker0: mtu 1500 qdisc noqueue state UP mode DEFAULT group default

link/ether 56:84:7a:fe:97:99 brd ff:ff:ff:ff:ff:ff

14: br0: mtu 1500 qdisc noqueue state UNKNOWN mode DEFAULT group default

link/ether 12:72:4e:b9:6d:f6 brd ff:ff:ff:ff:ff:ff

20: veth7ab162a: mtu 1500 qdisc noqueue master docker0 state UP mode DEFAULT group default

link/ether ea:bb:fa:eb:23:72 brd ff:ff:ff:ff:ff:ff

22: veth24562cc: mtu 1500 qdisc noqueue master docker0 state UP mode DEFAULT group default

link/ether ce:cf:34:06:49:ef brd ff:ff:ff:ff:ff:ff

root@nicktming:~# brctl show

bridge name bridge id STP enabled interfaces

br0 8000.000000000000 no

docker0 8000.56847afe9799 no veth24562cc

veth7ab162a

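This creates two containers, container01 and container02. container01 has IP address 172.17.0.3 and container02 has 172.17.0.4; both are attached to docker0 through the veth devices listed above.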

bridge-mode-01.png

2.1.1 Accessing the containers from the host

root@nicktming:~# ping -c 1 172.17.0.4

PING 172.17.0.4 (172.17.0.4) 56(84) bytes of data.

64 bytes from 172.17.0.4: icmp_seq=1 ttl=64 time=0.058 ms

--- 172.17.0.4 ping statistics ---

1 packets transmitted, 1 received, 0% packet loss, time 0ms

rtt min/avg/max/mdev = 0.058/0.058/0.058/0.000 ms

root@nicktming:~# ping -c 1 172.17.0.3

PING 172.17.0.3 (172.17.0.3) 56(84) bytes of data.

64 bytes from 172.17.0.3: icmp_seq=1 ttl=64 time=0.050 ms

--- 172.17.0.3 ping statistics ---

1 packets transmitted, 1 received, 0% packet loss, time 0ms

rtt min/avg/max/mdev = 0.050/0.050/0.050/0.000 ms

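2.1.2 Accessing the outside from a container

"Outside" here covers other containers, the host, and Internet services.

root@nicktming:~# docker exec -it container01 sh

// The default gateway is the IP of the docker0 bridge on the host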

/ # route

Kernel IP routing table

Destination Gateway Genmask Flags Metric Ref Use Iface

default 172.17.42.1 0.0.0.0 UG 0 0 0 eth0

172.17.0.0 * 255.255.0.0 U 0 0 0 eth0

// This container's IP

/ # ip addr show dev eth0

19: eth0: mtu 1500 qdisc noqueue

link/ether 02:42:ac:11:00:03 brd ff:ff:ff:ff:ff:ff

inet 172.17.0.3/16 scope global eth0

valid_lft forever preferred_lft forever

inet6 fe80::42:acff:fe11:3/64 scope link

valid_lft forever preferred_lft forever

// Ping container02

/ # ping -c 1 172.17.0.4

PING 172.17.0.4 (172.17.0.4): 56 data bytes

64 bytes from 172.17.0.4: seq=0 ttl=64 time=0.081 ms

--- 172.17.0.4 ping statistics ---

1 packets transmitted, 1 packets received, 0% packet loss

round-trip min/avg/max = 0.081/0.081/0.081 ms

// Ping the container's own IP

/ # ping -c 1 172.17.0.3

PING 172.17.0.3 (172.17.0.3): 56 data bytes

64 bytes from 172.17.0.3: seq=0 ttl=64 time=0.053 ms

--- 172.17.0.3 ping statistics ---

1 packets transmitted, 1 packets received, 0% packet loss

round-trip min/avg/max = 0.053/0.053/0.053 ms

// Ping the container's loopback address

/ # ping -c 1 127.0.0.1

PING 127.0.0.1 (127.0.0.1): 56 data bytes

64 bytes from 127.0.0.1: seq=0 ttl=64 time=0.040 ms

--- 127.0.0.1 ping statistics ---

1 packets transmitted, 1 packets received, 0% packet loss

round-trip min/avg/max = 0.040/0.040/0.040 ms

// Ping the host's IP

/ # ping -c 1 172.19.16.7

PING 172.19.16.7 (172.19.16.7): 56 data bytes

64 bytes from 172.19.16.7: seq=0 ttl=64 time=0.053 ms

--- 172.19.16.7 ping statistics ---

1 packets transmitted, 1 packets received, 0% packet loss

round-trip min/avg/max = 0.053/0.053/0.053 ms

/ #

// Ping the IP of the host's docker0 bridge

/ # ping -c 1 172.17.42.1

PING 172.17.42.1 (172.17.42.1): 56 data bytes

64 bytes from 172.17.42.1: seq=0 ttl=64 time=0.046 ms

--- 172.17.42.1 ping statistics ---

1 packets transmitted, 1 packets received, 0% packet loss

round-trip min/avg/max = 0.046/0.046/0.046 ms

// Ping an Internet service

/ # ping -c 1 www.baidu.com

PING www.baidu.com (119.63.197.139): 56 data bytes

64 bytes from 119.63.197.139: seq=0 ttl=51 time=56.129 ms

--- www.baidu.com ping statistics ---

1 packets transmitted, 1 packets received, 0% packet loss

round-trip min/avg/max = 56.129/56.129/56.129 ms

/ # exit

root@nicktming:~#

Internet services are reachable because the host has ip_forward enabled and has a MASQUERADE rule for the 172.17.0.0/16 subnet.

root@nicktming:~# cat /proc/sys/net/ipv4/ip_forward

1

root@nicktming:~# iptables -t nat -vnL POSTROUTING --line

Chain POSTROUTING (policy ACCEPT 18 packets, 1130 bytes)

num pkts bytes target prot opt in out source destination

1 7 474 MASQUERADE all -- * !docker0 172.17.0.0/16 0.0.0.0/0
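The MASQUERADE rule is selected by source subnet, which is why the 192.168.2.0/24 subnet on br0 needed a rule of its own in section 1.1. The sketch below checks whether an address falls inside a subnet; the function names are hypothetical, and it assumes a shell whose arithmetic is wider than 32 bits (bash and dash on 64-bit Linux are).

```shell
# ip_to_int ADDR: print a dotted IPv4 address as one integer.
ip_to_int() {
    old_ifs=$IFS
    IFS=.
    set -- $1          # split into four octets
    IFS=$old_ifs
    echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
}

# in_cidr ADDR NET/BITS: succeed if ADDR lies inside the subnet.
in_cidr() {
    addr=$(ip_to_int "$1")
    net=$(ip_to_int "${2%/*}")
    bits=${2#*/}
    # Keep the top BITS bits of a 32-bit value.
    mask=$(( (4294967295 << (32 - bits)) & 4294967295 ))
    [ $(( addr & mask )) -eq $(( net & mask )) ]
}

in_cidr 172.17.0.3 172.17.0.0/16 && echo "172.17.0.3 matches the docker0 rule"
in_cidr 192.168.2.10 172.17.0.0/16 || echo "192.168.2.10 does not match it"
```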

2.2 Manual implementation

手动实现.png

2.2.1 Add network namespaces

root@nicktming:~# ip netns add ns1

root@nicktming:~# ip netns add ns2

root@nicktming:~# ip netns list

ns2

ns1

root@nicktming:~# route -n

Kernel IP routing table

Destination Gateway Genmask Flags Metric Ref Use Iface

0.0.0.0 172.19.16.1 0.0.0.0 UG 0 0 0 eth0

172.17.0.0 0.0.0.0 255.255.0.0 U 0 0 0 docker0

172.19.16.0 0.0.0.0 255.255.240.0 U 0 0 0 eth0

192.168.2.0 0.0.0.0 255.255.255.0 U 0 0 0 br0

// Create a veth pair

root@nicktming:~# ip link add veth0 type veth peer name veth1

// Move veth1 into the ns1 namespace

root@nicktming:~# ip link set veth1 netns ns1

// Attach veth0 to br0

root@nicktming:~# brctl addif br0 veth0

root@nicktming:~# bridge link

20: veth7ab162a state UP : mtu 1500 master docker0 state forwarding priority 32 cost 2

22: veth24562cc state UP : mtu 1500 master docker0 state forwarding priority 32 cost 2

26: veth0 state DOWN : mtu 1500 master br0 state disabled priority 32 cost 2

root@nicktming:~# ip link set veth0 up

root@nicktming:~# bridge link

20: veth7ab162a state UP : mtu 1500 master docker0 state forwarding priority 32 cost 2

22: veth24562cc state UP : mtu 1500 master docker0 state forwarding priority 32 cost 2

26: veth0 state UP : mtu 1500 master br0 state forwarding priority 32 cost 2

2.2.2 Configure ns1

root@nicktming:~# ip netns exec ns1 sh

# ifconfig

# route -n

Kernel IP routing table

Destination Gateway Genmask Flags Metric Ref Use Iface

# ip link

1: lo: mtu 65536 qdisc noop state DOWN mode DEFAULT group default

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

25: veth1: mtu 1500 qdisc noop state DOWN mode DEFAULT group default qlen 1000

link/ether ee:e2:da:93:d3:a5 brd ff:ff:ff:ff:ff:ff

# ip link set veth1 name eth0

# ip addr add 192.168.2.10/24 dev eth0

# ip link set eth0 up

# ip link set lo up

# route -n

Kernel IP routing table

Destination Gateway Genmask Flags Metric Ref Use Iface

192.168.2.0 0.0.0.0 255.255.255.0 U 0 0 0 eth0

# route add default gw 192.168.2.1

# route -n

Kernel IP routing table

Destination Gateway Genmask Flags Metric Ref Use Iface

0.0.0.0 192.168.2.1 0.0.0.0 UG 0 0 0 eth0

192.168.2.0 0.0.0.0 255.255.255.0 U 0 0 0 eth0

// Ping the bridge br0

# ping -c 1 192.168.2.1

PING 192.168.2.1 (192.168.2.1) 56(84) bytes of data.

64 bytes from 192.168.2.1: icmp_seq=1 ttl=64 time=0.039 ms

--- 192.168.2.1 ping statistics ---

1 packets transmitted, 1 received, 0% packet loss, time 0ms

rtt min/avg/max/mdev = 0.039/0.039/0.039/0.000 ms

// Ping the host's IP

# ping -c 1 172.19.16.7

PING 172.19.16.7 (172.19.16.7) 56(84) bytes of data.

64 bytes from 172.19.16.7: icmp_seq=1 ttl=64 time=0.051 ms

--- 172.19.16.7 ping statistics ---

1 packets transmitted, 1 received, 0% packet loss, time 0ms

rtt min/avg/max/mdev = 0.051/0.051/0.051/0.000 ms

#

// Ping an Internet service

# ping -c 1 www.baidu.com

PING www.wshifen.com (119.63.197.139) 56(84) bytes of data.

64 bytes from 119.63.197.139: icmp_seq=1 ttl=51 time=56.1 ms

--- www.wshifen.com ping statistics ---

1 packets transmitted, 1 received, 0% packet loss, time 0ms

rtt min/avg/max/mdev = 56.122/56.122/56.122/0.000 ms

# exit

root@nicktming:~#

2.2.3 Configure ns2

root@nicktming:~# ip link add veth2 type veth peer name veth3

root@nicktming:~# brctl addif br0 veth2

root@nicktming:~# ip link set veth2 up

root@nicktming:~# ip link set veth3 netns ns2

root@nicktming:~#

root@nicktming:~# ip netns exec ns2 sh

# ip link

1: lo: mtu 65536 qdisc noop state DOWN mode DEFAULT group default

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

27: veth3: mtu 1500 qdisc noop state DOWN mode DEFAULT group default qlen 1000

link/ether 6a:63:04:50:2d:04 brd ff:ff:ff:ff:ff:ff

# ip link set veth3 name eth0

# ip addr add 192.168.2.20/24 dev eth0

# ip link set eth0 up

# ip link set lo up

# route add default gw 192.168.2.1

# route -n

Kernel IP routing table

Destination Gateway Genmask Flags Metric Ref Use Iface

0.0.0.0 192.168.2.1 0.0.0.0 UG 0 0 0 eth0

192.168.2.0 0.0.0.0 255.255.255.0 U 0 0 0 eth0

# ifconfig

eth0 Link encap:Ethernet HWaddr 6a:63:04:50:2d:04

inet addr:192.168.2.20 Bcast:0.0.0.0 Mask:255.255.255.0

inet6 addr: fe80::6863:4ff:fe50:2d04/64 Scope:Link

UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1

RX packets:0 errors:0 dropped:0 overruns:0 frame:0

TX packets:8 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:1000

RX bytes:0 (0.0 B) TX bytes:648 (648.0 B)

lo Link encap:Local Loopback

inet addr:127.0.0.1 Mask:255.0.0.0

inet6 addr: ::1/128 Scope:Host

UP LOOPBACK RUNNING MTU:65536 Metric:1

RX packets:0 errors:0 dropped:0 overruns:0 frame:0

TX packets:0 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:0

RX bytes:0 (0.0 B) TX bytes:0 (0.0 B)

// Ping the bridge br0

# ping -c 1 192.168.2.1

PING 192.168.2.1 (192.168.2.1) 56(84) bytes of data.

64 bytes from 192.168.2.1: icmp_seq=1 ttl=64 time=0.057 ms

--- 192.168.2.1 ping statistics ---

1 packets transmitted, 1 received, 0% packet loss, time 0ms

rtt min/avg/max/mdev = 0.057/0.057/0.057/0.000 ms

// Ping the other namespace, ns1

# ping -c 1 192.168.2.10

PING 192.168.2.10 (192.168.2.10) 56(84) bytes of data.

64 bytes from 192.168.2.10: icmp_seq=1 ttl=64 time=0.061 ms

--- 192.168.2.10 ping statistics ---

1 packets transmitted, 1 received, 0% packet loss, time 0ms

rtt min/avg/max/mdev = 0.061/0.061/0.061/0.000 ms

// Ping this namespace's own IP (ns2)

# ping -c 1 192.168.2.20

PING 192.168.2.20 (192.168.2.20) 56(84) bytes of data.

64 bytes from 192.168.2.20: icmp_seq=1 ttl=64 time=0.032 ms

--- 192.168.2.20 ping statistics ---

1 packets transmitted, 1 received, 0% packet loss, time 0ms

rtt min/avg/max/mdev = 0.032/0.032/0.032/0.000 ms

// Ping the loopback address

# ping -c 1 127.0.0.1

PING 127.0.0.1 (127.0.0.1) 56(84) bytes of data.

64 bytes from 127.0.0.1: icmp_seq=1 ttl=64 time=0.026 ms

--- 127.0.0.1 ping statistics ---

1 packets transmitted, 1 received, 0% packet loss, time 0ms

rtt min/avg/max/mdev = 0.026/0.026/0.026/0.000 ms

// Ping the host's IP

# ping -c 1 172.19.16.7

PING 172.19.16.7 (172.19.16.7) 56(84) bytes of data.

64 bytes from 172.19.16.7: icmp_seq=1 ttl=64 time=0.039 ms

--- 172.19.16.7 ping statistics ---

1 packets transmitted, 1 received, 0% packet loss, time 0ms

rtt min/avg/max/mdev = 0.039/0.039/0.039/0.000 ms

// Ping an Internet service

# ping -c 1 www.baidu.com

PING www.wshifen.com (119.63.197.151) 56(84) bytes of data.

64 bytes from 119.63.197.151: icmp_seq=1 ttl=51 time=54.7 ms

--- www.wshifen.com ping statistics ---

1 packets transmitted, 1 received, 0% packet loss, time 0ms

rtt min/avg/max/mdev = 54.755/54.755/54.755/0.000 ms

# exit

root@nicktming:~#
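The steps used for ns1 and ns2 are the same apart from names and addresses, so they can be condensed into one function. This is a sketch, not the author's script: the names run and setup_ns are hypothetical, ip link set ... master is used in place of brctl addif, ip route add default via in place of route add default gw, and DRY_RUN=1 prints the commands instead of running them (running them needs root).

```shell
# run CMD...: execute a command, or only print it when DRY_RUN=1.
run() {
    if [ "${DRY_RUN:-0}" = "1" ]; then
        echo "$*"
    else
        "$@"
    fi
}

# setup_ns NS HOST_IF PEER_IF ADDR_CIDR GATEWAY BRIDGE
# Create a namespace and wire it to the bridge through a veth pair.
setup_ns() {
    ns=$1 host_if=$2 peer_if=$3 addr=$4 gw=$5 bridge=$6
    run ip netns add "$ns"
    run ip link add "$host_if" type veth peer name "$peer_if"
    run ip link set "$peer_if" netns "$ns"        # one end goes into the namespace
    run ip link set "$host_if" master "$bridge"   # the other end joins the bridge
    run ip link set "$host_if" up
    run ip netns exec "$ns" ip link set "$peer_if" name eth0
    run ip netns exec "$ns" ip addr add "$addr" dev eth0
    run ip netns exec "$ns" ip link set eth0 up
    run ip netns exec "$ns" ip link set lo up
    run ip netns exec "$ns" ip route add default via "$gw"
}
```

For example, DRY_RUN=1 followed by setup_ns ns3 veth4 veth5 192.168.2.30/24 192.168.2.1 br0 prints the ten commands for a third namespace; ns3, veth4, veth5 and 192.168.2.30 are illustrative values that do not appear elsewhere in this post.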

2.2.4 Accessing the two namespaces from the host

root@nicktming:~# ping -c 1 192.168.2.10

PING 192.168.2.10 (192.168.2.10) 56(84) bytes of data.

64 bytes from 192.168.2.10: icmp_seq=1 ttl=64 time=0.078 ms

--- 192.168.2.10 ping statistics ---

1 packets transmitted, 1 received, 0% packet loss, time 0ms

rtt min/avg/max/mdev = 0.078/0.078/0.078/0.000 ms

root@nicktming:~# ping -c 1 192.168.2.20

PING 192.168.2.20 (192.168.2.20) 56(84) bytes of data.

64 bytes from 192.168.2.20: icmp_seq=1 ttl=64 time=0.038 ms

--- 192.168.2.20 ping statistics ---

1 packets transmitted, 1 received, 0% packet loss, time 0ms

rtt min/avg/max/mdev = 0.038/0.038/0.038/0.000 ms

2.2.5 Accessing ns2 from ns1

Because ns2 was configured after ns1, access from ns2 to ns1 has already been verified above. Now check access from ns1 to ns2.

root@nicktming:~# ip netns exec ns1 ping -c 1 192.168.2.20

PING 192.168.2.20 (192.168.2.20) 56(84) bytes of data.

64 bytes from 192.168.2.20: icmp_seq=1 ttl=64 time=0.066 ms

--- 192.168.2.20 ping statistics ---

1 packets transmitted, 1 received, 0% packet loss, time 0ms

rtt min/avg/max/mdev = 0.066/0.066/0.066/0.000 ms

root@nicktming:~# ip netns exec ns1 ping -c 1 192.168.2.10

PING 192.168.2.10 (192.168.2.10) 56(84) bytes of data.

64 bytes from 192.168.2.10: icmp_seq=1 ttl=64 time=0.037 ms

--- 192.168.2.10 ping statistics ---

1 packets transmitted, 1 received, 0% packet loss, time 0ms

rtt min/avg/max/mdev = 0.037/0.037/0.037/0.000 ms

root@nicktming:~# ip netns exec ns1 ping -c 1 127.0.0.1

PING 127.0.0.1 (127.0.0.1) 56(84) bytes of data.

64 bytes from 127.0.0.1: icmp_seq=1 ttl=64 time=0.027 ms

--- 127.0.0.1 ping statistics ---

1 packets transmitted, 1 received, 0% packet loss, time 0ms

rtt min/avg/max/mdev = 0.027/0.027/0.027/0.000 ms

root@nicktming:~#
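To return the host to its earlier state after these experiments, the objects created in sections 1.1 and 2.2 can be removed again. The sketch below is an assumption about a reasonable teardown order, not something the original post ran; the names run and teardown_test_net are hypothetical, and DRY_RUN=1 prints the commands instead of executing them (executing needs root).

```shell
# run CMD...: execute a command, or only print it when DRY_RUN=1.
run() {
    if [ "${DRY_RUN:-0}" = "1" ]; then
        echo "$*"
    else
        "$@"
    fi
}

# teardown_test_net: remove the veth pairs, namespaces, bridge and NAT rule
# created in this post. Deleting one end of a veth pair removes its peer too.
teardown_test_net() {
    run ip link del veth0
    run ip link del veth2
    run ip netns del ns1
    run ip netns del ns2
    run ip link set br0 down
    run ip link del br0
    run iptables -t nat -D POSTROUTING -s 192.168.2.0/24 -o eth0 -j MASQUERADE
}
```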

3 host mode

3.1 Docker host model

3.1.1 Create a container in host mode

// The host's default network namespace

root@nicktming:~# echo $$

9493

root@nicktming:~# readlink /proc/9493/ns/net

net:[4026531956]

root@nicktming:~#

root@nicktming:~# docker run -d --name container03-host --net host busybox top

07d642f06fa0713a2f0be86d45c7a0e7cbfabbfde16892e56e3726bed8a0c1ab

root@nicktming:~#

root@nicktming:~# docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

07d642f06fa0 busybox:latest "top" 4 seconds ago Up 3 seconds container03-host

6998224ba1cb busybox:latest "top" 2 hours ago Up 2 hours container02

3b5d2352935e busybox:latest "top" 2 hours ago Up 2 hours container01

3.1.2 Accessing external networks

// The container is in the same network namespace as the host's default one,

// so container03-host was started without network isolation

root@nicktming:~# docker exec -it container03-host sh

/ # echo $$

9

/ # readlink /proc/9/ns/net

net:[4026531956]

// Same network view as the host

/ # ifconfig

br0 Link encap:Ethernet HWaddr 72:DB:A5:4A:F8:1D

inet addr:192.168.2.1 Bcast:0.0.0.0 Mask:255.255.255.0

UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1

RX packets:47 errors:0 dropped:0 overruns:0 frame:0

TX packets:42 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:0

RX bytes:2708 (2.6 KiB) TX bytes:3014 (2.9 KiB)

docker0 Link encap:Ethernet HWaddr 56:84:7A:FE:97:99

inet addr:172.17.42.1 Bcast:0.0.0.0 Mask:255.255.0.0

UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1

RX packets:41 errors:0 dropped:0 overruns:0 frame:0

TX packets:22 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:0

RX bytes:2482 (2.4 KiB) TX bytes:2123 (2.0 KiB)

eth0 Link encap:Ethernet HWaddr 52:54:00:58:9A:C3

inet addr:172.19.16.7 Bcast:172.19.31.255 Mask:255.255.240.0

UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1

RX packets:9364254 errors:0 dropped:0 overruns:0 frame:0

TX packets:7459224 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:1000

RX bytes:4992473581 (4.6 GiB) TX bytes:5021383698 (4.6 GiB)

lo Link encap:Local Loopback

inet addr:127.0.0.1 Mask:255.0.0.0

UP LOOPBACK RUNNING MTU:65536 Metric:1

RX packets:60 errors:0 dropped:0 overruns:0 frame:0

TX packets:60 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:0

RX bytes:5676 (5.5 KiB) TX bytes:5676 (5.5 KiB)

veth0 Link encap:Ethernet HWaddr F2:62:19:96:44:FE

UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1

RX packets:32 errors:0 dropped:0 overruns:0 frame:0

TX packets:36 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:1000

RX bytes:2236 (2.1 KiB) TX bytes:2645 (2.5 KiB)

veth2 Link encap:Ethernet HWaddr 72:DB:A5:4A:F8:1D

UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1

RX packets:26 errors:0 dropped:0 overruns:0 frame:0

TX packets:22 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:1000

RX bytes:1816 (1.7 KiB) TX bytes:1577 (1.5 KiB)

veth24562cc Link encap:Ethernet HWaddr CE:CF:34:06:49:EF

UP BROADCAST RUNNING MTU:1500 Metric:1

RX packets:13 errors:0 dropped:0 overruns:0 frame:0

TX packets:8 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:0

RX bytes:970 (970.0 B) TX bytes:504 (504.0 B)

veth7ab162a Link encap:Ethernet HWaddr EA:BB:FA:EB:23:72

UP BROADCAST RUNNING MTU:1500 Metric:1

RX packets:31 errors:0 dropped:0 overruns:0 frame:0

TX packets:32 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:0

RX bytes:2228 (2.1 KiB) TX bytes:2855 (2.7 KiB)

/ # route -n

Kernel IP routing table

Destination Gateway Genmask Flags Metric Ref Use Iface

0.0.0.0 172.19.16.1 0.0.0.0 UG 0 0 0 eth0

172.17.0.0 0.0.0.0 255.255.0.0 U 0 0 0 docker0

172.19.16.0 0.0.0.0 255.255.240.0 U 0 0 0 eth0

192.168.2.0 0.0.0.0 255.255.255.0 U 0 0 0 br0

/ # ping -c 1 192.168.2.1

PING 192.168.2.1 (192.168.2.1): 56 data bytes

64 bytes from 192.168.2.1: seq=0 ttl=64 time=0.042 ms

--- 192.168.2.1 ping statistics ---

1 packets transmitted, 1 packets received, 0% packet loss

round-trip min/avg/max = 0.042/0.042/0.042 ms

/ # ping -c 1 192.168.2.10

PING 192.168.2.10 (192.168.2.10): 56 data bytes

64 bytes from 192.168.2.10: seq=0 ttl=64 time=0.058 ms

--- 192.168.2.10 ping statistics ---

1 packets transmitted, 1 packets received, 0% packet loss

round-trip min/avg/max = 0.058/0.058/0.058 ms

/ # ping -c 1 192.168.2.20

PING 192.168.2.20 (192.168.2.20): 56 data bytes

64 bytes from 192.168.2.20: seq=0 ttl=64 time=0.051 ms

--- 192.168.2.20 ping statistics ---

1 packets transmitted, 1 packets received, 0% packet loss

round-trip min/avg/max = 0.051/0.051/0.051 ms

/ # ping -c 1 127.0.0.1

PING 127.0.0.1 (127.0.0.1): 56 data bytes

64 bytes from 127.0.0.1: seq=0 ttl=64 time=0.044 ms

--- 127.0.0.1 ping statistics ---

1 packets transmitted, 1 packets received, 0% packet loss

round-trip min/avg/max = 0.044/0.044/0.044 ms

/ # ping -c 1 172.17.42.1

PING 172.17.42.1 (172.17.42.1): 56 data bytes

64 bytes from 172.17.42.1: seq=0 ttl=64 time=0.044 ms

--- 172.17.42.1 ping statistics ---

1 packets transmitted, 1 packets received, 0% packet loss

round-trip min/avg/max = 0.044/0.044/0.044 ms

/ # ping -c 1 www.baidu.com

PING www.baidu.com (119.63.197.151): 56 data bytes

64 bytes from 119.63.197.151: seq=0 ttl=52 time=54.741 ms

--- www.baidu.com ping statistics ---

1 packets transmitted, 1 packets received, 0% packet loss

round-trip min/avg/max = 54.741/54.741/54.741 ms

/ #

/ # exit

root@nicktming:~#
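The comparison made above by eye (the same net:[4026531956] in both places) can be scripted. A minimal sketch, assuming a Linux /proc filesystem; the function names are hypothetical, and reading the namespace link of another user's process may require root.

```shell
# netns_of PID: print the network namespace identifier of a process.
netns_of() {
    readlink "/proc/$1/ns/net"
}

# same_netns PID_A PID_B: succeed if both processes share a network namespace.
# Returns 2 if either namespace link cannot be read.
same_netns() {
    a=$(netns_of "$1") || return 2
    b=$(netns_of "$2") || return 2
    [ -n "$a" ] && [ "$a" = "$b" ]
}

# A process trivially shares a namespace with itself.
same_netns "$$" "$$" && echo "same network namespace"
```

To compare the host shell with the container, the second argument could be the container's host-side PID, for instance the value printed by docker inspect -f '{{.State.Pid}}' container03-host.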

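3.2 Implementation with unshare

Host mode needs no network isolation, although container03-host is still isolated in other respects. unshare can create a process without network isolation: run without --net, as below, the new shell stays in the host's network namespace.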

root@nicktming:~# unshare sh

# ifconfig

br0 Link encap:Ethernet HWaddr 72:db:a5:4a:f8:1d

inet addr:192.168.2.1 Bcast:0.0.0.0 Mask:255.255.255.0

UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1

RX packets:53 errors:0 dropped:0 overruns:0 frame:0

TX packets:48 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:0

RX bytes:2988 (2.9 KB) TX bytes:3378 (3.3 KB)

docker0 Link encap:Ethernet HWaddr 56:84:7a:fe:97:99

inet addr:172.17.42.1 Bcast:0.0.0.0 Mask:255.255.0.0

UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1

RX packets:41 errors:0 dropped:0 overruns:0 frame:0

TX packets:22 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:0

RX bytes:2482 (2.4 KB) TX bytes:2123 (2.1 KB)

eth0 Link encap:Ethernet HWaddr 52:54:00:58:9a:c3

inet addr:172.19.16.7 Bcast:172.19.31.255 Mask:255.255.240.0

UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1

RX packets:9375579 errors:0 dropped:0 overruns:0 frame:0

TX packets:7470707 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:1000

RX bytes:4995739054 (4.9 GB) TX bytes:5025230121 (5.0 GB)

lo Link encap:Local Loopback

inet addr:127.0.0.1 Mask:255.0.0.0

UP LOOPBACK RUNNING MTU:65536 Metric:1

RX packets:66 errors:0 dropped:0 overruns:0 frame:0

TX packets:66 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:0

RX bytes:6180 (6.1 KB) TX bytes:6180 (6.1 KB)

veth0 Link encap:Ethernet HWaddr f2:62:19:96:44:fe

UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1

RX packets:35 errors:0 dropped:0 overruns:0 frame:0

TX packets:40 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:1000

RX bytes:2418 (2.4 KB) TX bytes:2925 (2.9 KB)

veth2 Link encap:Ethernet HWaddr 72:db:a5:4a:f8:1d

UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1

RX packets:29 errors:0 dropped:0 overruns:0 frame:0

TX packets:26 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:1000

RX bytes:1998 (1.9 KB) TX bytes:1857 (1.8 KB)

veth24562cc Link encap:Ethernet HWaddr ce:cf:34:06:49:ef

UP BROADCAST RUNNING MTU:1500 Metric:1

RX packets:13 errors:0 dropped:0 overruns:0 frame:0

TX packets:8 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:0

RX bytes:970 (970.0 B) TX bytes:504 (504.0 B)

veth7ab162a Link encap:Ethernet HWaddr ea:bb:fa:eb:23:72

UP BROADCAST RUNNING MTU:1500 Metric:1

RX packets:31 errors:0 dropped:0 overruns:0 frame:0

TX packets:32 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:0

RX bytes:2228 (2.2 KB) TX bytes:2855 (2.8 KB)

# route -n

Kernel IP routing table

Destination Gateway Genmask Flags Metric Ref Use Iface

0.0.0.0 172.19.16.1 0.0.0.0 UG 0 0 0 eth0

172.17.0.0 0.0.0.0 255.255.0.0 U 0 0 0 docker0

172.19.16.0 0.0.0.0 255.255.240.0 U 0 0 0 eth0

192.168.2.0 0.0.0.0 255.255.255.0 U 0 0 0 br0

# exit

root@nicktming:~#
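The unshare sh call above requests no new namespaces at all. To get closer to a host-mode container, which is isolated in other respects, namespace flags other than --net can be added. The sketch below only prints such a command; the function name host_mode_cmd is hypothetical, and the particular set of flags is an assumption chosen for illustration, not a statement of exactly what Docker does.

```shell
# host_mode_cmd PROGRAM...: print an unshare command that requests new mount,
# UTS, IPC and PID namespaces but deliberately omits --net, so the program
# would keep the host's network namespace.
# --fork makes unshare fork so the program becomes PID 1 of the new PID namespace.
host_mode_cmd() {
    echo "unshare --mount --uts --ipc --pid --fork $*"
}

host_mode_cmd sh
```

Running the printed command needs root. Inside the resulting shell, ifconfig and route -n should still show the host's interfaces and routes, as in the transcript above.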

4. References

1. https://blog.csdn.net/csdn066/article/details/77165269

2. https://blog.csdn.net/xbw_linux123/article/details/81873490

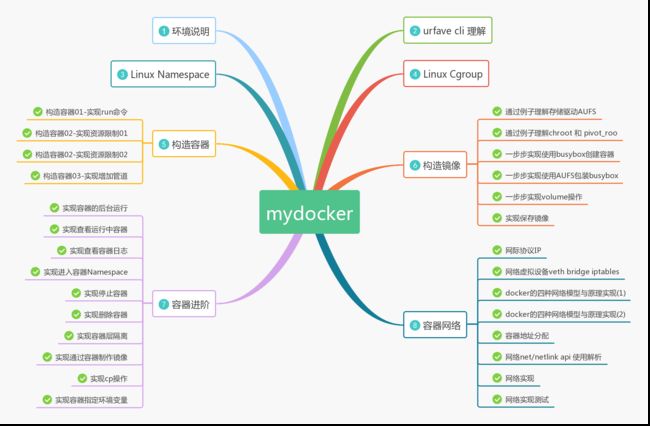

5. Full series

mydocker.png

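1. [mydocker]---Environment setup

2. [mydocker]---Understanding urfave cli

3. [mydocker]---Linux Namespace

4. [mydocker]---Linux Cgroup

5. [mydocker]---Building a container 01: implementing the run command

6. [mydocker]---Building a container 02: implementing resource limits 01

7. [mydocker]---Building a container 02: implementing resource limits 02

8. [mydocker]---Building a container 03: adding a pipe

9. [mydocker]---Understanding the AUFS storage driver by example

10. [mydocker]---Understanding chroot and pivot_root by example

11. [mydocker]---Creating a container with busybox step by step

12. [mydocker]---Wrapping busybox with AUFS step by step

13. [mydocker]---Implementing volume operations step by step

14. [mydocker]---Implementing image saving

15. [mydocker]---Running containers in the background

16. [mydocker]---Listing running containers

17. [mydocker]---Viewing container logs

18. [mydocker]---Entering a container's namespaces

19. [mydocker]---Stopping a container

20. [mydocker]---Removing a container

21. [mydocker]---Container layer isolation

22. [mydocker]---Building an image from a container

23. [mydocker]---Implementing the cp operation

24. [mydocker]---Setting container environment variables

25. [mydocker]---The Internet Protocol (IP)

26. [mydocker]---Virtual network devices: veth, bridge, iptables

27. [mydocker]---Docker's four network models and how they work (1)

28. [mydocker]---Docker's four network models and how they work (2)

29. [mydocker]---Container address allocation

30. [mydocker]---Using the net/netlink API for networking

31. [mydocker]---Network implementation

32. [mydocker]---Testing the network implementation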