Moving Fast With High Code Quality - Engineering a

Moving Fast With High Code Quality - Engineering at Quora

A high-quality codebase boosts the long-term development speed by making iteration, collaboration, and maintenance easier. At Quora, we take the quality of our codebase seriously.

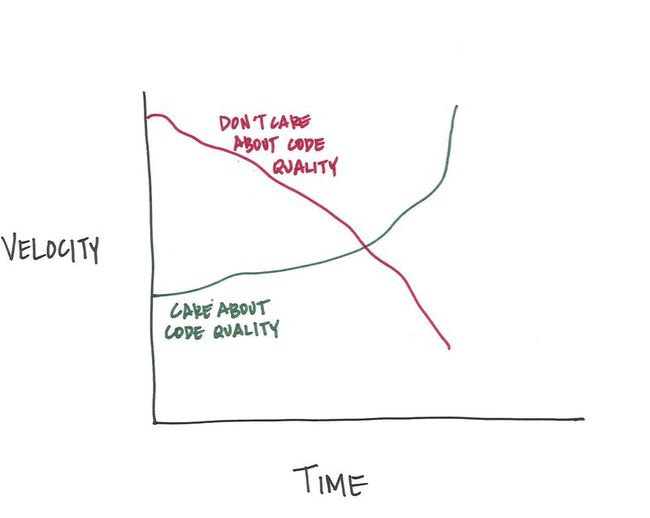

But despite the benefits, maintaining high code quality creates a non-trivial overhead and consumes real development cycles. Many people find it difficult to trade-off this overhead with the benefits and assume you have a binary choice: either move quickly with low code quality or move slowly with high code quality. Since startups optimize for a fast development cycle, people assume you have to do so with low code quality.

We have been able to break this dichotomy by devising tools and processes that enable us to move fast while maintaining a high-quality codebase. In this post, we are going to describe our approach on code quality and some concrete practices which enable us to make the best of both worlds.

Goal of Maintaining Code Quality

The main benefit of maintaining high code quality is the long-term boost to development speed, so this is what we optimize for and focus on. We just need to trade-off those long-term gains with the short-term costs of writing cleaner code upfront.

With this in mind, here are four key underlying principles for code quality that we have found useful:

Quora's Principles For Code Quality

Reading and understanding code should be easy - A lot more time is spent reading code than writing it and so reading time should be minimized even if it makes writing code a bit slower.

Different parts of code should be subject to different quality standards - Different lines of code have different lifetimes, scopes, risks of breaking, costs if something does break, costs of fixing those breakages, etc. Overall, they have very different effects on long-term iteration speed and enforcing a uniform quality standard everywhere isn't optimal.

Code quality overhead is reducible - The overhead of maintaining high code quality can often be reduced by automation, better tools, better processes, and better developer education.

Codebase consistency is important - There is value in having consistency across the whole codebase, even if locally it means something is written sub-optimally. An inconsistent codebase is harder to read and understand (see point #1), write, and improve using automated tools.

I'm now going to talk about specific ways in which we apply these principles in our development process.

Post-Commit Code Reviews

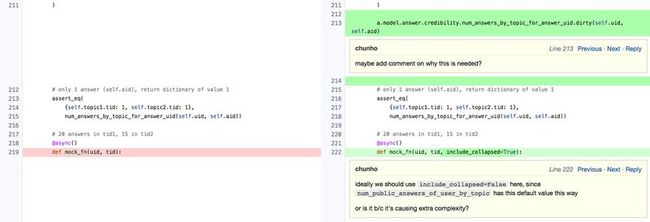

Code changes in our codebase are peer reviewed on six dimensions - correctness, privacy, performance, architecture, reusability and style. Reading code is an essential part of code reviews and so naturally, code reviews are great at improving the code readability.

But unfortunately, code reviews can also slow down development speed. For example, pre-commit code reviews are a norm in the industry, where the code must be reviewed and fixed even before pushing. Even if each round of review takes 2 days and there are 2-3 such iterations, it is easy for the author to stay blocked for good part of a week leading to wasted time.

At Quora, we generally do post-commit code reviews. That is, the code goes out live in production first and someone comes and reviews the code later. To give you an idea of the scale, yesterday 48 of us pushed code 187 times in total. Post-commit reviews are great since they unblock the author to push code and move on to other tasks. Code reviewers can also better manage their time and review code whenever it suits them the most, instead of having to do it urgently to unblock someone. Process wise, the expectation is that all code should be reviewed within a week, and in practice a lot of it is reviewed within 1-2 days. This period of one week was carefully chosen - it is long enough to give reviewers flexibility and yet short enough to minimize bad outcomes, such as others reading and propagating poor code elsewhere in the codebase. In practice, it also gels in with the fact that many developers maintain a weekly personal routine.

We can do post-commit reviews because we have a lot of trust in every single developer - after all we hire only the best and then invest a lot in their code quality education/tools. It also pushes us to test our code very well, and having good test coverage helps check for correctness even before any code review. On top of that, we use Phabricator which is a robust and highly configurable review tool. We implemented some changes to Phabricator to make it work even better with our post-commit review workflow. For example, we built a command line tool to push code to production and request review. This tool is set up such that Phabricator's diffs continue working without having to amend the commits.

All that said, we do have different bars of code reviews for different types of changes. We switch to pre-commit reviews instead of the usual post-commit review flow if the potential errors/mistakes are costly and can cause significant problems. Some examples of such cases are:

Touching code which deals with privacy and anonymity of users.

Touching a core abstraction since a lot of other code might depend on it.

Touching some parts of the infrastructure where mistakes can cause a downtime.

It's also up to the discretion of the developer — if at any time they'd like a pre-commit review of some code to get more opinions on it, they're welcome to do that instead of using the default post-commit review though it happens pretty rarely.

Routing Code Reviews

For code reviews to work well, each change should be reviewed by the people who have enough context on that change. It's even better if these code reviewers are responsible for “maintaining” the code they are reviewing so that they are incentivized to make the right long-term trade-offs.

We have implemented a simple system where module/directory level code “ownership” can be specified just by providing a meta tag at the top of the file itself. For example:

1 |

__reviewer__ = 'vanessa, kornel' |

If a commit makes changes to a file, its reviewers are parsed from the file (or its ancestor tree) and are automatically added on the commit as the reviewer. We also have extra rules to route the code reviews to the right people - e.g a data scientist is automatically added as a reviewer, if not already present, on any commit setting up an A/B test. We've in fact set up our tools to allow us to easily add more of such custom “routing” rules. As an example, if we wanted, it will be easy to add a rule to route all the commits of a new hire to their mentor.

Having a smart system for routing commits to the right people for code reviews reduces the author's burden of finding the right reviewers and ensures that the most suitable reviewers are reviewing each commit.

Testing

Testing is a critical part of our development workflow and we write lots of unit, functional, and UI tests with high coverage. A comprehensive test coverage enables developers to move fast in parallel without the worry of breaking existing functionality. We have invested a lot of time in making our testing framework (built on top of nosetests) easy/quick to use to reduce the overhead of writing tests.

We have also developed several tools to automate our testing. As described in a previous post about our continuous deployment system, a central server runs all tests before deploying any new code. The testing server is heavily parallelized so that running even our full test suite takes under 5 minutes. Such a fast turn-around time incentivizes people to write and execute tests often. We have a tool called 'test-local' that identifies and executes only the relevant test files for testing changes in the working copy of code. For this to work well, among other things, our tests need to be modular (which further only helps with quick debugging in case a test breaks). To ensure this and several other desirable properties of good test code, we maintain a shared document describing the best practices for writing tests. These guidelines are rigorously enforced through code reviews.

Similar to code reviews, we have different testing standards for different types of changes and require higher bar for test coverage whenever the cost of breaking things is high.

All these systems together enable us to get the most out of writing tests in terms of saving long term development cycles for little overhead.

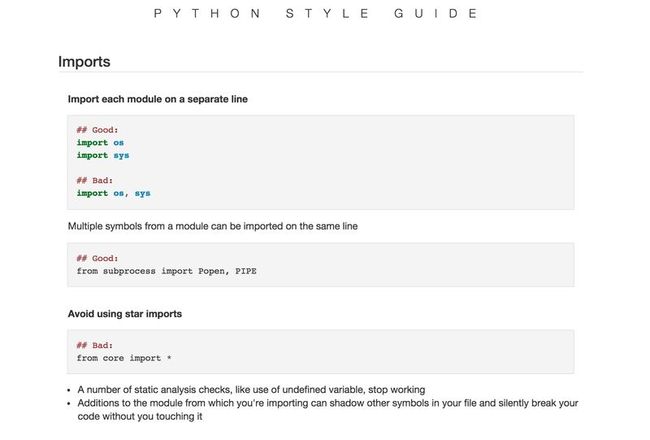

Code Quality Guidelines

We are big fans of shared guidelines for code quality standards since they do several things for us:

Serve as a good tool for new developer education.

Help us share localized wisdom and best practices with the whole team.

Set common standards that help keep the codebase consistent.

Reduce overhead during development and code reviews. For example, there is no point in discussing if the lines should be 80 chars or 100 chars on every single code review if we can just have that discussion once and crystallize the conclusion for future.

In addition to syntactical style guides for various languages, we also have guidelines for more abstract things like writing good tests or structuring modules to reduce their reading time. These guidelines are not static and keep evolving as we develop a better understanding of different tradeoffs. We also keep large scale refactor tools (some open source tools like codemod and some developed in-house) handy in case we have to go back and 'fix' all the historic code after we change a guideline.

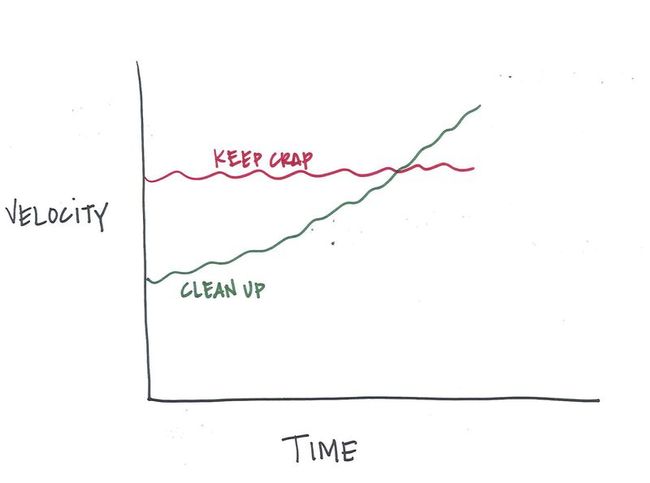

Old Code Cleanup

A fast moving team tries out a lot of different things, and it's natural and expected that some of them work and some of them don't. As a result, the codebase of any fast moving company develops lot of cruft over time - parts that aren't used anymore but are left there and make things more complex. Cleaning up this cruft keeps the codebase healthy and increases the development speed.

We periodically organize “cleanup weeks” to cleanup our cruft. During these weeks, some particular team or sometimes even the whole company spends their time just cleaning up the old code. This periodic cleanup reduces the cost of context switching between “regular work” and “cleanup work” and also makes cleanup more social and fun.

Some parts of the codebase are often easier to clean than some others. Similarly cleaning some parts of the codebase has disproportionally high impact on the development speed. To make the best use of time spent in cleanup, we prioritize modules on the basis of the cost to clean them up and the impact of their cleanup on future development speed.

Linting

It is easy to underestimate the cost of not following syntactic code quality guidelines (e.g the format of docstring or line length) in one-off instances, but they do add up over time. It also is often annoying to remember and apply many different rules, especially as the number of rules grows. As a result, it is not surprising that many people in the industry decide not to uphold them.

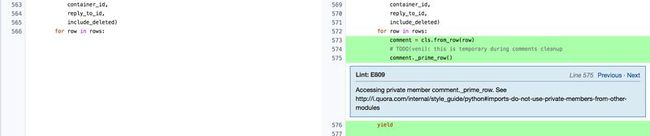

We have developed an in-house linter called qlint to reduce some of this annoyance. Qlint is a smart linter that understands both the text structure, as well as the AST and is based on flake8 and pylint. It was designed to make adding custom lint rules in the future easy. For example, we follow a convention that any 'private' variables in Python should have a leading underscore and so we added a rule to qlint which could detect any 'illegal' use of such private variables.

We have integrated qlint with many systems to provide a seamless development environment so that people don't need to watch out for lint errors themselves. For starters, qlint is fully integrated with our favorite text editors—Vim, Emacs and Sublime—and provides in-editor visual feedback (say a red mark) whenever some rule is violated. It is also integrated with our push process and is run (in interactive mode) whenever someone is trying to push code. In fact, depending on the rule that's being violated by the commit, it might even block its deployment. We have also integrated our style guides with qlint such that on every lint error, qlint spits out a hyperlink to the corresponding rule from the relevant style guide. Our Phabricator is also configured to use qlint. This way all errors found by qlint are visually flagged making their code review rather easy.

All these changes have increased the consistency and quality of our code at the cost of little overhead.

Conclusion

As described in the post, we take code quality seriously at Quora. We are very pragmatic about it and build good systems, tools, and processes that let us enhance and maintain our long-term development productivity. While we try to strike a good balance today, our team is also constantly growing and evolving, so we are confident that there are many more tools and systems to be set up in the future.