What actually happens behind CREATE DATABASE financials;?

After you start the CLI and type CREATE DATABASE financials; at the prompt, what actually happens?

----------------------------------------------------------------------------------

In essence, the CLI reads one line of input and executes it.

=== Stepping into processLine, I was surprised to find a signal handler in it:

```java
// Excerpt from CliDriver.processLine(); Signal and SignalHandler are sun.misc.Signal / sun.misc.SignalHandler
SignalHandler oldSignal = null;
Signal interupSignal = null;

if (allowInterupting) {
  // Remember all threads that were running at the time we started line processing.
  // Hook up the custom Ctrl+C handler while processing this line
  interupSignal = new Signal("INT");
  oldSignal = Signal.handle(interupSignal, new SignalHandler() {
    private final Thread cliThread = Thread.currentThread();
    private boolean interruptRequested;

    @Override
    public void handle(Signal signal) {
      boolean initialRequest = !interruptRequested;
      interruptRequested = true;

      // Kill the VM on second ctrl+c
      if (!initialRequest) {
        console.printInfo("Exiting the JVM");
        System.exit(127);
      }

      // Interrupt the CLI thread to stop the current statement and return to prompt
      console.printInfo("Interrupting... Be patient, this might take some time.");
      console.printInfo("Press Ctrl+C again to kill JVM");

      // First, kill any running MR jobs
      HadoopJobExecHelper.killRunningJobs();
      HiveInterruptUtils.interrupt();
      this.cliThread.interrupt();
    }
  });
}
```

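I had only ever handled signals in C before, and this is the first time I've seen it done in Java! It goes through sun.misc.Signal, an internal, unsupported JDK API. The previous handler is saved in oldSignal, presumably so it can be restored once the line has been processed.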
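The handler above boils down to a two-stage pattern: the first Ctrl+C interrupts the working thread, and any further Ctrl+C exits the JVM. Below is a minimal sketch of that pattern with sun.misc.Signal. It is not Hive's code. The class name TwoStageInterrupt is hypothetical, and the exit action is injected as a Runnable so the logic can be exercised without calling System.exit(127); that injection is an assumption of this sketch.

```java
import sun.misc.Signal;
import sun.misc.SignalHandler;

import java.util.concurrent.atomic.AtomicInteger;

// Hypothetical class: first SIGINT interrupts a target thread, later ones run an exit action.
public class TwoStageInterrupt implements SignalHandler {
  private final Thread target;        // thread to interrupt on the first signal
  private final Runnable exitAction;  // in real use something like () -> System.exit(127)
  private final AtomicInteger seen = new AtomicInteger();

  public TwoStageInterrupt(Thread target, Runnable exitAction) {
    this.target = target;
    this.exitAction = exitAction;
  }

  @Override
  public void handle(Signal signal) {
    if (seen.incrementAndGet() > 1) {
      exitAction.run();  // second (or later) Ctrl+C: give up and exit
      return;
    }
    target.interrupt();  // first Ctrl+C: ask the working thread to stop
  }

  public int signalsSeen() {
    return seen.get();
  }

  public static void main(String[] args) throws InterruptedException {
    Thread worker = new Thread(() -> {
      try {
        Thread.sleep(60_000);  // stands in for a long-running statement
      } catch (InterruptedException e) {
        System.out.println("worker interrupted, back to the prompt");
      }
    });
    worker.start();

    Signal sigint = new Signal("INT");
    TwoStageInterrupt handler =
        new TwoStageInterrupt(worker, () -> System.out.println("would exit with 127"));
    SignalHandler old = Signal.handle(sigint, handler);  // install ours, remember the previous one
    try {
      handler.handle(sigint);  // simulate one Ctrl+C instead of waiting for a real keypress
      worker.join();
    } finally {
      Signal.handle(sigint, old);  // restore the previous handler when done
    }
  }
}
```

When a real Ctrl+C arrives, the JVM runs the handler on its own signal-dispatch thread rather than on the CLI thread. That is why the handler (like Hive's) only interrupts the CLI thread instead of doing the work itself. It is also why this sketch counts signals with an AtomicInteger rather than a plain boolean.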

========================================== Back to the main thread; let's keep tracing

```java
for (String oneCmd : line.split(";")) {
  if (StringUtils.endsWith(oneCmd, "\\")) {
    // "\;" escapes the semicolon: drop the backslash, put the ';' back, keep accumulating
    command += StringUtils.chop(oneCmd) + ";";
    continue;
  } else {
    command += oneCmd;
  }
  if (StringUtils.isBlank(command)) {
    continue;
  }

  ret = processCmd(command);
  // wipe cli query state
  SessionState ss = SessionState.get();
  ss.setCommandType(null);
  command = "";
  lastRet = ret;
  boolean ignoreErrors = HiveConf.getBoolVar(conf, HiveConf.ConfVars.CLIIGNOREERRORS);
  if (ret != 0 && !ignoreErrors) {
    // stop at the first failing statement unless errors are being ignored
    CommandProcessorFactory.clean((HiveConf) conf);
    return ret;
  }
}
CommandProcessorFactory.clean((HiveConf) conf);
return lastRet;
```
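The loop above implements a small escaping rule. The line is split on ';', and a piece that ends in a backslash means its semicolon was escaped: the backslash is dropped, the ';' is restored, and the next piece is appended. Here is a minimal standalone sketch of just that rule. It uses plain String methods in place of commons-lang StringUtils and collects statements into a list where the CLI would call processCmd. StatementSplitter is a hypothetical name.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical helper mirroring the splitting logic of the processLine() loop.
public class StatementSplitter {
  public static List<String> split(String line) {
    List<String> commands = new ArrayList<>();
    String command = "";
    for (String oneCmd : line.split(";")) {
      if (oneCmd.endsWith("\\")) {
        // escaped semicolon: drop the backslash, keep the ';', keep accumulating
        command += oneCmd.substring(0, oneCmd.length() - 1) + ";";
        continue;
      }
      command += oneCmd;
      if (command.trim().isEmpty()) {
        continue;  // nothing to run; as in the CLI loop, command is not reset here
      }
      commands.add(command);  // the real CLI calls processCmd(command) at this point
      command = "";
    }
    return commands;  // an escaped piece left over at the very end is silently dropped
  }

  public static void main(String[] args) {
    System.out.println(split("CREATE DATABASE financials;"));
    System.out.println(split("select 'a\\;b'; show tables;"));
  }
}
```

Our statement yields the single command CREATE DATABASE financials. The second input yields select 'a;b' and " show tables" with its leading space. That space does no harm because processCmd trims each command (cmd_trimmed).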

Each command is ultimately run through ret = processCmd(command);. Stepping into that function, the remote-mode branch looks like this:

```java
else if (ss.isRemoteMode()) { // remote mode -- connecting to remote hive server
  HiveClient client = ss.getClient();
  PrintStream out = ss.out;
  PrintStream err = ss.err;

  try {
    client.execute(cmd_trimmed);
    List<String> results;
    do {
      results = client.fetchN(LINES_TO_FETCH);
      for (String line : results) {
        out.println(line);
      }
    } while (results.size() == LINES_TO_FETCH);
  } catch (HiveServerException e) {
    // ... (rest of the method not shown)
```
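The do/while above pages through the results. It keeps calling fetchN(LINES_TO_FETCH) until a batch comes back shorter than requested. Here is a minimal sketch of that loop against a hypothetical in-memory ResultSource. ResultSource is a stand-in for HiveClient, not its real interface, and the class names are illustrative.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

public class BatchedFetch {
  /** Hypothetical stand-in for the part of HiveClient used here. */
  public interface ResultSource {
    List<String> fetchN(int n);
  }

  /** In-memory source that hands out at most n rows per call and counts the calls. */
  public static class FakeClient implements ResultSource {
    private final List<String> rows;
    private int pos = 0;
    public int calls = 0;

    public FakeClient(List<String> rows) {
      this.rows = rows;
    }

    @Override
    public List<String> fetchN(int n) {
      calls++;
      int end = Math.min(pos + n, rows.size());
      List<String> batch = new ArrayList<>(rows.subList(pos, end));
      pos = end;
      return batch;
    }
  }

  /** Same loop shape as the CLI: keep fetching while a batch comes back full. */
  public static List<String> fetchAll(ResultSource client, int linesToFetch) {
    List<String> out = new ArrayList<>();
    List<String> results;
    do {
      results = client.fetchN(linesToFetch);
      out.addAll(results);  // the CLI prints each line to ss.out instead
    } while (results.size() == linesToFetch);  // a short or empty batch ends the loop
    return out;
  }

  public static void main(String[] args) {
    FakeClient client = new FakeClient(Arrays.asList("r1", "r2", "r3", "r4"));
    List<String> all = fetchAll(client, 2);
    System.out.println(all + " in " + client.calls + " calls");
  }
}
```

With 4 rows and a batch size of 2, main reports 3 calls. The loop stops only on a short batch, so a row count that is an exact multiple of the batch size costs one extra, empty fetch.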

--- The key call is client.execute(cmd_trimmed);, so we need to step into it to see what it does.

```java
public void execute(String query) throws HiveServerException, org.apache.thrift.TException {
  send_execute(query);
  recv_execute();
}
```

This takes us into the Thrift-generated client API, so from here we jump straight to the server side to see what the server does.
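The send_execute/recv_execute pair is the usual shape of a synchronous Thrift-generated client method. It writes the request, then blocks until the reply arrives. As far as I know, Thrift's Java client also checks that the reply's sequence id matches the request. The toy model below is not Thrift's API. The queues stand in for the transport, and the Message format, the "ERR:" error convention and the echo server are all hypothetical. For simplicity, its execute() returns the reply payload, whereas Hive's execute() returns void and results are pulled afterwards with fetchN.

```java
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.BlockingQueue;

// Toy model of a synchronous, Thrift-style client call; not Thrift's real classes.
public class ToyRpcClient {
  /** Hypothetical wire message: a sequence id plus a payload. */
  public static final class Message {
    final int seqId;
    final String payload;

    Message(int seqId, String payload) {
      this.seqId = seqId;
      this.payload = payload;
    }
  }

  private final BlockingQueue<Message> toServer;
  private final BlockingQueue<Message> fromServer;
  private int seqId = 0;

  public ToyRpcClient(BlockingQueue<Message> toServer, BlockingQueue<Message> fromServer) {
    this.toServer = toServer;
    this.fromServer = fromServer;
  }

  /** Like the generated execute(): send the request, then block for the reply. */
  public String execute(String query) {
    try {
      sendExecute(query);
      return recvExecute();
    } catch (InterruptedException e) {
      Thread.currentThread().interrupt();
      throw new IllegalStateException("interrupted while waiting for the reply", e);
    }
  }

  private void sendExecute(String query) throws InterruptedException {
    toServer.put(new Message(++seqId, query));
  }

  private String recvExecute() throws InterruptedException {
    Message reply = fromServer.take();  // blocks until the server answers
    if (reply.seqId != seqId) {
      throw new IllegalStateException("out-of-sequence reply");
    }
    if (reply.payload.startsWith("ERR:")) {
      throw new IllegalStateException(reply.payload.substring(4));  // server-side error surfaces here
    }
    return reply.payload;
  }

  /** Starts a daemon "server" thread that answers each request, and returns a client wired to it. */
  public static ToyRpcClient startWithEchoServer() {
    BlockingQueue<Message> requests = new ArrayBlockingQueue<>(1);
    BlockingQueue<Message> replies = new ArrayBlockingQueue<>(1);
    Thread server = new Thread(() -> {
      try {
        while (true) {
          Message m = requests.take();
          String q = m.payload.trim();
          replies.put(new Message(m.seqId, q.isEmpty() ? "ERR:empty query" : "OK " + q));
        }
      } catch (InterruptedException e) {
        // exit the server loop
      }
    });
    server.setDaemon(true);
    server.start();
    return new ToyRpcClient(requests, replies);
  }

  public static void main(String[] args) {
    ToyRpcClient client = startWithEchoServer();
    System.out.println(client.execute("CREATE DATABASE financials"));
  }
}
```

The error path mirrors, in spirit, how a server-side HiveServerException is carried back in the reply and rethrown on the client inside recv_execute().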

===============================================================================

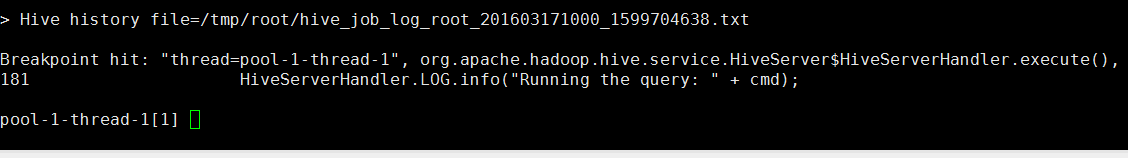

The code lives in HiveServerHandler, an inner class of HiveServer:

```java
public void execute(String cmd) throws HiveServerException, TException {
  HiveServerHandler.LOG.info("Running the query: " + cmd);
  SessionState session = SessionState.get();

  String cmd_trimmed = cmd.trim();
  String[] tokens = cmd_trimmed.split("\\s");
  String cmd_1 = cmd_trimmed.substring(tokens[0].length()).trim();

  int ret = 0;
  String errorMessage = "";
  String SQLState = null;

  try {
    CommandProcessor proc = CommandProcessorFactory.get(tokens[0]);
    if (proc != null) {
      if (proc instanceof Driver) {
        isHiveQuery = true;
        driver = (Driver) proc;
        // In Hive server mode, we are not able to retry in the FetchTask
        // case, when calling fetch queries since execute() has returned.
        // For now, we disable the test attempts.
        driver.setTryCount(Integer.MAX_VALUE);
        response = driver.run(cmd);
      } else {
        isHiveQuery = false;
        driver = null;
        // need to reset output for each non-Hive query
        setupSessionIO(session);
        response = proc.run(cmd_1);
      }

      ret = response.getResponseCode();
      SQLState = response.getSQLState();
      errorMessage = response.getErrorMessage();
    }
  } catch (Exception e) {
    HiveServerException ex = new HiveServerException();
    ex.setMessage("Error running query: " + e.toString());
    ex.setErrorCode(ret == 0 ? -10000 : ret);
    throw ex;
  }

  if (ret != 0) {
    throw new HiveServerException("Query returned non-zero code: " + ret + ", cause: " + errorMessage, ret,
        SQLState);
  }
}
```
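The first few lines of execute() decide what each processor receives. tokens[0] chooses the processor; a Driver gets the full command, and any other processor gets cmd_1, the command with its first token removed. Here is that string handling in isolation, as a small sketch. ServerCommandParts and its method names are hypothetical.

```java
// Hypothetical helper reproducing the tokens[0] / cmd_1 computation from execute().
public class ServerCommandParts {
  /** tokens[0]: the keyword used to choose the CommandProcessor. */
  public static String keyword(String cmd) {
    String cmdTrimmed = cmd.trim();
    return cmdTrimmed.split("\\s")[0];
  }

  /** cmd_1: the command minus its first token, passed to non-Driver processors. */
  public static String remainder(String cmd) {
    String cmdTrimmed = cmd.trim();
    String first = cmdTrimmed.split("\\s")[0];
    return cmdTrimmed.substring(first.length()).trim();
  }

  public static void main(String[] args) {
    String cmd = "set hive.cli.print.header=true";
    System.out.println(keyword(cmd) + " | " + remainder(cmd));
  }
}
```

For CREATE DATABASE financials the keyword is CREATE, and Driver.run() sees the whole statement. For set hive.cli.print.header=true, the set processor would receive only hive.cli.print.header=true.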

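OK, the debugging environment is working as well.

The rest is straightforward. Tracing the code:

Note this line: CommandProcessor proc = CommandProcessorFactory.get(tokens[0]);

It appears to look up the matching processor, so let's step into it!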

```java
public static CommandProcessor get(String cmd, HiveConf conf) {
  String cmdl = cmd.toLowerCase();
  if ("set".equals(cmdl)) {
    return new SetProcessor();
  } else if ("dfs".equals(cmdl)) {
    SessionState ss = SessionState.get();
    return new DfsProcessor(ss.getConf());
  } else if ("add".equals(cmdl)) {
    return new AddResourceProcessor();
  } else if ("delete".equals(cmdl)) {
    return new DeleteResourceProcessor();
  } else if (!isBlank(cmd)) {
    if (conf == null) {
      return new Driver();
    }
    Driver drv = mapDrivers.get(conf);
    if (drv == null) {
      drv = new Driver();
      mapDrivers.put(conf, drv);
    }
    drv.init();
    return drv;
  }
  return null;
}
```

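For our statement, tokens[0] is CREATE, which matches none of the set/dfs/add/delete branches, so a Driver is returned. The server calls the single-argument get(tokens[0]), which presumably passes a null conf to this overload, so the branch taken is return new Driver();, i.e. an instance of org.apache.hadoop.hive.ql.Driver.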
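The factory's logic fits in a few lines. A handful of keywords map to dedicated processors, any other non-blank keyword maps to a Driver, and when a conf is passed the Driver is cached per conf object. Below is a sketch with hypothetical stand-in types (Processor, Driver, and a plain Object as the conf key), not Hive's classes. The real code also calls drv.init() on the cached Driver before returning it.

```java
import java.util.HashMap;
import java.util.Map;

public class ProcessorFactorySketch {
  /** Hypothetical stand-in for CommandProcessor. */
  public interface Processor {
    String name();
  }

  /** Hypothetical stand-in for org.apache.hadoop.hive.ql.Driver. */
  public static final class Driver implements Processor {
    @Override
    public String name() {
      return "driver";
    }
  }

  // One Driver per conf object, mirroring mapDrivers (not thread-safe; fine for a sketch).
  private static final Map<Object, Driver> mapDrivers = new HashMap<>();

  public static Processor get(String cmd, Object conf) {
    String cmdl = cmd.toLowerCase();
    switch (cmdl) {
      case "set":
      case "dfs":
      case "add":
      case "delete":
        return () -> cmdl;  // stands in for SetProcessor, DfsProcessor, AddResourceProcessor, ...
      default:
        break;
    }
    if (cmd.trim().isEmpty()) {
      return null;          // blank keyword: no processor at all
    }
    if (conf == null) {
      return new Driver();  // no conf: a fresh Driver every time
    }
    return mapDrivers.computeIfAbsent(conf, c -> new Driver());
  }

  public static void main(String[] args) {
    Object conf = new Object();
    System.out.println(get("CREATE", conf).name());
    System.out.println(get("create", conf) == get("CREATE", conf));
    System.out.println(get("SET", null).name());
  }
}
```

The keyword match is case-insensitive, so CREATE and create reach the same cached Driver for a given conf.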

==============================================================================

Next, this branch runs:

```java
if (proc instanceof Driver) {
  isHiveQuery = true;
  driver = (Driver) proc;
  // In Hive server mode, we are not able to retry in the FetchTask
  // case, when calling fetch queries since execute() has returned.
  // For now, we disable the test attempts.
  driver.setTryCount(Integer.MAX_VALUE);
  response = driver.run(cmd);
}
```

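This is the heart of it, so let's keep tracing.

We have finally reached the step that does the real work.

First, the command has to be compiled.

Compilation is covered in the next section.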