(3-1) hadoop-2.6.0 pseudo-distributed setup notes

//Check the hostname

[root@i-love-you ~]# hostname
i-love-you

//Change the hostname

[root@i-love-you ~]# vim /etc/sysconfig/network
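On a CentOS/RHEL 6 style system, which is assumed here because of the `chkconfig` and `/etc/sysconfig/network` usage, that file holds a `HOSTNAME=` line read at boot, and `hostname i-love-you` changes the name of the running system. Below is a minimal sketch of doing the edit non-interactively instead of in vim. The helper name `set_hostname_line` is hypothetical, and the demo works on a scratch copy, not on `/etc`.

```shell
#!/bin/sh
# Hypothetical helper: set (or add) HOSTNAME=<name> in a CentOS 6 style
# /etc/sysconfig/network file. Try it on a copy before touching /etc.
set_hostname_line() {
    file=$1
    name=$2
    if grep -q '^HOSTNAME=' "$file"; then
        # Replace the existing HOSTNAME= line, keeping a .bak backup.
        sed -i.bak "s/^HOSTNAME=.*/HOSTNAME=$name/" "$file"
    else
        # No HOSTNAME= line yet: append one.
        printf 'HOSTNAME=%s\n' "$name" >> "$file"
    fi
}

# Demo on a temporary file rather than the real /etc/sysconfig/network.
demo=$(mktemp)
printf 'NETWORKING=yes\nHOSTNAME=localhost.localdomain\n' > "$demo"
set_hostname_line "$demo" i-love-you
cat "$demo"
rm -f "$demo" "$demo.bak"
# On the real system (as root), also apply the name immediately:
#   hostname i-love-you
```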

//Bind the IP address to the hostname

[root@i-love-you ~]# vim /etc/hosts
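The entry this note relies on maps 192.168.1.10 to i-love-you (the same pair appears later for the Windows hosts file). Below is a small sketch of adding it idempotently, so re-running a setup script does not duplicate the line. `add_host_entry` is a hypothetical helper, and the demo writes to a scratch file instead of `/etc/hosts`.

```shell
#!/bin/sh
# Hypothetical helper: append "<ip> <hostname>" to a hosts-format file
# unless that exact mapping is already present (safe to re-run).
add_host_entry() {
    file=$1
    ip=$2
    host=$3
    # awk exits 0 if some line maps exactly this IP to this hostname.
    if awk -v ip="$ip" -v h="$host" '
        $1 == ip { for (i = 2; i <= NF; i++) if ($i == h) found = 1 }
        END { exit !found }' "$file"; then
        return 0
    fi
    printf '%s %s\n' "$ip" "$host" >> "$file"
}

# Demo on a temporary file; on the server the target is /etc/hosts.
demo=$(mktemp)
printf '127.0.0.1 localhost\n' > "$demo"
add_host_entry "$demo" 192.168.1.10 i-love-you
add_host_entry "$demo" 192.168.1.10 i-love-you   # second call is a no-op
cat "$demo"
rm -f "$demo"
```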

//Check the firewall status

[root@i-love-you ~]# chkconfig --list iptables

iptables 0:off 1:off 2:on 3:on 4:on 5:on 6:off

//Check that the host answers ping by IP address and by hostname

[root@i-love-you ~]# ping 192.168.1.10

[root@i-love-you ~]# ping i-love-you

//Permanently disable the firewall (it is no longer started at boot)

[root@i-love-you ~]# chkconfig iptables off

[root@i-love-you ~]# chkconfig --list iptables

iptables 0:off 1:off 2:off 3:off 4:off 5:off 6:off

[root@i-love-you ~]#
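`chkconfig iptables off` only controls what starts at boot. On a SysV-init system such as CentOS 6 (assumed here), the firewall that is already running keeps its rules until `service iptables stop` or a reboot. The sketch below adds a check that a `chkconfig --list` line really shows every runlevel off. The function name `all_runlevels_off` is hypothetical. It accepts both the English `off` and the Chinese-locale `关闭` that some systems print.

```shell
#!/bin/sh
# Hypothetical check: succeed only if every "N:state" field in a
# `chkconfig --list <service>` line is off. Chinese-locale systems print
# 关闭 (off) / 启用 (on) instead of off / on.
all_runlevels_off() {
    line=$1
    for field in $line; do
        case $field in
            [0-6]:off | [0-6]:关闭) ;;   # this runlevel is disabled
            [0-6]:*) return 1 ;;         # any other state: still enabled
            *) ;;                        # the service name column
        esac
    done
    return 0
}

# Typical use on the server (as root):
#   service iptables stop          # stop the running firewall now
#   chkconfig iptables off         # keep it from starting at boot
#   all_runlevels_off "$(chkconfig --list iptables)" && echo "iptables disabled"
```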

//Configure passwordless SSH login

[root@i-love-you ~]# ssh-keygen -t rsa
[root@i-love-you ~]# cat /root/.ssh/id_rsa.pub >> /root/.ssh/authorized_keys
[root@i-love-you ~]# ssh 192.168.1.10
Last login: Thu Mar 26 20:25:49 2015 from i-love-you
[root@i-love-you ~]#
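`ssh-keygen -t rsa` asks for a key path and a passphrase. Pressing Enter at each prompt accepts `/root/.ssh/id_rsa` and an empty passphrase, which passwordless login needs. With sshd's default `StrictModes yes`, keys are ignored if `~/.ssh` or `authorized_keys` is writable by group or others, so tightening permissions is a common extra step. The sketch below uses a hypothetical `authorize_key` helper that appends the key only once and sets 700/600.

```shell
#!/bin/sh
# Hypothetical helper: add one public-key line to <ssh_dir>/authorized_keys
# (only if missing) and tighten permissions so sshd's StrictModes accepts it.
authorize_key() {
    ssh_dir=$1
    pubkey_file=$2
    mkdir -p "$ssh_dir"
    chmod 700 "$ssh_dir"
    touch "$ssh_dir/authorized_keys"
    # -x: whole-line match, -F: treat the key as a fixed string.
    if ! grep -qxF -f "$pubkey_file" "$ssh_dir/authorized_keys"; then
        cat "$pubkey_file" >> "$ssh_dir/authorized_keys"
    fi
    chmod 600 "$ssh_dir/authorized_keys"
}

# On the server this would be:
#   ssh-keygen -t rsa                          # press Enter at every prompt
#   authorize_key /root/.ssh /root/.ssh/id_rsa.pub
#   ssh 192.168.1.10                           # should not ask for a password
```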

//Install the JDK and set its environment variables

export JAVA_HOME=/usr/local/jdk
export JRE_HOME=/usr/local/jdk/jre
export CLASSPATH=.:$JAVA_HOME/lib:$JAVA_HOME/jre/lib
export PATH=$JAVA_HOME/bin:$JAVA_HOME/jre/bin:$PATH
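The note does not say which file these exports go in. A common choice, assumed here, is to append them to `/etc/profile`, run `source /etc/profile`, and confirm with `java -version`. The Hadoop launcher scripts run `$JAVA_HOME/bin/java`, so the hypothetical `check_java_home` below only verifies that this file exists and is executable.

```shell
#!/bin/sh
# Hypothetical sanity check for the JAVA_HOME used above: the directory must
# contain an executable bin/java, which is what the Hadoop scripts launch.
check_java_home() {
    jh=$1
    if [ -z "$jh" ]; then
        echo "JAVA_HOME is not set" >&2
        return 1
    fi
    if [ ! -x "$jh/bin/java" ]; then
        echo "no executable $jh/bin/java" >&2
        return 1
    fi
    return 0
}

# After adding the exports (e.g. to /etc/profile) and running `source /etc/profile`:
#   check_java_home "$JAVA_HOME" && java -version
```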

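//Configure hadoop-env.sh (this file and the *-site.xml files below are in etc/hadoop under the Hadoop install directory)

[root@i-love-you hadoop]# vim hadoop-env.sh

# The java implementation to use.
export JAVA_HOME=/usr/local/jdk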
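A common reason for hard-coding JAVA_HOME in hadoop-env.sh is that the daemon start scripts may run in shells that do not load the login profile, for example over ssh, so an inherited JAVA_HOME is not guaranteed. In the stock 2.6 file the active line is typically `export JAVA_HOME=${JAVA_HOME}`. The sketch below rewrites that line with sed instead of vim. `pin_java_home` is a hypothetical helper, and the demo edits a scratch copy.

```shell
#!/bin/sh
# Hypothetical helper: rewrite the active `export JAVA_HOME=...` line of a
# hadoop-env.sh copy to a fixed path (a .bak backup is kept).
pin_java_home() {
    env_file=$1
    jdk=$2
    # Only lines that start with "export JAVA_HOME=" (not commented) change.
    sed -i.bak "s|^export JAVA_HOME=.*|export JAVA_HOME=$jdk|" "$env_file"
}

demo=$(mktemp)
printf '# The java implementation to use.\nexport JAVA_HOME=${JAVA_HOME}\n' > "$demo"
pin_java_home "$demo" /usr/local/jdk
cat "$demo"
rm -f "$demo" "$demo.bak"
```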

//Configure core-site.xml

[root@i-love-you hadoop]# vim core-site.xml
<configuration>
    <property>
        <name>fs.defaultFS</name>
        <value>hdfs://192.168.1.10:9000</value>
    </property>
    <property>
        <name>hadoop.tmp.dir</name>
        <value>/usr/local/mydata</value>
    </property>
    <property>
        <name>fs.trash.interval</name>
        <value>1440</value>
    </property>
</configuration>
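`fs.trash.interval` is given in minutes, so 1440 keeps deleted files in the trash for 1440 / 60 = 24 hours. Once Hadoop is installed, `bin/hdfs getconf -confKey fs.defaultFS` prints the effective value of a key. The hypothetical `conf_value` helper below checks a file before anything is started. It is a plain text match rather than an XML parser, and it assumes `<name>` comes right before `<value>` in each property.

```shell
#!/bin/sh
# Hypothetical helper: print the <value> that follows <name>KEY</name> in a
# simple Hadoop *-site.xml. It is a text match, not a real XML parser, and
# assumes each <property> lists <name> immediately before <value>.
conf_value() {
    file=$1
    # Escape the dots in the key so they match literally in the regex.
    key=$(printf '%s' "$2" | sed 's/\./\\./g')
    # Join all lines, then capture the text inside the matching <value>.
    tr '\n' ' ' < "$file" |
        sed -n "s#.*<name>$key</name>[[:space:]]*<value>\([^<]*\)</value>.*#\1#p"
}

demo=$(mktemp)
cat > "$demo" <<'EOF'
<configuration>
    <property>
        <name>fs.defaultFS</name>
        <value>hdfs://192.168.1.10:9000</value>
    </property>
    <property>
        <name>fs.trash.interval</name>
        <value>1440</value>
    </property>
</configuration>
EOF
echo "fs.defaultFS = $(conf_value "$demo" fs.defaultFS)"
minutes=$(conf_value "$demo" fs.trash.interval)
echo "trash kept for $((minutes / 60)) hours"
rm -f "$demo"
```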

//Configure hdfs-site.xml

[root@i-love-you hadoop]# vim hdfs-site.xml
<configuration>
    <property>
        <name>dfs.replication</name>
        <value>1</value>
    </property>
    <property>
        <name>dfs.permissions</name>
        <value>false</value>
    </property>
</configuration>
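A replication factor of 1 fits pseudo-distributed mode, where there is only one DataNode to hold each block. `dfs.permissions` is the older property name. In Hadoop 2.x the documented key is `dfs.permissions.enabled`, and the old name is still accepted as a deprecated alias, for which Hadoop may log a deprecation message. Below is a sketch of a hypothetical `report_deprecated` helper that flags a few such old names in a config file. Its mapping is a small hand-picked subset, not the full deprecation list.

```shell
#!/bin/sh
# Hypothetical helper: flag a few old (Hadoop 1.x style) property names in a
# *-site.xml and print the Hadoop 2.x name that replaces each one.
# The mapping below is a small hand-picked subset, not the full list.
report_deprecated() {
    file=$1
    for pair in \
        dfs.permissions=dfs.permissions.enabled \
        fs.default.name=fs.defaultFS \
        dfs.name.dir=dfs.namenode.name.dir \
        dfs.data.dir=dfs.datanode.data.dir
    do
        old=${pair%%=*}
        new=${pair#*=}
        # -F: the dots in the name are literal characters.
        if grep -qF "<name>$old</name>" "$file"; then
            echo "$old -> $new"
        fi
    done
}
```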

//Configure yarn-site.xml

[root@i-love-you hadoop]# vim yarn-site.xml
<configuration>
    <property>
        <name>yarn.nodemanager.aux-services</name>
        <value>mapreduce_shuffle</value>
    </property>
</configuration>

//Configure mapred-site.xml (created from the bundled template)

[root@i-love-you hadoop]# cp mapred-site.xml.template mapred-site.xml
[root@i-love-you hadoop]# vim mapred-site.xml
<configuration>
    <property>
        <name>mapreduce.framework.name</name>
        <value>yarn</value>
    </property>
</configuration>
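These small *-site.xml files can also be generated from a script instead of edited by hand. Below is a minimal sketch with a hypothetical `write_site_xml` generator that takes `name=value` arguments. The demo reproduces the mapred-site.xml and yarn-site.xml properties used in this note, written to a scratch directory. Values are copied verbatim, so a value containing `&` or `<` would need XML escaping that this sketch does not do.

```shell
#!/bin/sh
# Hypothetical generator: print a Hadoop *-site.xml from name=value arguments.
# Values are written verbatim (no XML escaping of &, < or >).
write_site_xml() {
    printf '<?xml version="1.0"?>\n<configuration>\n'
    for kv in "$@"; do
        printf '    <property>\n'
        printf '        <name>%s</name>\n' "${kv%%=*}"    # text before the first "="
        printf '        <value>%s</value>\n' "${kv#*=}"   # text after the first "="
        printf '    </property>\n'
    done
    printf '</configuration>\n'
}

# Writing into a scratch directory here; the real files live in etc/hadoop.
out=$(mktemp -d)
write_site_xml mapreduce.framework.name=yarn > "$out/mapred-site.xml"
write_site_xml yarn.nodemanager.aux-services=mapreduce_shuffle > "$out/yarn-site.xml"
cat "$out/mapred-site.xml"
rm -rf "$out"
```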

//Format HDFS (the NameNode)

[root@i-love-you hadoop]# bin/hdfs namenode -format

15/03/26 20:50:11 INFO common.Storage: Storage directory /usr/local/mydata/dfs/name has been successfully formatted.
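Formatting is normally done once. Re-running `bin/hdfs namenode -format` gives the NameNode a new clusterID. A DataNode that still has its old data directory may then refuse to start with an "Incompatible clusterIDs" error. With `hadoop.tmp.dir=/usr/local/mydata`, that directory defaults to `/usr/local/mydata/dfs/data`. Stopping the daemons and clearing it before reformatting avoids the problem. Below is a sketch of a hypothetical `cluster_ids_match` check that compares the `clusterID=` lines in the two `current/VERSION` files, assuming the Hadoop 2.x storage layout.

```shell
#!/bin/sh
# Hypothetical check for the clusterID mismatch described above. It compares
# the clusterID= lines in the NameNode's and DataNode's current/VERSION files.
cluster_ids_match() {
    name_dir=$1     # e.g. /usr/local/mydata/dfs/name
    data_dir=$2     # e.g. /usr/local/mydata/dfs/data
    nn=$(sed -n 's/^clusterID=//p' "$name_dir/current/VERSION")
    dn=$(sed -n 's/^clusterID=//p' "$data_dir/current/VERSION")
    echo "namenode: $nn"
    echo "datanode: $dn"
    # Succeeds only when both IDs are present and identical.
    [ -n "$nn" ] && [ "$nn" = "$dn" ]
}

# On the server:
#   cluster_ids_match /usr/local/mydata/dfs/name /usr/local/mydata/dfs/data
```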

//Start the NameNode and DataNode

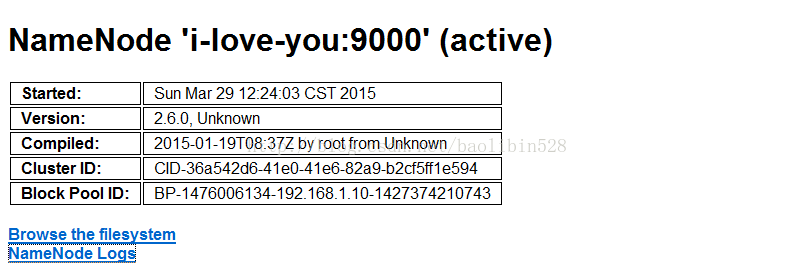

[root@i-love-you hadoop]# sbin/hadoop-daemon.sh start namenode
starting namenode, logging to /usr/local/hadoop/logs/hadoop-root-namenode-i-love-you.out
[root@i-love-you hadoop]# sbin/hadoop-daemon.sh start datanode
starting datanode, logging to /usr/local/hadoop/logs/hadoop-root-datanode-i-love-you.out
[root@i-love-you hadoop]# jps
26357 NameNode
26472 Jps
26435 DataNode
[root@i-love-you hadoop]#

//On the Windows machine used for browsing, map the hostname in C:\Windows\System32\drivers\etc\hosts

192.168.1.10 i-love-you

//Open port 50070 (NameNode web UI) in a browser

http://192.168.1.10:50070/
http://192.168.1.10:50070/dfshealth.html#tab-overview

//Start the ResourceManager and NodeManager

[root@i-love-you hadoop]# sbin/yarn-daemon.sh start resourcemanager
starting resourcemanager, logging to /usr/local/hadoop/logs/yarn-root-resourcemanager-i-love-you.out
[root@i-love-you hadoop]# sbin/yarn-daemon.sh start nodemanager
starting nodemanager, logging to /usr/local/hadoop/logs/yarn-root-nodemanager-i-love-you.out
[root@i-love-you hadoop]# jps
26357 NameNode
26628 Jps
26563 ResourceManager
26609 NodeManager
26435 DataNode
[root@i-love-you hadoop]#

//Open port 8088 (ResourceManager web UI) in a browser

http://192.168.1.10:8088/cluster

//Start the JobHistory server

[root@i-love-you hadoop]# sbin/mr-jobhistory-daemon.sh start historyserver
starting historyserver, logging to /usr/local/hadoop/logs/mapred-root-historyserver-i-love-you.out
[root@i-love-you hadoop]# jps
26357 NameNode
26563 ResourceManager
26609 NodeManager
26925 JobHistoryServer
26956 Jps
26435 DataNode
[root@i-love-you hadoop]#

http://192.168.1.10:19888/jobhistory
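With HDFS, YARN and the JobHistory server all started, jps should list the five daemons shown in the output above. Below is a small sketch of checking that automatically. `missing_daemons` is a hypothetical helper that reads jps output on stdin and prints any expected daemon that is absent. The daemon names are the ones from this note.

```shell
#!/bin/sh
# Hypothetical check: read `jps` output on stdin and print every expected
# daemon that is not running. Exit status 0 means nothing is missing.
missing_daemons() {
    running=$(awk '{print $2}')     # second column of jps = class name
    status=0
    for d in NameNode DataNode ResourceManager NodeManager JobHistoryServer; do
        if ! printf '%s\n' "$running" | grep -qx "$d"; then
            echo "$d"
            status=1
        fi
    done
    return $status
}

# On the server:
#   jps | missing_daemons && echo "all daemons are up"
```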

//Run the bundled wordcount example on /input/baozi

[root@i-love-you hadoop]# bin/hadoop jar share/hadoop/mapreduce/hadoop-mapreduce-examples-2.6.0.jar wordcount /input/baozi /output

[root@i-love-you hadoop]# bin/hdfs dfs -ls -R /output
-rw-r--r-- 1 root supergroup 0 2015-03-29 14:13 /output/_SUCCESS
-rw-r--r-- 1 root supergroup 33 2015-03-29 14:10 /output/part-r-00000
[root@i-love-you hadoop]# bin/hdfs dfs -text /output/part-r-00000
hadoop 3
hello 1
java 2
struts 1
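The note does not show how `/input/baozi` got into HDFS. One way, assuming a local file named `baozi`, is `bin/hdfs dfs -mkdir -p /input` followed by `bin/hdfs dfs -put baozi /input/`. The result can be cross-checked locally. The WordCount example tokenizes each line with Java's `StringTokenizer`, which splits on whitespace. Hadoop sorts `Text` keys in byte order, and the default text output separates key and count with a tab. The sketch below mimics those three points. The input line is hypothetical, chosen only to reproduce the counts shown above.

```shell
#!/bin/sh
# Local re-implementation of what the wordcount example computes:
# split on whitespace, count each word, sort words in byte order,
# and print "word<TAB>count" lines.
local_wordcount() {
    tr -s '[:space:]' '\n' |       # one word per line, squeezing blank runs
        grep -v '^$' |             # drop an empty first line, if any
        LC_ALL=C sort |            # byte-order sort, like Hadoop's Text keys
        uniq -c |                  # "  <count> <word>"
        awk '{ printf "%s\t%s\n", $2, $1 }'
}

# Hypothetical input line that yields the same counts as /output above.
echo "hadoop hello java hadoop struts java hadoop" | local_wordcount
```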

//Run the job again; the output directory must not already exist, so this run writes to /output2

[root@i-love-you hadoop]# bin/hadoop jar share/hadoop/mapreduce/hadoop-mapreduce-examples-2.6.0.jar wordcount /input/baozi /output2

15/03/29 18:04:53 INFO client.RMProxy: Connecting to ResourceManager at /0.0.0.0:8032
15/03/29 18:04:57 INFO input.FileInputFormat: Total input paths to process : 1
15/03/29 18:04:57 INFO mapreduce.JobSubmitter: number of splits:1
15/03/29 18:04:58 INFO mapreduce.JobSubmitter: Submitting tokens for job: job_1427623085786_0001
15/03/29 18:04:59 INFO impl.YarnClientImpl: Submitted application application_1427623085786_0001
15/03/29 18:05:00 INFO mapreduce.Job: The url to track the job: http://i-love-you:8088/proxy/application_1427623085786_0001/
15/03/29 18:05:00 INFO mapreduce.Job: Running job: job_1427623085786_0001
15/03/29 18:05:30 INFO mapreduce.Job: Job job_1427623085786_0001 running in uber mode : false
15/03/29 18:05:30 INFO mapreduce.Job: map 0% reduce 0%
15/03/29 18:06:22 INFO mapreduce.Job: map 100% reduce 0%
15/03/29 18:07:01 INFO mapreduce.Job: map 100% reduce 100%
15/03/29 18:07:05 INFO mapreduce.Job: Job job_1427623085786_0001 completed successfully
15/03/29 18:07:08 INFO mapreduce.Job: Counters: 49
    File System Counters
        FILE: Number of bytes read=55
        FILE: Number of bytes written=211213
        FILE: Number of read operations=0
        FILE: Number of large read operations=0
        FILE: Number of write operations=0
        HDFS: Number of bytes read=145
        HDFS: Number of bytes written=33
        HDFS: Number of read operations=6
        HDFS: Number of large read operations=0
        HDFS: Number of write operations=2
    Job Counters
        Launched map tasks=1
        Launched reduce tasks=1
        Data-local map tasks=1
        Total time spent by all maps in occupied slots (ms)=53968
        Total time spent by all reduces in occupied slots (ms)=24922
        Total time spent by all map tasks (ms)=53968
        Total time spent by all reduce tasks (ms)=24922
        Total vcore-seconds taken by all map tasks=53968
        Total vcore-seconds taken by all reduce tasks=24922
        Total megabyte-seconds taken by all map tasks=55263232
        Total megabyte-seconds taken by all reduce tasks=25520128
    Map-Reduce Framework
        Map input records=1
        Map output records=7
        Map output bytes=72
        Map output materialized bytes=55
        Input split bytes=101
        Combine input records=7
        Combine output records=4
        Reduce input groups=4
        Reduce shuffle bytes=55
        Reduce input records=4
        Reduce output records=4
        Spilled Records=8
        Shuffled Maps =1
        Failed Shuffles=0
        Merged Map outputs=1
        GC time elapsed (ms)=296
        CPU time spent (ms)=3010
        Physical memory (bytes) snapshot=301502464
        Virtual memory (bytes) snapshot=1687568384
        Total committed heap usage (bytes)=136450048
    Shuffle Errors
        BAD_ID=0
        CONNECTION=0
        IO_ERROR=0
        WRONG_LENGTH=0
        WRONG_MAP=0
        WRONG_REDUCE=0
    File Input Format Counters
        Bytes Read=44
    File Output Format Counters
        Bytes Written=33

[root@i-love-you hadoop]#
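The counters above are internally consistent. The 7 map output records shrink to 4 after the combiner, one per distinct word, which matches the 4 reduce output records and the 4 lines of part-r-00000. The memory counters are numerically equal to task milliseconds times 1024. This is consistent with each map and reduce container getting 1024 MB, the Hadoop 2.6 default for `mapreduce.map.memory.mb` and `mapreduce.reduce.memory.mb`. Despite the "seconds" in the label, the numbers work out as MB × milliseconds. Likewise, the vcore counters equal the milliseconds with 1 vcore per task. Below is a quick arithmetic check, assuming those per-container sizes.

```shell
#!/bin/sh
# Recompute the memory/vcore counters from the task times in the log above,
# assuming 1024 MB and 1 vcore per map and reduce container.
map_ms=53968
reduce_ms=24922
container_mb=1024
vcores=1

echo "map    MB-ms:    $((map_ms * container_mb))"      # counter: 55263232
echo "reduce MB-ms:    $((reduce_ms * container_mb))"   # counter: 25520128
echo "map    vcore-ms: $((map_ms * vcores))"            # counter: 53968
echo "reduce vcore-ms: $((reduce_ms * vcores))"         # counter: 24922
```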