Displaying HBase Data in a JSP Page


Software:

JDK 1.7, Eclipse EE, CentOS 6.5 installed in VMware, Hadoop 2.6.0, HBase 0.99.2

1. Create an ordinary Dynamic Web Project. The JARs are added to the project by hand; neither Maven nor Ant is used.

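2. Import the required HBase and Hadoop JARs into the project.

The main job of the code is to call the HBase API and read out the contents of a given table and row key.

Also add a log4j.properties file.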

3. Create a servlet class and a JSP file, and copy the JARs from HBase's lib directory into the web project's /web_ceshi2/WebContent/WEB-INF/lib directory.

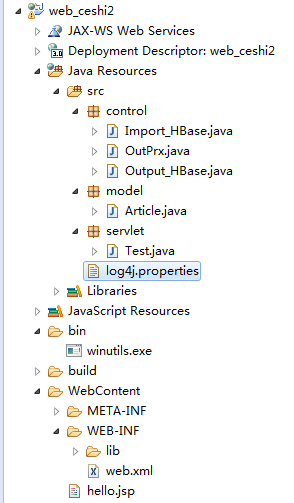
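The copy in step 3 can also be scripted instead of done by hand. Below is a minimal sketch using `java.nio.file`. It copies every `*.jar` from one directory into another, creates the target directory if needed, and overwrites files that already exist. `JarCopier` is a hypothetical helper and is not part of the project. The default paths in `main` are placeholders to replace with your own: an HBase install under /usr/local/hbase and the project under web_ceshi2.

```java
import java.io.IOException;
import java.nio.file.DirectoryStream;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.nio.file.StandardCopyOption;

// Hypothetical helper: copies all *.jar files from one directory into another
final class JarCopier {

    // Returns the number of JAR files copied
    static int copyJars(Path sourceDir, Path targetDir) throws IOException {
        Files.createDirectories(targetDir); // make sure WEB-INF/lib exists
        int copied = 0;
        try (DirectoryStream<Path> jars = Files.newDirectoryStream(sourceDir, "*.jar")) {
            for (Path jar : jars) {
                // Same file name in the target directory; replace older copies
                Files.copy(jar, targetDir.resolve(jar.getFileName()),
                        StandardCopyOption.REPLACE_EXISTING);
                copied++;
            }
        }
        return copied;
    }

    public static void main(String[] args) throws IOException {
        // Placeholder paths (assumptions): HBase's lib dir and the project's WEB-INF/lib
        Path hbaseLib = Paths.get(args.length > 0 ? args[0] : "/usr/local/hbase/lib");
        Path webInfLib = Paths.get(args.length > 1 ? args[1] : "web_ceshi2/WebContent/WEB-INF/lib");
        System.out.println("Copied " + copyJars(hbaseLib, webInfLib) + " JAR files");
    }
}
```

Non-JAR files (such as READMEs in the lib directory) are skipped by the `*.jar` glob.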

Project directory structure:

Code:

Output_HBase.java:

```java
package control;

import java.io.IOException;

import model.Article;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.hbase.Cell;
import org.apache.hadoop.hbase.CellUtil;
import org.apache.hadoop.hbase.HBaseConfiguration;
import org.apache.hadoop.hbase.client.Get;
import org.apache.hadoop.hbase.client.HTable;
import org.apache.hadoop.hbase.client.Result;
import org.apache.hadoop.hbase.client.ResultScanner;
import org.apache.hadoop.hbase.client.Scan;
import org.apache.hadoop.hbase.util.Bytes;

@SuppressWarnings("deprecation")
public class Output_HBase {

    // Column family written by Import_HBase
    private static final byte[] FAMILY = Bytes.toBytes("info");

    private final Configuration conf;

    /**
     * Constructor: loads the client configuration
     */
    public Output_HBase() {
        // HBaseConfiguration.create() also loads hbase-default.xml / hbase-site.xml
        conf = HBaseConfiguration.create();
        conf.set("hbase.zookeeper.quorum", "192.168.1.200");
        conf.set("hbase.zookeeper.property.clientPort", "2181");
        conf.set("hbase.rootdir", "hdfs://192.168.1.200:9000/hbase");
        System.out.println("Initialization finished");
    }

    public static void main(String[] args) {
        Output_HBase o = new Output_HBase();
        System.out.println(o.get("article", "1"));
    }

    /**
     * Reads one row and maps it to an Article; returns null if the row does not exist
     */
    public Article get(String tableName, String row) {
        Article article = null;
        // HTable is Closeable, so try-with-resources releases it after the read
        try (HTable table = new HTable(conf, tableName)) {
            Result result = table.get(new Get(Bytes.toBytes(row)));
            if (!result.isEmpty()) {
                article = new Article();
                // Look cells up by qualifier instead of by their position in raw()
                article.setId(value(result, "id"));
                article.setTitle(value(result, "title"));
                article.setAuthor(value(result, "author"));
                article.setDescribe(value(result, "describe"));
                article.setContent(value(result, "content"));
                System.out.println("Read article: " + article);
            } else {
                System.out.println("No row " + row + " in table " + tableName);
            }
        } catch (IOException e) {
            e.printStackTrace();
        }
        return article;
    }

    // Decodes info:<qualifier> as UTF-8; Bytes.toString returns null for a missing cell
    private static String value(Result result, String qualifier) {
        return Bytes.toString(result.getValue(FAMILY, Bytes.toBytes(qualifier)));
    }

    /**
     * Prints all data in a table
     * @param tableName
     */
    public void getALLData(String tableName) {
        try (HTable hTable = new HTable(conf, tableName);
             ResultScanner scanner = hTable.getScanner(new Scan())) {
            int rows = 0;
            for (Result result : scanner) {
                rows++;
                for (Cell cell : result.rawCells()) {
                    System.out.println(Bytes.toString(CellUtil.cloneRow(cell)) + "\t"
                            + Bytes.toString(CellUtil.cloneFamily(cell)) + ":"
                            + Bytes.toString(CellUtil.cloneQualifier(cell)) + "\t"
                            + Bytes.toString(CellUtil.cloneValue(cell)));
                }
            }
            if (rows == 0) {
                System.out.println("Table " + tableName + " is empty!");
            }
        } catch (IOException e) {
            e.printStackTrace();
        }
    }
}
```
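HBase's `Result.raw()` does not return a row's cells in the order they were written. It returns them sorted by column family and then by qualifier, in lexicographic (unsigned byte) order. For this table, index 0 is therefore `info:author` and index 4 is `info:title`. Code that picks values by position depends on every row having exactly these five columns. Looking values up by family and qualifier with `Result.getValue` does not have that dependency.

The stdlib-only sketch below reproduces that ordering so you can see which index each qualifier lands on. It is an illustration, not HBase code; `QualifierOrder` is a hypothetical name.

```java
import java.nio.charset.StandardCharsets;
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Collections;
import java.util.Comparator;
import java.util.List;

// Sketch: mimics the lexicographic (unsigned byte) ordering HBase uses for qualifiers
final class QualifierOrder {

    // Compares two byte arrays as unsigned bytes; a shorter prefix sorts first
    static int compareBytes(byte[] a, byte[] b) {
        int n = Math.min(a.length, b.length);
        for (int i = 0; i < n; i++) {
            int diff = (a[i] & 0xff) - (b[i] & 0xff);
            if (diff != 0) {
                return diff;
            }
        }
        return a.length - b.length;
    }

    // Returns the qualifiers in the order their cells appear within one family
    static List<String> sortedQualifiers(List<String> qualifiers) {
        List<String> sorted = new ArrayList<>(qualifiers);
        Collections.sort(sorted, new Comparator<String>() {
            @Override
            public int compare(String x, String y) {
                return compareBytes(x.getBytes(StandardCharsets.UTF_8),
                        y.getBytes(StandardCharsets.UTF_8));
            }
        });
        return sorted;
    }

    public static void main(String[] args) {
        // Qualifiers in the order Import_HBase writes them
        List<String> written = Arrays.asList("id", "title", "author", "describe", "content");
        List<String> order = sortedQualifiers(written);
        for (int i = 0; i < order.size(); i++) {
            System.out.println("raw[" + i + "] = info:" + order.get(i));
        }
    }
}
```

If one row had no `describe` column, `id` would move from index 3 to index 2. This is exactly the failure that by-name lookup avoids.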

OutPrx.java:

```java
package control;

import model.Article;

public class OutPrx {

    private String id;
    private String title;
    private String author;
    private String describe;
    private String content;

    public OutPrx() {
    }

    // Loads row "520" of table "article" and copies its fields into this bean
    public void get() {
        Output_HBase out1 = new Output_HBase();
        Article article = out1.get("article", "520");
        if (article == null) {
            // Row missing or HBase unreachable: keep the fields null instead of throwing an NPE
            System.out.println("Article 520 was not found");
            return;
        }
        this.id = article.getId();
        this.title = article.getTitle();
        this.author = article.getAuthor();
        this.describe = article.getDescribe();
        this.content = article.getContent();
    }

    public String getId() {
        return id;
    }

    public void setId(String id) {
        this.id = id;
    }

    public String getTitle() {
        return title;
    }

    public void setTitle(String title) {
        this.title = title;
    }

    public String getAuthor() {
        return author;
    }

    public void setAuthor(String author) {
        this.author = author;
    }

    public String getDescribe() {
        return describe;
    }

    public void setDescribe(String describe) {
        this.describe = describe;
    }

    public String getContent() {
        return content;
    }

    public void setContent(String content) {
        this.content = content;
    }
}
```

Article.java:

```java
package model;

public class Article {

    private String id;
    private String title;
    private String describe;
    private String content;
    private String author;

    public Article() {
    }

    public Article(String id, String title, String describe, String content, String author) {
        this.id = id;
        this.title = title;
        this.describe = describe;
        this.content = content;
        this.author = author;
    }

    public String getId() {
        return id;
    }

    public void setId(String id) {
        this.id = id;
    }

    public String getTitle() {
        return title;
    }

    public void setTitle(String title) {
        this.title = title;
    }

    public String getDescribe() {
        return describe;
    }

    public void setDescribe(String describe) {
        this.describe = describe;
    }

    public String getContent() {
        return content;
    }

    public void setContent(String content) {
        this.content = content;
    }

    public String getAuthor() {
        return author;
    }

    public void setAuthor(String author) {
        this.author = author;
    }

    @Override
    public String toString() {
        return this.id + "\t" + this.title + "\t" + this.author + "\t" + this.describe + "\t" + this.content;
    }
}
```

Import_HBase.java (this class only loads the data into HBase; it has nothing to do with the display and can be skipped):

```java
package control;

import java.io.IOException;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.hbase.HBaseConfiguration;
import org.apache.hadoop.hbase.client.Put;
import org.apache.hadoop.hbase.mapreduce.TableMapReduceUtil;
import org.apache.hadoop.hbase.mapreduce.TableOutputFormat;
import org.apache.hadoop.hbase.mapreduce.TableReducer;
import org.apache.hadoop.hbase.util.Bytes;
import org.apache.hadoop.io.LongWritable;
import org.apache.hadoop.io.NullWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Job;
import org.apache.hadoop.mapreduce.Mapper;
import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
import org.apache.hadoop.mapreduce.lib.input.TextInputFormat;

public class Import_HBase {

    // Passes every input line through unchanged (key = byte offset of the line)
    public static class MyMapper extends Mapper<LongWritable, Text, LongWritable, Text> {
        @Override
        protected void map(LongWritable key, Text value, Context context)
                throws IOException, InterruptedException {
            context.write(key, value);
        }
    }

    // Turns "id\ttitle\tauthor\tdescribe\tcontent" into one Put whose row key is the id
    public static class MyReduce extends TableReducer<LongWritable, Text, NullWritable> {
        private static final byte[] FAMILY = Bytes.toBytes("info");

        @SuppressWarnings("deprecation") // Put.add(family, qualifier, value)
        @Override
        protected void reduce(LongWritable key, Iterable<Text> v2s, Context context)
                throws IOException, InterruptedException {
            for (Text value : v2s) {
                String[] splited = value.toString().split("\t");
                if (splited.length < 5) {
                    continue; // skip malformed lines instead of failing the task
                }
                Put put = new Put(Bytes.toBytes(splited[0]));
                put.add(FAMILY, Bytes.toBytes("id"), Bytes.toBytes(splited[0]));
                put.add(FAMILY, Bytes.toBytes("title"), Bytes.toBytes(splited[1]));
                put.add(FAMILY, Bytes.toBytes("author"), Bytes.toBytes(splited[2]));
                put.add(FAMILY, Bytes.toBytes("describe"), Bytes.toBytes(splited[3]));
                put.add(FAMILY, Bytes.toBytes("content"), Bytes.toBytes(splited[4]));
                context.write(NullWritable.get(), put);
            }
        }
    }

    private static String tableName = "article";

    public static void main(String[] args) throws Exception {
        Configuration conf = HBaseConfiguration.create();
        conf.set("hbase.rootdir", "hdfs://192.168.1.200:9000/hbase");
        conf.set("hbase.zookeeper.quorum", "192.168.1.200");
        conf.set("hbase.zookeeper.property.clientPort", "2181");
        conf.set(TableOutputFormat.OUTPUT_TABLE, tableName);

        Job job = Job.getInstance(conf, Import_HBase.class.getSimpleName());
        TableMapReduceUtil.addDependencyJars(job);
        job.setJarByClass(Import_HBase.class);
        job.setMapperClass(MyMapper.class);
        job.setReducerClass(MyReduce.class);
        job.setMapOutputKeyClass(LongWritable.class);
        job.setMapOutputValueClass(Text.class);
        job.setInputFormatClass(TextInputFormat.class);
        job.setOutputFormatClass(TableOutputFormat.class);
        FileInputFormat.setInputPaths(job, "hdfs://192.168.1.200:9000/hbase_solr");
        // Exit code reflects whether the job succeeded
        System.exit(job.waitForCompletion(true) ? 0 : 1);
    }
}
```
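`MyReduce` expects each line of the input under hdfs://192.168.1.200:9000/hbase_solr to hold five tab-separated fields: id, title, author, describe, content. It uses the id as the row key. Checking the input file locally before submitting the job can catch malformed lines early.

The stdlib-only sketch below applies that mapping. `ArticleLineParser` is a hypothetical helper, not part of the project, and the sample line in `main` is made up. It is deliberately stricter than `split("\t")` in the reducer: it keeps trailing empty fields (limit -1) and rejects any line that does not have exactly five fields.

```java
import java.util.LinkedHashMap;
import java.util.Map;

// Hypothetical helper: maps one input line to qualifier -> value, like MyReduce
final class ArticleLineParser {

    // Column order assumed by Import_HBase.MyReduce
    static final String[] QUALIFIERS = {"id", "title", "author", "describe", "content"};

    // Returns null for lines that do not have exactly five tab-separated fields
    static Map<String, String> parse(String line) {
        String[] fields = line.split("\t", -1); // -1 keeps trailing empty fields
        if (fields.length != QUALIFIERS.length) {
            return null;
        }
        Map<String, String> columns = new LinkedHashMap<>();
        for (int i = 0; i < QUALIFIERS.length; i++) {
            columns.put(QUALIFIERS[i], fields[i]);
        }
        return columns;
    }

    // The row key is the id field, as in MyReduce
    static String rowKey(Map<String, String> columns) {
        return columns.get("id");
    }

    public static void main(String[] args) {
        // Made-up sample line for illustration
        Map<String, String> row = parse("520\tHello HBase\tTom\tA demo\tSome text");
        System.out.println(rowKey(row) + " -> " + row);
    }
}
```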

Test.java (the servlet):

```java
package servlet;

import java.io.IOException;

import javax.servlet.ServletException;
import javax.servlet.annotation.WebServlet;
import javax.servlet.http.HttpServlet;
import javax.servlet.http.HttpServletRequest;
import javax.servlet.http.HttpServletResponse;

import control.OutPrx;

/**
 * Servlet implementation class Test: reads one article from HBase and forwards it to hello.jsp
 */
@WebServlet("/Test")
public class Test extends HttpServlet {

    private static final long serialVersionUID = 1L;

    public Test() {
        super();
    }

    @Override
    protected void doGet(HttpServletRequest request, HttpServletResponse response)
            throws ServletException, IOException {
        OutPrx oo = new OutPrx();
        oo.get();

        // Store the values as request attributes for the JSP
        request.setAttribute("id", oo.getId());
        request.setAttribute("title", oo.getTitle());
        request.setAttribute("author", oo.getAuthor());
        request.setAttribute("describe", oo.getDescribe());
        request.setAttribute("content", oo.getContent());

        System.out.println("Forwarding article " + oo.getId() + " to hello.jsp");
        request.getRequestDispatcher("/hello.jsp").forward(request, response);
    }

    @Override
    protected void doPost(HttpServletRequest request, HttpServletResponse response)
            throws ServletException, IOException {
        // Handle POST the same way as GET
        doGet(request, response);
    }
}
```

log4j.properties:

```properties
### set log levels - DEBUG is very verbose with the HBase/ZooKeeper client; change 'DEBUG' to 'INFO' for less output ###
log4j.rootLogger=DEBUG,stdout,file

## Disable other log
#log4j.logger.org.springframework=OFF
#log4j.logger.org.apache.struts2=OFF
#log4j.logger.com.opensymphony.xwork2=OFF
#log4j.logger.com.ibatis=OFF
#log4j.logger.org.hibernate=OFF

### direct log messages to stdout ###
log4j.appender.stdout=org.apache.log4j.ConsoleAppender
log4j.appender.stdout.Target=System.out
log4j.appender.stdout.layout=org.apache.log4j.PatternLayout
log4j.appender.stdout.layout.ConversionPattern=%d{ABSOLUTE} %5p %c{1}:%L - %m%n

### direct messages to logs/spider_web.log, rolled over daily ###
### (DatePattern is only supported by DailyRollingFileAppender, not by plain FileAppender) ###
log4j.appender.file=org.apache.log4j.DailyRollingFileAppender
log4j.appender.file.File=logs/spider_web.log
log4j.appender.file.DatePattern='.'yyyy-MM-dd
log4j.appender.file.layout=org.apache.log4j.PatternLayout
log4j.appender.file.layout.ConversionPattern=%d{ABSOLUTE} %5p %c{1}:%L - %m%n

### direct messages from cn.superwu.crm.service to logs/biapp-service.log ###
log4j.logger.cn.superwu.crm.service=INFO, ServerDailyRollingFile
log4j.appender.ServerDailyRollingFile=org.apache.log4j.DailyRollingFileAppender
log4j.appender.ServerDailyRollingFile.File=logs/biapp-service.log
log4j.appender.ServerDailyRollingFile.DatePattern='.'yyyy-MM-dd
log4j.appender.ServerDailyRollingFile.layout=org.apache.log4j.PatternLayout
log4j.appender.ServerDailyRollingFile.layout.ConversionPattern=%d{yyyy-MM-dd HH:mm:ss} -[%r]-[%p] %m%n

#log4j.logger.cn.superwu.crm.service.DrmService=INFO, ServerDailyRollingFile
#log4j.appender.drm=org.apache.log4j.RollingFileAppender
#log4j.appender.drm.File=logs/crm-drm.log
#log4j.appender.drm.MaxFileSize=10000KB
#log4j.appender.drm.MaxBackupIndex=10
#log4j.appender.drm.Append=true
#log4j.appender.drm.layout=org.apache.log4j.PatternLayout
#log4j.appender.drm.layout.ConversionPattern=[start]%d{yyyy/MM/dd/ HH:mm:ss}[DATE]%n%p[PRIORITY]%n%x[NDC]%n%t[THREAD]%n%c[CATEGORY]%n%m[MESSAGE]%n%n
#log4j.appender.drm.layout.ConversionPattern=[%5p]%d{yyyy-MM-dd HH:mm:ss}[%c](%F:%L)%n%m%n%n
```
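A `DailyRollingFileAppender` such as `ServerDailyRollingFile` (log4j 1.x) keeps writing to the file named in `File`. At the end of each period it renames the finished file by appending that period's date, formatted with `DatePattern`, which is a `java.text.SimpleDateFormat` pattern. The quotes around the dot make it a literal character.

The sketch below only reproduces that file naming, to show which file names to expect. It is not log4j code, and `RolledLogName` is a hypothetical name.

```java
import java.text.SimpleDateFormat;
import java.util.Date;
import java.util.Locale;
import java.util.TimeZone;

// Sketch (not log4j code): the name a DailyRollingFileAppender gives a rolled-over log
final class RolledLogName {

    // Appends the period's date, formatted with the DatePattern, to the active file name
    static String rolledName(String file, String datePattern, Date period, TimeZone zone) {
        // Locale.ROOT keeps the digits ASCII regardless of the machine's locale
        SimpleDateFormat format = new SimpleDateFormat(datePattern, Locale.ROOT);
        format.setTimeZone(zone);
        return file + format.format(period);
    }

    public static void main(String[] args) {
        // '.'yyyy-MM-dd as in log4j.properties: the quotes make the dot a literal character
        System.out.println(rolledName("logs/biapp-service.log", "'.'yyyy-MM-dd",
                new Date(), TimeZone.getDefault()));
    }
}
```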

hello.jsp:

```jsp
<%@ page language="java" contentType="text/html; charset=UTF-8"
    pageEncoding="UTF-8"%>
<!DOCTYPE html PUBLIC "-//W3C//DTD HTML 4.01 Transitional//EN" "http://www.w3.org/TR/html4/loose.dtd">
<html>
<head>
<meta http-equiv="Content-Type" content="text/html; charset=UTF-8">
<title>Insert title here</title>
</head>
<body>
<%-- Values stored by the Test servlet with request.setAttribute --%>
<% String id = (String) request.getAttribute("id"); %>
<% String title = (String) request.getAttribute("title"); %>
<% String author = (String) request.getAttribute("author"); %>
<% String describe = (String) request.getAttribute("describe"); %>
<% String content = (String) request.getAttribute("content"); %>
<%= "Article ID: " + id %> <br><br>
<%= "Article title: " + title %> <br><br>
<%= "Article author: " + author %> <br><br>
<%= "Article description: " + describe %> <br><br>
<%= "Article content: " + content %> <br><br>
</body>
</html>
```
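The scriptlets above have two gaps:

- When an attribute was never set, they print the literal text `null`. This happens, for example, when hello.jsp is opened directly instead of through the /Test servlet.
- They write the article text into the page unescaped, so a `<` in the content would be parsed as HTML.

A hypothetical helper such as `HtmlText.display` below covers both cases. To call it from hello.jsp, it would have to be made public and placed in a package the JSP imports, for example `<%@ page import="control.HtmlText" %>`. If JSTL is on the classpath, `<c:out value="${title}" default="..."/>` is the standard alternative, since it escapes XML by default.

```java
// Hypothetical helper for JSP output: null-safe and HTML-escaped
final class HtmlText {

    // Returns fallback for null, otherwise the text with HTML special characters escaped
    static String display(Object value, String fallback) {
        if (value == null) {
            return fallback;
        }
        String text = value.toString();
        StringBuilder out = new StringBuilder(text.length());
        for (int i = 0; i < text.length(); i++) {
            char c = text.charAt(i);
            switch (c) {
                case '&': out.append("&amp;"); break;
                case '<': out.append("&lt;"); break;
                case '>': out.append("&gt;"); break;
                case '"': out.append("&quot;"); break;
                case '\'': out.append("&#39;"); break;
                default: out.append(c);
            }
        }
        return out.toString();
    }

    public static void main(String[] args) {
        System.out.println(display("<b>HBase</b> & JSP", "(none)"));
        System.out.println(display(null, "(none)"));
    }
}
```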

Right-click the servlet class and run it on the server (Run As > Run on Server in Eclipse EE). The result looks like this:

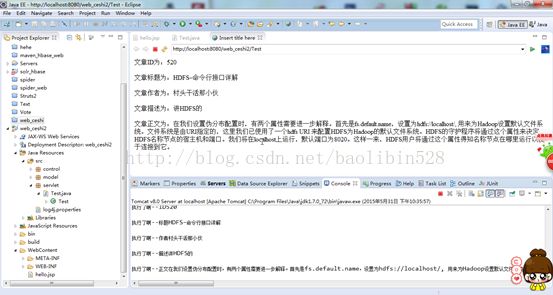
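Besides Eclipse's built-in browser, any HTTP client can request the page. The sketch below uses `java.net.HttpURLConnection` to fetch a URL and return the body decoded as UTF-8, which is the charset hello.jsp declares. `PageFetcher` is a hypothetical name.

The default URL in `main` is an assumption. It presumes Tomcat's default port 8080, a context path equal to the project name `web_ceshi2`, and the `/Test` mapping from `@WebServlet`. Check the server settings in Eclipse for the real values.

```java
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.InputStream;
import java.net.HttpURLConnection;
import java.net.URI;
import java.nio.charset.StandardCharsets;

// Sketch: fetches a page and returns its body decoded as UTF-8
final class PageFetcher {

    // Throws IOException for connection failures and for any status other than 200
    static String fetch(String url) throws IOException {
        HttpURLConnection conn = (HttpURLConnection) URI.create(url).toURL().openConnection();
        conn.setConnectTimeout(3000);
        conn.setReadTimeout(10000);
        try {
            int status = conn.getResponseCode();
            if (status != HttpURLConnection.HTTP_OK) {
                throw new IOException("HTTP " + status + " from " + url);
            }
            try (InputStream in = conn.getInputStream()) {
                ByteArrayOutputStream buffer = new ByteArrayOutputStream();
                byte[] chunk = new byte[4096];
                int n;
                while ((n = in.read(chunk)) != -1) {
                    buffer.write(chunk, 0, n);
                }
                return new String(buffer.toByteArray(), StandardCharsets.UTF_8);
            }
        } finally {
            conn.disconnect();
        }
    }

    public static void main(String[] args) throws IOException {
        // Assumed URL: default Tomcat port, context path = project name, servlet mapping /Test
        String url = args.length > 0 ? args[0] : "http://localhost:8080/web_ceshi2/Test";
        System.out.println(fetch(url));
    }
}
```

A 500 response here usually means the servlet threw an exception. The server console in Eclipse shows the stack trace.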

The whole Eclipse window:

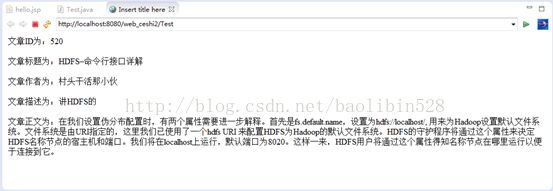

The web page as shown inside Eclipse:

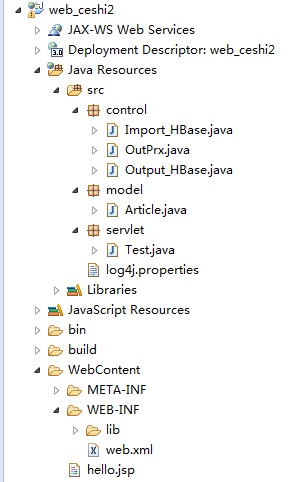

The project view in Eclipse:

The page as shown in a web browser:

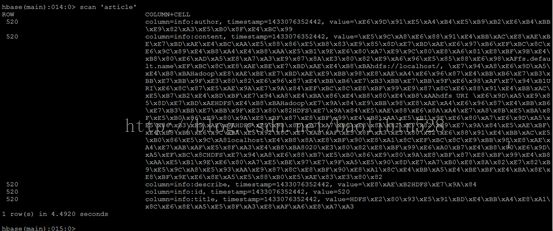

Viewing the data in HBase:

Viewing the HBase table structure:

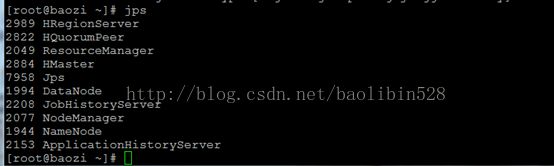
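If the article text is non-ASCII (for example Chinese), the HBase shell does not show it as readable characters. The shell prints values with HBase's `Bytes.toStringBinary`. That method keeps printable ASCII except the backslash and writes every other byte as a `\xHH` escape, so 中 appears as `\xE4\xB8\xAD`, its UTF-8 bytes.

This is also why decoding values with `new String(bytes)` can garble text stored as UTF-8. That constructor uses the platform default charset, for example GBK on a Chinese-locale Windows machine running Eclipse. Passing an explicit UTF-8 charset, or using `Bytes.toString`, avoids the problem.

The stdlib-only sketch below approximates the shell's escaping and shows the UTF-8 decode. It is not the HBase implementation, and `ShellBytes` is a hypothetical name.

```java
import java.nio.charset.StandardCharsets;

// Sketch approximating HBase shell output: printable ASCII (except '\') kept, other bytes as \xHH
final class ShellBytes {

    static String escape(byte[] bytes) {
        StringBuilder out = new StringBuilder();
        for (byte b : bytes) {
            int v = b & 0xff;
            if (v >= 0x20 && v < 0x7f && v != '\\') {
                out.append((char) v);
            } else {
                out.append(String.format("\\x%02X", v));
            }
        }
        return out.toString();
    }

    // Decodes stored bytes explicitly as UTF-8 instead of the platform default charset
    static String decode(byte[] bytes) {
        return new String(bytes, StandardCharsets.UTF_8);
    }

    public static void main(String[] args) {
        String title = "HBase \u4e2d\u6587"; // "HBase 中文"
        byte[] stored = title.getBytes(StandardCharsets.UTF_8); // bytes as written to the cell
        System.out.println(escape(stored)); // shell-style view
        System.out.println(decode(stored)); // original text
    }
}
```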

Before running the web application, make sure both Hadoop and HBase are started.
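One quick check is whether the ports the code connects to accept connections. These are ZooKeeper's client port 2181 and the HDFS NameNode port 9000 on 192.168.1.200, both taken from the configuration in the code. `PortCheck` is a hypothetical name.

The sketch below only tests whether a TCP connection opens within a timeout. An open port does not prove that HBase itself is healthy; running `status` in the HBase shell is a fuller check.

```java
import java.io.IOException;
import java.net.InetSocketAddress;
import java.net.Socket;

// Sketch: checks whether a TCP port accepts connections within a timeout
final class PortCheck {

    static boolean isOpen(String host, int port, int timeoutMillis) {
        try (Socket socket = new Socket()) {
            socket.connect(new InetSocketAddress(host, port), timeoutMillis);
            return true;
        } catch (IOException e) {
            return false; // refused, unreachable, unknown host, or timed out
        }
    }

    public static void main(String[] args) {
        String host = "192.168.1.200"; // cluster address used in this article's configuration
        System.out.println("ZooKeeper 2181: " + isOpen(host, 2181, 2000));
        System.out.println("HDFS NameNode 9000: " + isOpen(host, 9000, 2000));
    }
}
```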

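If loading fails, try recreating the project first and running it again. One example is an error around the @WebServlet("/Test") annotation, which requires a Servlet 3.0 container such as Tomcat 7 or later. Another is an error saying some class cannot be found.

If a fresh project still does not work, you will have to analyze the specific error case by case.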

This was purely a project for my own amusement; using Maven, and Spring MVC, would of course be better.