使用Realsense D435相机在ROS Kinetic中跑通ORB-SLAM2

文章中不足,错误的地方欢迎大家批评指正,如果哪步遇到了问题,我尽力为你解答[皱眉]。这个文章主要是想记录当时配环境的步骤,以防以后再配忘了某步。

目录

- 使用配置

- 主要步骤

- 安装基于ROS使用RealSense的包

- Step1 安装RealSense SDK

- Step2 安装ROS Kinetic

- Step3 安装基于ROS使用RealSense的包

- 配置ORB-SLAM2

- 步骤

- 单目测试

- RGB-D相机测试

- 获取相机信息,实现数据传输

- 获得相机内参矩阵

- 测试

使用配置

- 系统:Ubuntu16.04,ROS Kinetic

- 相机:Intel RealSense D435

- SLAM:ORB-SLAM

主要步骤

- 安装基于ROS使用RealSense的包(包含安装RealSense SDK和ROS Kinetic)

- 配置ORB-SLAM2

- 获取相机信息,实现数据传输

安装基于ROS使用RealSense的包

Step1 安装RealSense SDK

在librealsense/doc/这里面有两个文档,一个是distribution_linux.md,另一个是installation.md

我是根据distribution_linux.md装的,

如下:

Register the server’s public key :

sudo apt-key adv --keyserver keys.gnupg.net --recv-key C8B3A55A6F3EFCDE || sudo apt-key adv --keyserver hkp://keyserver.ubuntu.com:80 --recv-key C8B3A55A6F3EFCDE

Add the server to the list of repositories :

Ubuntu 16 LTS:

sudo add-apt-repository "deb http://realsense-hw-public.s3.amazonaws.com/Debian/apt-repo xenial main" -u

When upgrading, remove the old records:

sudo rm -f /etc/apt/sources.list.d/realsense-public.list.

sudo apt-get update.

In order to run demos install:

sudo apt-get install librealsense2-dkms

sudo apt-get install librealsense2-utils

The above two lines will deploy librealsense2 udev rules, build and activate kernel modules, runtime library and executable demos and tools.

Reconnect the Intel RealSense depth camera and run: realsense-viewer to verify the installation.

Developers shall install additional packages:

sudo apt-get install librealsense2-dev

sudo apt-get install librealsense2-dbg

With dev package installed, you can compile an application with librealsense using g++ -std=c++11 filename.cpp -lrealsense2 or an IDE of your choice.

Verify that the kernel is updated :

modinfo uvcvideo | grep "version:"should include realsense string

Step2 安装ROS Kinetic

官方教程

sudo sh -c 'echo "deb http://packages.ros.org/ros/ubuntu $(lsb_release -sc) main" > /etc/apt/sources.list.d/ros-latest.list'sudo apt-key adv --keyserver hkp://ha.pool.sks-keyservers.net:80 --recv-key 421C365BD9FF1F717815A3895523BAEEB01FA116sudo apt-get update- Desktop-Full Install: (Recommended) : ROS, rqt, rviz, robot-generic libraries, 2D/3D simulators, navigation and 2D/3D perception (因为比较全,所以装的是完全版)

sudo apt-get install ros-kinetic-desktop-full # apt-cache search ros-kineticsudo rosdep init

rosdep updateecho "source /opt/ros/kinetic/setup.bash" >> ~/.bashrc

source ~/.bashrcsudo apt install python-rosinstall python-rosinstall-generator python-wstool build-essential

检查ROS是否成功安装的小乌龟的例子:

大概三个终端

roscorerosrun turtlesim turtlesim_noderosrun turtlesim turtle_teleop_key

鼠标点击此窗口,保证第三个终端窗口在最前,然后再按上下左右,看小乌龟是否运动,能动说明成功安装了。 鼠标点击此窗口,保证第三个终端窗口在最前,然后再按上下左右,看小乌龟是否运动,能动说明成功安装了。

Step3 安装基于ROS使用RealSense的包

官方教程

- Create a catkin workspace

mkdir -p ~/catkin_ws/src

cd ~/catkin_ws/src/ - Clone the latest Intel® RealSense™ ROS from here into ‘catkin_ws/src/’

把realsense这个文件下载/克隆到该目录下

catkin_init_workspace

cd ..

catkin_make clean

catkin_make -DCATKIN_ENABLE_TESTING=False -DCMAKE_BUILD_TYPE=Release

catkin_make install

echo "source ~/catkin_ws/devel/setup.bash" >> ~/.bashrc

source ~/.bashrc

检验是否能在ros使用realsense相机:

- install rgbd_launch

sudo apt-get install ros-kinetic-rgbd-launch roslaunch realsense2_camera rs_rgbd.launch- 再打开一个终端,输入

rviz

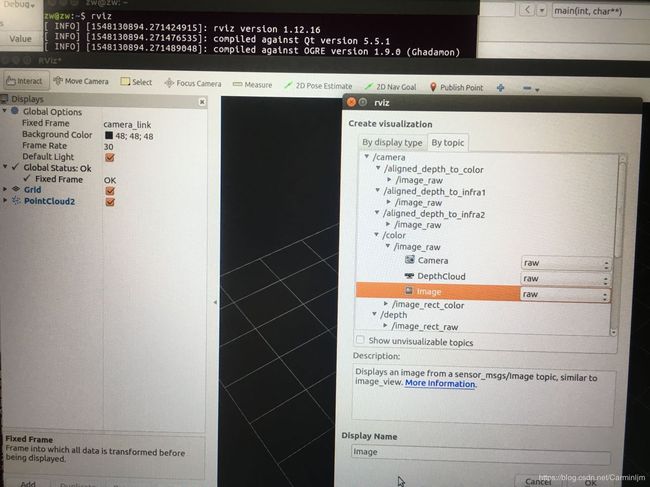

此时并不能看到什么结果 - 左上角 Displays 中 Fixed Frame 选项中,下拉菜单选择 camera_link

- 这是主要到Global Status变成了绿色

- 点击该框中的Add -> 上方点击 By topic -> /depth_registered 下的 /points 下的/PointCloud2

- 点击该框中的Add -> 上方点击 By topic -> /color 下的 /image_raw 下的image

配置ORB-SLAM2

步骤

对我帮助很大的一篇教程:ORB-SLAM2编译安装和USB摄像头例程运行

作者:WafeiShushu

此时我们已经有了一个工作空间

我首先把ORB_SLAM2的程序拷到了/catkin_ws中

-

安装git cmake 和一些库

sudo apt-get install git cmake

sudo apt-get install libblas-dev liblapack-dev -

关于Pangolin

sudo apt-get install libglew-dev

sudo apt-get install libboost-dev libboost-thread-dev libboost-filesystem-dev

sudo apt-get install libpython2.7-dev

sudo apt-get install build-essential

*我把Pangolin git到了ORB_SLAM2那个程序的文件夹中,我感觉这步应该不是这样的,不过问题不大

git clone https://github.com/stevenlovegrove/Pangolin.git

cd Pangolin -

编译安装Pangolin

mkdir build

cd build

cmake -DCPP11_NO_BOOST=1 ..

make -

安装OpenCV依赖

sudo apt-get install cmake git libgtk2.0-dev pkg-config libavcodec-dev libavformat-dev libswscale-dev -

OpenCV

OpenCV的版本:Required at leat 2.4.3. Tested with OpenCV 2.4.11 and OpenCV 3.2.

我装的是3.2.0 -

下载完后解压,进入OpenCV文件夹进行编译安装(opencv下哪问题好像都不大)

mkdir release

cd release

cmake -D CMAKE_BUILD_TYPE=RELEASE -D

CMAKE_INSTALL_PREFIX=/usr/local ..

make

sudo make install -

Eigen3

我发现这一趟安装下来,用pkg-config --modversion eigen3查看eigen3的版本已经有3.2.92,就没继续装eigen3了

版本要求:Required at least 3.1.0.

据说:下载3.2.10版本,如果用3.3.x版本可能会造成ORB-SLAM2编译失败,因为eigen3.3.4版本修改了文件目录结构。 -

ORB_SLAM2

下载或克隆到/catkin_ws文件中。

cd ORB_SLAM2

chmod +x build.sh

gedit build.sh此步骤可以将build.sh的make -j改成make 防止多线程编译错误,之后我自己没有更改,发现没有问题。

./build.sh

此时,返回到~目录下

gedit .bashrc

写入export ROS_PACKAGE_PATH=${ROS_PACKAGE_PATH}:/home/(user)/catkin_ws/ORB_SLAM2/Examples/ROS

接着去编译build_ros.sh

chmod +x build.sh

- 可以同上步一样,将build_ros.sh的make -j改成make (不过我没做)

gedit build_ros.sh - 在/Examples/ROS/ORB-SLAM2/CMakeLists.txt文件下修改 加上 -lboost_system(我没加这个的时候会有关于stl的错误)

set(LIBS

${OpenCV_LIBS}

${EIGEN3_LIBS}

${Pangolin_LIBRARIES}

${PROJECT_SOURCE_DIR}/../../../Thirdparty/DBoW2/lib/libDBoW2.so

${PROJECT_SOURCE_DIR}/../../../Thirdparty/g2o/lib/libg2o.so

${PROJECT_SOURCE_DIR}/../../../lib/libORB_SLAM2.so

-lboost_system #此处

)

单目测试

下载测试集TUM Dataset

cd 到ORB_SLAM2文件夹下

./Examples/Monocular/mono_tum Vocabulary/ORBvoc.txt Examples/Monocular/TUMX.yaml(可以为TUM1/TUM2/TUM3) PATH_TO_SEQUENCE_FOLDER(你数据集所在的位置)

例子:

./Examples/Monocular/mono_tum Vocabulary/ORBvoc.txt Examples/Monocular/TUM1.yaml rgbd_dataset_freiburg1_desk

RGB-D相机测试

以rgbd_dataset_freiburg2_pioneer_360数据集为例

- 下载 associate.py.放在/ORB_SLAM2/Examples/RGB-D/目录下面.

- 打开终端,进入到associate.py所在目录,即/ORB_SLAM2/Examples/RGB-D/,之后运行

python associate.py ../../rgbd_dataset_freiburg2_pioneer_360/rgb.txt ../../rgbd_dataset_freiburg2_pioneer_360/depth.txt > associations.txt

在该目录中将会生成一个associations.txt文件. - 在ORB_SLAM2 主目录下运行

./Examples/RGB-D/rgbd_tum Vocabulary/ORBvoc.txt Examples/RGB-D/TUM2.yaml rgbd_dataset_freiburg2_pioneer_360 Examples/RGB-D/associations.txt

获取相机信息,实现数据传输

参考教程

获得相机内参矩阵

they’re published on a topic in the realsense ROS node. We can get those parameters by running rostopic echo /camera/color/camera_info

打开三个终端

roscoreroslaunch realsense2_camera rs_rgbd.launch(在catkin_ws目录下,这步基于我们的第一个操作)rostopic echo /camera/color/camera_info

运行之后的结构长这样

---

header:

seq: 108

stamp:

secs: 1548140470

nsecs: 392033543

frame_id: "camera_color_optical_frame"

height: 480

width: 640

distortion_model: "plumb_bob"

D: [0.0, 0.0, 0.0, 0.0, 0.0]

K: [614.4114379882812, 0.0, 324.2138671875, 0.0, 614.7125244140625, 236.86329650878906, 0.0, 0.0, 1.0]

R: [1.0, 0.0, 0.0, 0.0, 1.0, 0.0, 0.0, 0.0, 1.0]

P: [614.4114379882812, 0.0, 324.2138671875, 0.0, 0.0, 614.7125244140625, 236.86329650878906, 0.0, 0.0, 0.0, 1.0, 0.0]

binning_x: 0

binning_y: 0

roi:

x_offset: 0

y_offset: 0

height: 0

width: 0

do_rectify: False

---

K就是我们得到的内参矩阵写成了向量模式。

K = [fx 0 cx 0 fy cy 0 0 1 ]

然后就是baseline,根据官方datasheet,

D435 的baseline为55mm,bf的值为bf = baseline (in meters) * fx。

给出根据相机参数得到的新的yaml文件

%YAML:1.0

#--------------------------------------------------------------------------------------------

# Camera Parameters. Adjust them!

#--------------------------------------------------------------------------------------------

# Camera calibration and distortion parameters (OpenCV)

Camera.fx: 614.4114379882812

Camera.fy: 614.7125244140625

Camera.cx: 324.2138671875

Camera.cy: 236.86329650878906

Camera.k1: 0.0

Camera.k2: 0.0

Camera.p1: 0.0

Camera.p2: 0.0

Camera.width: 640

Camera.height: 480

# Camera frames per second

Camera.fps: 30.0

# IR projector baseline times fx (aprox.)

Camera.bf: 30.720571899

# Color order of the images (0: BGR, 1: RGB. It is ignored if images are grayscale)

Camera.RGB: 1

# Close/Far threshold. Baseline times.

ThDepth: 50.0

# Deptmap values factor

DepthMapFactor: 1000.0

#--------------------------------------------------------------------------------------------

# ORB Parameters

#--------------------------------------------------------------------------------------------

# ORB Extractor: Number of features per image

ORBextractor.nFeatures: 1000

# ORB Extractor: Scale factor between levels in the scale pyramid

ORBextractor.scaleFactor: 1.2

# ORB Extractor: Number of levels in the scale pyramid

ORBextractor.nLevels: 8

# ORB Extractor: Fast threshold

# Image is divided in a grid. At each cell FAST are extracted imposing a minimum response.

# Firstly we impose iniThFAST. If no corners are detected we impose a lower value minThFAST

# You can lower these values if your images have low contrast

ORBextractor.iniThFAST: 20

ORBextractor.minThFAST: 7

#--------------------------------------------------------------------------------------------

# Viewer Parameters

#--------------------------------------------------------------------------------------------

Viewer.KeyFrameSize: 0.05

Viewer.KeyFrameLineWidth: 1

Viewer.GraphLineWidth: 0.9

Viewer.PointSize:2

Viewer.CameraSize: 0.08

Viewer.CameraLineWidth: 3

Viewer.ViewpointX: 0

Viewer.ViewpointY: -0.7

Viewer.ViewpointZ: -1.8

Viewer.ViewpointF: 500

realsense发布(publish)的camera data 在/camera/color/image_raw 和 depth data 在 /camera/depth/image_rect_raw ,所以我们要更改 ~/ORB_SLAM2/Examples/ROS/ORB_SLAM2/src/ros_rgbd.cc下接收的位置

之后要再运行./build_ros.sh

*我记得我在重复很多遍的时候,提前就改好文件,然后运行的./build_ros.sh

测试

然后差不多所有配置都搞定了

最后,打开三个终端

roscorerosrun ORB_SLAM2 RGBD /home/(user name)/catkin_ws/ORB_SLAM2/Vocabulary/ORBvoc.txt /home/(user name)/catkin_ws/ORB_SLAM2/Examples/ROS/ORB_SLAM2/AsusD435.yamlroslaunch realsense2_camera rs_rgbd.launch

即可看到结果