Kubernetes (1.8.1) Deployment Notes

1. Environment

Server plan:

| IP | Hostname | Role |
|---|---|---|
| 192.168.119.180 | k8s-0, etcd-1 | Master, etcd, NFSServer |
| 192.168.119.181 | k8s-1, etcd-2 | Mission, etcd |
| 192.168.119.182 | k8s-2, etcd-3 | Mission, etcd |
| 192.168.119.183 | k8s-3 | Mission |

Operating system and software versions:

- OS: CentOS Linux release 7.3.1611 (Core)

- ETCD: etcd-v3.2.9-linux-amd64

- Flannel: flannel.x86_64-0.7.1-2.el7

- Docker: docker.x86_64-2:1.12.6-61.git85d7426.el7.centos

- K8S: v1.8.1

The /etc/hosts file is identical on all servers:

[root@k8s-0 ~]# cat /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

192.168.119.180 k8s-0 etcd-1

192.168.119.181 k8s-1 etcd-2

192.168.119.182 k8s-2 etcd-3

192.168.119.183 k8s-3

Disable the firewall on every node:

[root@k8s-0 ~]# systemctl stop firewalld

[root@k8s-0 ~]# systemctl disable firewalld

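[root@k8s-0 ~]# systemctl status firewalld

If you are not yet comfortable with firewall configuration, it is better to turn the firewall off first and avoid digging a hole for yourself.

Disable swap on every node:

[root@k8s-0 ~]# swapoff -a

2. ETCD Cluster Deployment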

etcd is a distributed key value store that provides a reliable way to store data across a cluster of machines. It’s open-source and available on GitHub. etcd gracefully handles leader elections during network partitions and will tolerate machine failure, including the leader.

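Within the cluster, ETCD acts as the distributed data store and holds the configuration data of Kubernetes and flanneld, for example Kubernetes node information and flanneld network segment information.

2.1 Creating certificates

For background on the certificate concepts involved, see: 网络安全相关知识简介 (a brief introduction to network security concepts)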

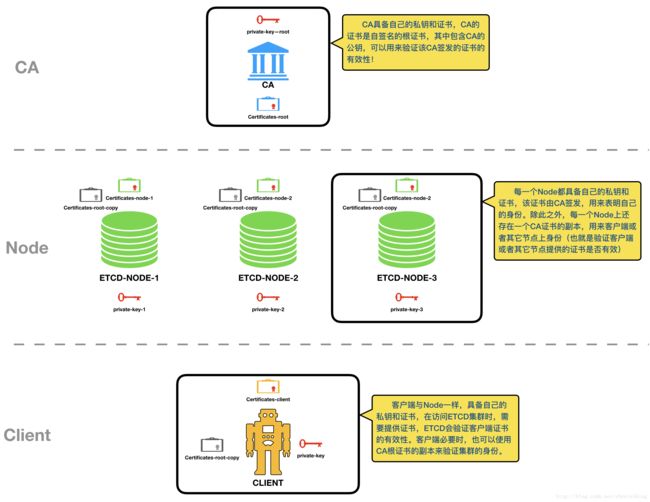

ETCD可以配置TLS证书实现客户端到服务器和服务器到服务器的身份认证。因此,集群中的每一个节点都需要有自己的证书,需要访问ETCD集群的客户端在访问集群时也需要提供证书。除此之外,所有的集群节点证书之间需要相互信任,也就是说:我们需要一个CA来生成自签名的根证书,并为集群中的每个节点和需要访问集群的客户端签发对应的证书。ETCD集群中的所有节点必须信任根证书,通过根证书来验证其它证书的真伪。集群中各个角色对应证书及其关系如下图:

这里我们不需要搭建自己的CA服务器,只需要提供根证书及其它集群角色所需要的证书即可,将根证书的副本分发到集群的各个角色上。生成证书时,我们使用CloudFlare的开源工具CFSSL。

2.1.1 Installing the CFSSL tool

### Download ###

[root@k8s-0 ~]# curl -s -L -o ./cfssl https://pkg.cfssl.org/R1.2/cfssl_linux-amd64

[root@k8s-0 ~]# curl -s -L -o ./cfssljson https://pkg.cfssl.org/R1.2/cfssljson_linux-amd64

[root@k8s-0 ~]# ls -l cf*

-rw-r--r--. 1 root root 10376657 11月 7 04:25 cfssl

-rw-r--r--. 1 root root 2277873 11月 7 04:27 cfssljson

### Make executable ###

[root@k8s-0 ~]# chmod +x cf*

[root@k8s-0 ~]# ls -l cf*

-rwxr-xr-x. 1 root root 10376657 11月 7 04:25 cfssl

-rwxr-xr-x. 1 root root 2277873 11月 7 04:27 cfssljson

### Move to /usr/local/bin ###

[root@k8s-0 ~]# mv cf* /usr/local/bin/

### Test ###

[root@k8s-0 ~]# cfssl version

Version: 1.2.0

Revision: dev

Runtime: go1.6

2.1.2 Generating the certificate files

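Use cfssl to generate a template for the CA certificate signing request, in JSON format:

### Generate the default template ###

[root@k8s-0 etcd]# pwd

/root/cfssl/etcd

[root@k8s-0 etcd]# cfssl print-defaults csr > ca-csr.json

[root@k8s-0 etcd]# ls -l

-rw-r--r--. 1 root root 287 11月 7 05:11 ca-csr.json

Here "csr" stands for certificate signing request.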

Edit the ca-csr.json template. After editing, the file looks like this:

{
    "CN": "ETCD-Cluster",
    "hosts": [
        "localhost",
        "127.0.0.1",
        "etcd-1",
        "etcd-2",
        "etcd-3"
    ],
    "key": {
        "algo": "rsa",
        "size": 2048
    },
    "names": [
        {
            "C": "CN",
            "L": "Wuhan",
            "ST": "Hubei",
            "O": "Dameng",
            "OU": "CloudPlatform"
        }
    ]
}

The hosts field lists the hosts on which this certificate may be used.

Generate the root certificate and private key from the certificate signing request:

[root@k8s-0 etcd]# cfssl gencert -initca ca-csr.json | cfssljson -bare ca -

2017/11/07 05:19:23 [INFO] generating a new CA key and certificate from CSR

2017/11/07 05:19:23 [INFO] generate received request

2017/11/07 05:19:23 [INFO] received CSR

2017/11/07 05:19:23 [INFO] generating key: rsa-2048

2017/11/07 05:19:24 [INFO] encoded CSR

2017/11/07 05:19:24 [INFO] signed certificate with serial number 72023613742258533689603590346479034316827863176

[root@k8s-0 etcd]# ls -l

-rw-r--r--. 1 root root 1106 11月 7 05:19 ca.csr

-rw-r--r--. 1 root root 390 11月 7 05:19 ca-csr.json

-rw-------. 1 root root 1675 11月 7 05:19 ca-key.pem

-rw-r--r--. 1 root root 1403 11月 7 05:19 ca.pem

ca.pem is the certificate file; it contains the CA's public key.

ca-key.pem is the private key; keep it safe.

ca.csr is the certificate signing request; it can be used to request a new certificate.

Use cfssl to generate a template for the signing policy file, which tells the CA what kinds of certificates to issue:

[root@k8s-0 etcd]# pwd

/root/cfssl/etcd

[root@k8s-0 etcd]# cfssl print-defaults config > ca-config.json

[root@k8s-0 etcd]# ls -l

-rw-r--r--. 1 root root 567 11月 7 05:39 ca-config.json

-rw-r--r--. 1 root root 1106 11月 7 05:19 ca.csr

-rw-r--r--. 1 root root 390 11月 7 05:19 ca-csr.json

-rw-------. 1 root root 1675 11月 7 05:19 ca-key.pem

-rw-r--r--. 1 root root 1403 11月 7 05:19 ca.pem

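Edit the ca-config.json template. After editing, the file looks like this:

{
    "signing": {
        "default": {
            "expiry": "43800h"
        },
        "profiles": {
            "server": {
                "expiry": "43800h",
                "usages": [
                    "signing",
                    "key encipherment",
                    "server auth"
                ]
            },
            "client": {
                "expiry": "43800h",
                "usages": [
                    "signing",
                    "key encipherment",
                    "client auth"
                ]
            },
            "peer": {
                "expiry": "43800h",
                "usages": [
                    "signing",
                    "key encipherment",
                    "client auth",
                    "server auth"
                ]
            }
        }
    }
}

1. By default, certificates expire after 43800 hours (5 years).

2. There are three profiles:

server: used for server authentication; stored on the server side to prove the server's identity

client: used for client authentication; stored on the client side to prove the client's identity

peer: can be used for both server and client authentication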
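As a quick check of the expiry arithmetic above, the following sketch converts a cfssl-style expiry value into whole years. The helper name `expiry_years` is made up for illustration, and a 365-day year is assumed.

```shell
# Convert a cfssl "expiry" value given in hours (e.g. "43800h") to whole years.
# expiry_years is a hypothetical helper; a 365-day year is assumed.
expiry_years() {
    hours="${1%h}"                  # strip the trailing "h" if present
    echo $(( hours / 24 / 365 ))    # hours -> days -> years (integer division)
}

expiry_years 43800h
```

Values that are not a whole number of years are rounded down by the integer division.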

Create the JSON file for the server certificate signing request (certificates-node-1.json):

[root@k8s-0 etcd]# pwd

/root/cfssl/etcd

### Generate the template first, then fill in your own values ###

[root@k8s-0 etcd]# cfssl print-defaults csr > certificates-node-1.json

[root@k8s-0 etcd]# ls -l

-rw-r--r--. 1 root root 833 11月 7 06:00 ca-config.json

-rw-r--r--. 1 root root 1106 11月 7 05:19 ca.csr

-rw-r--r--. 1 root root 390 11月 7 05:19 ca-csr.json

-rw-------. 1 root root 1675 11月 7 05:19 ca-key.pem

-rw-r--r--. 1 root root 1403 11月 7 05:19 ca.pem

-rw-r--r--. 1 root root 287 11月 7 06:01 certificates-node-1.json

### The file after editing ###

[root@k8s-0 etcd]# cat certificates-node-1.json

{
    "CN": "etcd-node-1",
    "hosts": [
        "etcd-1",
        "localhost",
        "127.0.0.1"
    ],
    "key": {
        "algo": "rsa",
        "size": 2048
    },
    "names": [
        {
            "C": "CN",
            "L": "Wuhan",
            "ST": "Hubei",
            "O": "Dameng",
            "OU": "CloudPlatform"
        }
    ]
}

### Issue the certificate using the CA private key, the CA certificate and the signing policy file ###

[root@k8s-0 etcd]# cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=server certificates-node-1.json | cfssljson -bare certificates-node-1

2017/11/07 06:23:02 [INFO] generate received request

2017/11/07 06:23:02 [INFO] received CSR

2017/11/07 06:23:02 [INFO] generating key: rsa-2048

2017/11/07 06:23:03 [INFO] encoded CSR

2017/11/07 06:23:03 [INFO] signed certificate with serial number 50773407225485518252456721207664284207973931225

2017/11/07 06:23:03 [WARNING] This certificate lacks a "hosts" field. This makes it unsuitable for websites. For more information see the Baseline Requirements for the Issuance and Management of Publicly-Trusted Certificates, v.1.1.6, from the CA/Browser Forum (https://cabforum.org); specifically, section 10.2.3 ("Information Requirements").

[root@k8s-0 etcd]# ls -l

-rw-r--r--. 1 root root 833 11月 7 06:00 ca-config.json

-rw-r--r--. 1 root root 1106 11月 7 05:19 ca.csr

-rw-r--r--. 1 root root 390 11月 7 05:19 ca-csr.json

-rw-------. 1 root root 1675 11月 7 05:19 ca-key.pem

-rw-r--r--. 1 root root 1403 11月 7 05:19 ca.pem

-rw-r--r--. 1 root root 1082 11月 7 06:23 certificates-node-1.csr

-rw-r--r--. 1 root root 353 11月 7 06:08 certificates-node-1.json

-rw-------. 1 root root 1675 11月 7 06:23 certificates-node-1-key.pem

-rw-r--r--. 1 root root 1452 11月 7 06:23 certificates-node-1.pem

The warning says that the certificate lacks a hosts field, which could make it unsuitable for websites. However, printing the certificate with openssl x509 -in certificates-node-1.pem -text -noout shows that the "X509v3 Subject Alternative Name" field does contain the "hosts" entries from certificates-node-1.json. If the certificate is instead generated with cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=server -hostname="etcd-1,localhost,127.0.0.1" certificates-node-1.json | cfssljson -bare certificates-node-1, no warning is printed, and the resulting certificate has the same content.

Tips: all nodes in the ETCD cluster can share a single certificate and private key, that is, certificates-node-1.pem and certificates-node-1-key.pem can be distributed to the etcd-1, etcd-2 and etcd-3 servers (in that case the certificate's hosts list should presumably cover all three node names).

Repeat the previous step to issue the certificates for etcd-2 and etcd-3:

[root@k8s-0 etcd]# pwd

/root/cfssl/etcd

[root@k8s-0 etcd]# cat certificates-node-2.json

{
    "CN": "etcd-node-2",
    "hosts": [
        "etcd-2",
        "localhost",
        "127.0.0.1"
    ],
    "key": {
        "algo": "rsa",
        "size": 2048
    },
    "names": [
        {
            "C": "CN",
            "L": "Wuhan",
            "ST": "Hubei",
            "O": "Dameng",
            "OU": "CloudPlatform"
        }
    ]
}

[root@k8s-0 etcd]# cat certificates-node-3.json

{
    "CN": "etcd-node-3",
    "hosts": [
        "etcd-3",
        "localhost",
        "127.0.0.1"
    ],
    "key": {
        "algo": "rsa",
        "size": 2048
    },
    "names": [
        {
            "C": "CN",
            "L": "Wuhan",
            "ST": "Hubei",
            "O": "Dameng",
            "OU": "CloudPlatform"
        }
    ]
}

[root@k8s-0 etcd]# cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=server -hostname="etcd-2,localhost,127.0.0.1" certificates-node-2.json | cfssljson -bare certificates-node-2

2017/11/07 06:37:54 [INFO] generate received request

2017/11/07 06:37:54 [INFO] received CSR

2017/11/07 06:37:54 [INFO] generating key: rsa-2048

2017/11/07 06:37:55 [INFO] encoded CSR

2017/11/07 06:37:55 [INFO] signed certificate with serial number 53358189697471981482368171601115864435884153942

[root@k8s-0 etcd]# cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=server -hostname="etcd-3,localhost,127.0.0.1" certificates-node-3.json | cfssljson -bare certificates-node-3

2017/11/07 06:38:16 [INFO] generate received request

2017/11/07 06:38:16 [INFO] received CSR

2017/11/07 06:38:16 [INFO] generating key: rsa-2048

2017/11/07 06:38:17 [INFO] encoded CSR

2017/11/07 06:38:17 [INFO] signed certificate with serial number 202032929825719668992436771371275796219870214492

[root@k8s-0 etcd]# ls -l

-rw-r--r--. 1 root root 833 11月 7 06:00 ca-config.json

-rw-r--r--. 1 root root 1106 11月 7 05:19 ca.csr

-rw-r--r--. 1 root root 390 11月 7 05:19 ca-csr.json

-rw-------. 1 root root 1675 11月 7 05:19 ca-key.pem

-rw-r--r--. 1 root root 1403 11月 7 05:19 ca.pem

-rw-r--r--. 1 root root 1082 11月 7 06:23 certificates-node-1.csr

-rw-r--r--. 1 root root 353 11月 7 06:08 certificates-node-1.json

-rw-------. 1 root root 1675 11月 7 06:23 certificates-node-1-key.pem

-rw-r--r--. 1 root root 1452 11月 7 06:23 certificates-node-1.pem

-rw-r--r--. 1 root root 1082 11月 7 06:37 certificates-node-2.csr

-rw-r--r--. 1 root root 353 11月 7 06:36 certificates-node-2.json

-rw-------. 1 root root 1675 11月 7 06:37 certificates-node-2-key.pem

-rw-r--r--. 1 root root 1452 11月 7 06:37 certificates-node-2.pem

-rw-r--r--. 1 root root 1082 11月 7 06:38 certificates-node-3.csr

-rw-r--r--. 1 root root 353 11月 7 06:37 certificates-node-3.json

-rw-------. 1 root root 1679 11月 7 06:38 certificates-node-3-key.pem

-rw-r--r--. 1 root root 1452 11月 7 06:38 certificates-node-3.pem
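Since the three node CSR files differ only in the node number, the repetition could be scripted. The following is a minimal sketch, not the procedure actually used here: `write_node_csr` is a made-up helper, the files are written to a temporary directory, and the signing step runs only if cfssl and the CA files happen to be present in the current directory.

```shell
# Write the CSR JSON for etcd node number $1 into directory $2.
# write_node_csr is a hypothetical helper; field values mirror the files shown above.
write_node_csr() {
    n="$1"
    dir="$2"
    cat > "$dir/certificates-node-$n.json" <<EOF
{
    "CN": "etcd-node-$n",
    "hosts": ["etcd-$n", "localhost", "127.0.0.1"],
    "key": {"algo": "rsa", "size": 2048},
    "names": [
        {"C": "CN", "L": "Wuhan", "ST": "Hubei", "O": "Dameng", "OU": "CloudPlatform"}
    ]
}
EOF
}

workdir="$(mktemp -d)"
for n in 1 2 3; do
    write_node_csr "$n" "$workdir"
    # Sign only when cfssl and the CA material are available; otherwise just keep the CSR file.
    if command -v cfssl >/dev/null 2>&1 && [ -f ca.pem ] && [ -f ca-key.pem ] && [ -f ca-config.json ]; then
        cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json \
            -profile=server "$workdir/certificates-node-$n.json" \
            | cfssljson -bare "$workdir/certificates-node-$n"
    fi
done
ls "$workdir"
```

Because the hosts list is taken from the JSON file, the -hostname flag is left out here; as noted earlier, cfssl may then print the "lacks a hosts field" warning even though the SAN entries are present.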

Distribute the certificates to the corresponding nodes:

[root@k8s-0 etcd]# pwd

/root/cfssl/etcd

### Create the certificate directory ###

[root@k8s-0 etcd]# mkdir -p /etc/etcd/ssl

[root@k8s-0 etcd]# ssh root@k8s-1 mkdir -p /etc/etcd/ssl

[root@k8s-0 etcd]# ssh root@k8s-2 mkdir -p /etc/etcd/ssl

### Copy each node's certificate files into the directory on that server ###

[root@k8s-0 etcd]# cp ca.pem /etc/etcd/ssl/

[root@k8s-0 etcd]# cp certificates-node-1.pem /etc/etcd/ssl/

[root@k8s-0 etcd]# cp certificates-node-1-key.pem /etc/etcd/ssl/

[root@k8s-0 etcd]# ls -l /etc/etcd/ssl/

-rw-r--r--. 1 root root 1403 11月 7 19:56 ca.pem

-rw-------. 1 root root 1675 11月 7 19:57 certificates-node-1-key.pem

-rw-r--r--. 1 root root 1452 11月 7 19:55 certificates-node-1.pem

### Copy the files to the k8s-1 node ###

[root@k8s-0 etcd]# scp ca.pem root@k8s-1:/etc/etcd/ssl/

[root@k8s-0 etcd]# scp certificates-node-2.pem root@k8s-1:/etc/etcd/ssl/

[root@k8s-0 etcd]# scp certificates-node-2-key.pem root@k8s-1:/etc/etcd/ssl/

[root@k8s-0 etcd]# ssh root@k8s-1 ls -l /etc/etcd/ssl/

-rw-r--r--. 1 root root 1403 11月 7 19:58 ca.pem

-rw-------. 1 root root 1675 11月 7 20:00 certificates-node-2-key.pem

-rw-r--r--. 1 root root 1452 11月 7 19:59 certificates-node-2.pem

### Copy the files to the k8s-2 node ###

[root@k8s-0 etcd]# scp ca.pem root@k8s-2:/etc/etcd/ssl/

[root@k8s-0 etcd]# scp certificates-node-3.pem root@k8s-2:/etc/etcd/ssl/

[root@k8s-0 etcd]# scp certificates-node-3-key.pem root@k8s-2:/etc/etcd/ssl/

[root@k8s-0 etcd]# ssh root@k8s-2 ls -l /etc/etcd/ssl/

-rw-r--r--. 1 root root 1403 11月 7 20:03 ca.pem

-rw-------. 1 root root 1675 11月 7 20:04 certificates-node-3-key.pem

-rw-r--r--. 1 root root 1452 11月 7 20:03 certificates-node-3.pem
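The mapping from certificate to target host follows a simple pattern: etcd member N lives on host k8s-(N-1). The sketch below only prints the copy commands for the remote members as a dry run, so they can be reviewed before being executed. `distribution_commands` is a hypothetical helper name.

```shell
# Print (do not execute) the copy commands for etcd member number $1.
# Assumes member N's files go to host k8s-(N-1), as in the transcript above.
distribution_commands() {
    n="$1"
    host="k8s-$(( n - 1 ))"
    for f in ca.pem "certificates-node-$n.pem" "certificates-node-$n-key.pem"; do
        echo "scp $f root@$host:/etc/etcd/ssl/"
    done
}

# Members 2 and 3 are remote; member 1 is local to k8s-0 and was copied with cp.
for n in 2 3; do
    distribution_commands "$n"
done
```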

To inspect the contents of a certificate:

openssl x509 -in ca.pem -text -noout

2.2 Deploying the ETCD cluster

Download the release package and unpack it:

[root@k8s-0 ~]# pwd

/root

[root@k8s-0 ~]# wget http

[root@k8s-0 ~]# ls -l

-rw-r--r--. 1 root root 10176896 11月 6 19:18 etcd-v3.2.9-linux-amd64.tar.gz

[root@k8s-0 ~]# tar -zxvf etcd-v3.2.9-linux-amd64.tar.gz

[root@k8s-0 ~]# ls -l

drwxrwxr-x. 3 chenlei chenlei 123 10月 7 01:10 etcd-v3.2.9-linux-amd64

-rw-r--r--. 1 root root 10176896 11月 6 19:18 etcd-v3.2.9-linux-amd64.tar.gz

[root@k8s-0 ~]# ls -l etcd-v3.2.9-linux-amd64

drwxrwxr-x. 11 chenlei chenlei 4096 10月 7 01:10 Documentation

-rwxrwxr-x. 1 chenlei chenlei 17123360 10月 7 01:10 etcd

-rwxrwxr-x. 1 chenlei chenlei 14640128 10月 7 01:10 etcdctl

-rw-rw-r--. 1 chenlei chenlei 33849 10月 7 01:10 README-etcdctl.md

-rw-rw-r--. 1 chenlei chenlei 5801 10月 7 01:10 README.md

-rw-rw-r--. 1 chenlei chenlei 7855 10月 7 01:10 READMEv2-etcdctl.md

[root@k8s-0 ~]# cp etcd-v3.2.9-linux-amd64/etcd /usr/local/bin/

[root@k8s-0 ~]# cp etcd-v3.2.9-linux-amd64/etcdctl /usr/local/bin/

[root@k8s-0 ~]# etcd --version

etcd Version: 3.2.9

Git SHA: f1d7dd8

Go Version: go1.8.4

Go OS/Arch: linux/amd64

Create the etcd configuration file:

[root@k8s-0 ~]# cat /etc/etcd/etcd.conf

# [member]
ETCD_NAME=etcd-1
ETCD_DATA_DIR="/var/lib/etcd/default.etcd"
#ETCD_WAL_DIR=""
#ETCD_SNAPSHOT_COUNT="10000"
#ETCD_HEARTBEAT_INTERVAL="100"
#ETCD_ELECTION_TIMEOUT="1000"
ETCD_LISTEN_PEER_URLS="https://0.0.0.0:2380"
ETCD_LISTEN_CLIENT_URLS="https://0.0.0.0:2379"
#ETCD_MAX_SNAPSHOTS="5"
#ETCD_MAX_WALS="5"
#ETCD_CORS=""
#
#[cluster]
ETCD_INITIAL_ADVERTISE_PEER_URLS="https://etcd-1:2380"
# if you use different ETCD_NAME (e.g. test), set ETCD_INITIAL_CLUSTER value for this name, i.e. "test=http:// ..."
ETCD_INITIAL_CLUSTER="etcd-1=https://etcd-1:2380,etcd-2=https://etcd-2:2380,etcd-3=https://etcd-3:2380"
ETCD_INITIAL_CLUSTER_STATE="new"
ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"
ETCD_ADVERTISE_CLIENT_URLS="https://etcd-1:2379"
#ETCD_DISCOVERY=""
#ETCD_DISCOVERY_SRV=""
#ETCD_DISCOVERY_FALLBACK="proxy"
#ETCD_DISCOVERY_PROXY=""
#ETCD_STRICT_RECONFIG_CHECK="false"
#ETCD_AUTO_COMPACTION_RETENTION="0"
#
#[proxy]
#ETCD_PROXY="off"
#ETCD_PROXY_FAILURE_WAIT="5000"
#ETCD_PROXY_REFRESH_INTERVAL="30000"
#ETCD_PROXY_DIAL_TIMEOUT="1000"
#ETCD_PROXY_WRITE_TIMEOUT="5000"
#ETCD_PROXY_READ_TIMEOUT="0"
#
#[security]
ETCD_CERT_FILE="/etc/etcd/ssl/certificates-node-1.pem"
ETCD_KEY_FILE="/etc/etcd/ssl/certificates-node-1-key.pem"
ETCD_CLIENT_CERT_AUTH="true"
ETCD_TRUSTED_CA_FILE="/etc/etcd/ssl/ca.pem"
ETCD_AUTO_TLS="true"
ETCD_PEER_CERT_FILE="/etc/etcd/ssl/certificates-node-1.pem"
ETCD_PEER_KEY_FILE="/etc/etcd/ssl/certificates-node-1-key.pem"
#ETCD_PEER_CLIENT_CERT_AUTH="false"
ETCD_PEER_TRUSTED_CA_FILE="/etc/etcd/ssl/ca.pem"
ETCD_PEER_AUTO_TLS="true"
#
#[logging]
#ETCD_DEBUG="false"
# examples for -log-package-levels etcdserver=WARNING,security=DEBUG
#ETCD_LOG_PACKAGE_LEVELS=""
#
#[profiling]
#ETCD_ENABLE_PPROF="false"
#ETCD_METRICS="basic"

Create the systemd unit file and the user that runs the service:

[root@k8s-0 ~]# cat /usr/lib/systemd/system/etcd.service

[Unit]
Description=Etcd Server
After=network.target
After=network-online.target
Wants=network-online.target

[Service]
Type=notify
WorkingDirectory=/var/lib/etcd/
EnvironmentFile=-/etc/etcd/etcd.conf
User=etcd
# set GOMAXPROCS to number of processors
ExecStart=/bin/bash -c "GOMAXPROCS=$(nproc) /usr/local/bin/etcd --name=\"${ETCD_NAME}\" --cert-file=\"${ETCD_CERT_FILE}\" --key-file=\"${ETCD_KEY_FILE}\" --peer-cert-file=\"${ETCD_PEER_CERT_FILE}\" --peer-key-file=\"${ETCD_PEER_KEY_FILE}\" --trusted-ca-file=\"${ETCD_TRUSTED_CA_FILE}\" --peer-trusted-ca-file=\"${ETCD_PEER_TRUSTED_CA_FILE}\" --initial-advertise-peer-urls=\"${ETCD_INITIAL_ADVERTISE_PEER_URLS}\" --listen-peer-urls=\"${ETCD_LISTEN_PEER_URLS}\" --listen-client-urls=\"${ETCD_LISTEN_CLIENT_URLS}\" --advertise-client-urls=\"${ETCD_ADVERTISE_CLIENT_URLS}\" --initial-cluster-token=\"${ETCD_INITIAL_CLUSTER_TOKEN}\" --initial-cluster=\"${ETCD_INITIAL_CLUSTER}\" --initial-cluster-state=\"${ETCD_INITIAL_CLUSTER_STATE}\" --data-dir=\"${ETCD_DATA_DIR}\""
Restart=on-failure
RestartSec=5
LimitNOFILE=65536

[Install]
WantedBy=multi-user.target

### Create the user for the etcd service ###

[root@k8s-0 ~]# useradd etcd -d /var/lib/etcd -s /sbin/nologin -c "etcd user"

### Change the owner of the certificate files to etcd ###

[root@k8s-0 ~]# chown -R etcd:etcd /etc/etcd/

[root@k8s-0 ~]# ls -lR /etc/etcd/

/etc/etcd/:

-rw-r--r--. 1 etcd etcd 1752 11月 7 20:19 etcd.conf

drwxr-xr-x. 2 etcd etcd 86 11月 7 19:57 ssl

/etc/etcd/ssl:

-rw-r--r--. 1 etcd etcd 1403 11月 7 19:56 ca.pem

-rw-------. 1 etcd etcd 1675 11月 7 19:57 certificates-node-1-key.pem

-rw-r--r--. 1 etcd etcd 1452 11月 7 19:55 certificates-node-1.pem

Repeat steps 1 to 3 above on k8s-1 and k8s-2:

[root@k8s-1 ~]# cat /etc/etcd/etcd.conf

# [member]
ETCD_NAME=etcd-2
ETCD_DATA_DIR="/var/lib/etcd/default.etcd"
#ETCD_WAL_DIR=""
#ETCD_SNAPSHOT_COUNT="10000"
#ETCD_HEARTBEAT_INTERVAL="100"
#ETCD_ELECTION_TIMEOUT="1000"
ETCD_LISTEN_PEER_URLS="https://0.0.0.0:2380"
ETCD_LISTEN_CLIENT_URLS="https://0.0.0.0:2379"
#ETCD_MAX_SNAPSHOTS="5"
#ETCD_MAX_WALS="5"
#ETCD_CORS=""
#
#[cluster]
ETCD_INITIAL_ADVERTISE_PEER_URLS="https://etcd-2:2380"
# if you use different ETCD_NAME (e.g. test), set ETCD_INITIAL_CLUSTER value for this name, i.e. "test=http:// ..."
ETCD_INITIAL_CLUSTER="etcd-1=https://etcd-1:2380,etcd-2=https://etcd-2:2380,etcd-3=https://etcd-3:2380"
ETCD_INITIAL_CLUSTER_STATE="new"
ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"
ETCD_ADVERTISE_CLIENT_URLS="https://etcd-2:2379"
#ETCD_DISCOVERY=""
#ETCD_DISCOVERY_SRV=""
#ETCD_DISCOVERY_FALLBACK="proxy"
#ETCD_DISCOVERY_PROXY=""
#ETCD_STRICT_RECONFIG_CHECK="false"
#ETCD_AUTO_COMPACTION_RETENTION="0"
#
#[proxy]
#ETCD_PROXY="off"
#ETCD_PROXY_FAILURE_WAIT="5000"
#ETCD_PROXY_REFRESH_INTERVAL="30000"
#ETCD_PROXY_DIAL_TIMEOUT="1000"
#ETCD_PROXY_WRITE_TIMEOUT="5000"
#ETCD_PROXY_READ_TIMEOUT="0"
#
#[security]
ETCD_CERT_FILE="/etc/etcd/ssl/certificates-node-2.pem"
ETCD_KEY_FILE="/etc/etcd/ssl/certificates-node-2-key.pem"
ETCD_CLIENT_CERT_AUTH="true"
ETCD_TRUSTED_CA_FILE="/etc/etcd/ssl/ca.pem"
ETCD_AUTO_TLS="true"
ETCD_PEER_CERT_FILE="/etc/etcd/ssl/certificates-node-2.pem"
ETCD_PEER_KEY_FILE="/etc/etcd/ssl/certificates-node-2-key.pem"
#ETCD_PEER_CLIENT_CERT_AUTH="false"
ETCD_PEER_TRUSTED_CA_FILE="/etc/etcd/ssl/ca.pem"
ETCD_PEER_AUTO_TLS="true"
#
#[logging]
#ETCD_DEBUG="false"
# examples for -log-package-levels etcdserver=WARNING,security=DEBUG
#ETCD_LOG_PACKAGE_LEVELS=""
#
#[profiling]
#ETCD_ENABLE_PPROF="false"
#ETCD_METRICS="basic"

[root@k8s-1 ~]# ls -lR /etc/etcd/

/etc/etcd/:

总用量 4

-rw-r--r--. 1 etcd etcd 1752 11月 7 20:46 etcd.conf

drwxr-xr-x. 2 etcd etcd 86 11月 7 20:00 ssl

/etc/etcd/ssl:

总用量 12

-rw-r--r--. 1 etcd etcd 1403 11月 7 19:58 ca.pem

-rw-------. 1 etcd etcd 1675 11月 7 20:00 certificates-node-2-key.pem

-rw-r--r--. 1 etcd etcd 1452 11月 7 19:59 certificates-node-2.pem

[root@k8s-2 ~]# cat /etc/etcd/etcd.conf

# [member]
ETCD_NAME=etcd-3
ETCD_DATA_DIR="/var/lib/etcd/default.etcd"
#ETCD_WAL_DIR=""
#ETCD_SNAPSHOT_COUNT="10000"
#ETCD_HEARTBEAT_INTERVAL="100"
#ETCD_ELECTION_TIMEOUT="1000"
ETCD_LISTEN_PEER_URLS="https://0.0.0.0:2380"
ETCD_LISTEN_CLIENT_URLS="https://0.0.0.0:2379"
#ETCD_MAX_SNAPSHOTS="5"
#ETCD_MAX_WALS="5"
#ETCD_CORS=""
#
#[cluster]
ETCD_INITIAL_ADVERTISE_PEER_URLS="https://etcd-3:2380"
# if you use different ETCD_NAME (e.g. test), set ETCD_INITIAL_CLUSTER value for this name, i.e. "test=http:// ..."
ETCD_INITIAL_CLUSTER="etcd-1=https://etcd-1:2380,etcd-2=https://etcd-2:2380,etcd-3=https://etcd-3:2380"
ETCD_INITIAL_CLUSTER_STATE="new"
ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"
ETCD_ADVERTISE_CLIENT_URLS="https://etcd-3:2379"
#ETCD_DISCOVERY=""
#ETCD_DISCOVERY_SRV=""
#ETCD_DISCOVERY_FALLBACK="proxy"
#ETCD_DISCOVERY_PROXY=""
#ETCD_STRICT_RECONFIG_CHECK="false"
#ETCD_AUTO_COMPACTION_RETENTION="0"
#
#[proxy]
#ETCD_PROXY="off"
#ETCD_PROXY_FAILURE_WAIT="5000"
#ETCD_PROXY_REFRESH_INTERVAL="30000"
#ETCD_PROXY_DIAL_TIMEOUT="1000"
#ETCD_PROXY_WRITE_TIMEOUT="5000"
#ETCD_PROXY_READ_TIMEOUT="0"
#
#[security]
ETCD_CERT_FILE="/etc/etcd/ssl/certificates-node-3.pem"
ETCD_KEY_FILE="/etc/etcd/ssl/certificates-node-3-key.pem"
ETCD_CLIENT_CERT_AUTH="true"
ETCD_TRUSTED_CA_FILE="/etc/etcd/ssl/ca.pem"
ETCD_AUTO_TLS="true"
ETCD_PEER_CERT_FILE="/etc/etcd/ssl/certificates-node-3.pem"
ETCD_PEER_KEY_FILE="/etc/etcd/ssl/certificates-node-3-key.pem"
#ETCD_PEER_CLIENT_CERT_AUTH="false"
ETCD_PEER_TRUSTED_CA_FILE="/etc/etcd/ssl/ca.pem"
ETCD_PEER_AUTO_TLS="true"
#
#[logging]
#ETCD_DEBUG="false"
# examples for -log-package-levels etcdserver=WARNING,security=DEBUG
#ETCD_LOG_PACKAGE_LEVELS=""
#
#[profiling]
#ETCD_ENABLE_PPROF="false"
#ETCD_METRICS="basic"

[root@k8s-2 ~]# ls -lR /etc/etcd/

/etc/etcd/:

-rw-r--r--. 1 etcd etcd 1752 11月 7 20:50 etcd.conf

drwxr-xr-x. 2 etcd etcd 86 11月 7 20:04 ssl

/etc/etcd/ssl:

-rw-r--r--. 1 etcd etcd 1403 11月 7 20:03 ca.pem

-rw-------. 1 etcd etcd 1675 11月 7 20:04 certificates-node-3-key.pem

-rw-r--r--. 1 etcd etcd 1452 11月 7 20:03 certificates-node-3.pem
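The three etcd.conf files share the same ETCD_INITIAL_CLUSTER value and differ only in a handful of per-node settings. The sketch below derives both from the member names, assuming the https scheme, ports 2379 and 2380, and the certificate naming used above. `initial_cluster` and `node_settings` are hypothetical helper names.

```shell
# Build the ETCD_INITIAL_CLUSTER value from a list of member names.
# initial_cluster is a hypothetical helper; https and port 2380 match the files above.
initial_cluster() {
    result=""
    for name in "$@"; do
        # Append "name=https://name:2380", comma-separated after the first entry.
        result="${result:+$result,}$name=https://$name:2380"
    done
    echo "$result"
}

# Print the settings that differ between nodes, for member number $1.
node_settings() {
    n="$1"
    echo "ETCD_NAME=etcd-$n"
    echo "ETCD_INITIAL_ADVERTISE_PEER_URLS=\"https://etcd-$n:2380\""
    echo "ETCD_ADVERTISE_CLIENT_URLS=\"https://etcd-$n:2379\""
    echo "ETCD_CERT_FILE=\"/etc/etcd/ssl/certificates-node-$n.pem\""
    echo "ETCD_KEY_FILE=\"/etc/etcd/ssl/certificates-node-$n-key.pem\""
    echo "ETCD_PEER_CERT_FILE=\"/etc/etcd/ssl/certificates-node-$n.pem\""
    echo "ETCD_PEER_KEY_FILE=\"/etc/etcd/ssl/certificates-node-$n-key.pem\""
}

initial_cluster etcd-1 etcd-2 etcd-3
node_settings 2
```

Comparing the output of `node_settings` against each node's file is a cheap way to catch a copy-and-paste slip, such as a node still pointing at another node's certificate.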

Start the etcd service:

### Run on each of the three nodes ###

[root@k8s-0 ~]# systemctl start etcd

[root@k8s-1 ~]# systemctl start etcd

[root@k8s-2 ~]# systemctl start etcd

[root@k8s-0 ~]# systemctl status etcd

[root@k8s-1 ~]# systemctl status etcd

[root@k8s-2 ~]# systemctl status etcd

Check the health of the cluster:

### Generate the client certificate ###

[root@k8s-0 etcd]# pwd

/root/cfssl/etcd

[root@k8s-0 etcd]# cat certificates-client.json

{
    "CN": "etcd-client",
    "hosts": [
        "k8s-0",
        "k8s-1",
        "k8s-2",
        "k8s-3",
        "localhost",
        "127.0.0.1"
    ],
    "key": {
        "algo": "rsa",
        "size": 2048
    },
    "names": [
        {
            "C": "CN",
            "L": "Wuhan",
            "ST": "Hubei",
            "O": "Dameng",
            "OU": "CloudPlatform"
        }
    ]
}

[root@k8s-0 etcd]# cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=client -hostname="k8s-0,k8s-1,k8s-2,k8s-3,localhost,127.0.0.1" certificates-client.json | cfssljson -bare certificates-client

2017/11/07 21:22:52 [INFO] generate received request

2017/11/07 21:22:52 [INFO] received CSR

2017/11/07 21:22:52 [INFO] generating key: rsa-2048

2017/11/07 21:22:52 [INFO] encoded CSR

2017/11/07 21:22:52 [INFO] signed certificate with serial number 625476446160272733374126460300662233104566650826

[root@k8s-0 etcd]# ls -l

-rw-r--r--. 1 root root 833 11月 7 06:00 ca-config.json

-rw-r--r--. 1 root root 1106 11月 7 05:19 ca.csr

-rw-r--r--. 1 root root 390 11月 7 05:19 ca-csr.json

-rw-------. 1 root root 1675 11月 7 05:19 ca-key.pem

-rw-r--r--. 1 root root 1403 11月 7 05:19 ca.pem

-rw-r--r--. 1 root root 1110 11月 7 21:22 certificates-client.csr

-rw-r--r--. 1 root root 403 11月 7 21:20 certificates-client.json

-rw-------. 1 root root 1679 11月 7 21:22 certificates-client-key.pem

-rw-r--r--. 1 root root 1476 11月 7 21:22 certificates-client.pem

-rw-r--r--. 1 root root 1082 11月 7 06:23 certificates-node-1.csr

-rw-r--r--. 1 root root 353 11月 7 06:08 certificates-node-1.json

-rw-------. 1 root root 1675 11月 7 06:23 certificates-node-1-key.pem

-rw-r--r--. 1 root root 1452 11月 7 06:23 certificates-node-1.pem

-rw-r--r--. 1 root root 1082 11月 7 06:37 certificates-node-2.csr

-rw-r--r--. 1 root root 353 11月 7 06:36 certificates-node-2.json

-rw-------. 1 root root 1675 11月 7 06:37 certificates-node-2-key.pem

-rw-r--r--. 1 root root 1452 11月 7 06:37 certificates-node-2.pem

-rw-r--r--. 1 root root 1082 11月 7 06:38 certificates-node-3.csr

-rw-r--r--. 1 root root 353 11月 7 06:37 certificates-node-3.json

-rw-------. 1 root root 1679 11月 7 06:38 certificates-node-3-key.pem

-rw-r--r--. 1 root root 1452 11月 7 06:38 certificates-node-3.pem

[root@k8s-0 etcd]# etcdctl --ca-file=ca.pem --cert-file=certificates-client.pem --key-file=certificates-client-key.pem --endpoints=https://etcd-1:2379,https://etcd-2:2379,https://etcd-3:2379 cluster-health

member 1a147ce6336081c1 is healthy: got healthy result from https://etcd-1:2379

member ce10c39ce110475b is healthy: got healthy result from https://etcd-3:2379

member ed2c681b974a3802 is healthy: got healthy result from https://etcd-2:2379

cluster is healthy

The client certificate can later be used by kube-apiserver, flanneld and anything else that needs to connect to the etcd cluster.
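If the health check is to be used in a script, its text output can be inspected rather than read by eye. The sketch below works on a saved copy of the cluster-health output shown above. The helper names are made up, and the patterns assume exactly the wording of that output, so a different etcdctl version might need different patterns.

```shell
# Succeed only if the text on stdin ends with the line "cluster is healthy".
# cluster_is_healthy is a hypothetical helper name.
cluster_is_healthy() {
    [ "$(tail -n 1)" = "cluster is healthy" ]
}

# Count the members reported as healthy in the text on stdin.
healthy_members() {
    grep -c '^member .* is healthy:'
}

# Sample text copied from the cluster-health output above.
sample='member 1a147ce6336081c1 is healthy: got healthy result from https://etcd-1:2379
member ce10c39ce110475b is healthy: got healthy result from https://etcd-3:2379
member ed2c681b974a3802 is healthy: got healthy result from https://etcd-2:2379
cluster is healthy'

printf '%s\n' "$sample" | healthy_members
printf '%s\n' "$sample" | cluster_is_healthy && echo "ok"
```

In real use, the etcdctl command would be piped into these helpers in place of the sample text.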

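3. Deploying the Kubernetes Master

The Kubernetes services that run on the Master node are kube-apiserver, kube-controller-manager and kube-scheduler. For now, these three services need to be deployed on the same server.

The Master node enables TLS and TLS Bootstrapping, and the certificate interactions involved are quite complex. To make the relationships clear, we set up a separate CA for each kind of object. First, let us go through the CAs we may need:

- CA-ApiServer, used to issue the kube-apiserver certificate

- CA-Client, used to issue the kubectl certificate, the kube-proxy certificate and the kubelet auto-signing certificate

- CA-ServiceAccount, used to issue and verify the JWT bearer tokens of Service Accounts

The kubelet auto-signing certificate is issued by CA-Client, but it is not used directly as the identity certificate of the kubelet service. Because TLS Bootstrapping is enabled, the kubelet identity certificate is issued by Kubernetes (most likely by kube-controller-manager), and the kubelet auto-signing certificate acts as an intermediate CA that is responsible for issuing the actual kubelet identity certificates.

kubectl and kube-proxy obtain the kube-apiserver CA root certificate from their kubeconfig files and use it to verify the kube-apiserver identity certificate; they also present their own identity certificates to kube-apiserver.

Besides these CAs, we also need the ETCD CA root certificate and the ETCD certificates-client certificate in order to access the ETCD cluster.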

3.1 Creating the CA certificates

3.1.1 Creating the Kubernetes root certificate

[root@k8s-0 kubernetes]# pwd

/root/cfssl/kubernetes

### Generate the kube-apiserver CA root certificate ###

[root@k8s-0 kubernetes]# cat kubernetes-root-ca-csr.json

{

"CN": "Kubernetes-Cluster",

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"L": "Wuhan",

"ST": "Hubei",

"O": "Dameng",

"OU": "CloudPlatform"

}

]

}

[root@k8s-0 kubernetes]# cfssl gencert -initca kubernetes-root-ca-csr.json | cfssljson -bare kubernetes-root-ca

2017/11/10 19:20:36 [INFO] generating a new CA key and certificate from CSR

2017/11/10 19:20:36 [INFO] generate received request

2017/11/10 19:20:36 [INFO] received CSR

2017/11/10 19:20:36 [INFO] generating key: rsa-2048

2017/11/10 19:20:37 [INFO] encoded CSR

2017/11/10 19:20:37 [INFO] signed certificate with serial number 409390209095238242979736842166999327083180050042

[root@k8s-0 kubernetes]# ls -l

-rw-r--r--. 1 root root 1021 11月 10 19:20 kubernetes-root-ca.csr

-rw-r--r--. 1 root root 279 11月 10 18:04 kubernetes-root-ca-csr.json

-rw-------. 1 root root 1675 11月 10 19:20 kubernetes-root-ca-key.pem

-rw-r--r--. 1 root root 1395 11月 10 19:20 kubernetes-root-ca.pem

### Copy the ETCD signing policy file ###

[root@k8s-0 kubernetes]# cp ../etcd/ca-config.json .

[root@k8s-0 kubernetes]# ll

-rw-r--r--. 1 root root 833 11月 10 16:29 ca-config.json

-rw-r--r--. 1 root root 1021 11月 10 19:20 kubernetes-root-ca.csr

-rw-r--r--. 1 root root 279 11月 10 18:04 kubernetes-root-ca-csr.json

-rw-------. 1 root root 1675 11月 10 19:20 kubernetes-root-ca-key.pem

-rw-r--r--. 1 root root 1395 11月 10 19:20 kubernetes-root-ca.pem

3.1.2 Issuing the kubectl certificate from the root certificate

[root@k8s-0 kubernetes]# pwd

/root/cfssl/kubernetes

### Kubernetes extracts "O" from the certificate and uses it as the "Group" in its RBAC model ###

[root@k8s-0 kubernetes]# cat kubernetes-client-kubectl-csr.json

{

"CN": "kubectl-admin",

"hosts": [

"localhost",

"127.0.0.1",

"etcd-1"

],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"L": "Wuhan",

"ST": "Hubei",

"O": "system:masters",

"OU": "system"

}

]

}

[root@k8s-0 kubernetes]# cfssl gencert -ca=kubernetes-root-ca.pem -ca-key=kubernetes-root-ca-key.pem -config=ca-config.json -profile=client -hostname="k8s-0,localhost,127.0.0.1" kubernetes-client-kubectl-csr.json | cfssljson -bare kubernetes-client-kubectl

2017/11/10 19:28:53 [INFO] generate received request

2017/11/10 19:28:53 [INFO] received CSR

2017/11/10 19:28:53 [INFO] generating key: rsa-2048

2017/11/10 19:28:53 [INFO] encoded CSR

2017/11/10 19:28:53 [INFO] signed certificate with serial number 48283780181062525775523310004102739160256608492

[root@k8s-0 kubernetes]# ls -l

总用量 40

-rw-r--r--. 1 root root 833 11月 10 16:29 ca-config.json

-rw-r--r--. 1 root root 1086 11月 10 19:28 kubernetes-client-kubectl.csr

-rw-r--r--. 1 root root 356 11月 10 18:17 kubernetes-client-kubectl-csr.json

-rw-------. 1 root root 1675 11月 10 19:28 kubernetes-client-kubectl-key.pem

-rw-r--r--. 1 root root 1460 11月 10 19:28 kubernetes-client-kubectl.pem

-rw-r--r--. 1 root root 1021 11月 10 19:20 kubernetes-root-ca.csr

-rw-r--r--. 1 root root 279 11月 10 18:04 kubernetes-root-ca-csr.json

-rw-------. 1 root root 1675 11月 10 19:20 kubernetes-root-ca-key.pem

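-rw-r--r--. 1 root root 1395 11月 10 19:20 kubernetes-root-ca.pem

Under the Kubernetes RBAC model, Kubernetes extracts "CN" and "O" from the identity certificate presented by a client connecting to the cluster (here kubernetes-client-kubectl.pem) and uses them as the RBAC username and group respectively. The "system:masters" value used here is a built-in Kubernetes group that is bound to the built-in role "cluster-admin", and "cluster-admin" has permission to access every API in the cluster. In other words, anyone who accesses the Kubernetes cluster with this certificate can operate on all Kubernetes APIs. The "CN" value can be whatever you like; Kubernetes takes it as the username, and the permissions come from the group binding.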
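To make the CN and O mapping concrete, the sketch below pulls both fields out of a certificate subject written as a comma-separated string. The subject string is typed in by hand from the CSR values above, and its "K=V, K=V" layout is an assumption: the exact format printed by openssl x509 -noout -subject varies between OpenSSL versions. `subject_field` is a hypothetical helper name.

```shell
# Extract one field (e.g. CN or O) from a comma-separated subject string.
# subject_field is a hypothetical helper; the "K=V, K=V" layout is an assumption.
subject_field() {
    # One field per line, strip leading spaces, then print the value of the requested key.
    printf '%s\n' "$2" | tr ',' '\n' | sed 's/^ *//' | sed -n "s/^$1=//p"
}

# Subject assembled by hand from kubernetes-client-kubectl-csr.json.
subject='C=CN, ST=Hubei, L=Wuhan, O=system:masters, OU=system, CN=kubectl-admin'

echo "username: $(subject_field CN "$subject")"
echo "group:    $(subject_field O "$subject")"
```

Anchoring the match at the start of each field keeps "O" from matching "OU", and "CN" from matching the country field "C=CN".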

3.1.3 Issuing the apiserver certificate from the root certificate

[root@k8s-0 kubernetes]# pwd

/root/cfssl/kubernetes

[root@k8s-0 kubernetes]# cat kubernetes-server-csr.json

{

"CN": "Kubernetes-Server",

"hosts": [

"localhost",

"127.0.0.1",

"k8s-0",

"10.254.0.1",

"kubernetes",

"kubernetes.default",

"kubernetes.default.svc",

"kubernetes.default.svc.cluster",

"kubernetes.default.svc.cluster.local"

],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"L": "Wuhan",

"ST": "Hubei",

"O": "Dameng",

"OU": "CloudPlatform"

}

]

}

[root@k8s-0 kubernetes]# cfssl gencert -ca=kubernetes-root-ca.pem -ca-key=kubernetes-root-ca-key.pem -config=ca-config.json -profile=server kubernetes-server-csr.json | cfssljson -bare kubernetes-server

2017/11/10 19:42:40 [INFO] generate received request

2017/11/10 19:42:40 [INFO] received CSR

2017/11/10 19:42:40 [INFO] generating key: rsa-2048

2017/11/10 19:42:40 [INFO] encoded CSR

2017/11/10 19:42:40 [INFO] signed certificate with serial number 136243250541044739203078514726425397097204358889

2017/11/10 19:42:40 [WARNING] This certificate lacks a "hosts" field. This makes it unsuitable for

websites. For more information see the Baseline Requirements for the Issuance and Management

of Publicly-Trusted Certificates, v.1.1.6, from the CA/Browser Forum (https://cabforum.org);

specifically, section 10.2.3 ("Information Requirements").

[root@k8s-0 kubernetes]# ls -l

-rw-r--r--. 1 root root 833 11月 10 16:29 ca-config.json

-rw-r--r--. 1 root root 1086 11月 10 19:28 kubernetes-client-kubectl.csr

-rw-r--r--. 1 root root 356 11月 10 18:17 kubernetes-client-kubectl-csr.json

-rw-------. 1 root root 1675 11月 10 19:28 kubernetes-client-kubectl-key.pem

-rw-r--r--. 1 root root 1460 11月 10 19:28 kubernetes-client-kubectl.pem

-rw-r--r--. 1 root root 1021 11月 10 19:20 kubernetes-root-ca.csr

-rw-r--r--. 1 root root 279 11月 10 18:04 kubernetes-root-ca-csr.json

-rw-------. 1 root root 1675 11月 10 19:20 kubernetes-root-ca-key.pem

-rw-r--r--. 1 root root 1395 11月 10 19:20 kubernetes-root-ca.pem

-rw-r--r--. 1 root root 1277 11月 10 19:42 kubernetes-server.csr

-rw-r--r--. 1 root root 556 11月 10 19:40 kubernetes-server-csr.json

-rw-------. 1 root root 1675 11月 10 19:42 kubernetes-server-key.pem

-rw-r--r--. 1 root root 1651 11月 10 19:42 kubernetes-server.pem

3.2 Installing the kubectl command-line client

3.2.1 Installation

[root@k8s-0 ~]# pwd

/root

### Download ###

wget http://......

[root@k8s-0 ~]# ls -l

-rw-------. 1 root root 1510 10月 10 18:47 anaconda-ks.cfg

drwxr-xr-x. 3 root root 18 11月 7 05:05 cfssl

drwxrwxr-x. 3 chenlei chenlei 123 10月 7 01:10 etcd-v3.2.9-linux-amd64

-rw-r--r--. 1 root root 10176896 11月 6 19:18 etcd-v3.2.9-linux-amd64.tar.gz

-rw-r--r--. 1 root root 403881630 11月 8 07:20 kubernetes-server-linux-amd64.tar.gz

### Unpack ###

[root@k8s-0 ~]# tar -zxvf kubernetes-server-linux-amd64.tar.gz

[root@k8s-0 ~]# ls -l

-rw-------. 1 root root 1510 10月 10 18:47 anaconda-ks.cfg

drwxr-xr-x. 3 root root 18 11月 7 05:05 cfssl

drwxrwxr-x. 3 chenlei chenlei 123 10月 7 01:10 etcd-v3.2.9-linux-amd64

-rw-r--r--. 1 root root 10176896 11月 6 19:18 etcd-v3.2.9-linux-amd64.tar.gz

drwxr-x---. 4 root root 79 10月 12 07:38 kubernetes

-rw-r--r--. 1 root root 403881630 11月 8 07:24 kubernetes-server-linux-amd64.tar.gz

[root@k8s-0 ~]# cp kubernetes/server/bin/kubectl /usr/local/bin/

[root@k8s-0 ~]# kubectl version

Client Version: version.Info{Major:"1", Minor:"8", GitVersion:"v1.8.1", GitCommit:"f38e43b221d08850172a9a4ea785a86a3ffa3b3a", GitTreeState:"clean", BuildDate:"2017-10-11T23:27:35Z", GoVersion:"go1.8.3", Compiler:"gc", Platform:"linux/amd64"}

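The connection to the server localhost:8080 was refused - did you specify the right host or port?

3.2.2 Configuration

For kubectl to reach the apiserver, we need to configure the following for it:

- the service address of kube-apiserver

- the root certificate of kube-apiserver (kubernetes-root-ca.pem); since TLS is enabled, we need this root certificate to verify the server's identity

- kubectl's own certificate (kubernetes-client-kubectl.pem) and key (kubernetes-client-kubectl-key.pem)

All of this is defined in kubectl's kubeconfig file: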

[root@k8s-0 kubernetes]# pwd

/root/cfssl/kubernetes

### Create the certificate directory ###

[root@k8s-0 kubernetes]# mkdir -p /etc/kubernetes/ssl/

### Copy the certificate files that kubectl needs into the certificate directory ###

[root@k8s-0 kubernetes]# cp kubernetes-root-ca.pem kubernetes-client-kubectl.pem kubernetes-client-kubectl-key.pem /etc/kubernetes/ssl/

[root@k8s-0 kubernetes]# ls -l /etc/kubernetes/ssl/

-rw-------. 1 root root 1675 11月 10 19:46 kubernetes-client-kubectl-key.pem

-rw-r--r--. 1 root root 1460 11月 10 19:46 kubernetes-client-kubectl.pem

-rw-r--r--. 1 root root 1395 11月 10 19:46 kubernetes-root-ca.pem

### Configure the kubeconfig for kubectl ###

[root@k8s-0 kubernetes]# kubectl config set-cluster kubernetes-cluster --certificate-authority=/etc/kubernetes/ssl/kubernetes-root-ca.pem --embed-certs=true --server="https://k8s-0:6443"

Cluster "kubernetes-cluster" set.

[root@k8s-0 kubernetes]# kubectl config set-credentials kubernetes-kubectl --client-certificate=/etc/kubernetes/ssl/kubernetes-client-kubectl.pem --embed-certs=true --client-key=/etc/kubernetes/ssl/kubernetes-client-kubectl-key.pem

User "kubernetes-kubectl" set.

[root@k8s-0 kubernetes]# kubectl config set-context kubernetes-cluster-context --cluster=kubernetes-cluster --user=kubernetes-kubectl

Context "kubernetes-cluster-context" created.

[root@k8s-0 kubernetes]# kubectl config use-context kubernetes-cluster-context

Switched to context "kubernetes-cluster-context".

[root@k8s-0 kubernetes]# ls -l ~/.kube/

总用量 8

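-rw-------. 1 root root 6445 11月 8 21:37 config

If kubectl needs to connect to several different clusters, you can define multiple contexts and switch between them as needed.

set-cluster configures the cluster address and the CA root certificate. kubernetes-cluster is the name of the cluster, somewhat like an Oracle TNS alias.

set-credentials configures the client certificate and key, that is, the user that accesses the cluster. The user identity is carried in the certificate.

set-context combines a cluster with credentials to form the context used to access the cluster. Use use-context to switch between contexts.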
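As a quick sanity check, the active context can be read straight from a kubeconfig file. The sketch below is only an illustration: it builds a throwaway kubeconfig in a temporary directory (the `staging-*` names are hypothetical, not part of this deployment) and extracts the `current-context` field with awk. On a machine where kubectl is installed, `kubectl config current-context` reports the same value.

```shell
# Print the context that a kubeconfig file currently selects.
# Assumes the flat "current-context: NAME" line that kubectl writes.
current_context() {
  awk '$1 == "current-context:" { print $2 }' "$1"
}

# Throwaway kubeconfig with two contexts; the "staging-*" names are made up.
demo_dir=$(mktemp -d)
cat > "$demo_dir/config" <<'EOF'
apiVersion: v1
kind: Config
contexts:
- context:
    cluster: kubernetes-cluster
    user: kubernetes-kubectl
  name: kubernetes-cluster-context
- context:
    cluster: staging-cluster
    user: staging-user
  name: staging-context
current-context: kubernetes-cluster-context
EOF

active=$(current_context "$demo_dir/config")
echo "active context: $active"
rm -rf "$demo_dir"
```

Switching to another context would then be a matter of `kubectl config use-context` followed by the context name.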

3.2.3、Test

[root@k8s-0 kubernetes]# kubectl version

Client Version: version.Info{Major:"1", Minor:"8", GitVersion:"v1.8.1", GitCommit:"f38e43b221d08850172a9a4ea785a86a3ffa3b3a", GitTreeState:"clean", BuildDate:"2017-10-11T23:27:35Z", GoVersion:"go1.8.3", Compiler:"gc", Platform:"linux/amd64"}

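The connection to the server k8s-0:6443 was refused - did you specify the right host or port?

The address in the message, "connection to the server k8s-0:6443", is now the one set in the kubeconfig in the previous step; the connection is refused only because the service has not been started yet.

3.3、Install the kube-apiserver service

3.3.1、Installation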

[root@k8s-0 ~]# pwd

/root

[root@k8s-0 ~]# cp kubernetes/server/bin/kube-apiserver /usr/local/bin/

### Create the unit file ###

[root@k8s-0 ~]# cat /usr/lib/systemd/system/kube-apiserver.service

[Unit]

Description=Kubernetes API Server

Documentation=https://github.com/GoogleCloudPlatform/kubernetes

After=network.target

After=etcd.service

[Service]

EnvironmentFile=-/etc/kubernetes/config

EnvironmentFile=-/etc/kubernetes/apiserver

User=kube

ExecStart=/usr/local/bin/kube-apiserver \

$KUBE_LOGTOSTDERR \

$KUBE_LOG_LEVEL \

$KUBE_ETCD_SERVERS \

$KUBE_API_ADDRESS \

$KUBE_API_PORT \

$KUBELET_PORT \

$KUBE_ALLOW_PRIV \

$KUBE_SERVICE_ADDRESSES \

$KUBE_ADMISSION_CONTROL \

$KUBE_API_ARGS

Restart=on-failure

Type=notify

LimitNOFILE=65536

[Install]

WantedBy=multi-user.target

The following steps create the two EnvironmentFile files: /etc/kubernetes/config and /etc/kubernetes/apiserver. ExecStart points at the location where the kube-apiserver binary was installed.
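Before starting the service it can help to preview the command line that the unit file will produce from the two environment files. The sketch below is an approximation, not systemd itself: it sources the files in a subshell (systemd's EnvironmentFile parser is similar to, but not identical with, shell quoting) and echoes the variables in the order used by ExecStart. The function name `preview_cmdline` and the temporary files are assumptions made for the demo.

```shell
# Approximate the command line systemd would build for kube-apiserver.
# The body runs in a subshell so the sourced variables do not leak out.
preview_cmdline() (
  bin=$1
  shift
  for f in "$@"; do
    # Mirrors the "-" prefix of EnvironmentFile=-: missing files are skipped.
    [ -r "$f" ] && . "$f"
  done
  # Unquoted on purpose: like systemd's $VAR form, values split on whitespace.
  echo $bin $KUBE_LOGTOSTDERR $KUBE_LOG_LEVEL $KUBE_ETCD_SERVERS \
    $KUBE_API_ADDRESS $KUBE_API_PORT $KUBELET_PORT $KUBE_ALLOW_PRIV \
    $KUBE_SERVICE_ADDRESSES $KUBE_ADMISSION_CONTROL $KUBE_API_ARGS
)

# Demo with two small stand-in files instead of the real /etc/kubernetes ones.
demo_dir=$(mktemp -d)
cat > "$demo_dir/config" <<'EOF'
KUBE_LOGTOSTDERR="--logtostderr=true"
KUBE_LOG_LEVEL="--v=0"
EOF
cat > "$demo_dir/apiserver" <<'EOF'
KUBE_API_PORT="--insecure-port=8080 --secure-port=6443"
EOF

preview=$(preview_cmdline /usr/local/bin/kube-apiserver "$demo_dir/config" "$demo_dir/apiserver")
echo "$preview"
rm -rf "$demo_dir"
```

Pointed at the real /etc/kubernetes/config and /etc/kubernetes/apiserver, the same function gives a rough view of the flags the service will receive.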

3.3.2、Configuration

3.3.2.1、Prepare the certificates

[root@k8s-0 kubernetes]# pwd

/root/cfssl/kubernetes

### Copy kubernetes-server.pem and kubernetes-server-key.pem to the certificate directory ###

[root@k8s-0 kubernetes]# cp kubernetes-server.pem kubernetes-server-key.pem /etc/kubernetes/ssl/

### Copy kubernetes-root-ca-key.pem to the certificate directory ###

[root@k8s-0 kubernetes]# cp kubernetes-root-ca-key.pem /etc/kubernetes/ssl/

### Prepare the etcd client certificate; here we simply reuse the client certificate created for the etcd test earlier ###

[root@k8s-0 etcd]# pwd

/root/cfssl/etcd

[root@k8s-0 etcd]# cp ca.pem /etc/kubernetes/ssl/etcd-root-ca.pem

[root@k8s-0 etcd]# cp certificates-client.pem /etc/kubernetes/ssl/etcd-client-kubernetes.pem

[root@k8s-0 etcd]# cp certificates-client-key.pem /etc/kubernetes/ssl/etcd-client-kubernetes-key.pem

[root@k8s-0 etcd]# ls -l /etc/kubernetes/ssl/

-rw-------. 1 root root 1679 11月 10 19:58 etcd-client-kubernetes-key.pem

-rw-r--r--. 1 root root 1476 11月 10 19:58 etcd-client-kubernetes.pem

-rw-r--r--. 1 root root 1403 11月 10 19:57 etcd-root-ca.pem

-rw-------. 1 root root 1675 11月 10 19:46 kubernetes-client-kubectl-key.pem

-rw-r--r--. 1 root root 1460 11月 10 19:46 kubernetes-client-kubectl.pem

-rw-------. 1 root root 1675 11月 10 19:57 kubernetes-root-ca-key.pem

-rw-r--r--. 1 root root 1395 11月 10 19:46 kubernetes-root-ca.pem

-rw-------. 1 root root 1675 11月 10 19:56 kubernetes-server-key.pem

-rw-r--r--. 1 root root 1651 11月 10 19:56 kubernetes-server.pem

kubectl and kube-apiserver are installed on the same server here, so they share one certificate directory.

3.3.2.2、Prepare the TLS bootstrapping configuration

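TLS bootstrapping means that a client certificate is issued automatically by the cluster instead of being prepared by hand. At present it is supported only for kubelet: when a kubelet joins the cluster it submits a CSR to kube-apiserver, and once an administrator approves the request a certificate is issued for it automatically.

TLS bootstrapping relies on token authentication. kube-apiserver must first be configured with a token, and the user authenticated by that token must belong to the "system:bootstrappers" group. kubelet authenticates with the token, gains the permissions of "system:bootstrappers", and then submits its CSR. Each line of the token file has the format "token,username,userid,groups", for example:

02b50b05283e98dd0fd71db496ef01e8,kubelet-bootstrap,10001,"system:bootstrappers"

The token can be any string that carries 128 bits of randomness; generate it with a secure random number generator.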
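The token format can be illustrated with a small, self-contained sketch. It reads 16 bytes (128 bits) from /dev/urandom, prints them as 32 hex characters, and checks that the resulting line has the "token,username,userid,groups" shape. The helper names `gen_token` and `is_token_line` are hypothetical, and the 32-hex-character check reflects how the token is generated here, not a requirement imposed by Kubernetes.

```shell
# Produce 16 random bytes (128 bits) as 32 lowercase hex characters.
gen_token() {
  head -c 16 /dev/urandom | od -An -tx1 | tr -d ' \n'
}

# Hypothetical format check: token,user,uid plus an optional quoted group list.
is_token_line() {
  printf '%s\n' "$1" | grep -Eq '^[0-9a-f]{32},[^,]+,[^,]+(,"[^"]+")?$'
}

token=$(gen_token)
line="$token,kubelet-bootstrap,10001,\"system:bootstrappers\""

if is_token_line "$line"; then
  echo "well-formed: $line"
else
  echo "malformed: $line" >&2
fi
```

Using `od -tx1` prints the bytes one at a time, so the hex string does not depend on the byte order of the host.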

[root@k8s-0 kubernetes]# pwd

/etc/kubernetes

### Generate the token file; the file name ends in .csv ###

[root@k8s-0 kubernetes]# BOOTSTRAP_TOKEN=$(head -c 16 /dev/urandom | od -An -t x | tr -d ' ')

[root@k8s-0 kubernetes]# cat > token.csv << EOF

> $BOOTSTRAP_TOKEN,kubelet-bootstrap,10001,"system:bootstrappers"

> EOF

[root@k8s-0 kubernetes]# cat token.csv

4f2c8c078e69cfc8b1ab7d640bbcb6f2,kubelet-bootstrap,10001,"system:bootstrappers"

[root@k8s-0 kubernetes]# ls -l

drwxr-xr-x. 2 kube kube 4096 11月 10 19:58 ssl

-rw-r--r--. 1 root root 80 11月 10 20:00 token.csv

### Configure the kubelet bootstrapping kubeconfig ###

[root@k8s-0 kubernetes]# kubectl config set-cluster kubernetes-cluster --certificate-authority=/etc/kubernetes/ssl/kubernetes-root-ca.pem --embed-certs=true --server="https://k8s-0:6443" --kubeconfig=bootstrap.kubeconfig

Cluster "kubernetes-cluster" set.

### Make sure ${BOOTSTRAP_TOKEN} is still set and holds the same value as above ###

[root@k8s-0 kubernetes]# kubectl config set-credentials kubelet-bootstrap --token=${BOOTSTRAP_TOKEN} --kubeconfig=bootstrap.kubeconfig

User "kubelet-bootstrap" set.

[root@k8s-0 kubernetes]# kubectl config set-context kubelet-bootstrap --cluster=kubernetes-cluster --user=kubelet-bootstrap --kubeconfig=bootstrap.kubeconfig

Context "kubelet-bootstrap" created.

[root@k8s-0 kubernetes]# kubectl config use-context kubelet-bootstrap --kubeconfig=bootstrap.kubeconfig

Switched to context "kubelet-bootstrap".

[root@k8s-0 kubernetes]# ls -l

总用量 8

-rw-------. 1 root root 2265 11月 10 20:03 bootstrap.kubeconfig

drwxr-xr-x. 2 kube kube 4096 11月 10 19:58 ssl

-rw-r--r--. 1 root root 80 11月 10 20:00 token.csv

[root@k8s-0 kubernetes]# cat bootstrap.kubeconfig

apiVersion: v1

clusters:

- cluster:

certificate-authority-data: LS0tLS1CRUdJTiBDRVJUSUZJQ0FURS0tLS0tCk1JSUQyakNDQXNLZ0F3SUJBZ0lVUjdXeEh5NzdHc3h4S3R5QlJTd1VRMXgzeG5vd0RRWUpLb1pJaHZjTkFRRUwKQlFBd2N6RUxNQWtHQTFVRUJoTUNRMDR4RGpBTUJnTlZCQWdUQlVoMVltVnBNUTR3REFZRFZRUUhFd1ZYZFdoaApiakVQTUEwR0ExVUVDaE1HUkdGdFpXNW5NUll3RkFZRFZRUUxFdzFEYkc5MVpGQnNZWFJtYjNKdE1Sc3dHUVlEClZRUURFeEpMZFdKbGNtNWxkR1Z6TFVOc2RYTjBaWEl3SGhjTk1UY3hNVEV3TVRFeE5qQXdXaGNOTWpJeE1UQTUKTVRFeE5qQXdXakJ6TVFzd0NRWURWUVFHRXdKRFRqRU9NQXdHQTFVRUNCTUZTSFZpWldreERqQU1CZ05WQkFjVApCVmQxYUdGdU1ROHdEUVlEVlFRS0V3WkVZVzFsYm1jeEZqQVVCZ05WQkFzVERVTnNiM1ZrVUd4aGRHWnZjbTB4Ckd6QVpCZ05WQkFNVEVrdDFZbVZ5Ym1WMFpYTXRRMngxYzNSbGNqQ0NBU0l3RFFZSktvWklodmNOQVFFQkJRQUQKZ2dFUEFEQ0NBUW9DZ2dFQkFMQ3hXNWhQNjU4RFl3VGFCZ24xRWJIaTBNUnYyUGVCM0Y1b3M5bHZaeXZVVlZZKwpPNU9MR1plU3hZamdYcnVWRm9jTHhUTE1uUldtcmZNaUx6UG9FQlpZZ0czMXpqRzlJMG5kTm55RWVBM0ltYWdBCndsRThsZ2N5VVd6MVA3ZWx0V1FTOThnWm5QK05ieHhCT3Nick1YMytsM0ZKSDZTUXM4NFR3dVo1MVMvbi9kUWoKQ1ZFMkJvME14ZFhZZ3FESkc3MUl2WVRUcjdqWkd4d2VLZCtvWUsvTVc5ZFFjbDNraklkU1BOQUhGTW5lMVRmTwpvdlpwazF6SDRRdEJ3b3FNSHh6ZDhsUG4yd3ZzR3NRZVRkNzdqRTlsTGZjRDdOK3NyL0xiL2VLWHlQbTFPV1c3CmxLOUFtQjNxTmdBc0xZVUxGNTV1NWVQN2ZwS3pTdTU3V1Qzc3hac0NBd0VBQWFObU1HUXdEZ1lEVlIwUEFRSC8KQkFRREFnRUdNQklHQTFVZEV3RUIvd1FJTUFZQkFmOENBUUl3SFFZRFZSME9CQllFRkc4dWNWTk5tKzJtVS9CcApnbURuS2RBK3FMcGZNQjhHQTFVZEl3UVlNQmFBRkc4dWNWTk5tKzJtVS9CcGdtRG5LZEErcUxwZk1BMEdDU3FHClNJYjNEUUVCQ3dVQUE0SUJBUUJiS0pSUG1kSWpRS3E1MWNuS2lYNkV1TzJVakpVYmNYOFFFaWYzTDh2N09IVGcKcnVMY1FDUGRkbHdSNHdXUW9GYU9yZWJTbllwcmduV2EvTE4yN3lyWC9NOHNFeG83WHBEUDJoNUYybllNSFVIcAp2V1hKSUFoR3FjNjBqNmg5RHlDcGhrWVV5WUZoRkovNkVrVEJvZ241S2Z6OE1ITkV3dFdnVXdSS29aZHlGZStwCk1sL3RWOHJkYVo4eXpMY2sxejJrMXdXRDlmSWk2R2VCTG1JTnJ1ZDVVaS9QTGI2Z2YwOERZK0ZTODBIZDhZdnIKM2dTc2VCQURlOXVHMHhZZitHK1V1YUtvMHdNSHc2VGxkWGlqcVQxU0Eyc1M0ZWpGRjl0TldPaVdPcVpLakxjMgpPM2tIYllUOTVYZGQ5MHplUU1KTmR2RTU5WmdIdmpwY09sZlNEdDhOCi0tLS0tRU5EIENFUlRJRklDQVRFLS0tLS0K

server: https://k8s-0:6443

name: kubernetes-cluster

contexts:

- context:

cluster: kubernetes-cluster

user: kubelet-bootstrap

name: kubelet-bootstrap

current-context: kubelet-bootstrap

kind: Config

preferences: {}

users:

- name: kubelet-bootstrap

user:

as-user-extra: {}

token: 4f2c8c078e69cfc8b1ab7d640bbcb6f2

3.3.2.3、Configure config

[root@k8s-0 kubernetes]# cat /etc/kubernetes/config

###

# kubernetes system config

#

# The following values are used to configure various aspects of all

# kubernetes services, including

#

# kube-apiserver.service

# kube-controller-manager.service

# kube-scheduler.service

# kubelet.service

# kube-proxy.service

# logging to stderr means we get it in the systemd journal

KUBE_LOGTOSTDERR="--logtostderr=true"

# journal message level, 0 is debug

KUBE_LOG_LEVEL="--v=0"

# Should this cluster be allowed to run privileged docker containers

KUBE_ALLOW_PRIV="--allow-privileged=true"

# How the controller-manager, scheduler, and proxy find the apiserver

#KUBE_MASTER="--master=http://127.0.0.1:8080"

KUBE_MASTER="--master=http://k8s-0:8080"

### Configure the apiserver file ###

3.3.2.4、Configure apiserver

[root@k8s-0 kubernetes]# pwd

/etc/kubernetes

### Configure the audit log policy ###

[root@k8s-0 kubernetes]# cat audit-policy.yaml

# Log all requests at the Metadata level.

apiVersion: audit.k8s.io/v1beta1

kind: Policy

rules:

- level: Metadata

[root@k8s-0 ~]# mkdir -p /var/log/kube-audit

[root@k8s-0 ~]# chown kube:kube /var/log/kube-audit/

[root@k8s-0 ~]# ls -l /var/log

drwxr-xr-x. 2 kube kube 23 11月 8 23:57 kube-audit

[root@k8s-0 kubernetes]# cat apiserver

###

# kubernetes system config

#

# The following values are used to configure the kube-apiserver

#

# The address on the local server to listen to.

KUBE_API_ADDRESS="--advertise-address=192.168.119.180 --bind-address=192.168.119.180 --insecure-bind-address=0.0.0.0"

# The port on the local server to listen on.

KUBE_API_PORT="--insecure-port=8080 --secure-port=6443"

# Port minions listen on

# KUBELET_PORT="--kubelet-port=10250"

# Comma separated list of nodes in the etcd cluster

KUBE_ETCD_SERVERS="--etcd-servers=https://etcd-1:2379,https://etcd-2:2379,https://etcd-3:2379"

# Address range to use for services

KUBE_SERVICE_ADDRESSES="--service-cluster-ip-range=10.254.0.0/16"

# default admission control policies

KUBE_ADMISSION_CONTROL="--admission-control=NamespaceLifecycle,LimitRanger,SecurityContextDeny,ServiceAccount,ResourceQuota,NodeRestriction"

# Add your own!

KUBE_API_ARGS="--authorization-mode=RBAC,Node \

--anonymous-auth=false \

--kubelet-https=true \

--enable-bootstrap-token-auth \

--token-auth-file=/etc/kubernetes/token.csv \

--service-node-port-range=30000-32767 \

--tls-cert-file=/etc/kubernetes/ssl/kubernetes-server.pem \

--tls-private-key-file=/etc/kubernetes/ssl/kubernetes-server-key.pem \

--client-ca-file=/etc/kubernetes/ssl/kubernetes-root-ca.pem \

--service-account-key-file=/etc/kubernetes/ssl/kubernetes-root-ca.pem \

--etcd-quorum-read=true \

--storage-backend=etcd3 \

--etcd-cafile=/etc/kubernetes/ssl/etcd-root-ca.pem \

--etcd-certfile=/etc/kubernetes/ssl/etcd-client-kubernetes.pem \

--etcd-keyfile=/etc/kubernetes/ssl/etcd-client-kubernetes-key.pem \

--enable-swagger-ui=true \

--apiserver-count=3 \

--audit-policy-file=/etc/kubernetes/audit-policy.yaml \

--audit-log-maxage=30 \

--audit-log-maxbackup=3 \

--audit-log-maxsize=100 \

--audit-log-path=/var/log/kube-audit/audit.log \

--event-ttl=1h"

--service-account-key-file is used to verify the tokens that service accounts present when they call the Kubernetes API. Service account tokens are verified as JWTs.
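Because the service runs as the kube user, every file named in these flags must be readable by that user. The following sketch, under the assumption that paths always appear in the `--flag=/absolute/path` form used above, pulls the paths out of a flag string and reports the ones the current user cannot read. The function names and the demo files are hypothetical.

```shell
# List every absolute path that appears as a flag value (--flag=/path).
list_flag_paths() {
  printf '%s\n' "$1" | tr ' \\' '\n\n' | sed -n 's/^--[a-z-]*=\(\/.*\)$/\1/p'
}

# Print the paths from the flag string that the current user cannot read.
unreadable_paths() {
  list_flag_paths "$1" | while IFS= read -r p; do
    [ -r "$p" ] || printf '%s\n' "$p"
  done
}

# Demo: one file exists, the other does not.
demo_dir=$(mktemp -d)
touch "$demo_dir/kubernetes-server.pem"
args="--anonymous-auth=false --tls-cert-file=$demo_dir/kubernetes-server.pem --etcd-cafile=$demo_dir/etcd-root-ca.pem"

missing=$(unreadable_paths "$args")
echo "unreadable: $missing"
rm -rf "$demo_dir"
```

To check the real configuration, the function would have to run as the kube user with the value of KUBE_API_ARGS. A path such as the one given to --audit-log-path may legitimately be reported before the first start, since the log file does not exist yet.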

3.3.3、Start

### Create the kube user that runs the Kubernetes services ###

[root@k8s-0 ~]# useradd kube -d /var/lib/kube -s /sbin/nologin -c "Kubernetes user"

### Change the owner of the directory ###

[root@k8s-0 ~]# chown -Rf kube:kube /etc/kubernetes/

[root@k8s-0 kubernetes]# ls -lR /etc/kubernetes/

/etc/kubernetes/:

-rw-r--r--. 1 kube kube 2172 11月 10 20:06 apiserver

-rw-r--r--. 1 kube kube 113 11月 8 23:42 audit-policy.yaml

-rw-------. 1 kube kube 2265 11月 10 20:03 bootstrap.kubeconfig

-rw-r--r--. 1 kube kube 696 11月 8 23:23 config

drwxr-xr-x. 2 kube kube 4096 11月 10 19:58 ssl

-rw-r--r--. 1 kube kube 80 11月 10 20:00 token.csv

/etc/kubernetes/ssl:

-rw-------. 1 kube kube 1679 11月 10 19:58 etcd-client-kubernetes-key.pem

-rw-r--r--. 1 kube kube 1476 11月 10 19:58 etcd-client-kubernetes.pem

-rw-r--r--. 1 kube kube 1403 11月 10 19:57 etcd-root-ca.pem

-rw-------. 1 kube kube 1675 11月 10 19:46 kubernetes-client-kubectl-key.pem

-rw-r--r--. 1 kube kube 1460 11月 10 19:46 kubernetes-client-kubectl.pem

-rw-------. 1 kube kube 1675 11月 10 19:57 kubernetes-root-ca-key.pem

-rw-r--r--. 1 kube kube 1395 11月 10 19:46 kubernetes-root-ca.pem

-rw-------. 1 kube kube 1675 11月 10 19:56 kubernetes-server-key.pem

-rw-r--r--. 1 kube kube 1651 11月 10 19:56 kubernetes-server.pem

[root@k8s-0 ~]# chown kube:kube /usr/local/bin/kube-apiserver

[root@k8s-0 ~]# ls -l /usr/local/bin/kube-apiserver

-rwxr-x---. 1 kube kube 192911402 11月 8 21:59 /usr/local/bin/kube-apiserver

### Start the kube-apiserver service ###

[root@k8s-0 ~]# systemctl start kube-apiserver

[root@k8s-0 ~]# systemctl status kube-apiserver

● kube-apiserver.service - Kubernetes API Server

Loaded: loaded (/usr/lib/systemd/system/kube-apiserver.service; disabled; vendor preset: disabled)

Active: active (running) since 五 2017-11-10 20:13:42 CST; 9s ago

Docs: https://github.com/GoogleCloudPlatform/kubernetes

Main PID: 3837 (kube-apiserver)

CGroup: /system.slice/kube-apiserver.service

└─3837 /usr/local/bin/kube-apiserver --logtostderr=true --v=0 --etcd-servers=https://etcd-1:2379...

11月 10 20:13:42 k8s-0 kube-apiserver[3837]: I1110 20:13:42.932647 3837 controller_utils.go:1041] W...ller

11月 10 20:13:42 k8s-0 systemd[1]: Started Kubernetes API Server.

11月 10 20:13:42 k8s-0 kube-apiserver[3837]: I1110 20:13:42.944774 3837 customresource_discovery_co...ller

11月 10 20:13:42 k8s-0 kube-apiserver[3837]: I1110 20:13:42.944835 3837 naming_controller.go:277] S...ller

11月 10 20:13:43 k8s-0 kube-apiserver[3837]: I1110 20:13:43.031094 3837 cache.go:39] Caches are syn...ller

11月 10 20:13:43 k8s-0 kube-apiserver[3837]: I1110 20:13:43.034168 3837 controller_utils.go:1048] C...ller

11月 10 20:13:43 k8s-0 kube-apiserver[3837]: I1110 20:13:43.034204 3837 cache.go:39] Caches are syn...ller

11月 10 20:13:43 k8s-0 kube-apiserver[3837]: I1110 20:13:43.039514 3837 autoregister_controller.go:...ller

11月 10 20:13:43 k8s-0 kube-apiserver[3837]: I1110 20:13:43.039527 3837 cache.go:32] Waiting for ca...ller

11月 10 20:13:43 k8s-0 kube-apiserver[3837]: I1110 20:13:43.139810 3837 cache.go:39] Caches are syn...ller

Hint: Some lines were ellipsized, use -l to show in full.

3.4、Install the kube-controller-manager service

3.4.1、Installation

[root@k8s-0 ~]# pwd

/root

[root@k8s-0 ~]# cp kubernetes/server/bin/kube-controller-manager /usr/local/bin/

[root@k8s-0 ~]# kube-controller-manager version

I1109 00:08:25.254275 5281 controllermanager.go:109] Version: v1.8.1

W1109 00:08:25.254380 5281 client_config.go:529] Neither --kubeconfig nor --master was specified. Using the inClusterConfig. This might not work.

W1109 00:08:25.254390 5281 client_config.go:534] error creating inClusterConfig, falling back to default config: unable to load in-cluster configuration, KUBERNETES_SERVICE_HOST and KUBERNETES_SERVICE_PORT must be defined

invalid configuration: no configuration has been provided

### Create the unit file ###

[root@k8s-0 ~]# cat /usr/lib/systemd/system/kube-controller-manager.service

[Unit]

Description=Kubernetes Controller Manager

Documentation=https://github.com/GoogleCloudPlatform/kubernetes

[Service]

EnvironmentFile=-/etc/kubernetes/config

EnvironmentFile=-/etc/kubernetes/controller-manager

User=kube

ExecStart=/usr/local/bin/kube-controller-manager \

$KUBE_LOGTOSTDERR \

$KUBE_LOG_LEVEL \

$KUBE_MASTER \

$KUBE_CONTROLLER_MANAGER_ARGS

Restart=on-failure

LimitNOFILE=65536

[Install]

WantedBy=multi-user.target

3.4.2、Configure controller-manager

### Configure the /etc/kubernetes/controller-manager file ###

[root@k8s-0 kubernetes]# cat controller-manager

###

# The following values are used to configure the kubernetes controller-manager

# defaults from config and apiserver should be adequate

# Add your own!

KUBE_CONTROLLER_MANAGER_ARGS="--address=0.0.0.0 \

--service-cluster-ip-range=10.254.0.0/16 \

--cluster-name=kubernetes-cluster \

--cluster-signing-cert-file=/etc/kubernetes/ssl/kubernetes-root-ca.pem \

--cluster-signing-key-file=/etc/kubernetes/ssl/kubernetes-root-ca-key.pem \

--service-account-private-key-file=/etc/kubernetes/ssl/kubernetes-root-ca-key.pem \

--root-ca-file=/etc/kubernetes/ssl/kubernetes-root-ca.pem \

--leader-elect=true \

--node-monitor-grace-period=40s \

--node-monitor-period=5s \

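--pod-eviction-timeout=5m0s"

--service-account-private-key-file is the counterpart of the --service-account-key-file flag set on kube-apiserver earlier; it is the key used to sign the JWT tokens.

The --cluster-signing-* flags name the CA used to issue TLS bootstrapping certificates; that CA must be trusted through --client-ca-file. (In theory a --cluster-signing CA that is subordinate to the --client-ca-file CA should also be trusted, but in practice this did not work as expected: kubelet submitted its CSR successfully, yet the node could not join the cluster. This is still an open question.)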
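Since the signing key and the verifying certificate have to belong together, one common way to check a pair is to compare the public key embedded in the certificate with the public key derived from the private key. The sketch below assumes openssl is installed and demonstrates the check on a throwaway self-signed pair (the CN `demo-ca` and the file names are made up); it is not taken from the original setup.

```shell
# Succeeds when the certificate and the private key share one public key.
cert_matches_key() {
  cert_pub=$(openssl x509 -in "$1" -noout -pubkey) || return 1
  key_pub=$(openssl pkey -in "$2" -pubout) || return 1
  [ "$cert_pub" = "$key_pub" ]
}

# Demo on a throwaway self-signed pair; nothing under /etc is touched.
demo_dir=$(mktemp -d)
openssl req -x509 -newkey rsa:2048 -nodes -days 1 -subj "/CN=demo-ca" \
  -keyout "$demo_dir/ca-key.pem" -out "$demo_dir/ca.pem" 2>/dev/null

if cert_matches_key "$demo_dir/ca.pem" "$demo_dir/ca-key.pem"; then
  pair_status=match
else
  pair_status=mismatch
fi
echo "$pair_status"
rm -rf "$demo_dir"
```

Applied to this deployment, the two arguments would be /etc/kubernetes/ssl/kubernetes-root-ca.pem and /etc/kubernetes/ssl/kubernetes-root-ca-key.pem.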

3.4.3、Start

### Change the owner of the certificate files ###

[root@k8s-0 kubernetes]# chown -R kube:kube /etc/kubernetes/

[root@k8s-0 kubernetes]# ls -lR /etc/kubernetes/

/etc/kubernetes/:

-rw-r--r--. 1 kube kube 2172 11月 10 20:06 apiserver

-rw-r--r--. 1 kube kube 113 11月 8 23:42 audit-policy.yaml

-rw-------. 1 kube kube 2265 11月 10 20:03 bootstrap.kubeconfig

-rw-r--r--. 1 kube kube 696 11月 8 23:23 config

-rw-r--r--. 1 kube kube 995 11月 10 18:32 controller-manager

drwxr-xr-x. 2 kube kube 4096 11月 10 19:58 ssl

-rw-r--r--. 1 kube kube 80 11月 10 20:00 token.csv

/etc/kubernetes/ssl:

-rw-------. 1 kube kube 1679 11月 10 19:58 etcd-client-kubernetes-key.pem

-rw-r--r--. 1 kube kube 1476 11月 10 19:58 etcd-client-kubernetes.pem

-rw-r--r--. 1 kube kube 1403 11月 10 19:57 etcd-root-ca.pem

-rw-------. 1 kube kube 1675 11月 10 19:46 kubernetes-client-kubectl-key.pem

-rw-r--r--. 1 kube kube 1460 11月 10 19:46 kubernetes-client-kubectl.pem

-rw-------. 1 kube kube 1675 11月 10 19:57 kubernetes-root-ca-key.pem

-rw-r--r--. 1 kube kube 1395 11月 10 19:46 kubernetes-root-ca.pem

-rw-------. 1 kube kube 1675 11月 10 19:56 kubernetes-server-key.pem

-rw-r--r--. 1 kube kube 1651 11月 10 19:56 kubernetes-server.pem

[root@k8s-0 kubernetes]# chown kube:kube /usr/local/bin/kube-controller-manager

[root@k8s-0 kubernetes]# ls -l /usr/local/bin/kube-controller-manager

-rwxr-x---. 1 kube kube 128087389 11月 9 00:08 /usr/local/bin/kube-controller-manager

[root@k8s-0 kubernetes]# systemctl start kube-controller-manager

[root@k8s-0 kubernetes]# systemctl status kube-controller-manager

● kube-controller-manager.service - Kubernetes Controller Manager

Loaded: loaded (/usr/lib/systemd/system/kube-controller-manager.service; disabled; vendor preset: disabled)

Active: active (running) since 五 2017-11-10 20:14:12 CST; 1s ago

Docs: https://github.com/GoogleCloudPlatform/kubernetes

Main PID: 3851 (kube-controller)

CGroup: /system.slice/kube-controller-manager.service

└─3851 /usr/local/bin/kube-controller-manager --logtostderr=true --v=0 --master=http://k8s-0:808...

11月 10 20:14:13 k8s-0 kube-controller-manager[3851]: I1110 20:14:13.971671 3851 controller_utils.go...ler

11月 10 20:14:13 k8s-0 kube-controller-manager[3851]: I1110 20:14:13.992354 3851 controller_utils.go...ler

11月 10 20:14:14 k8s-0 kube-controller-manager[3851]: I1110 20:14:14.035607 3851 controller_utils.go...ler

11月 10 20:14:14 k8s-0 kube-controller-manager[3851]: I1110 20:14:14.041518 3851 controller_utils.go...ler

11月 10 20:14:14 k8s-0 kube-controller-manager[3851]: I1110 20:14:14.049764 3851 controller_utils.go...ler

11月 10 20:14:14 k8s-0 kube-controller-manager[3851]: I1110 20:14:14.049799 3851 garbagecollector.go...age

11月 10 20:14:14 k8s-0 kube-controller-manager[3851]: I1110 20:14:14.071155 3851 controller_utils.go...ler

11月 10 20:14:14 k8s-0 kube-controller-manager[3851]: I1110 20:14:14.071394 3851 controller_utils.go...ler

11月 10 20:14:14 k8s-0 kube-controller-manager[3851]: I1110 20:14:14.071563 3851 controller_utils.go...ler

11月 10 20:14:14 k8s-0 kube-controller-manager[3851]: I1110 20:14:14.092450 3851 controller_utils.go...ler

Hint: Some lines were ellipsized, use -l to show in full.

3.5、Install kube-scheduler

3.5.1、Installation

[root@k8s-0 ~]# pwd

/root

[root@k8s-0 ~]# cp kubernetes/server/bin/kube-scheduler /usr/local/bin/

### Create the unit file ###

[root@k8s-0 ~]# cat /usr/lib/systemd/system/kube-scheduler.service

[Unit]

Description=Kubernetes Scheduler Plugin

Documentation=https://github.com/GoogleCloudPlatform/kubernetes

[Service]

EnvironmentFile=-/etc/kubernetes/config

EnvironmentFile=-/etc/kubernetes/scheduler

User=kube

ExecStart=/usr/local/bin/kube-scheduler \

$KUBE_LOGTOSTDERR \

$KUBE_LOG_LEVEL \

$KUBE_MASTER \

$KUBE_SCHEDULER_ARGS

Restart=on-failure

LimitNOFILE=65536

[Install]

WantedBy=multi-user.target

3.5.2、Configuration

### Configure the /etc/kubernetes/scheduler file ###

[root@k8s-0 ~]# cat /etc/kubernetes/scheduler

###

# kubernetes scheduler config

# default config should be adequate

# Add your own!

KUBE_SCHEDULER_ARGS="--leader-elect=true --address=0.0.0.0"

3.5.3、Start

### Change the owner of the binary ###

[root@k8s-0 ~]# chown kube:kube /usr/local/bin/kube-scheduler

[root@k8s-0 ~]# ls -l /usr/local/bin/kube-scheduler

-rwxr-x---. 1 kube kube 53754721 11月 9 01:04 /usr/local/bin/kube-scheduler

### Start the service ###

[root@k8s-0 ~]# systemctl start kube-scheduler

[root@k8s-0 ~]# systemctl status kube-scheduler

● kube-scheduler.service - Kubernetes Scheduler Plugin

Loaded: loaded (/usr/lib/systemd/system/kube-scheduler.service; disabled; vendor preset: disabled)

Active: active (running) since 五 2017-11-10 20:14:24 CST; 4s ago

Docs: https://github.com/GoogleCloudPlatform/kubernetes

Main PID: 3862 (kube-scheduler)

CGroup: /system.slice/kube-scheduler.service

└─3862 /usr/local/bin/kube-scheduler --logtostderr=true --v=0 --master=http://k8s-0:8080 --leade...

11月 10 20:14:24 k8s-0 systemd[1]: Started Kubernetes Scheduler Plugin.

11月 10 20:14:24 k8s-0 systemd[1]: Starting Kubernetes Scheduler Plugin...

11月 10 20:14:24 k8s-0 kube-scheduler[3862]: I1110 20:14:24.904984 3862 controller_utils.go:1041] W...ller

11月 10 20:14:25 k8s-0 kube-scheduler[3862]: I1110 20:14:25.005451 3862 controller_utils.go:1048] C...ller

11月 10 20:14:25 k8s-0 kube-scheduler[3862]: I1110 20:14:25.005533 3862 leaderelection.go:174] atte...e...

11月 10 20:14:25 k8s-0 kube-scheduler[3862]: I1110 20:14:25.015298 3862 leaderelection.go:184] succ...uler

11月 10 20:14:25 k8s-0 kube-scheduler[3862]: I1110 20:14:25.015761 3862 event.go:218] Event(v1.Obje...ader

Hint: Some lines were ellipsized, use -l to show in full.

3.6、Check the status of the Master node services

[root@k8s-0 ~]# kubectl get cs

NAME STATUS MESSAGE ERROR

scheduler Healthy ok

controller-manager Healthy ok

etcd-1 Healthy {"health": "true"}

etcd-2 Healthy {"health": "true"}

etcd-0 Healthy {"health": "true"}

This output shows that the services on the Master node are deployed correctly and that kubectl can communicate with the cluster.
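The same check can be scripted. The sketch below reads `kubectl get cs` output from standard input and fails if any component reports a status other than Healthy. It runs here against a pasted sample so that it works without a cluster; the function name `all_healthy` is an assumption.

```shell
# Read `kubectl get cs` output on stdin; fail if any component is not Healthy.
all_healthy() {
  awk 'NR > 1 && $2 != "Healthy" { bad = 1; print "unhealthy: " $1 }
       END { exit bad + 0 }'
}

# Sample output in the shape shown above (header line plus one row each).
sample='NAME                 STATUS    MESSAGE              ERROR
scheduler            Healthy   ok
controller-manager   Healthy   ok
etcd-0               Healthy   {"health": "true"}'

if printf '%s\n' "$sample" | all_healthy; then
  cs_state=ok
else
  cs_state=degraded
fi
echo "$cs_state"
```

Against a live cluster the input would come from `kubectl get cs` piped into `all_healthy`.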

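Further reading:

1、What is JWT

2、Certificate authentication in Kubernetes

4、Deploy the Kubernetes Minion nodes

The Kubernetes services that run on a Minion node are kubelet and kube-proxy. Because TLS bootstrapping was configured earlier, the kubelet certificate is issued automatically by the Master, provided that kubelet passes token authentication and obtains the permissions of the "system:bootstrappers" group. kube-proxy is an ordinary client, so its client certificate has to be signed by the root CA that kube-apiserver trusts through --client-ca-file.

4.1、Install docker

### Install directly with yum; check the available versions first ###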

[root@k8s-1 ~]# yum list | grep docker-common

[root@k8s-1 ~]# yum list | grep docker

docker-client.x86_64 2:1.12.6-61.git85d7426.el7.centos

docker-common.x86_64 2:1.12.6-61.git85d7426.el7.centos

cockpit-docker.x86_64 151-1.el7.centos extras

docker.x86_64 2:1.12.6-61.git85d7426.el7.centos

docker-client-latest.x86_64 1.13.1-26.git1faa135.el7.centos

docker-devel.x86_64 1.3.2-4.el7.centos extras

docker-distribution.x86_64 2.6.2-1.git48294d9.el7 extras

docker-forward-journald.x86_64 1.10.3-44.el7.centos extras

docker-latest.x86_64 1.13.1-26.git1faa135.el7.centos

docker-latest-logrotate.x86_64 1.13.1-26.git1faa135.el7.centos

docker-latest-v1.10-migrator.x86_64 1.13.1-26.git1faa135.el7.centos

docker-logrotate.x86_64 2:1.12.6-61.git85d7426.el7.centos

docker-lvm-plugin.x86_64 2:1.12.6-61.git85d7426.el7.centos

docker-novolume-plugin.x86_64 2:1.12.6-61.git85d7426.el7.centos

docker-python.x86_64 1.4.0-115.el7 extras

docker-registry.x86_64 0.9.1-7.el7 extras

docker-unit-test.x86_64 2:1.12.6-61.git85d7426.el7.centos

docker-v1.10-migrator.x86_64 2:1.12.6-61.git85d7426.el7.centos

pcp-pmda-docker.x86_64 3.11.8-7.el7 base

python-docker-py.noarch 1.10.6-3.el7 extras

python-docker-pycreds.noarch 1.10.6-3.el7 extras

### Install docker ###

[root@k8s-1 ~]# yum install -y docker

### Start the docker service ###

[root@k8s-1 ~]# systemctl start docker

[root@k8s-1 ~]# systemctl status docker

● docker.service - Docker Application Container Engine

Loaded: loaded (/usr/lib/systemd/system/docker.service; disabled; vendor preset: disabled)

Active: active (running) since 四 2017-11-09 02:29:12 CST; 14s ago

Docs: http://docs.docker.com

Main PID: 4833 (dockerd-current)

CGroup: /system.slice/docker.service

├─4833 /usr/bin/dockerd-current --add-runtime docker-runc=/usr/libexec/docker/docker-runc-curren...

└─4837 /usr/bin/docker-containerd-current -l unix:///var/run/docker/libcontainerd/docker-contain...

11月 09 02:29:12 k8s-1 dockerd-current[4833]: time="2017-11-09T02:29:12.015481471+08:00" level=info ms...ase"

11月 09 02:29:12 k8s-1 dockerd-current[4833]: time="2017-11-09T02:29:12.049872681+08:00" level=info ms...nds"

11月 09 02:29:12 k8s-1 dockerd-current[4833]: time="2017-11-09T02:29:12.050724567+08:00" level=info ms...rt."

11月 09 02:29:12 k8s-1 dockerd-current[4833]: time="2017-11-09T02:29:12.068030608+08:00" level=info ms...lse"

11月 09 02:29:12 k8s-1 dockerd-current[4833]: time="2017-11-09T02:29:12.128054846+08:00" level=info ms...ess"

11月 09 02:29:12 k8s-1 dockerd-current[4833]: time="2017-11-09T02:29:12.189306705+08:00" level=info ms...ne."

11月 09 02:29:12 k8s-1 dockerd-current[4833]: time="2017-11-09T02:29:12.189594801+08:00" level=info ms...ion"

11月 09 02:29:12 k8s-1 dockerd-current[4833]: time="2017-11-09T02:29:12.189610061+08:00" level=info ms...12.6

11月 09 02:29:12 k8s-1 systemd[1]: Started Docker Application Container Engine.

11月 09 02:29:12 k8s-1 dockerd-current[4833]: time="2017-11-09T02:29:12.210430475+08:00" level=info ms...ock"

Hint: Some lines were ellipsized, use -l to show in full.

[root@k8s-1 ~]# ip address

1: lo: mtu 65536 qdisc noqueue state UNKNOWN qlen 1

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: ens33: mtu 1500 qdisc pfifo_fast state UP qlen 1000

link/ether 00:50:56:39:3d:4c brd ff:ff:ff:ff:ff:ff

inet 192.168.119.181/24 brd 192.168.119.255 scope global ens33

valid_lft forever preferred_lft forever

3: docker0: mtu 1500 qdisc noqueue state DOWN

link/ether 02:42:cd:de:a1:b0 brd ff:ff:ff:ff:ff:ff

inet 172.17.0.1/16 scope global docker0

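valid_lft forever preferred_lft forever

The docker info command shows the Cgroup Driver that Docker uses; the --cgroup-driver setting given to kubelet later has to match it.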
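A small sketch of that comparison, written so it runs without Docker: it extracts the Cgroup Driver value from `docker info` style text and compares it with the kubelet flag. The sample text is trimmed and illustrative (only the Cgroup Driver line matters), and the function name `cgroup_driver` is hypothetical.

```shell
# Extract the "Cgroup Driver" value from `docker info` output on stdin.
cgroup_driver() {
  awk -F': *' '/Cgroup Driver/ { print $2; exit }'
}

# Trimmed, illustrative sample of `docker info` output.
sample_info='Logging Driver: journald
Cgroup Driver: systemd'

driver=$(printf '%s\n' "$sample_info" | cgroup_driver)
kubelet_flag="--cgroup-driver=systemd"

if [ "--cgroup-driver=$driver" = "$kubelet_flag" ]; then
  echo "cgroup drivers match: $driver"
else
  echo "mismatch: docker uses $driver, kubelet has $kubelet_flag" >&2
fi
```

On a node, the input would come from `docker info` piped into `cgroup_driver`.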

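4.2、Install the kubelet service

The kubernetes-server-linux-amd64.tar.gz archive downloaded on the Master node earlier contains all the binaries needed to deploy a Kubernetes cluster.

4.2.1、Installation

### Copy the kubelet binary to the Minion node ###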

[root@k8s-0 ~]# scp kubernetes/server/bin/kubelet root@k8s-1:/usr/local/bin/

### Create the unit file ###

[root@k8s-1 ~]# cat /usr/lib/systemd/system/kubelet.service

[Unit]

Description=Kubernetes Kubelet Server

Documentation=https://github.com/GoogleCloudPlatform/kubernetes

After=docker.service

Requires=docker.service

[Service]

WorkingDirectory=/var/lib/kubelet

EnvironmentFile=-/etc/kubernetes/config

EnvironmentFile=-/etc/kubernetes/kubelet

ExecStart=/usr/local/bin/kubelet \

$KUBE_LOGTOSTDERR \

$KUBE_LOG_LEVEL \

$KUBELET_API_SERVER \

$KUBELET_ADDRESS \

$KUBELET_PORT \

$KUBELET_HOSTNAME \

$KUBE_ALLOW_PRIV \

$KUBELET_POD_INFRA_CONTAINER \

$KUBELET_ARGS

Restart=on-failure

[Install]

WantedBy=multi-user.target

4.2.2、Configuration

4.2.2.1、Prepare the bootstrap.kubeconfig file

### Create the directory ###

[root@k8s-1 ~]# mkdir -p /etc/kubernetes/ssl

### Copy bootstrap.kubeconfig from the Master node to the Minion node ###

[root@k8s-0 ~]# scp /etc/kubernetes/bootstrap.kubeconfig root@k8s-1:/etc/kubernetes/

[root@k8s-1 ~]# ls -l /etc/kubernetes/

-rw-------. 1 root root 2265 11月 10 20:26 bootstrap.kubeconfig

drwxr-xr-x. 2 root root 6 11月 9 02:34 ssl

4.2.2.2、Bind a role to kubelet-bootstrap, the user used for TLS bootstrapping

[root@k8s-0 ~]# kubectl create clusterrolebinding kubelet-bootstrap --clusterrole=system:node-bootstrapper --user=kubelet-bootstrap

clusterrolebinding "kubelet-bootstrap" created

kubelet-bootstrap is the user named in the TLS bootstrapping token file. If no role is bound to this user, it cannot submit a CSR, and kubelet may fail at startup with an error like this:

error: failed to run Kubelet: cannot create certificate signing request: certificatesigningrequests.certificates.k8s.io is forbidden: User "kubelet-bootstrap" cannot create certificatesigningrequests.certificates.k8s.io at the cluster scope

4.2.2.3、Configure config

[root@k8s-1 kubernetes]# pwd

/etc/kubernetes

[root@k8s-1 kubernetes]# cat config

###

# kubernetes system config

#

# The following values are used to configure various aspects of all

# kubernetes services, including

#

# kube-apiserver.service

# kube-controller-manager.service

# kube-scheduler.service

# kubelet.service

# kube-proxy.service

# logging to stderr means we get it in the systemd journal

KUBE_LOGTOSTDERR="--logtostderr=true"

# journal message level, 0 is debug

KUBE_LOG_LEVEL="--v=0"

# Should this cluster be allowed to run privileged docker containers

KUBE_ALLOW_PRIV="--allow-privileged=true"

# How the controller-manager, scheduler, and proxy find the apiserver

#KUBE_MASTER="--master=http://127.0.0.1:8080"

4.2.2.4、Configure kubelet

[root@k8s-1 kubernetes]# pwd

/etc/kubernetes

[root@k8s-1 kubernetes]# cat kubelet

###

# kubernetes kubelet (minion) config

# The address for the info server to serve on (set to 0.0.0.0 or "" for all interfaces)

KUBELET_ADDRESS="--address=192.168.119.181"

# The port for the info server to serve on

# KUBELET_PORT="--port=10250"

# You may leave this blank to use the actual hostname

KUBELET_HOSTNAME="--hostname-override=kubernetes-mision-1"

# location of the api-server

# KUBELET_API_SERVER="--api-servers=http://127.0.0.1:8080"

# pod infrastructure container

KUBELET_POD_INFRA_CONTAINER="--pod-infra-container-image=gcr.io/google_containers/pause-amd64:3.0"

# Add your own!

KUBELET_ARGS="--cgroup-driver=systemd \

--cluster-dns=10.254.0.2 \

--resolv-conf=/etc/resolv.conf \

--experimental-bootstrap-kubeconfig=/etc/kubernetes/bootstrap.kubeconfig \

--kubeconfig=/etc/kubernetes/kubelet.kubeconfig \

--fail-swap-on=false \

--cert-dir=/etc/kubernetes/ssl \

--cluster-domain=cluster.local. \

--hairpin-mode=promiscuous-bridge \

--serialize-image-pulls=false \

--runtime-cgroups=/systemd/system.slice \

--kubelet-cgroups=/systemd/system.slice"
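One relationship in this file is easy to get wrong: the --cluster-dns address is the cluster IP of the DNS service, so it has to fall inside the --service-cluster-ip-range given to kube-apiserver (10.254.0.0/16 here). A minimal sketch of that check in plain shell arithmetic follows; the helper names `ip_to_int` and `ip_in_cidr` are hypothetical, and only IPv4 is handled.

```shell
# Convert a dotted IPv4 address to an integer (the subshell keeps IFS local).
ip_to_int() (
  IFS=.
  set -- $1
  echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
)

# Succeed when address $1 lies inside CIDR block $2 (for example 10.254.0.0/16).
ip_in_cidr() (
  net=${2%/*}
  bits=${2#*/}
  mask=$(( (0xFFFFFFFF << (32 - bits)) & 0xFFFFFFFF ))
  [ $(( $(ip_to_int "$1") & mask )) -eq $(( $(ip_to_int "$net") & mask )) ]
)

if ip_in_cidr 10.254.0.2 10.254.0.0/16; then
  echo "cluster-dns is inside the service range"
else
  echo "cluster-dns is outside the service range" >&2
fi
```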

[root@k8s-1 kubernetes]# ls -l

-rw-------. 1 root root 2265 11月 10 20:26 bootstrap.kubeconfig

-rw-r--r--. 1 root root 655 11月 9 02:48 config

-rw-r--r--. 1 root root 1205 11月 10 15:40 kubelet

drwxr-xr-x. 2 root root 6 11月 10 17:46 ssl

4.2.3、Start

### Start the kubelet service ###

[root@k8s-1 kubernetes]# systemctl start kubelet

[root@k8s-1 kubernetes]# systemctl status kubelet

● kubelet.service - Kubernetes Kubelet Server

Loaded: loaded (/usr/lib/systemd/system/kubelet.service; disabled; vendor preset: disabled)

Active: active (running) since 五 2017-11-10 20:27:39 CST; 7s ago

Docs: https://github.com/GoogleCloudPlatform/kubernetes

Main PID: 3837 (kubelet)

CGroup: /system.slice/kubelet.service

└─3837 /usr/local/bin/kubelet --logtostderr=true --v=0 --address=192.168.119.181 --hostname-over...

11月 10 20:27:39 k8s-1 systemd[1]: Started Kubernetes Kubelet Server.

11月 10 20:27:39 k8s-1 systemd[1]: Starting Kubernetes Kubelet Server...

11月 10 20:27:39 k8s-1 kubelet[3837]: I1110 20:27:39.543227 3837 feature_gate.go:156] feature gates: map[]

11月 10 20:27:39 k8s-1 kubelet[3837]: I1110 20:27:39.543479 3837 controller.go:114] kubelet config...oller

11月 10 20:27:39 k8s-1 kubelet[3837]: I1110 20:27:39.543483 3837 controller.go:118] kubelet config...flags

11月 10 20:27:40 k8s-1 kubelet[3837]: I1110 20:27:40.064289 3837 client.go:75] Connecting to docke....sock

11月 10 20:27:40 k8s-1 kubelet[3837]: I1110 20:27:40.064322 3837 client.go:95] Start docker client...=2m0s

11月 10 20:27:40 k8s-1 kubelet[3837]: W1110 20:27:40.067246 3837 cni.go:196] Unable to update cni ...net.d

11月 10 20:27:40 k8s-1 kubelet[3837]: I1110 20:27:40.076866 3837 feature_gate.go:156] feature gates: map[]

11月 10 20:27:40 k8s-1 kubelet[3837]: W1110 20:27:40.076980 3837 server.go:289] --cloud-provider=a...citly

Hint: Some lines were ellipsized, use -l to show in full.

4.2.4、Query and approve the CSR

[root@k8s-0 kubernetes]# kubectl get csr

NAME AGE REQUESTOR CONDITION

node-csr-ZWf_4Q3ljwNqPJ_KKxLnS2s5ddOa5nCw9b0o0FLbPro 10s kubelet-bootstrap Pending

[root@k8s-0 kubernetes]# kubectl certificate approve node-csr-ZWf_4Q3ljwNqPJ_KKxLnS2s5ddOa5nCw9b0o0FLbPro

certificatesigningrequest "node-csr-ZWf_4Q3ljwNqPJ_KKxLnS2s5ddOa5nCw9b0o0FLbPro" approved

[root@k8s-0 kubernetes]# kubectl get csr

NAME AGE REQUESTOR CONDITION

node-csr-ZWf_4Q3ljwNqPJ_KKxLnS2s5ddOa5nCw9b0o0FLbPro 2m kubelet-bootstrap Approved,Issued
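When several nodes bootstrap at the same time, approving each request by name becomes tedious. The following is a minimal sketch, not part of the original procedure: it filters the `kubectl get csr` table for rows whose CONDITION column is `Pending` and prints their names. The function name `pending_csrs` is hypothetical, and the column layout is assumed to match the v1.8.1 output shown above.

```shell
#!/bin/sh
# pending_csrs: read `kubectl get csr` output on stdin and print the NAME of
# every request whose last column (CONDITION) is exactly "Pending".
# Assumption: the layout is NAME AGE REQUESTOR CONDITION, as shown above.
pending_csrs() {
    awk '$NF == "Pending" { print $1 }'
}

# Typical use on the master (not executed here; review the list first):
#   kubectl get csr | pending_csrs | xargs -r kubectl certificate approve
```

Because the filter only reads text, it can be checked against saved output before it is wired to `kubectl certificate approve`.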

[root@k8s-0 kubernetes]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

k8s-1 Ready <none> 6s v1.8.1

4.3、Install the kube-proxy service

4.3.1、Install

[root@k8s-0 ~]# pwd

/root

[root@k8s-0 ~]# scp kubernetes/server/bin/kube-proxy root@k8s-1:/usr/local/bin/

[root@k8s-1 kubernetes]# cat /usr/lib/systemd/system/kube-proxy.service

[Unit]

Description=Kubernetes Kube-Proxy Server

Documentation=https://github.com/GoogleCloudPlatform/kubernetes

After=network.target

[Service]

EnvironmentFile=-/etc/kubernetes/config

EnvironmentFile=-/etc/kubernetes/proxy

ExecStart=/usr/local/bin/kube-proxy \

$KUBE_LOGTOSTDERR \

$KUBE_LOG_LEVEL \

$KUBE_MASTER \

$KUBE_PROXY_ARGS

Restart=on-failure

LimitNOFILE=65536

[Install]

WantedBy=multi-user.target

4.3.2、Configuration

4.3.2.1、Issue the kube-proxy certificate

[root@k8s-0 kubernetes]# pwd

/root/cfssl/kubernetes

[root@k8s-0 kubernetes]# cat kubernetes-client-proxy-csr.json

{

"CN": "system:kube-proxy",

"hosts": [

"localhost",

"127.0.0.1",

"k8s-1",

"k8s-2",

"k8s-3"

],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"L": "Wuhan",

"ST": "Hubei",

"O": "Dameng",

"OU": "system"

}

]

}
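The API server takes the client's user name from the certificate CN, so the CN value in this file is what identifies kube-proxy to the cluster. If more client certificates are needed later, the CSR file can be generated from a template rather than copied by hand. The sketch below is only an illustration: `write_client_csr` is a hypothetical helper, the `names` values are copied from this document, and leaving `hosts` empty by default is an assumption (a client certificate normally needs no subject alternative names).

```shell
#!/bin/sh
# write_client_csr CN OUTFILE [HOSTS_JSON]
# Writes a cfssl CSR request for a client certificate.
# HOSTS_JSON is an optional raw JSON array; it defaults to [] (assumption:
# client certificates do not need subject alternative names).
write_client_csr() {
    cn=$1
    out=$2
    hosts=${3:-[]}
    cat > "$out" <<EOF
{
  "CN": "$cn",
  "hosts": $hosts,
  "key": { "algo": "rsa", "size": 2048 },
  "names": [
    { "C": "CN", "L": "Wuhan", "ST": "Hubei", "O": "Dameng", "OU": "system" }
  ]
}
EOF
}

# Example: write_client_csr system:kube-proxy kubernetes-client-proxy-csr.json
```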

[root@k8s-0 kubernetes]# cfssl gencert -ca=kubernetes-root-ca.pem -ca-key=kubernetes-root-ca-key.pem -config=ca-config.json -profile=client kubernetes-client-proxy-csr.json | cfssljson -bare kubernetes-client-proxy

2017/11/10 20:50:27 [INFO] generate received request

2017/11/10 20:50:27 [INFO] received CSR

2017/11/10 20:50:27 [INFO] generating key: rsa-2048

2017/11/10 20:50:28 [INFO] encoded CSR

2017/11/10 20:50:28 [INFO] signed certificate with serial number 319926141282708642124995329378952678953790336868

2017/11/10 20:50:28 [WARNING] This certificate lacks a "hosts" field. This makes it unsuitable for

websites. For more information see the Baseline Requirements for the Issuance and Management

of Publicly-Trusted Certificates, v.1.1.6, from the CA/Browser Forum (https://cabforum.org);

specifically, section 10.2.3 ("Information Requirements").

[root@k8s-0 kubernetes]# ls -l

-rw-r--r--. 1 root root 833 11月 10 16:29 ca-config.json

-rw-r--r--. 1 root root 1086 11月 10 19:28 kubernetes-client-kubectl.csr

-rw-r--r--. 1 root root 356 11月 10 18:17 kubernetes-client-kubectl-csr.json

-rw-------. 1 root root 1675 11月 10 19:28 kubernetes-client-kubectl-key.pem

-rw-r--r--. 1 root root 1460 11月 10 19:28 kubernetes-client-kubectl.pem

-rw-r--r--. 1 root root 1074 11月 10 20:50 kubernetes-client-proxy.csr

-rw-r--r--. 1 root root 347 11月 10 20:48 kubernetes-client-proxy-csr.json

-rw-------. 1 root root 1679 11月 10 20:50 kubernetes-client-proxy-key.pem

-rw-r--r--. 1 root root 1452 11月 10 20:50 kubernetes-client-proxy.pem

-rw-r--r--. 1 root root 1021 11月 10 19:20 kubernetes-root-ca.csr

-rw-r--r--. 1 root root 279 11月 10 18:04 kubernetes-root-ca-csr.json

-rw-------. 1 root root 1675 11月 10 19:20 kubernetes-root-ca-key.pem

-rw-r--r--. 1 root root 1395 11月 10 19:20 kubernetes-root-ca.pem

-rw-r--r--. 1 root root 1277 11月 10 19:42 kubernetes-server.csr

-rw-r--r--. 1 root root 556 11月 10 19:40 kubernetes-server-csr.json

-rw-------. 1 root root 1675 11月 10 19:42 kubernetes-server-key.pem

-rw-r--r--. 1 root root 1651 11月 10 19:42 kubernetes-server.pem

[root@k8s-0 kubernetes]# cp kubernetes-client-proxy.pem kubernetes-client-proxy-key.pem /etc/kubernetes/ssl/

[root@k8s-0 kubernetes]# ls -l /etc/kubernetes/ssl/

-rw-------. 1 kube kube 1679 11月 10 19:58 etcd-client-kubernetes-key.pem

-rw-r--r--. 1 kube kube 1476 11月 10 19:58 etcd-client-kubernetes.pem

-rw-r--r--. 1 kube kube 1403 11月 10 19:57 etcd-root-ca.pem

-rw-------. 1 kube kube 1675 11月 10 19:46 kubernetes-client-kubectl-key.pem

-rw-r--r--. 1 kube kube 1460 11月 10 19:46 kubernetes-client-kubectl.pem

-rw-------. 1 root root 1679 11月 10 20:51 kubernetes-client-proxy-key.pem

-rw-r--r--. 1 root root 1452 11月 10 20:51 kubernetes-client-proxy.pem

-rw-------. 1 kube kube 1675 11月 10 19:57 kubernetes-root-ca-key.pem

-rw-r--r--. 1 kube kube 1395 11月 10 19:46 kubernetes-root-ca.pem

-rw-------. 1 kube kube 1675 11月 10 19:56 kubernetes-server-key.pem

-rw-r--r--. 1 kube kube 1651 11月 10 19:56 kubernetes-server.pem

4.3.2.2、Configure the kube-proxy.kubeconfig file

[root@k8s-0 kubernetes]# pwd

/etc/kubernetes

[root@k8s-0 kubernetes]# kubectl config set-cluster kubernetes-cluster --certificate-authority=/etc/kubernetes/ssl/kubernetes-root-ca.pem --embed-certs=true --server="https://k8s-0:6443" --kubeconfig=kube-proxy.kubeconfig

Cluster "kubernetes-cluster" set.

[root@k8s-0 kubernetes]# kubectl config set-credentials kube-proxy --client-certificate=/etc/kubernetes/ssl/kubernetes-client-proxy.pem --client-key=/etc/kubernetes/ssl/kubernetes-client-proxy-key.pem --embed-certs=true --kubeconfig=kube-proxy.kubeconfig

User "kube-proxy" set.

[root@k8s-0 kubernetes]# kubectl config set-context kube-proxy --cluster=kubernetes-cluster --user=kube-proxy --kubeconfig=kube-proxy.kubeconfig

Context "kube-proxy" created.

[root@k8s-0 kubernetes]# kubectl config use-context kube-proxy --kubeconfig=kube-proxy.kubeconfig

Switched to context "kube-proxy".
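The four `kubectl config` steps above follow the same pattern for any client identity, so they can be wrapped in one function. This is a sketch under stated assumptions rather than the author's method: `make_kubeconfig` is a hypothetical name, and the defaults for the CA path, cluster name and API server URL are simply the values used in this document.

```shell
#!/bin/sh
# make_kubeconfig USER CERT KEY OUTFILE
# Runs the same four `kubectl config` steps as above for an arbitrary client.
# The defaults below are the values used in this document; override them
# through the environment if your cluster differs.
CA_FILE=${CA_FILE:-/etc/kubernetes/ssl/kubernetes-root-ca.pem}
CLUSTER=${CLUSTER:-kubernetes-cluster}
SERVER=${SERVER:-https://k8s-0:6443}

make_kubeconfig() {
    user=$1
    cert=$2
    key=$3
    out=$4
    # Each step must succeed before the next one runs.
    kubectl config set-cluster "$CLUSTER" --certificate-authority="$CA_FILE" \
        --embed-certs=true --server="$SERVER" --kubeconfig="$out" &&
    kubectl config set-credentials "$user" --client-certificate="$cert" \
        --client-key="$key" --embed-certs=true --kubeconfig="$out" &&
    kubectl config set-context "$user" --cluster="$CLUSTER" --user="$user" \
        --kubeconfig="$out" &&
    kubectl config use-context "$user" --kubeconfig="$out"
}

# Example (equivalent to the commands above):
#   make_kubeconfig kube-proxy \
#       /etc/kubernetes/ssl/kubernetes-client-proxy.pem \
#       /etc/kubernetes/ssl/kubernetes-client-proxy-key.pem \
#       kube-proxy.kubeconfig
```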

[root@k8s-0 kubernetes]# ls -l

-rw-r--r--. 1 kube kube 2172 11月 10 20:06 apiserver

-rw-r--r--. 1 kube kube 113 11月 8 23:42 audit-policy.yaml

-rw-------. 1 kube kube 2265 11月 10 20:03 bootstrap.kubeconfig

-rw-r--r--. 1 kube kube 696 11月 8 23:23 config

-rw-r--r--. 1 kube kube 991 11月 10 20:35 controller-manager

-rw-------. 1 root root 6421 11月 10 20:57 kube-proxy.kubeconfig

-rw-r--r--. 1 kube kube 148 11月 9 01:07 scheduler

drwxr-xr-x. 2 kube kube 4096 11月 10 20:51 ssl

-rw-r--r--. 1 kube kube 80 11月 10 20:00 token.csv

[root@k8s-0 kubernetes]# scp kube-proxy.kubeconfig root@k8s-1:/etc/kubernetes/

4.3.2.3、Configure proxy

[root@k8s-1 kubernetes]# pwd

/etc/kubernetes

[root@k8s-1 kubernetes]# cat proxy

###

# kubernetes proxy config

# default config should be adequate

# Add your own!

KUBE_PROXY_ARGS="--bind-address=192.168.119.181 \

--hostname-override=kubernetes-mision-1 \

--kubeconfig=/etc/kubernetes/kube-proxy.kubeconfig \

--cluster-cidr=10.254.0.0/16"

4.3.2.4、Start kube-proxy

[root@k8s-1 kubernetes]# systemctl start kube-proxy

[root@k8s-1 kubernetes]# systemctl status kube-proxy

● kube-proxy.service - Kubernetes Kube-Proxy Server

Loaded: loaded (/usr/lib/systemd/system/kube-proxy.service; disabled; vendor preset: disabled)

Active: active (running) since 五 2017-11-10 21:07:08 CST; 6s ago

Docs: https://github.com/GoogleCloudPlatform/kubernetes

Main PID: 4334 (kube-proxy)

CGroup: /system.slice/kube-proxy.service

‣ 4334 /usr/local/bin/kube-proxy --logtostderr=true --v=0 --bind-address=192.168.119.181 --hostn...

11月 10 21:07:08 k8s-1 kube-proxy[4334]: I1110 21:07:08.159055 4334 conntrack.go:98] Set sysctl 'ne...1072

11月 10 21:07:08 k8s-1 kube-proxy[4334]: I1110 21:07:08.159083 4334 conntrack.go:52] Setting nf_con...1072

11月 10 21:07:08 k8s-1 kube-proxy[4334]: I1110 21:07:08.159107 4334 conntrack.go:98] Set sysctl 'ne...6400

11月 10 21:07:08 k8s-1 kube-proxy[4334]: I1110 21:07:08.159120 4334 conntrack.go:98] Set sysctl 'ne...3600

11月 10 21:07:08 k8s-1 kube-proxy[4334]: I1110 21:07:08.159958 4334 config.go:202] Starting service...ller

11月 10 21:07:08 k8s-1 kube-proxy[4334]: I1110 21:07:08.159968 4334 controller_utils.go:1041] Waiti...ller

11月 10 21:07:08 k8s-1 kube-proxy[4334]: I1110 21:07:08.160091 4334 config.go:102] Starting endpoin...ller

11月 10 21:07:08 k8s-1 kube-proxy[4334]: I1110 21:07:08.160101 4334 controller_utils.go:1041] Waiti...ller

11月 10 21:07:08 k8s-1 kube-proxy[4334]: I1110 21:07:08.260670 4334 controller_utils.go:1048] Cache...ller

11月 10 21:07:08 k8s-1 kube-proxy[4334]: I1110 21:07:08.260862 4334 controller_utils.go:1048] Cache...ller

Hint: Some lines were ellipsized, use -l to show in full.

4.4、Other mission nodes (adding nodes to an existing cluster)

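Deployment: repeat steps 4.1 through 4.3, except that the certificates and kubeconfig files do not need to be generated again; the existing ones are copied from the master.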
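Since every additional node receives the same binaries and kubeconfig files, the copy steps can be scripted. The sketch below is an illustration only: `add_node` and `run` are hypothetical helpers, it assumes it is run as root from `/root` on k8s-0 with SSH access to the target node, and it creates the remote directory over `ssh` instead of logging in to the node. With `DRY_RUN=1` (the default here) it only prints the commands.

```shell
#!/bin/sh
# add_node HOST: copy the kubelet/kube-proxy binaries and the existing
# kubeconfig files from the master to HOST.
# With DRY_RUN=1 (default) commands are printed instead of executed.
DRY_RUN=${DRY_RUN:-1}

run() {
    if [ "$DRY_RUN" = 1 ]; then
        echo "$*"
    else
        "$@"
    fi
}

add_node() {
    host=$1
    run ssh "root@$host" mkdir -p /etc/kubernetes/ssl &&
    run scp kubernetes/server/bin/kubelet kubernetes/server/bin/kube-proxy \
        "root@$host:/usr/local/bin/" &&
    run scp /etc/kubernetes/bootstrap.kubeconfig /etc/kubernetes/kube-proxy.kubeconfig \
        "root@$host:/etc/kubernetes/"
}

# Print the plan for the two remaining nodes of this cluster.
for h in k8s-2 k8s-3; do
    add_node "$h"
done
```

Run it once in dry-run mode to review the plan, then set `DRY_RUN=0` to execute. The per-node `kubelet` and `proxy` environment files still have to be edited by hand, because their address and hostname values differ on each node.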

4.4.1、Install

[root@k8s-2 ~]# yum install -y docker

[root@k8s-0 ~]# scp kubernetes/server/bin/kubelet root@k8s-2:/usr/local/bin/

[root@k8s-0 ~]# scp kubernetes/server/bin/kube-proxy root@k8s-2:/usr/local/bin/

[root@k8s-2 ~]# mkdir -p /etc/kubernetes/ssl/

[root@k8s-0 ~]# scp /etc/kubernetes/bootstrap.kubeconfig root@k8s-2:/etc/kubernetes/

[root@k8s-0 ~]# scp /etc/kubernetes/kube-proxy.kubeconfig root@k8s-2:/etc/kubernetes/

[root@k8s-2 ~]# ls -lR /etc/kubernetes/

/etc/kubernetes/:

-rw-------. 1 root root 2265 11月 12 11:49 bootstrap.kubeconfig

-rw-------. 1 root root 6453 11月 12 11:50 kube-proxy.kubeconfig

drwxr-xr-x. 2 root root 6 11月 12 11:49 ssl

/etc/kubernetes/ssl:

[root@k8s-2 ~]# cat /usr/lib/systemd/system/kubelet.service

[Unit]

Description=Kubernetes Kubelet Server

Documentation=https://github.com/GoogleCloudPlatform/kubernetes

After=docker.service

Requires=docker.service

[Service]

WorkingDirectory=/var/lib/kubelet

EnvironmentFile=-/etc/kubernetes/config

EnvironmentFile=-/etc/kubernetes/kubelet

ExecStart=/usr/local/bin/kubelet \

$KUBE_LOGTOSTDERR \

$KUBE_LOG_LEVEL \

$KUBELET_API_SERVER \

$KUBELET_ADDRESS \

$KUBELET_PORT \

$KUBELET_HOSTNAME \

$KUBE_ALLOW_PRIV \

$KUBELET_POD_INFRA_CONTAINER \

$KUBELET_ARGS

Restart=on-failure

[Install]

WantedBy=multi-user.target

[root@k8s-2 ~]# cat /usr/lib/systemd/system/kube-proxy.service

[Unit]

Description=Kubernetes Kube-Proxy Server

Documentation=https://github.com/GoogleCloudPlatform/kubernetes

After=network.target

[Service]