PyTorch 1.0 Basics Tutorial (4): Training a Classifier

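- About the data

- Training an image classifier

- 1. Load and normalize CIFAR10

- 2. Define a convolutional neural network

- 3. Define a loss function and optimizer

- 4. Train the network

- 5. Test the network on the test set

- Training on GPU

- Training on multiple GPUs

- More

- References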

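About the data

So far, we have seen how to define a neural network, compute the loss, and update the network's weights.

Next, we need to think about the data.

Generally, when you have to deal with image, text, audio, or video data, you can use standard Python packages to load the data into a NumPy array. You can then convert that array into a torch.*Tensor.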

- For images, packages such as Pillow and OpenCV are useful.

- For audio, packages such as scipy and librosa.

- For text, either raw Python or Cython based loading, or NLTK and SpaCy.
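As a minimal sketch of this workflow, the snippet below builds a fake 32x32 RGB image as a NumPy array and converts it to a torch tensor in the channel-first layout that PyTorch expects. The random array is an assumption for illustration; it stands in for an image that would normally come from Pillow or OpenCV.

```python
import numpy as np
import torch

# Hypothetical stand-in for an image loaded with Pillow or OpenCV:
# height x width x channels, 8-bit unsigned integers.
rng = np.random.default_rng(0)
image_hwc = rng.integers(0, 256, size=(32, 32, 3), dtype=np.uint8)

# torch.from_numpy shares memory with the array; permute reorders to C x H x W.
tensor_chw = torch.from_numpy(image_hwc).permute(2, 0, 1)

# Convert to float and scale to [0, 1], similar to what transforms.ToTensor does.
tensor_float = tensor_chw.float() / 255.0

print(tensor_float.shape)  # torch.Size([3, 32, 32])
print(tensor_float.dtype)  # torch.float32
```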

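For vision specifically, there is the torchvision package. It provides data loaders for common datasets such as Imagenet, CIFAR10, and MNIST, as well as data transformers for images, namely torchvision.datasets and torch.utils.data.DataLoader.

These packages are a great convenience and spare us from writing boilerplate code over and over.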

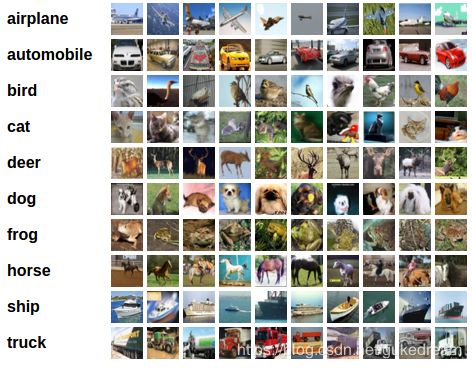

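In this tutorial, we use the CIFAR10 dataset. It has the classes: 'airplane', 'automobile', 'bird', 'cat', 'deer', 'dog', 'frog', 'horse', 'ship', 'truck'. The images are of size 3x32x32, i.e. 3-channel color images of 32x32 pixels.

Training an image classifier

We will do the following steps in order:

1. Load and normalize the CIFAR10 training and test datasets using torchvision

2. Define a convolutional neural network

3. Define a loss function

4. Train the network on the training data

5. Test the network on the test data

1. Load and normalize CIFAR10

Using torchvision, loading CIFAR10 is very easy.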

import torch
import torchvision
import torchvision.transforms as transforms

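The torchvision datasets return PILImage images. transforms.ToTensor converts them to tensors with values in the range [0, 1], and transforms.Normalize then maps each channel to the range [-1, 1].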

transform = transforms.Compose(
    [transforms.ToTensor(),
     transforms.Normalize((0.5, 0.5, 0.5), (0.5, 0.5, 0.5))])

trainset = torchvision.datasets.CIFAR10(root='./data', train=True,
                                        download=True, transform=transform)
trainloader = torch.utils.data.DataLoader(trainset, batch_size=4,
                                          shuffle=True, num_workers=2)

testset = torchvision.datasets.CIFAR10(root='./data', train=False,
                                       download=True, transform=transform)
# The test set is not shuffled, so evaluation sees the images in a fixed order.
testloader = torch.utils.data.DataLoader(testset, batch_size=4,
                                         shuffle=False, num_workers=2)

classes = ('plane', 'car', 'bird', 'cat',
           'deer', 'dog', 'frog', 'horse', 'ship', 'truck')

Out:

Downloading https://www.cs.toronto.edu/~kriz/cifar-10-python.tar.gz to ./data/cifar-10-python.tar.gz

Files already downloaded and verified
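The normalization in the transform above can be checked by hand. With a mean and standard deviation of 0.5 per channel, the formula (x - mean) / std maps 0 to -1 and 1 to 1. The helper names below are illustrative, not part of torchvision; the inverse is the same `img / 2 + 0.5` step used in the tutorial's imshow helper.

```python
def normalize(value, mean=0.5, std=0.5):
    """Per-channel formula applied by transforms.Normalize: (x - mean) / std."""
    return (value - mean) / std

def unnormalize(value, mean=0.5, std=0.5):
    """Inverse of the normalization: x * std + mean."""
    return value * std + mean

for pixel in (0.0, 0.25, 0.5, 1.0):
    scaled = normalize(pixel)
    print(pixel, "->", scaled, "->", unnormalize(scaled))
# 0.0 -> -1.0 -> 0.0
# 0.25 -> -0.5 -> 0.25
# 0.5 -> 0.0 -> 0.5
# 1.0 -> 1.0 -> 1.0
```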

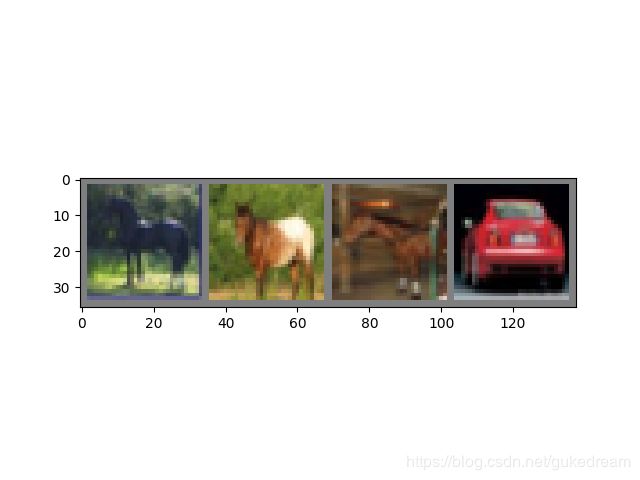

Let us look at some of the training images we just loaded.

import matplotlib.pyplot as plt
import numpy as np

# function to show an image
def imshow(img):
    img = img / 2 + 0.5  # unnormalize
    npimg = img.numpy()
    plt.imshow(np.transpose(npimg, (1, 2, 0)))  # C x H x W -> H x W x C
    plt.show()

# get some random training images
dataiter = iter(trainloader)
images, labels = next(dataiter)

# show images
imshow(torchvision.utils.make_grid(images))

# print labels
print(' '.join('%5s' % classes[labels[j]] for j in range(4)))

Out:

horse horse horse car
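To see what a single batch from a DataLoader looks like without downloading anything, here is a small sketch on synthetic data. The random tensors and the dataset size of 12 are assumptions made for illustration; with the real trainloader the shapes of `images` and `labels` would be the same.

```python
import torch
from torch.utils.data import DataLoader, TensorDataset

# Synthetic stand-in for CIFAR10 (an assumption, so that no download is needed):
# 12 random "images" of shape 3x32x32 with labels in 0..9.
fake_images = torch.randn(12, 3, 32, 32)
fake_labels = torch.arange(12) % 10
dataset = TensorDataset(fake_images, fake_labels)

# Same batch size as in the tutorial; num_workers=0 keeps loading in the main process.
loader = DataLoader(dataset, batch_size=4, shuffle=True, num_workers=0)

images, labels = next(iter(loader))
print(images.shape)  # torch.Size([4, 3, 32, 32])
print(labels.shape)  # torch.Size([4])
print(len(loader))   # 3 batches, since 12 / 4 = 3
```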

2. Define a convolutional neural network

import torch.nn as nn
import torch.nn.functional as F

class Net(nn.Module):
    def __init__(self):
        super(Net, self).__init__()
        self.conv1 = nn.Conv2d(3, 6, 5)    # 3 input channels, 6 output channels, 5x5 kernel
        self.pool = nn.MaxPool2d(2, 2)     # 2x2 max pooling with stride 2
        self.conv2 = nn.Conv2d(6, 16, 5)
        self.fc1 = nn.Linear(16 * 5 * 5, 120)
        self.fc2 = nn.Linear(120, 84)
        self.fc3 = nn.Linear(84, 10)       # one output per class

    def forward(self, x):
        x = self.pool(F.relu(self.conv1(x)))
        x = self.pool(F.relu(self.conv2(x)))
        x = x.view(-1, 16 * 5 * 5)         # flatten to (batch, 400)
        x = F.relu(self.fc1(x))
        x = F.relu(self.fc2(x))
        x = self.fc3(x)
        return x

net = Net()
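The input size 16*5*5 of the first fully connected layer follows from the image size and the layers before it. The sketch below traces the spatial size through the network using the standard output-size formula for convolution and pooling without dilation; the helper name `conv_out` is made up for this example.

```python
def conv_out(size, kernel, stride=1, padding=0):
    """Spatial output size of a convolution or pooling layer (no dilation)."""
    return (size + 2 * padding - kernel) // stride + 1

size = 32                                   # CIFAR10 images are 32x32
size = conv_out(size, kernel=5)             # conv1: 32 -> 28
size = conv_out(size, kernel=2, stride=2)   # pool:  28 -> 14
size = conv_out(size, kernel=5)             # conv2: 14 -> 10
size = conv_out(size, kernel=2, stride=2)   # pool:  10 -> 5

channels = 16                               # output channels of conv2
print(size, channels * size * size)         # 5 400
```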

3. Define a loss function and optimizer

We use the cross-entropy loss, which is standard for classification, together with SGD with momentum.

import torch.optim as optim

criterion = nn.CrossEntropyLoss()
optimizer = optim.SGD(net.parameters(), lr=0.001, momentum=0.9)
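For intuition about the loss values that appear during training, here is a plain-Python sketch of the cross-entropy of a single sample, computed from raw scores as the negative log of the softmax probability of the correct class. It is an illustration of the formula, not the library's implementation; by default nn.CrossEntropyLoss additionally averages this quantity over the mini-batch.

```python
import math

def cross_entropy(logits, target):
    """Cross-entropy of one sample: -log(softmax(logits)[target])."""
    # Subtract the maximum before exponentiating for numerical stability.
    shift = max(logits)
    log_sum_exp = shift + math.log(sum(math.exp(z - shift) for z in logits))
    return log_sum_exp - logits[target]

# Ten equal scores: the network is maximally unsure, so the loss is ln(10).
uniform = [0.0] * 10
print(round(cross_entropy(uniform, target=3), 3))  # 2.303

# A confident, correct prediction gives a loss close to zero.
confident = [0.0] * 10
confident[3] = 10.0
print(cross_entropy(confident, target=3) < 0.01)   # True
```

An untrained 10-class network therefore starts with a loss near 2.3, and training should push it below that value.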

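4. Train the network

This is where things start to get interesting. We simply loop over the data iterator, feed the inputs to the network, and optimize.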

for epoch in range(2):  # loop over the dataset twice

    running_loss = 0.0
    for i, data in enumerate(trainloader, 0):
        # get the inputs
        inputs, labels = data

        # zero the parameter gradients
        optimizer.zero_grad()

        # forward + backward + optimize
        outputs = net(inputs)
        loss = criterion(outputs, labels)
        loss.backward()
        optimizer.step()

        # print statistics
        running_loss += loss.item()
        if i % 2000 == 1999:  # print every 2000 mini-batches
            print('[%d, %5d] loss: %.3f' %
                  (epoch + 1, i + 1, running_loss / 2000))
            running_loss = 0.0

print('Finished Training')

Out:

[1, 2000] loss: 2.182

[1, 4000] loss: 1.819

[1, 6000] loss: 1.648

[1, 8000] loss: 1.569

[1, 10000] loss: 1.511

[1, 12000] loss: 1.473

[2, 2000] loss: 1.414

[2, 4000] loss: 1.365

[2, 6000] loss: 1.358

[2, 8000] loss: 1.322

[2, 10000] loss: 1.298

[2, 12000] loss: 1.282

Finished Training
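The log above has six lines per epoch, which follows from simple arithmetic on the dataset size and batch size. The sketch below reproduces the logging schedule in plain Python; the variable names are chosen for this example.

```python
train_images = 50000   # size of the CIFAR10 training set
batch_size = 4
log_every = 2000

batches_per_epoch = train_images // batch_size
print(batches_per_epoch)  # 12500

# Iterations (1-based) at which the condition i % 2000 == 1999 fires.
logged = [i + 1 for i in range(batches_per_epoch) if i % log_every == log_every - 1]
print(logged)  # [2000, 4000, 6000, 8000, 10000, 12000]

# The last 500 batches of each epoch are trained on but never reported.
print(batches_per_epoch - logged[-1])  # 500
```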

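5. Test the network on the test set

We have trained the network for 2 passes over the training dataset. But we need to check whether the network has actually learned to classify.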

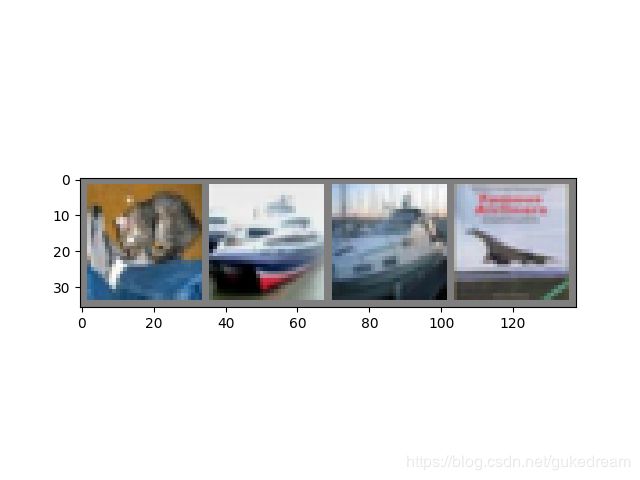

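Next, we use the freshly trained network to predict the class of some input images and compare the predictions with the ground truth. We also display the results.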

dataiter = iter(testloader)
images, labels = next(dataiter)

# print images
imshow(torchvision.utils.make_grid(images))
print('GroundTruth:', ' '.join('%5s' % classes[labels[j]] for j in range(4)))

Out:

GroundTruth: cat ship ship plane

Okay, now let us see what the network predicts for the images above:

outputs = net(images)

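The outputs are energies for the 10 classes. The higher the energy for a class, the more strongly the network believes that the image belongs to that class. So, let us get the index of the highest energy: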

_, predicted = torch.max(outputs, 1)

print('Predicted:', ' '.join('%5s' % classes[predicted[j]] for j in range(4)))

Out:

Predicted: dog ship ship plane
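As a small illustration of the call used above, torch.max with a dimension argument returns both the maximum values and their indices. The scores below are made up, with 3 classes instead of 10 to keep the example short.

```python
import torch

# Hypothetical scores for 2 images over 3 classes (the tutorial has 10 classes).
outputs = torch.tensor([[0.1, 2.5, -1.0],
                        [1.7, 0.3, 0.9]])

values, predicted = torch.max(outputs, 1)  # reduce over dimension 1 (the classes)
print(values)     # tensor([2.5000, 1.7000])
print(predicted)  # tensor([1, 0])

# Softmax turns the scores into probabilities without changing the arg-max.
probs = torch.softmax(outputs, dim=1)
print(torch.equal(probs.argmax(dim=1), predicted))  # True
```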

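The predictions look fairly good: three of the four images are classified correctly.

Next, let us look at how the network performs on the whole test set.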

correct = 0
total = 0
with torch.no_grad():
    for data in testloader:
        images, labels = data
        outputs = net(images)
        _, predicted = torch.max(outputs.data, 1)
        total += labels.size(0)
        correct += (predicted == labels).sum().item()

print('Accuracy of the network on the 10000 test images: %d %%' % (
    100 * correct / total))

Out:

Accuracy of the network on the 10000 test images: 55 %

That is much better than chance: 55% versus 10% for picking one of the 10 classes at random. The network does seem to have learned something.
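The accuracy computation can also be wrapped in a reusable helper. The following is a sketch under assumptions: the function name `accuracy`, the rigged linear model, and the random inputs are all hypothetical and only serve to make the example self-contained and checkable.

```python
import torch
import torch.nn as nn
from torch.utils.data import DataLoader, TensorDataset

def accuracy(model, loader):
    """Fraction of samples in `loader` that `model` classifies correctly."""
    model.eval()                      # evaluation mode (matters for dropout / batch norm)
    correct, total = 0, 0
    with torch.no_grad():             # no gradients are needed for evaluation
        for inputs, labels in loader:
            predicted = model(inputs).argmax(dim=1)
            correct += (predicted == labels).sum().item()
            total += labels.size(0)
    return correct / total

# Hypothetical check: a linear "model" rigged to always predict class 0.
model = nn.Linear(8, 3)
with torch.no_grad():
    model.weight.zero_()
    model.bias.copy_(torch.tensor([1.0, 0.0, 0.0]))

labels = torch.tensor([0, 0, 1, 2])
loader = DataLoader(TensorDataset(torch.randn(4, 8), labels), batch_size=2)
print(accuracy(model, loader))  # 0.5
```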

So, which classes did the network do well on, and which did it do poorly on?

class_correct = list(0. for i in range(10))
class_total = list(0. for i in range(10))
with torch.no_grad():
    for data in testloader:
        images, labels = data
        outputs = net(images)
        _, predicted = torch.max(outputs, 1)
        c = (predicted == labels).squeeze()
        for i in range(4):  # 4 is the batch size
            label = labels[i]
            class_correct[label] += c[i].item()
            class_total[label] += 1

for i in range(10):
    print('Accuracy of %5s : %2d %%' % (
        classes[i], 100 * class_correct[i] / class_total[i]))

Out:

Accuracy of plane : 70 %

Accuracy of car : 70 %

Accuracy of bird : 28 %

Accuracy of cat : 25 %

Accuracy of deer : 37 %

Accuracy of dog : 60 %

Accuracy of frog : 66 %

Accuracy of horse : 62 %

Accuracy of ship : 69 %

Accuracy of truck : 61 %
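The per-class bookkeeping above iterates over a fixed batch size of 4. As a sketch that does not depend on the batch size, the same counting can be written over plain lists of labels and predictions. The function name and the toy data are assumptions for illustration.

```python
def per_class_accuracy(labels, predictions, num_classes):
    """Return a list with the fraction of correct predictions for each class."""
    correct = [0] * num_classes
    total = [0] * num_classes
    for label, prediction in zip(labels, predictions):
        total[label] += 1
        if label == prediction:
            correct[label] += 1
    # Report None for a class without samples instead of dividing by zero.
    return [c / t if t else None for c, t in zip(correct, total)]

# Hypothetical labels and predictions for a 3-class problem.
labels = [0, 0, 1, 1, 1, 2]
predictions = [0, 1, 1, 1, 0, 2]
print(per_class_accuracy(labels, predictions, num_classes=3))
# [0.5, 0.6666666666666666, 1.0]
```

With tensors from a DataLoader, the lists could be obtained with `labels.tolist()` and `predicted.tolist()`.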

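Training on GPU

Just as you transfer a Tensor onto the GPU, you can transfer the neural network onto the GPU.

First, let us define our device as the first visible CUDA device, if CUDA is available: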

device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")

# Assuming that we are on a CUDA machine, this should print a CUDA device:
print(device)

Out:

cuda:0

The following method recursively goes over all modules and converts their parameters and buffers to CUDA tensors:

net.to(device)

Remember that at every iteration you also have to send the inputs and the labels to the GPU:

inputs, labels = inputs.to(device), labels.to(device)

Because the network in this tutorial is very small, the speedup from the GPU is hardly noticeable.
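Putting the pieces of this section together, a single device-agnostic training step might look like the sketch below. The small stand-in model and the random mini-batch are assumptions made so that the snippet runs on its own; the tutorial's Net and the batches from trainloader would be moved to the device in the same way. On a machine without CUDA the code falls back to the CPU.

```python
import torch
import torch.nn as nn
import torch.optim as optim

device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")

# A small stand-in model (an assumption; the tutorial's Net is moved the same way).
model = nn.Sequential(nn.Flatten(), nn.Linear(3 * 32 * 32, 10)).to(device)
criterion = nn.CrossEntropyLoss()
optimizer = optim.SGD(model.parameters(), lr=0.001, momentum=0.9)

# One random mini-batch in place of a batch from trainloader.
inputs = torch.randn(4, 3, 32, 32)
labels = torch.randint(0, 10, (4,))

# Inputs and labels must live on the same device as the model's parameters.
inputs, labels = inputs.to(device), labels.to(device)

optimizer.zero_grad()
loss = criterion(model(inputs), labels)
loss.backward()
optimizer.step()

print(loss.item() > 0)                                    # True
print(next(model.parameters()).device == inputs.device)   # True
```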

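**Exercise:** Try increasing the width of the network (argument 2 of the first nn.Conv2d and argument 1 of the second nn.Conv2d; the two must be the same number) and see how much speedup you get from the GPU.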
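One possible starting point for the exercise is to make the width a constructor argument. The class name `WideNet`, the `width` parameter, and the `count_parameters` helper are hypothetical names introduced here; the rest mirrors the tutorial's Net. The sketch only checks shapes and parameter counts and does not measure any speedup.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F

class WideNet(nn.Module):
    """Variant of the tutorial's Net whose first conv layer has `width` output channels."""

    def __init__(self, width=6):
        super().__init__()
        self.conv1 = nn.Conv2d(3, width, 5)    # argument 2: output channels
        self.pool = nn.MaxPool2d(2, 2)
        self.conv2 = nn.Conv2d(width, 16, 5)   # argument 1 must match `width`
        self.fc1 = nn.Linear(16 * 5 * 5, 120)
        self.fc2 = nn.Linear(120, 84)
        self.fc3 = nn.Linear(84, 10)

    def forward(self, x):
        x = self.pool(F.relu(self.conv1(x)))
        x = self.pool(F.relu(self.conv2(x)))
        x = x.view(-1, 16 * 5 * 5)
        x = F.relu(self.fc1(x))
        x = F.relu(self.fc2(x))
        return self.fc3(x)

def count_parameters(model):
    """Total number of elements across all parameters of the model."""
    return sum(p.numel() for p in model.parameters())

for width in (6, 64):
    net = WideNet(width)
    out = net(torch.randn(4, 3, 32, 32))
    print(width, tuple(out.shape), count_parameters(net))
# 6 (4, 10) 62006
# 64 (4, 10) 89614
```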

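Goals achieved:

- Understanding PyTorch's Tensor library and neural networks at a high level.

- Training a small neural network.

Training on multiple GPUs

To get an even larger speedup, we can train the model on several GPUs at once. This will be covered in the next post.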

More

- Train neural nets to play video games

- Train a state-of-the-art ResNet network on imagenet

- Train a face generator using Generative Adversarial Networks

- Train a word-level language model using Recurrent LSTM networks

- More examples

- More tutorials

- Discuss PyTorch on the Forums

- Chat with other users on Slack

References

Official PyTorch website