Web crawling framework: php-spider

Introduction:

The easiest way to install PHP-Spider is with Composer. Find it on Packagist.

Features:

(1) Supports two traversal algorithms: breadth-first and depth-first

(2) Supports crawl depth limiting, queue size limiting and max downloads limiting

(3) Supports adding custom URI discovery logic, based on XPath, CSS selectors, or plain old PHP

(4) Comes with a useful set of URI filters, such as domain limiting

(5) Supports custom URI filters, both prefetch (URI) and postfetch (resource content)

(6) Supports custom request handling logic

(7) Comes with a useful set of persistence handlers (memory and file; Redis is planned)

(8) Supports custom persistence handlers

(9) Collects statistics about the crawl for reporting

(10) Dispatches useful events, allowing developers to add even more custom behavior

(11) Supports a politeness policy

(12) Planned, not yet implemented: default discoverers for RSS, Atom, RDF, etc.

(13) Planned, not yet implemented: multiple queueing mechanisms (file, memcache, Redis)

(14) Planned eventually: distributed spidering with a central queue
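To make the first two features concrete, here is a minimal, self-contained sketch of breadth-first versus depth-first traversal with a depth limit. It does not use PHP-Spider at all: the link graph, the `traverse` function and its parameters are hypothetical names invented for illustration, and the library's own queue manager may work differently.

```php
<?php
// Hypothetical link graph: each page maps to the links found on it.
$links = [
    'A' => ['B', 'C'],
    'B' => ['D'],
    'C' => ['E'],
    'D' => [],
    'E' => [],
];

/**
 * Visit pages starting at $seed in breadth-first or depth-first order,
 * never following links from pages that are $maxDepth links away from the seed.
 */
function traverse(array $links, string $seed, bool $breadthFirst, int $maxDepth): array
{
    $queue = [[$seed, 0]];    // pending [page, depth] pairs
    $seen = [$seed => true];  // pages that were already queued
    $order = [];

    while ($queue) {
        // Breadth-first takes the oldest entry, depth-first the newest one.
        [$page, $depth] = $breadthFirst ? array_shift($queue) : array_pop($queue);
        $order[] = $page;

        if ($depth >= $maxDepth) {
            continue; // depth limit reached: do not follow links from here
        }
        foreach ($links[$page] ?? [] as $next) {
            if (!isset($seen[$next])) {
                $seen[$next] = true;
                $queue[] = [$next, $depth + 1];
            }
        }
    }

    return $order;
}

echo implode(' ', traverse($links, 'A', true, 2)), "\n";  // A B C D E
echo implode(' ', traverse($links, 'A', false, 2)), "\n"; // A C E B D
```

With a depth limit of 1 both calls would stop after A, B and C, since D and E are two links away from the seed.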

Usage tutorial:

Installing on Windows requires a working Composer environment.

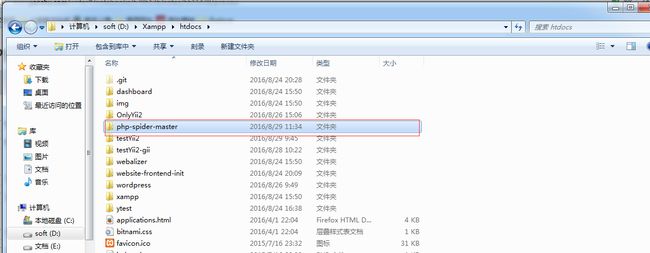

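(1) Download https://github.com/mvdbos/php-spider to your machine and place it under the htdocs directory of your XAMPP installation.

(2) Enter the project directory and run composer update to install the dependencies:

cd php-spider-master

composer update

The example can then be opened in a browser: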

http://localhost/php-spider-master/example/example_simple.php

Usage tips (loosely translated; corrections are welcome):

(1) This is a very simple example. This code can be found in example/example_simple.php. For a more complete example with some logging, caching and filters, see example/example_complex.php. That file contains a more real-world example.

First, create the spider:

$spider = new Spider('http://www.dmoz.org');

(2) Add a URI discoverer. Without it, the spider does nothing. In this case, we want all <a> nodes from a certain <div>:

$spider->getDiscovererSet()->set(new XPathExpressionDiscoverer("//div[@id='catalogs']//a"));
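To see what the XPath expression above selects, the following sketch applies it to a small document using only PHP's built-in DOM extension. It is an illustration, not the discoverer's actual implementation: the markup and the `discoverLinks` helper are hypothetical.

```php
<?php
// Hypothetical page markup; only the links inside div#catalogs should match.
$html = <<<'EOT'
<html><body>
  <div id="catalogs"><ul>
    <li><a href="/Arts/">Arts</a></li>
    <li><a href="/Science/">Science</a></li>
  </ul></div>
  <div id="footer"><a href="/about">About</a></div>
</body></html>
EOT;

/** Return the href of every element matched by the XPath expression. */
function discoverLinks(string $html, string $expression): array
{
    $doc = new DOMDocument();
    // Suppress parser warnings, which real-world HTML triggers frequently.
    @$doc->loadHTML($html);
    $xpath = new DOMXPath($doc);

    $uris = [];
    foreach ($xpath->query($expression) as $node) {
        if ($node instanceof DOMElement && $node->hasAttribute('href')) {
            $uris[] = $node->getAttribute('href');
        }
    }

    return $uris;
}

// Prints the two catalog links; the footer link is not selected.
print_r(discoverLinks($html, "//div[@id='catalogs']//a"));
```

The double slash before `a` matches anchors at any depth below the div, which is why the links nested inside the list items are still found.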

(3) Set some sane options for this example. In this case, we only get the first 10 items from the start page.

$spider->getDiscovererSet()->maxDepth = 1;

$spider->getQueueManager()->maxQueueSize = 10;

(4) Add a listener to collect stats from the Spider and the QueueManager. There are more components that dispatch events you can use.

$statsHandler = new StatsHandler();

$spider->getQueueManager()->getDispatcher()->addSubscriber($statsHandler);

$spider->getDispatcher()->addSubscriber($statsHandler);

Execute the crawl:

$spider->crawl();

(5) When crawling is done, we can get some information about the crawl:

echo "\n ENQUEUED: " . count($statsHandler->getQueued());

echo "\n SKIPPED: " . count($statsHandler->getFiltered());

echo "\n FAILED: " . count($statsHandler->getFailed());

echo "\n PERSISTED: " . count($statsHandler->getPersisted());

(6) Finally, we can do some processing on the downloaded resources. In this example, we echo the title of every resource:

echo "\n\nDOWNLOADED RESOURCES: ";

foreach ($spider->getDownloader()->getPersistenceHandler() as $resource) {

echo "\n - " . $resource->getCrawler()->filterXpath('//title')->text();

}

[Contributing](https://github.com/mvdbos/php-spider#contributing)

Demo: a test run

Modify the example file as follows.

Change the seed URL. This step makes a network request and fetches the page source.

$spider = new Spider('http://www.hao123.com/');

// Add a URI discoverer. Without it, the spider does nothing. In this case, we want the <a> tags from a certain <div>

Select the nodes you want:

$spider->getDiscovererSet()->set(new XPathExpressionDiscoverer("//div[@id='layoutContainer']//a"));

Common methods:

1. Set the URL we want to crawl:

// The URI we want to start crawling with

$seed = 'http://www.dmoz.org';

2. Choose whether content from subdomains may be fetched.

// We want to allow all subdomains of dmoz.org

$allowSubDomains = true;

3. Create the spider and limit the number of downloads.

// Create spider

$spider = new Spider($seed);

$spider->getDownloader()->setDownloadLimit(10);

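4. Set up the queue manager together with the stats and log handlers, which listen for events and collect information about the crawl.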

$statsHandler = new StatsHandler();

$LogHandler = new LogHandler();

$queueManager = new InMemoryQueueManager();

$queueManager->getDispatcher()->addSubscriber($statsHandler);

$queueManager->getDispatcher()->addSubscriber($LogHandler);

5. Limit the crawl depth to the first level. The limit of 10 resources comes from the setDownloadLimit(10) call in step 3.

// Set some sane defaults for this example. We only visit the first level of www.dmoz.org. We stop at 10 queued resources

$spider->getDiscovererSet()->maxDepth = 1;

6. Set the traversal algorithm to breadth-first; the default is depth-first.

// This time, we set the traversal algorithm to breadth-first. The default is depth-first

$queueManager->setTraversalAlgorithm(InMemoryQueueManager::ALGORITHM_BREADTH_FIRST);

$spider->setQueueManager($queueManager);

7. Add a URI discoverer. Without it, the spider cannot get past the seed resource.

// We add an URI discoverer. Without it, the spider wouldn't get past the seed resource.

$spider->getDiscovererSet()->set(new XPathExpressionDiscoverer("//*[@id='cat-list-content-2']/div/a"));

8. Save the resources to the filesystem.

// Let's tell the spider to save all found resources on the filesystem

$spider->getDownloader()->setPersistenceHandler(

new \VDB\Spider\PersistenceHandler\FileSerializedResourcePersistenceHandler(__DIR__ . '/results')

);

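9. Add some prefetch filters, which run before a resource is requested. The more of them you have, the fewer HTTP requests are made.

// Add some prefetch filters. These are executed before a resource is requested.

// The more you have of these, the fewer HTTP requests and the less work for the processors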

$spider->getDiscovererSet()->addFilter(new AllowedSchemeFilter(array('http')));

$spider->getDiscovererSet()->addFilter(new AllowedHostsFilter(array($seed), $allowSubDomains));

$spider->getDiscovererSet()->addFilter(new UriWithHashFragmentFilter());

$spider->getDiscovererSet()->addFilter(new UriWithQueryStringFilter());
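The idea behind the prefetch filters in step 9 can be sketched with nothing but `parse_url`. The function below is a simplified stand-in written for illustration, not the code of the library's filter classes; `shouldSkip` and its parameters are hypothetical names, and the library's host matching may differ in detail.

```php
<?php
/**
 * Return true when a URI should be skipped before it is ever requested.
 * $allowedHost and $allowSubDomains are illustrative parameters.
 */
function shouldSkip(string $uri, string $allowedHost, bool $allowSubDomains): bool
{
    $parts = parse_url($uri);
    if ($parts === false || !isset($parts['scheme'], $parts['host'])) {
        return true; // malformed or relative URI
    }
    if ($parts['scheme'] !== 'http') {
        return true; // scheme is not in the allowed list
    }

    $host = strtolower($parts['host']);
    $suffix = '.' . $allowedHost;
    $sameHost = $host === $allowedHost;
    $subDomain = $allowSubDomains && substr($host, -strlen($suffix)) === $suffix;
    if (!$sameHost && !$subDomain) {
        return true; // the URI points to a different site
    }

    // Skip URIs that carry a hash fragment or a query string.
    return isset($parts['fragment']) || isset($parts['query']);
}

$candidates = [
    'http://www.dmoz.org/Arts/',
    'https://www.dmoz.org/Arts/',
    'http://example.com/',
    'http://www.dmoz.org/Arts/#top',
    'http://www.dmoz.org/search?q=php',
];
foreach ($candidates as $uri) {
    echo shouldSkip($uri, 'dmoz.org', true) ? 'skip ' : 'keep ', $uri, "\n";
}
```

Only the first candidate is kept. Every rejected URI is one HTTP request the crawler never has to make, which is the point of filtering before the fetch rather than after it.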

10. Add an event listener that implements a politeness policy, so the spider pauses between consecutive requests to the same domain.

// We add an eventlistener to the crawler that implements a politeness policy. We wait 100ms between every request to the same domain

$politenessPolicyEventListener = new PolitenessPolicyListener(100);

$spider->getDownloader()->getDispatcher()->addListener(

SpiderEvents::SPIDER_CRAWL_PRE_REQUEST,

array($politenessPolicyEventListener, 'onCrawlPreRequest')

);

$spider->getDispatcher()->addSubscriber($statsHandler);

$spider->getDispatcher()->addSubscriber($LogHandler);
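The politeness policy in step 10 boils down to remembering when each host was last contacted. The class below is a minimal sketch of that bookkeeping under stated assumptions: `PolitenessTracker` and `waitFor` are hypothetical names, timestamps are passed in as plain integers so the logic can be followed without real waiting, and the library's listener may be implemented differently.

```php
<?php
/**
 * Minimal politeness tracker: remembers when each host was last requested
 * and reports how long to wait before contacting it again.
 */
final class PolitenessTracker
{
    private int $delayMs;
    private array $lastRequest = []; // host => timestamp in milliseconds
    public int $totalDelayMs = 0;

    public function __construct(int $delayMs)
    {
        $this->delayMs = $delayMs;
    }

    /** Return the wait in milliseconds required before requesting $uri at time $nowMs. */
    public function waitFor(string $uri, int $nowMs): int
    {
        $host = (string) parse_url($uri, PHP_URL_HOST);
        $wait = 0;
        if (isset($this->lastRequest[$host])) {
            $elapsed = $nowMs - $this->lastRequest[$host];
            $wait = max(0, $this->delayMs - $elapsed);
        }
        // The request goes out once the wait is over.
        $this->lastRequest[$host] = $nowMs + $wait;
        $this->totalDelayMs += $wait;

        return $wait;
    }
}

$tracker = new PolitenessTracker(100);
echo $tracker->waitFor('http://www.dmoz.org/Arts/', 0), "\n";     // 0
echo $tracker->waitFor('http://www.dmoz.org/Science/', 30), "\n"; // 70
echo $tracker->waitFor('http://example.com/', 40), "\n";          // 0
echo $tracker->waitFor('http://www.dmoz.org/Games/', 250), "\n";  // 0
```

In a real crawler the returned value would be handed to something like `usleep($wait * 1000)` before the request is sent, and the accumulated total is what a report such as the politeness wait time metric would show.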

11. Add a listener that lets us stop the script.

// Let's add something to enable us to stop the script

$spider->getDispatcher()->addListener(

SpiderEvents::SPIDER_CRAWL_USER_STOPPED,

function (Event $event) {

echo "\nCrawl aborted by user.\n";

exit();

}

);

12. Add a CLI progress meter.

// Let's add a CLI progress meter for fun

echo "\nCrawling";

$spider->getDownloader()->getDispatcher()->addListener(

SpiderEvents::SPIDER_CRAWL_POST_REQUEST,

function (Event $event) {

echo '.';

}

);

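13. Set up caching, logging and profiling on the spider's HTTP client.

// Set up some caching, logging and profiling on the HTTP client of the spider

$guzzleClient = $spider->getDownloader()->getRequestHandler()->getClient();

// Assumption: TimerMiddleware is the timing helper class from the project's example directory

$timerMiddleware = new TimerMiddleware();

$tapMiddleware = Middleware::tap([$timerMiddleware, 'onRequest'], [$timerMiddleware, 'onResponse']);

$guzzleClient->getConfig('handler')->push($tapMiddleware, 'timer');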

14. Start the crawl.

// Execute the crawl

$result = $spider->crawl();

15. Report the results.

// Report

echo "\n\nSPIDER ID: " . $statsHandler->getSpiderId();

echo "\n ENQUEUED: " . count($statsHandler->getQueued());

echo "\n SKIPPED: " . count($statsHandler->getFiltered());

echo "\n FAILED: " . count($statsHandler->getFailed());

echo "\n PERSISTED: " . count($statsHandler->getPersisted());

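16. Derive some metrics from the information gathered by the plugins and listeners.

// With the information from some of the plugins and listeners, we can determine some metrics

// Note: $start is assumed to hold microtime(true), captured before the crawl began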

$peakMem = round(memory_get_peak_usage(true) / 1024 / 1024, 2);

$totalTime = round(microtime(true) - $start, 2);

$totalDelay = round($politenessPolicyEventListener->totalDelay / 1000 / 1000, 2);

echo "\n\nMETRICS:";

echo "\n PEAK MEM USAGE: " . $peakMem . 'MB';

echo "\n TOTAL TIME: " . $totalTime . 's';

echo "\n REQUEST TIME: " . $timerMiddleware->getTotal() . 's';

echo "\n POLITENESS WAIT TIME: " . $totalDelay . 's';

echo "\n PROCESSING TIME: " . ($totalTime - $timerMiddleware->getTotal() - $totalDelay) . 's';

17. Finally, process the downloaded resources.

// Finally we could start some processing on the downloaded resources

echo "\n\nDOWNLOADED RESOURCES: ";

$downloaded = $spider->getDownloader()->getPersistenceHandler();

foreach ($downloaded as $resource) {

$title = $resource->getCrawler()->filterXpath('//title')->text();

$contentLength = (int) $resource->getResponse()->getHeaderLine('Content-Length');

// do something with the data

echo "\n - " . str_pad("[" . round($contentLength / 1024), 4, ' ', STR_PAD_LEFT) . "KB] $title";

}

echo "\n";