《视觉SLAM十四讲》第十章g2o代码的简化

在学习SLAM过程中,第十章的解决大规模BA问题的g2o代码比较难读懂,不太适合我这种slam小白,于是我写了一个简化的版本,不过基本是参考书上的代码写的,尽最大的可能写的简单一些,同时也是在学习g2o的用法。数据集可以在这里下载:http://grail.cs.washington.edu/projects/bal/

文件会出现这种格式:

前三行为旋转向量,四五六行为平移向量,剩下为f , k1 , k2

1.5741515942940262e-02

-1.2790936163850642e-02

-4.4008498081980789e-03

-3.4093839577186584e-02

-1.0751387104921525e-01

1.1202240291236032e+00

3.9975152639358436e+02

-3.1770643852803579e-07

5.8820490534594022e-13 代码附上:

#include

#include

#include

#include

#include

#include

#include "g2o/core/base_vertex.h"

#include "g2o/core/base_binary_edge.h"

#include "g2o/stuff/sampler.h"

#include "g2o/core/sparse_optimizer.h"

#include "g2o/core/block_solver.h"

#include "g2o/core/solver.h"

#include "g2o/core/robust_kernel_impl.h"

#include "g2o/core/batch_stats.h"

#include "g2o/core/optimization_algorithm_levenberg.h"

#include "g2o/core/optimization_algorithm_dogleg.h"

#include "g2o/solvers/cholmod/linear_solver_cholmod.h"

#include "g2o/solvers/dense/linear_solver_dense.h"

#include "g2o/solvers/eigen/linear_solver_eigen.h"

#include "g2o/solvers/pcg/linear_solver_pcg.h"

#include "g2o/types/sba/types_six_dof_expmap.h"

#include "g2o/solvers/structure_only/structure_only_solver.h"

#include

using namespace Eigen;

using namespace std;

using namespace cv;

using Sophus::SE3;

using Sophus::SO3;

//定义求解器 pose:九维 路标点:三维

typedef g2o::BlockSolver > BalBlockSolver;

typedef Eigen::Matrix< double, 9, 1 > Vector9d;

void World2Camera(const Vector9d camera, const Eigen::Vector3d P_w, Eigen::Vector3d& P_c);

void SolveBALProblem(const string filename);

inline void CamProjectionWithDistortion(const Vector9d camera, const Vector3d point, Vector2d& u);

//BAL相机顶点

class VertexCameraBAL : public g2o::BaseVertex<9,Vector9d>

{

public:

EIGEN_MAKE_ALIGNED_OPERATOR_NEW;

VertexCameraBAL() {}

virtual void setToOriginImpl() {}

virtual void oplusImpl ( const double* update )

{

Vector9d::ConstMapType v ( update );

_estimate += v;

}

virtual bool read ( std::istream& in ) { return false;}

virtual bool write ( std::ostream& out ) const {return false;}

};

//BAL 空间点顶点

class VertexPointBAL : public g2o::BaseVertex<3, Eigen::Vector3d>

{

public:

EIGEN_MAKE_ALIGNED_OPERATOR_NEW;

VertexPointBAL() {}

virtual void setToOriginImpl() {}

virtual void oplusImpl ( const double* update )

{

Eigen::Vector3d::ConstMapType v ( update );

_estimate += v;

}

virtual bool read ( std::istream& in ) { return false;}

virtual bool write ( std::ostream& out ) const {return false;}

};

//BAL 边

class EdgeObservationBAL : public g2o::BaseBinaryEdge<2, Eigen::Vector2d,VertexCameraBAL, VertexPointBAL>

{

public:

EIGEN_MAKE_ALIGNED_OPERATOR_NEW;

EdgeObservationBAL() {}

virtual bool read ( std::istream& in ) {return false;}

virtual bool write ( std::ostream& out ) const {return false;}

//使用G20数值导,不像书上调用ceres的自动求导功能.

virtual void computeError() override

{

//这里将第0个顶点,相机位姿取出来。

const VertexCameraBAL* cam = static_cast ( vertex ( 0 ) );

//这里将第1个顶点,空间点位置取出来。

const VertexPointBAL* point = static_cast ( vertex ( 1 ) );

Eigen::Vector2d u;

CamProjectionWithDistortion(cam->estimate(), point->estimate(), u );

_error[0] = u[0] - _measurement[0];

_error[1] = u[1] - _measurement[1];

}

};

//加载BAL的文本文件

class LoadBALProblem

{

public:

LoadBALProblem( string filename ):filename_(filename) {}

~LoadBALProblem()

{

delete[] point_index_;

delete[] camera_index_;

delete[] observations_;

delete[] observations_cameras;

delete[] observations_points;

}

//返回相机数量,空间点数量,观测点数量

int num_cameras() const{ return num_cameras_; }

int num_points() const{ return num_points_; }

int num_observations() const{ return num_observations_;}

double num_observations_cameras(int index) { return observations_cameras[index];}

double num_observations_points(int index) { return observations_points[index];}

double num_observations_uv(int index) { return observations_[index];}

int point_index(int index) { return point_index_[index];}

int camera_index(int index) { return camera_index_[index];}

//读取数据

void ReadData();

//将pose和point生成点云.

void WriteToPLYFile(const std::string& filename);

//当优化完成,重新将数据写入数组,覆盖原来未被优化的数据

double* ReWritePoses(){return observations_cameras;}

double* ReWritePoints(){return observations_points;}

//坐标系操作,相机坐标转换到世界坐标

void Camera2World(const cv::Mat angleAxis, const Eigen::Vector3d P_c, Eigen::Vector3d& P_w);

private:

int num_cameras_;

int num_points_;

int num_observations_;

int observations_cameras_;

int observations_points_;

string filename_;

int* point_index_;

int* camera_index_;

double* observations_;

double* observations_cameras;

double* observations_points;

};

int main(int argc, char** argv)

{

const string filename = "../problem-16-22106-pre.txt";

//const string filename = "../problem-52-64053-pre.txt";

SolveBALProblem(filename);

return 0;

}

/***

* 解决BAL问题

* @param filename 将.txt文件传进来,最后将优化的后点云输出

*/

void SolveBALProblem(const string filename)

{

//生成初始数据

LoadBALProblem loadBALProblem(filename);

loadBALProblem.ReadData();

//生成初始为未被优化的ply点云文件

loadBALProblem.WriteToPLYFile("../old.ply");

//创建一个稀疏优化器对象

g2o::SparseOptimizer optimizer;

//使用稀疏求解器

g2o::LinearSolver* linearSolver =

new g2o::LinearSolverCholmod();

dynamic_cast* >(linearSolver)->setBlockOrdering(true);

//矩阵块求解器

BalBlockSolver* solver_ptr = new BalBlockSolver(linearSolver);

//使用LM算法

g2o::OptimizationAlgorithmLevenberg* solver = new g2o::OptimizationAlgorithmLevenberg(solver_ptr);

//优化器构建完成

optimizer.setAlgorithm(solver);

//打开调试输出

optimizer.setVerbose(true);

//增加pose顶点

const int num_cam = loadBALProblem.num_cameras();

for(int i = 0; i < num_cam; i++)

{

Vector9d temVecCamera;

for (int j = 0; j < 9; j++)

{

temVecCamera[j] = loadBALProblem.num_observations_cameras(9*i+j);

}

VertexCameraBAL* pCamera = new VertexCameraBAL();

pCamera->setEstimate(temVecCamera);

pCamera->setId(i);

optimizer.addVertex(pCamera);

}

//增加路标顶点

const int point_num = loadBALProblem.num_points();

for(int i = 0; i < point_num; i++)

{

Vector3d temVecPoint;

for (int j = 0; j < 3; j++)

{

temVecPoint[j] = loadBALProblem.num_observations_points(3*i+j);

}

VertexPointBAL* pPoint = new VertexPointBAL();

pPoint->setEstimate(temVecPoint);

//这里加上pose的数量,避免跟pose的ID重复

pPoint->setId(i + num_cam);

pPoint->setMarginalized(true);

optimizer.addVertex(pPoint);

}

//增加边

const int num_observations =loadBALProblem.num_observations();

for(int i = 0; i < num_observations; ++i)

{

EdgeObservationBAL* bal_edge = new EdgeObservationBAL();

//得到相机ID和空间点ID

const int camera_id = loadBALProblem.camera_index(i);

const int point_id = loadBALProblem.point_index(i) + num_cam;

//使用了鲁棒核函数

g2o::RobustKernelHuber* rk = new g2o::RobustKernelHuber;

rk->setDelta(1.0);

bal_edge->setRobustKernel(rk);

bal_edge->setVertex(0,dynamic_cast(optimizer.vertex(camera_id)));

bal_edge->setVertex(1,dynamic_cast(optimizer.vertex(point_id)));

bal_edge->setInformation(Eigen::Matrix2d::Identity());

bal_edge->setMeasurement(Eigen::Vector2d(loadBALProblem.num_observations_uv(2*i + 0),

loadBALProblem.num_observations_uv(2*i + 1)));

optimizer.addEdge(bal_edge);

}

optimizer.initializeOptimization();

optimizer.setVerbose(true);

optimizer.optimize(20);

double* cameras = loadBALProblem.ReWritePoses();

for(int i = 0; i < num_cam; i++)

{

VertexCameraBAL* pCamera = dynamic_cast(optimizer.vertex(i));

Vector9d NewCameraVec = pCamera->estimate();

memcpy(cameras + i * 9, NewCameraVec.data(), sizeof(double) * 9);

}

double* points = loadBALProblem.ReWritePoints();

for(int j = 0; j < point_num; j++)

{

VertexPointBAL* pPoint = dynamic_cast(optimizer.vertex(j + num_cam));

Eigen::Vector3d NewPointVec = pPoint->estimate();

memcpy(points + j * 3, NewPointVec.data(), sizeof(double) * 3);

}

loadBALProblem.WriteToPLYFile("../new.ply");

cout<<"finished..." <(1,3)<(0,0),Rcw.at(0,1),Rcw.at(0,2), camera[3],

Rcw.at(1,0),Rcw.at(1,1),Rcw.at(1,2), camera[4],

Rcw.at(2,0),Rcw.at(2,1),Rcw.at(2,2), camera[5],

0,0,0,1;

//这里的非齐次坐标的变换要注意

Vector4d Pw(P_w[0],P_w[1],P_w[2],1);

Vector4d P = T*Pw;

P_c[0] = P[0];

P_c[1] = P[1];

P_c[2] = P[2];

}

/***

* 去畸变,世界坐标下的空间点转换成相机坐标系下的空间,最后变成像素坐标的像素点,基本跟书上的一致

* 思想如下:

* P = R * X + t (conversion from world to camera coordinates)

* p = -P / P.z (perspective division) 这里有个负号需要注意

* p' = f * r(p) * p (conversion to pixel coordinates)

* r(p) = 1.0 + k1 * ||p||^2 + k2 * ||p||^4.

* @param camera 相机的9的数据,实际上只用到前面6个数据

* @param point 世界坐标系下的空间点

* @param u 像素坐标的像素点

*/

inline void CamProjectionWithDistortion(const Vector9d camera, const Vector3d point, Vector2d& u)

{

Eigen::Vector3d p;

World2Camera(camera, point, p);

// Compute the center fo distortion

double xp = -p[0]/p[2];

double yp = -p[1]/p[2];

// Apply second and fourth order radial distortion

const double k1 = camera[7];

const double k2 = camera[8];

double r2 = xp*xp + yp*yp;

double distortion = 1.0 + r2 * k1 + k2*r2*r2 ;

const double f = camera[6];

u[0] = f * distortion * xp;

u[1] = f * distortion * yp;

}

/***

* 实现将pose转换到世界坐标系

* @param angleAxis 旋转向量

* @param P_c pose的t

* @param P_w 世界坐标系pose的t

*/

void LoadBALProblem::Camera2World( const cv::Mat angleAxis, const Eigen::Vector3d P_c, Eigen::Vector3d& P_w)

{

cv::Mat Rcw;

//这里调用opencv将旋转向量转换成旋转矩阵的API

cv::Rodrigues(angleAxis,Rcw);

Eigen::Matrix4d Tcw;

Tcw << Rcw.at(0,0),Rcw.at(0,1),Rcw.at(0,2),P_c[0],

Rcw.at(1,0),Rcw.at(1,1),Rcw.at(1,2),P_c[1],

Rcw.at(2,0),Rcw.at(2,1),Rcw.at(2,2),P_c[2],

0,0,0,1;

Eigen::Matrix4d Twc;

//Twc = Tcw^-1

Twc = Tcw.inverse();

P_w[0] = Twc(0,3);

P_w[1] = Twc(1,3);

P_w[2] = Twc(2,3);

}

/***

*生成点云文件,基本跟书上一样

* @param filename

*/

void LoadBALProblem::WriteToPLYFile(const std::string& filename)

{

std::ofstream of(filename.c_str());

of<< "ply"

<< '\n' << "format ascii 1.0"

<< '\n' << "element vertex " << num_cameras_ + num_points_

<< '\n' << "property float x"

<< '\n' << "property float y"

<< '\n' << "property float z"

<< '\n' << "property uchar red"

<< '\n' << "property uchar green"

<< '\n' << "property uchar blue"

<< '\n' << "end_header" << std::endl;

for(int i = 0; i < num_cameras(); ++i)

{

Eigen::Vector3d P_c;

Eigen::Vector3d P_w;

cv::Mat angleAxis = (cv::Mat_(1,3)<>num_cameras_;

fin>>num_points_;

fin>>num_observations_;

//输出第一行数据,这里包括,相机的姿态数量,空间点的数量,观测数据数量

std::cout << "pose number: " << num_cameras_ <>camera_index_[i];

fin>>point_index_[i];

fin>>observations_[2*i];

fin>>observations_[2*i+1];

}

//这里读取本文文件里一行一个数字的内容,相机的参数有9个,分别为R,t,f,k1,k2,这个R是旋转向量,空间为三个一组

observations_cameras_ = 9*num_cameras_;

observations_points_ = 3*num_points_;

observations_cameras = new double[observations_cameras_];

observations_points = new double[observations_points_];

//读取相机初始数据

for (int i = 0; i < observations_cameras_; ++i)

{

fin>>observations_cameras[i];

}

//读取空间初始数据

for (int i = 0; i < observations_points_; ++i)

{

fin>>observations_points[i];

}

} CMakeLists文件:

cmake_minimum_required( VERSION 2.8 )

project( BAL )

set( CMAKE_BUILD_TYPE "Release" )

set( CMAKE_CXX_FLAGS "-std=c++11 -O3" )

list( APPEND CMAKE_MODULE_PATH ${PROJECT_SOURCE_DIR}/cmake_modules )

# 寻找G2O Cholmod eigen3

find_package( G2O REQUIRED )

find_package( Cholmod )

include_directories(

${G2O_INCLUDE_DIRS} ${CHOLMOD_INCLUDE_DIR}

"/usr/include/eigen3"

)

# sophus

find_package( Sophus REQUIRED )

include_directories( ${Sophus_INCLUDE_DIRS} )

#OpenCV

find_package( OpenCV REQUIRED )

include_directories( ${OpenCV_INCLUDE_DIRS} )

add_executable( BAL main.cpp )

target_link_libraries( BAL

g2o_types_slam2d

g2o_types_slam3d

g2o_core

g2o_stuff

g2o_types_sclam2d

${CHOLMOD_LIBRARIES}

${Sophus_LIBRARIES}

${OpenCV_LIBRARIES}

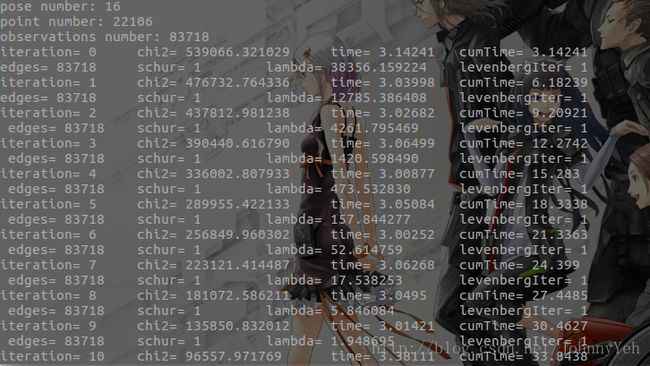

)终端运行效果如下:

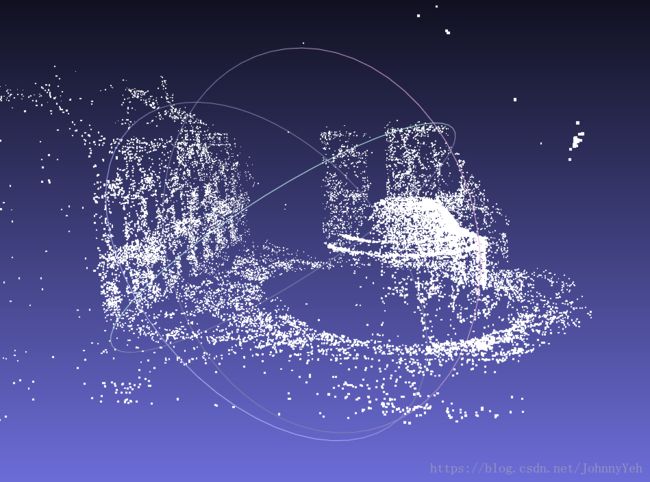

使用meshlab 打开ply文件 命令为meshlab xxx.ply

下一篇博客会实际写出雅克比矩阵,而不是使用g2o帮住求导。

点这里到达下一篇https://blog.csdn.net/JohnnyYeh/article/details/82315543

如果本文有什么错误的地方,请联系我,我及时修改。

转载请注明出处:http://blog.csdn.net/johnnyyeh/article/details/79310655