Machine Learning: Echo State Networks

1. Echo State Network Structure and Algorithm Derivation

1.1 Network Structure

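An ESN builds its hidden layer, usually called the "reservoir", from a large number of randomly placed, sparsely connected neurons. The reservoir has the following characteristics:

- it contains a relatively large number of neurons;

- the connections between neurons are generated randomly;

- the connections between neurons are sparse.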

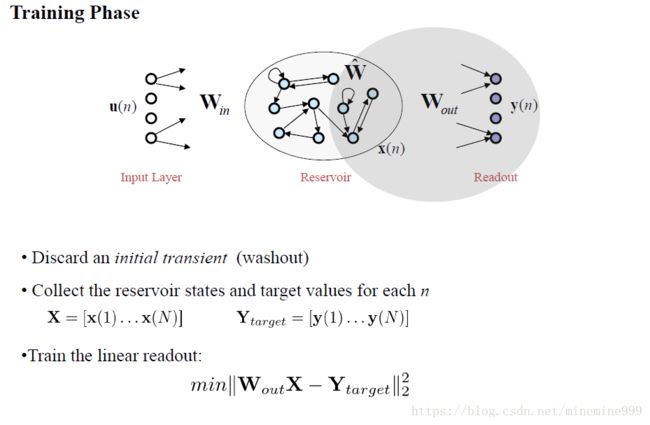

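1. Input layer:

- The input vector is $u(n)$, with dimension $n \times 1$. (In $u(n)$ the argument $n$ is the time step; in $n \times 1$ it is the input size.)

- The input layer $\rightarrow$ reservoir connection weights are $W_{in}^{m\times n}$.

Note that these weights are not trained. They are randomly initialized once and then kept fixed.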

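2. Reservoir:

The reservoir receives input from two directions: the input layer $u(n)$ and the reservoir's own previous state $x(n-1)$. Like $W_{in}^{m\times n}$, the state feedback weights $\hat{W}$ are not trained and are fixed by the random initialization. $\hat{W}$ is a large sparse matrix whose nonzero entries mark the active neurons in the reservoir: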

$$x^{m\times 1}(n)=\tanh\left(W_{in}^{m\times n}u^{n\times 1}(n) + \hat{W}x^{m\times 1}(n-1)\right)$$
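The state update above can be sketched in NumPy as follows. This is a minimal illustration, not the simple_esn implementation: the sizes, the uniform weight range, the 10% connection density, and the helper name `update_state` are all assumptions chosen for the example.

```python
import numpy as np

def update_state(W_in, W_hat, u, x_prev):
    """One reservoir step: x(n) = tanh(W_in u(n) + W_hat x(n-1))."""
    return np.tanh(W_in @ u + W_hat @ x_prev)

rng = np.random.default_rng(0)
m, n_in = 50, 1                          # reservoir size and input size (illustrative)
W_in = rng.uniform(-0.5, 0.5, size=(m, n_in))
W_hat = rng.uniform(-0.5, 0.5, size=(m, m))
W_hat[rng.random((m, m)) > 0.1] = 0.0    # zero out about 90% of the entries (sparse)

# Drive the reservoir with a toy sine input, one sample per time step.
x = np.zeros((m, 1))
for u_t in np.sin(0.2 * np.arange(100)):
    x = update_state(W_in, W_hat, np.array([[u_t]]), x)

print(x.shape)
```

Neither `W_in` nor `W_hat` changes inside the loop, which matches the point that these weights are never trained.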

3. Readout (output layer):

The reservoir $\rightarrow$ output layer connection is linear, that is:

$$y(n) = W_{out}^{l\times m}x^{m \times 1}(n)$$

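In practice, only the weights of this linear connection need to be trained.

Core idea: a large, random, sparse network (the reservoir) serves as the information-processing medium. It maps the input signal from a low-dimensional input space into a high-dimensional state space. In that state space, linear regression trains only part of the connection weights (the readout), while all other randomly generated weights stay unchanged throughout training.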

1.2 Key ESN Parameters

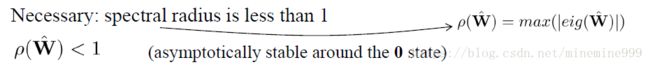

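As a rule, an ESN has the echo state property only when the spectral radius satisfies $\rho(W_{res}^{m\times m})<1$, where $W_{res}$ is the reservoir weight matrix (written $\hat{W}$ in section 1.1). This ensures that the influence of past states and inputs on the network fades away after a sufficiently long time.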
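The following sketch shows one common way to enforce a chosen spectral radius, and what "fading influence" looks like numerically. The matrix size, density, target radius 0.9, input signal, and the helper name `scale_to_spectral_radius` are assumptions made for illustration only.

```python
import numpy as np

def scale_to_spectral_radius(W, rho):
    """Rescale W so that its largest absolute eigenvalue equals rho."""
    current = np.max(np.abs(np.linalg.eigvals(W)))
    return W * (rho / current)

rng = np.random.default_rng(42)
m = 100
W = rng.uniform(-0.5, 0.5, size=(m, m))
W[rng.random((m, m)) > 0.1] = 0.0           # sparse: keep about 10% of the entries
W_res = scale_to_spectral_radius(W, rho=0.9)
W_in = rng.uniform(-0.5, 0.5, size=(m, 1))

# Start from two different random states and feed both the same input.
x_a = rng.uniform(-1, 1, size=(m, 1))
x_b = rng.uniform(-1, 1, size=(m, 1))
gap_start = np.linalg.norm(x_a - x_b)
for u_t in np.sin(0.1 * np.arange(300)):
    x_a = np.tanh(W_in * u_t + W_res @ x_a)
    x_b = np.tanh(W_in * u_t + W_res @ x_b)
gap_end = np.linalg.norm(x_a - x_b)

print("spectral radius:", np.max(np.abs(np.linalg.eigvals(W_res))))
print("state gap before and after:", gap_start, gap_end)
```

With a radius below 1 the gap between the two trajectories typically shrinks toward zero, meaning the initial state is forgotten and the current state depends only on the recent input history.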

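1.3 ESN Algorithm Procedure

1. Weight parameter initialization

Training comes in two variants: offline training and online training. Because the matrix $X$ may be singular, its inverse is usually replaced by the pseudoinverse or computed with a regularization technique, namely ridge regression.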
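As a hedged sketch of the offline case: if the reservoir states are collected as the columns of $X$ (size $m \times T$) and the targets as the columns of $Y$ (size $l \times T$), the standard ridge-regression solution is $W_{out} = Y X^{T}(X X^{T} + \lambda_r I)^{-1}$. The layout of $X$ and $Y$, the function name `train_readout`, and the synthetic data below are assumptions for illustration; the source does not spell them out.

```python
import numpy as np

def train_readout(X, Y, ridge_lambda=1e-2):
    """Offline ridge-regression readout.

    X: (m, T) reservoir states, one column per time step.
    Y: (l, T) target outputs.
    Returns W_out with shape (l, m).
    """
    m = X.shape[0]
    A = X @ X.T + ridge_lambda * np.eye(m)   # symmetric and invertible for lambda > 0
    # Solve A W_out^T = X Y^T instead of forming the inverse explicitly.
    return np.linalg.solve(A, X @ Y.T).T

# Synthetic check: targets generated by a known linear readout.
rng = np.random.default_rng(0)
X = rng.standard_normal((20, 500))
W_true = rng.standard_normal((1, 20))
Y = W_true @ X

W_ridge = train_readout(X, Y, ridge_lambda=1e-8)
W_pinv = Y @ np.linalg.pinv(X)               # pseudoinverse alternative

print(np.allclose(W_ridge, W_true, atol=1e-6), np.allclose(W_pinv, W_true))
```

The regularization term keeps the linear system solvable even when $X X^{T}$ is singular, which is the situation the paragraph above warns about.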

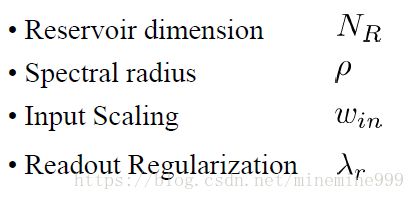

1.4 ESN Hyperparameters

- Reservoir size $N_{R}$

- Spectral radius $\rho$

- Input scaling factor $w_{in}$

- Output regularization factor $\lambda_{r}$
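To connect these symbols with the code in section 2, the lookup table below pairs each one with the parameter name and value used in the simple_esn example. The dictionary itself is a hypothetical illustration, not part of any library. The input scaling factor is left out because the excerpt in section 2.1 draws the input weights from a fixed range and shows no parameter for it.

```python
# Hypothetical lookup table: symbol -> (estimator, parameter name, value used in section 2.2).
hyperparameter_map = {
    "N_R (reservoir size)": ("SimpleESN", "n_components", 1000),
    "rho (spectral radius)": ("SimpleESN", "weight_scaling", 1.25),
    "lambda_r (output regularization)": ("Ridge", "alpha", 0.01),
}

for symbol, (estimator, name, value) in hyperparameter_map.items():
    print(f"{symbol:35s} -> {estimator}({name}={value})")
```

Note that the example sets `weight_scaling` to 1.25, which is above the bound of 1 given in section 1.2, and its grid search also tries 0.9.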

2. Echo State Network Implementation and Case Study

2.1 simple_esn Implementation

The key code block of the algorithm:

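    # Excerpt from the SimpleESN class. Assumed module-level imports
    # (not shown in the original excerpt):
    #   import numpy as np
    #   import scipy.linalg as la
    #   from numpy import zeros, ones, concatenate, array, tanh, vstack, arange
    #   from sklearn.utils import check_array
    def _fit_transform(self, X):
        # Validate the input before reading its shape.
        X = check_array(X, ensure_2d=True)
        n_samples, n_features = X.shape
        # Random reservoir weights in [-0.5, 0.5), rescaled so that the
        # spectral radius equals weight_scaling.
        self.weights_ = self.random_state.rand(self.n_components, self.n_components)-0.5
        spectral_radius = np.max(np.abs(la.eig(self.weights_)[0]))
        self.weights_ *= self.weight_scaling / spectral_radius
        # Random input weights; the extra column is for the bias input.
        self.input_weights_ = self.random_state.rand(self.n_components,
                                                     1+n_features)-0.5
        # Random subset of reservoir rows (past the bias and input rows).
        self.readout_idx_ = self.random_state.permutation(arange(1+n_features,
                                1+n_features+self.n_components))[:self.n_readout]
        # One column per time step: [bias; input; reservoir state].
        self.components_ = zeros(shape=(1+n_features+self.n_components,
                                        n_samples))
        curr_ = zeros(shape=(self.n_components, 1))
        U = concatenate((ones(shape=(n_samples, 1)), X), axis=1)
        for t in range(n_samples):
            u = array(U[t,:], ndmin=2).T
            # Leaky update: blend the previous state with the new activation.
            curr_ = (1-self.damping)*curr_ + self.damping*tanh(
                self.input_weights_.dot(u) + self.weights_.dot(curr_))
            self.components_[:,t] = vstack((u, curr_))[:,0]
        return self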
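Read as an equation, the loop in `_fit_transform` performs a leaky version of the state update from section 1.1. The symbols below are shorthand introduced here for the code's attributes: $a$ stands for `damping`, $W_{in}$ for `input_weights_`, $W$ for `weights_`, and $s$ for `weight_scaling`.

```latex
W \leftarrow W \cdot \frac{s}{\rho(W)}, \qquad
x(t) = (1-a)\,x(t-1) + a\,\tanh\!\left( W_{in}\begin{bmatrix} 1 \\ u(t) \end{bmatrix} + W\,x(t-1) \right), \qquad
\text{components}_{:,t} = \begin{bmatrix} 1 \\ u(t) \\ x(t) \end{bmatrix}
```

Setting $a = 1$ recovers the plain update $x(t)=\tanh(\cdot)$ of section 1.1, apart from the constant bias input that the code prepends to $u(t)$.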

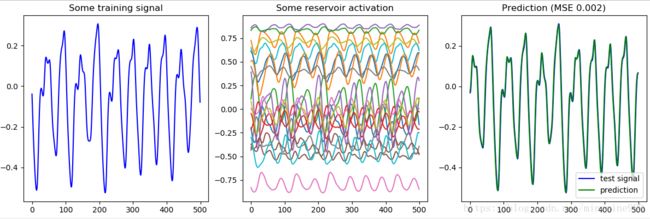

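2.2 Case Study

The test data set is the file MackeyGlass_t17.txt, whose raw signal is one-dimensional.

We use an ESN to predict this data set and inspect the quality of the prediction.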

    from simple_esn import SimpleESN
    from sklearn.linear_model import Ridge
    # GridSearchCV moved from sklearn.grid_search to sklearn.model_selection.
    from sklearn.model_selection import GridSearchCV
    from sklearn.pipeline import Pipeline
    from sklearn.metrics import mean_squared_error
    from numpy import loadtxt, atleast_2d
    import matplotlib.pyplot as plt
    from pprint import pprint
    from time import time

    # In a Jupyter notebook, run the %matplotlib magic first for interactive plots.

    if __name__ == '__main__':
        # Load the series as a column vector of shape (n_samples, 1).
        X = loadtxt('MackeyGlass_t17.txt')
        X = atleast_2d(X).T
        train_length = 2000
        test_length = 2000

        # One-step-ahead prediction: the target is the input shifted by one sample.
        X_train = X[:train_length]
        y_train = X[1:train_length+1]
        X_test = X[train_length:train_length+test_length]
        y_test = X[train_length+1:train_length+test_length+1]

        ################## Simple training #####################
        my_esn = SimpleESN(n_readout=1000, n_components=1000,
                           damping=0.3, weight_scaling=1.25)
        echo_train = my_esn.fit_transform(X_train)
        regr = Ridge(alpha=0.01)
        regr.fit(echo_train, y_train)
        echo_test = my_esn.transform(X_test)
        y_true, y_pred = y_test, regr.predict(echo_test)
        err = mean_squared_error(y_true, y_pred)

        fp = plt.figure(figsize=(12, 4))
        trainplot = fp.add_subplot(1, 3, 1)
        trainplot.plot(X_train[100:600], 'b')
        trainplot.set_title('Some training signal')
        echoplot = fp.add_subplot(1, 3, 2)
        echoplot.plot(echo_train[100:600,:20])
        echoplot.set_title('Some reservoir activation')
        testplot = fp.add_subplot(1, 3, 3)
        # Plot the prediction against its actual target (the shifted signal).
        testplot.plot(y_true[-500:], 'b', label='test signal')
        testplot.plot(y_pred[-500:], 'g', label='prediction')
        testplot.set_title('Prediction (MSE %0.3f)' % err)
        testplot.legend(loc='lower right')
        plt.tight_layout(pad=0.5)

        #################### Grid search #########################
        pipeline = Pipeline([('esn', SimpleESN(n_readout=1000)),
                             ('ridge', Ridge(alpha=0.01))])
        parameters = {
            'esn__n_readout': [1000],
            'esn__n_components': [1000],
            'esn__weight_scaling': [0.9, 1.25],
            'esn__damping': [0.3],
            'ridge__alpha': [0.01, 0.001]
        }
        grid_search = GridSearchCV(pipeline, parameters, n_jobs=-1, verbose=1, cv=3)
        print("Starting grid search with parameters")
        pprint(parameters)
        t0 = time()
        grid_search.fit(X_train, y_train)
        print("done in %0.3f s" % (time()-t0))

        print("Best score on training is: %0.3f" % grid_search.best_score_)
        best_parameters = grid_search.best_estimator_.get_params()
        for param_name in sorted(parameters.keys()):
            print("\t%s: %r" % (param_name, best_parameters[param_name]))

        y_true, y_pred = y_test, grid_search.predict(X_test)
        err = mean_squared_error(y_true, y_pred)

        fg = plt.figure(figsize=(9, 4))
        echoplot = fg.add_subplot(1, 2, 1)
        echoplot.plot(echo_train[100:600,:20])
        echoplot.set_title('Some reservoir activation')
        testplot = fg.add_subplot(1, 2, 2)
        testplot.plot(y_true[-500:], 'b', label='test signal')
        testplot.plot(y_pred[-500:], 'g', label='prediction')
        testplot.set_title('Prediction after GridSearch (MSE %0.3f)' % err)
        testplot.legend(loc='lower right')
        plt.tight_layout(pad=0.5)
        plt.show()

Test results:

Data set download link: https://download.csdn.net/download/minemine999/10501371