Getting Started with Lucene, with Real-World Project Use Cases

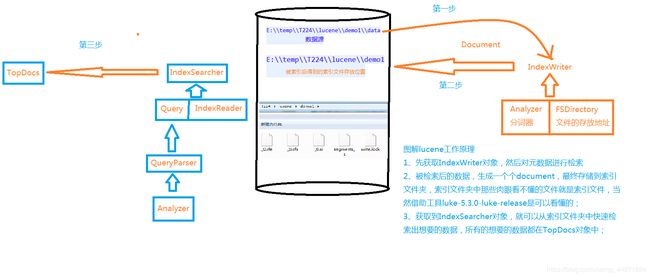

The idea behind Lucene

Hello World implementation

Prerequisite: create a Maven project and add the Maven dependencies (routine setup).

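<dependency>
    <groupId>org.apache.lucene</groupId>
    <artifactId>lucene-core</artifactId>
    <version>5.3.1</version>
</dependency>
<dependency>
    <groupId>org.apache.lucene</groupId>
    <artifactId>lucene-queryparser</artifactId>
    <version>5.3.1</version>
</dependency>
<dependency>
    <groupId>org.apache.lucene</groupId>
    <artifactId>lucene-analyzers-common</artifactId>
    <version>5.3.1</version>
</dependency>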

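Generating the index

Goal: index a data directory and write the index files to a specified directory.

1. Constructor: instantiate the IndexWriter
   - Obtain the directory object where the index files are stored
   - Obtain the index writer (the "output stream")
     - Create the configuration object for the writer
     - Set the analyzer on that configuration

2. Close the index writer

3. Index all files under a given path

4. Index a single file

5. Build the document (the important information held in the index, in key-value form)

6. Test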

import java.io.File;
import java.io.FileReader;
import java.nio.file.Paths;

import org.apache.lucene.analysis.Analyzer;
import org.apache.lucene.analysis.standard.StandardAnalyzer;
import org.apache.lucene.document.Document;
import org.apache.lucene.document.Field;
import org.apache.lucene.document.TextField;
import org.apache.lucene.index.IndexWriter;
import org.apache.lucene.index.IndexWriterConfig;
import org.apache.lucene.store.FSDirectory;

/**
 * Works together with Demo1.java to implement the Lucene hello-world example.
 * @author Administrator
 */
public class IndexCreate {

    private IndexWriter indexWriter;

    /**
     * 1. Constructor: instantiate the IndexWriter.
     * @param indexDir directory where the index files are stored
     * @throws Exception
     */
    public IndexCreate(String indexDir) throws Exception {
        // Directory object for the index files
        FSDirectory dir = FSDirectory.open(Paths.get(indexDir));
        // Standard analyzer (designed for English text)
        Analyzer analyzer = new StandardAnalyzer();
        // Configuration object for the index writer
        IndexWriterConfig conf = new IndexWriterConfig(analyzer);
        indexWriter = new IndexWriter(dir, conf);
    }

    /**
     * 2. Close the index writer.
     * @throws Exception
     */
    public void closeIndexWriter() throws Exception {
        indexWriter.close();
    }

    /**
     * 3. Index all files under the given path.
     * @param dataDir directory holding the files to index
     * @return number of documents in the index
     * @throws Exception
     */
    public int index(String dataDir) throws Exception {
        File[] files = new File(dataDir).listFiles();
        if (files == null) {
            throw new IllegalArgumentException("Not a readable directory: " + dataDir);
        }
        for (File file : files) {
            // Skip subdirectories; FileReader can only open regular files
            if (file.isFile()) {
                indexFile(file);
            }
        }
        return indexWriter.numDocs();
    }

    /**
     * 4. Index a single file.
     * @param file file to index
     * @throws Exception
     */
    private void indexFile(File file) throws Exception {
        System.out.println("Full path of the file being indexed: " + file.getCanonicalPath());
        Document doc = getDocument(file);
        indexWriter.addDocument(doc);
    }

    /**
     * 5. Build the document (the important information held in the index, in key-value form).
     * @param file file to read
     * @return document with the fields contents, fullPath and fileName
     * @throws Exception
     */
    private Document getDocument(File file) throws Exception {
        Document doc = new Document();
        // The file contents are analyzed and indexed but not stored
        doc.add(new TextField("contents", new FileReader(file)));
        // Field.Store.YES also stores the original value so it can be read back from a hit
        doc.add(new TextField("fullPath", file.getCanonicalPath(), Field.Store.YES));
        doc.add(new TextField("fileName", file.getName(), Field.Store.YES));
        return doc;
    }
}

package com.javaxl.lucene;

public class Demo1 {

    public static void main(String[] args) {
        // Where the index files will be stored
        String indexDir = "E:\\temp\\T224\\lucene\\demo1";
        // Location of the source data
        String dataDir = "E:\\temp\\T224\\lucene\\demo1\\data";
        IndexCreate ic = null;
        try {
            ic = new IndexCreate(indexDir);
            long start = System.currentTimeMillis();
            int num = ic.index(dataDir);
            long end = System.currentTimeMillis();
            System.out.println("Indexed " + num + " files under the given path in " + (end - start) + " ms");
        } catch (Exception e) {
            e.printStackTrace();
        } finally {
            try {
                // ic stays null if the constructor failed
                if (ic != null) {
                    ic.closeIndexWriter();
                }
            } catch (Exception e) {
                e.printStackTrace();
            }
        }
    }
}

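Using the index

Reading data back from the index files

1. Obtain the index reader (the "input stream"), opened through DirectoryReader

2. Obtain the index searcher (built from the reader)

3. Obtain the query object (produced by a query parser, which is itself built from an analyzer)

4. Fetch the top-ranked documents that contain the keyword

5. Read the content of each matching document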

import java.nio.file.Paths;

import org.apache.lucene.analysis.Analyzer;
import org.apache.lucene.analysis.standard.StandardAnalyzer;
import org.apache.lucene.document.Document;
import org.apache.lucene.index.DirectoryReader;
import org.apache.lucene.index.IndexReader;
import org.apache.lucene.queryparser.classic.QueryParser;
import org.apache.lucene.search.IndexSearcher;
import org.apache.lucene.search.Query;
import org.apache.lucene.search.ScoreDoc;
import org.apache.lucene.search.TopDocs;
import org.apache.lucene.store.FSDirectory;

/**
 * Works together with Demo2.java to implement the Lucene hello-world example.
 * @author Administrator
 */
public class IndexUse {

    /**
     * Search the index directory by keyword.
     * @param indexDir directory that holds the index files
     * @param q keyword
     */
    public static void search(String indexDir, String q) throws Exception {
        FSDirectory indexDirectory = FSDirectory.open(Paths.get(indexDir));
        // Note: the index reader is not created with new; it is opened through DirectoryReader
        IndexReader indexReader = DirectoryReader.open(indexDirectory);
        // Index searcher
        IndexSearcher indexSearcher = new IndexSearcher(indexReader);
        Analyzer analyzer = new StandardAnalyzer();
        QueryParser queryParser = new QueryParser("contents", analyzer);
        // Query object for the keyword
        Query query = queryParser.parse(q);

        long start = System.currentTimeMillis();
        // Fetch the top 10 matching documents
        TopDocs topDocs = indexSearcher.search(query, 10);
        long end = System.currentTimeMillis();
        System.out.println("Matching " + q + " took " + (end - start) + " ms and found " + topDocs.totalHits + " records");

        for (ScoreDoc scoreDoc : topDocs.scoreDocs) {
            int docID = scoreDoc.doc;
            // The searcher fetches a document by its document ID
            Document doc = indexSearcher.doc(docID);
            System.out.println("Hit found through the index in file: " + doc.get("fullPath"));
        }
        indexReader.close();
    }
}

/**
 * Test for querying the index.
 * @author Administrator
 */
public class Demo2 {

    public static void main(String[] args) {
        String indexDir = "E:\\temp\\T224\\lucene\\demo1";
        String q = "EarlyTerminating-Collector";
        try {
            IndexUse.search(indexDir, q);
        } catch (Exception e) {
            e.printStackTrace();
        }
    }
}

Building the index

import java.nio.file.Paths;

import org.apache.lucene.analysis.Analyzer;
import org.apache.lucene.analysis.standard.StandardAnalyzer;
import org.apache.lucene.document.Document;
import org.apache.lucene.document.Field;
import org.apache.lucene.document.StringField;
import org.apache.lucene.document.TextField;
import org.apache.lucene.index.IndexWriter;
import org.apache.lucene.index.IndexWriterConfig;
import org.apache.lucene.index.Term;
import org.apache.lucene.store.FSDirectory;
import org.junit.Before;
import org.junit.Test;

/**
 * Building the index:
 * adding, deleting and updating index entries.
 * @author Administrator
 */
public class Demo3 {

    private String ids[] = {"1", "2", "3"};
    private String citys[] = {"qingdao", "nanjing", "shanghai"};
    private String descs[] = {
        "Qingdao is a beautiful city.",
        "Nanjing is a city of culture.",
        "Shanghai is a bustling city."
    };
    private FSDirectory dir;

    /**
     * Writes the sample documents before every test.
     * Note: the default open mode is CREATE_OR_APPEND, so each run adds three
     * more documents unless the index directory is cleared first.
     * @throws Exception
     */
    @Before
    public void setUp() throws Exception {
        dir = FSDirectory.open(Paths.get("E:\\temp\\T224\\lucene\\demo2\\indexDir"));
        IndexWriter indexWriter = getIndexWriter();
        for (int i = 0; i < ids.length; i++) {
            Document doc = new Document();
            doc.add(new StringField("id", ids[i], Field.Store.YES));
            doc.add(new StringField("city", citys[i], Field.Store.YES));
            doc.add(new TextField("desc", descs[i], Field.Store.NO));
            indexWriter.addDocument(doc);
        }
        indexWriter.close();
    }

    /**
     * Obtain the index writer.
     * @return a new IndexWriter on dir
     * @throws Exception
     */
    private IndexWriter getIndexWriter() throws Exception {
        Analyzer analyzer = new StandardAnalyzer();
        IndexWriterConfig conf = new IndexWriterConfig(analyzer);
        return new IndexWriter(dir, conf);
    }

    /**
     * Check how many documents have been written to the index.
     * @throws Exception
     */
    @Test
    public void getWriteDocNum() throws Exception {
        IndexWriter indexWriter = getIndexWriter();
        System.out.println("The index directory contains " + indexWriter.numDocs() + " documents");
        // Close the writer so the write lock on the directory is released
        indexWriter.close();
    }

    /**
     * Only marks the document as deleted; it is not physically removed from the index.
     * @throws Exception
     */
    @Test
    public void deleteDocBeforeMerge() throws Exception {
        IndexWriter indexWriter = getIndexWriter();
        System.out.println("maxDoc: " + indexWriter.maxDoc());
        indexWriter.deleteDocuments(new Term("id", "1"));
        indexWriter.commit();
        System.out.println("maxDoc: " + indexWriter.maxDoc());
        System.out.println("numDocs (live documents): " + indexWriter.numDocs());
        indexWriter.close();
    }

    /**
     * The document is physically removed, but in this version its terms are kept.
     * @throws Exception
     */
    @Test
    public void deleteDocAfterMerge() throws Exception {
        // https://blog.csdn.net/asdfsadfasdfsa/article/details/78820030
        // org.apache.lucene.store.LockObtainFailedException: Lock held by this virtual machine:
        // only one IndexWriter may be open on an index directory at a time; a single
        // IndexWriter instance is thread-safe and should be shared.
        IndexWriter indexWriter = getIndexWriter();
        System.out.println("maxDoc: " + indexWriter.maxDoc());
        indexWriter.deleteDocuments(new Term("id", "1"));
        indexWriter.forceMergeDeletes(); // force a merge that purges the deleted documents
        indexWriter.commit();
        System.out.println("maxDoc: " + indexWriter.maxDoc());
        System.out.println("numDocs (live documents): " + indexWriter.numDocs());
        indexWriter.close();
    }

    /**
     * Test updating the index.
     * @throws Exception
     */
    @Test
    public void testUpdate() throws Exception {
        IndexWriter writer = getIndexWriter();
        Document doc = new Document();
        doc.add(new StringField("id", "1", Field.Store.YES));
        doc.add(new StringField("city", "qingdao", Field.Store.YES));
        doc.add(new TextField("desc", "dsss is a city.", Field.Store.NO));
        // Deletes every document whose id term is "1", then adds the new document
        writer.updateDocument(new Term("id", "1"), doc);
        writer.close();
    }
}

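Deleting from the index

Note:

With large data volumes, use the delete-before-merge approach: it only marks the documents as deleted, and the marked documents are purged on a schedule.

When the data volume is not particularly large, the documents can be removed right away.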
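The difference between the two delete styles can be pictured with a toy model. The sketch below is not Lucene code: `ToyIndex` and all of its members are hypothetical names, and it only mimics the behaviour seen in Demo3, where `maxDoc()` keeps counting documents that are merely marked as deleted while `numDocs()` counts live documents only.

```java
import java.util.ArrayList;
import java.util.BitSet;
import java.util.List;

/** Toy model (hypothetical, not the Lucene API) of mark-then-merge deletion. */
final class ToyIndex {
    private List<String> docs = new ArrayList<>();
    private BitSet deleted = new BitSet();

    void add(String id) {
        docs.add(id);
    }

    /** Marks matching documents as deleted without removing them. */
    void delete(String id) {
        for (int i = 0; i < docs.size(); i++) {
            if (docs.get(i).equals(id)) {
                deleted.set(i);
            }
        }
    }

    /** Physically drops every document that carries a delete mark. */
    void mergeDeletes() {
        List<String> live = new ArrayList<>();
        for (int i = 0; i < docs.size(); i++) {
            if (!deleted.get(i)) {
                live.add(docs.get(i));
            }
        }
        docs = live;
        deleted = new BitSet();
    }

    /** Counts every slot, including documents marked as deleted. */
    int maxDoc() {
        return docs.size();
    }

    /** Counts live documents only. */
    int numDocs() {
        return docs.size() - deleted.cardinality();
    }

    public static void main(String[] args) {
        ToyIndex index = new ToyIndex();
        index.add("1");
        index.add("2");
        index.add("3");
        index.delete("1");
        System.out.println(index.maxDoc() + " / " + index.numDocs()); // 3 / 2
        index.mergeDeletes();
        System.out.println(index.maxDoc() + " / " + index.numDocs()); // 2 / 2
    }
}
```

Marking is cheap because nothing is rewritten, which is why it suits large indexes; the purge step does the expensive rewrite and can be deferred.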

Updating the index

Note: in version 5.3, the terms that existed before the update do not disappear.

Boosting document fields

import java.nio.file.Paths;

import org.apache.lucene.analysis.Analyzer;
import org.apache.lucene.analysis.standard.StandardAnalyzer;
import org.apache.lucene.document.Document;
import org.apache.lucene.document.Field;
import org.apache.lucene.document.StringField;
import org.apache.lucene.document.TextField;
import org.apache.lucene.index.DirectoryReader;
import org.apache.lucene.index.IndexReader;
import org.apache.lucene.index.IndexWriter;
import org.apache.lucene.index.IndexWriterConfig;
import org.apache.lucene.index.Term;
import org.apache.lucene.search.IndexSearcher;
import org.apache.lucene.search.Query;
import org.apache.lucene.search.ScoreDoc;
import org.apache.lucene.search.TermQuery;
import org.apache.lucene.search.TopDocs;
import org.apache.lucene.store.Directory;
import org.apache.lucene.store.FSDirectory;
import org.junit.Before;
import org.junit.Test;

/**
 * Boosting document fields.
 * @author Administrator
 */
public class Demo4 {

    private String ids[] = {"1", "2", "3", "4"};
    private String authors[] = {"Jack", "Marry", "John", "Json"};
    private String positions[] = {"accounting", "technician", "salesperson", "boss"};
    private String titles[] = {"Java is a good language.", "Java is a cross platform language", "Java powerful", "You should learn java"};
    private String contents[] = {
        "If possible, use the same JRE major version at both index and search time.",
        "When upgrading to a different JRE major version, consider re-indexing. ",
        "Different JRE major versions may implement different versions of Unicode,",
        "For example: with Java 1.4, `LetterTokenizer` will split around the character U+02C6,"
    };
    private Directory dir; // index directory

    @Before
    public void setUp() throws Exception {
        dir = FSDirectory.open(Paths.get("E:\\temp\\T224\\lucene\\demo3\\indexDir"));
        IndexWriter writer = getIndexWriter();
        for (int i = 0; i < authors.length; i++) {
            Document doc = new Document();
            doc.add(new StringField("id", ids[i], Field.Store.YES));
            doc.add(new StringField("author", authors[i], Field.Store.YES));
            doc.add(new StringField("position", positions[i], Field.Store.YES));
            TextField textField = new TextField("title", titles[i], Field.Store.YES);
            // Json paid for advertising, so his entry is pushed to the top of the ranking
            if ("boss".equals(positions[i])) {
                textField.setBoost(2f); // set the boost; the default is 1
            }
            doc.add(textField);
            // TextField is tokenized by the analyzer; StringField is indexed as a single token
            doc.add(new TextField("content", contents[i], Field.Store.NO));
            writer.addDocument(doc);
        }
        writer.close();
    }

    private IndexWriter getIndexWriter() throws Exception {
        Analyzer analyzer = new StandardAnalyzer();
        IndexWriterConfig conf = new IndexWriterConfig(analyzer);
        return new IndexWriter(dir, conf);
    }

    @Test
    public void index() throws Exception {
        IndexReader reader = DirectoryReader.open(dir);
        IndexSearcher searcher = new IndexSearcher(reader);
        String fieldName = "title";
        String keyWord = "java";
        Term t = new Term(fieldName, keyWord);
        Query query = new TermQuery(t);
        TopDocs hits = searcher.search(query, 10);
        System.out.println("Keyword '" + keyWord + "' matched " + hits.totalHits + " documents");
        for (ScoreDoc scoreDoc : hits.scoreDocs) {
            Document doc = searcher.doc(scoreDoc.doc);
            System.out.println(doc.get("author"));
        }
        reader.close();
    }
}

Note: boosting the field that contains the keyword helps raise the document's ranking.
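To see why a boost changes the order of the hits, here is a deliberately simplified sketch. It assumes the final score is just a base relevance score multiplied by the boost; real Lucene scoring involves more factors, and `BoostSketch`, `rank`, and the equal base scores are illustrative assumptions rather than anything taken from Lucene.

```java
import java.util.ArrayList;
import java.util.Comparator;
import java.util.List;

/** Simplified ranking model: score = base score x boost (an assumption, not Lucene's formula). */
final class BoostSketch {

    /** Returns the authors ordered by (base score x boost), highest first. */
    static List<String> rank(String[] authors, double[] baseScores, double[] boosts) {
        List<Integer> order = new ArrayList<>();
        for (int i = 0; i < authors.length; i++) {
            order.add(i);
        }
        // List.sort is stable, so equal scores keep their original order
        order.sort(Comparator.comparingDouble((Integer i) -> -baseScores[i] * boosts[i]));
        List<String> ranked = new ArrayList<>();
        for (int i : order) {
            ranked.add(authors[i]);
        }
        return ranked;
    }

    public static void main(String[] args) {
        String[] authors = {"Jack", "Marry", "John", "Json"};
        double[] baseScores = {1.0, 1.0, 1.0, 1.0}; // pretend every title matches "java" equally well
        double[] boosts = {1.0, 1.0, 1.0, 2.0};     // only the "boss" entry is boosted
        System.out.println(rank(authors, baseScores, boosts)); // [Json, Jack, Marry, John]
    }
}
```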

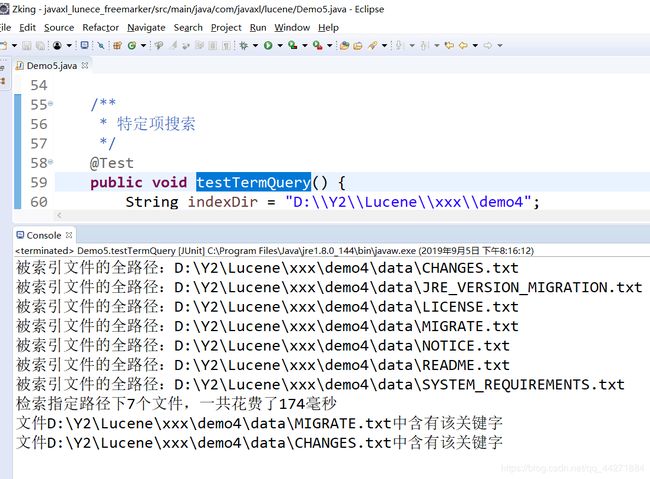

Index search features

import java.io.IOException;
import java.nio.file.Paths;

import org.apache.lucene.document.Document;
import org.apache.lucene.index.DirectoryReader;
import org.apache.lucene.index.IndexReader;
import org.apache.lucene.index.Term;
import org.apache.lucene.search.IndexSearcher;
import org.apache.lucene.search.ScoreDoc;
import org.apache.lucene.search.TermQuery;
import org.apache.lucene.search.TopDocs;
import org.apache.lucene.store.FSDirectory;
import org.junit.Before;
import org.junit.Test;

public class Demo5 {

    @Before
    public void setUp() {
        // Where the index files will be stored
        String indexDir = "E:\\temp\\T224\\lucene\\demo4";
        // Location of the source data
        String dataDir = "E:\\temp\\T224\\lucene\\demo4\\data";
        IndexCreate ic = null;
        try {
            ic = new IndexCreate(indexDir);
            long start = System.currentTimeMillis();
            int num = ic.index(dataDir);
            long end = System.currentTimeMillis();
            System.out.println("Indexed " + num + " files under the given path in " + (end - start) + " ms");
        } catch (Exception e) {
            e.printStackTrace();
        } finally {
            try {
                // ic stays null if the constructor failed
                if (ic != null) {
                    ic.closeIndexWriter();
                }
            } catch (Exception e) {
                e.printStackTrace();
            }
        }
    }

    @Test
    public void testTermQuery() {
        String indexDir = "E:\\temp\\T224\\lucene\\demo4";
        String fld = "contents";
        String text = "indexformattoooldexception";
        // A term is a field name plus a keyword
        Term t = new Term(fld, text);
        TermQuery tq = new TermQuery(t);
        try {
            FSDirectory indexDirectory = FSDirectory.open(Paths.get(indexDir));
            // Note: the index reader is not created with new; it is opened through DirectoryReader
            IndexReader indexReader = DirectoryReader.open(indexDirectory);
            // Index searcher
            IndexSearcher is = new IndexSearcher(indexReader);
            TopDocs hits = is.search(tq, 100);
            // System.out.println(hits.totalHits);
            for (ScoreDoc scoreDoc : hits.scoreDocs) {
                Document doc = is.doc(scoreDoc.doc);
                System.out.println("File " + doc.get("fullPath") + " contains the keyword");
            }
            indexReader.close();
        } catch (IOException e) {
            e.printStackTrace();
        }
    }
}

Query expressions (QueryParser)

// Add this method to Demo5. It additionally needs these imports:
// org.apache.lucene.analysis.standard.StandardAnalyzer,
// org.apache.lucene.queryparser.classic.QueryParser,
// org.apache.lucene.queryparser.classic.ParseException
@Test
public void testQueryParser() {
    String indexDir = "E:\\temp\\T224\\lucene\\demo4";
    // Query parser: which analyzer to use and which field to search by default
    QueryParser queryParser = new QueryParser("contents", new StandardAnalyzer());
    try {
        FSDirectory indexDirectory = FSDirectory.open(Paths.get(indexDir));
        // Note: the index reader is not created with new; it is opened through DirectoryReader
        IndexReader indexReader = DirectoryReader.open(indexDirectory);
        // Index searcher
        IndexSearcher is = new IndexSearcher(indexReader);
        // The parser analyzes the keyword and builds the query
        TopDocs hits = is.search(queryParser.parse("indexformattoooldexception"), 100);
        for (ScoreDoc scoreDoc : hits.scoreDocs) {
            Document doc = is.doc(scoreDoc.doc);
            System.out.println("File " + doc.get("fullPath") + " contains the keyword");
        }
        indexReader.close();
    } catch (IOException e) {
        e.printStackTrace();
    } catch (ParseException e) {
        e.printStackTrace();
    }
}

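Note: the result is the same as with the term search. However, the term search does not go through an analyzer, so its keyword must match the indexed token exactly.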
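The practical consequence is easier to see in a toy model. The sketch below is plain Java, not Lucene: `AnalyzedLookupSketch` and its methods are hypothetical, and `analyze` is only a rough stand-in for an analyzer that splits text and lowercases it. It shows why the term search above uses the all-lowercase keyword, while a parsed query can accept the original spelling.

```java
import java.util.Collections;
import java.util.HashMap;
import java.util.HashSet;
import java.util.Locale;
import java.util.Map;
import java.util.Set;

/** Toy inverted index (hypothetical) contrasting a raw term lookup with an analyzed lookup. */
final class AnalyzedLookupSketch {
    private final Map<String, Set<String>> postings = new HashMap<>();

    /** Stand-in for an analyzer: lowercase, then split on anything that is not a letter or digit. */
    static String[] analyze(String text) {
        return text.toLowerCase(Locale.ROOT).split("[^\\p{L}\\p{N}]+");
    }

    void index(String docName, String text) {
        for (String token : analyze(text)) {
            if (!token.isEmpty()) {
                postings.computeIfAbsent(token, k -> new HashSet<>()).add(docName);
            }
        }
    }

    /** Like a term search: the keyword is used exactly as given. */
    Set<String> termLookup(String term) {
        return postings.getOrDefault(term, Collections.emptySet());
    }

    /** Like a parsed query: the input is analyzed first, then each token is looked up. */
    Set<String> parsedLookup(String input) {
        Set<String> result = new HashSet<>();
        for (String token : analyze(input)) {
            result.addAll(termLookup(token));
        }
        return result;
    }

    public static void main(String[] args) {
        AnalyzedLookupSketch index = new AnalyzedLookupSketch();
        index.index("a.txt", "throws IndexFormatTooOldException on old segments");
        System.out.println(index.termLookup("IndexFormatTooOldException"));   // []
        System.out.println(index.termLookup("indexformattoooldexception"));   // [a.txt]
        System.out.println(index.parsedLookup("IndexFormatTooOldException")); // [a.txt]
    }
}
```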

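Pagination

Option 1

Query everything once and keep the results in the session; when paging, take a slice of the stored collection and display it. The advantage is that only one query is needed. The drawback is memory use, which becomes a real problem because many users are likely to search concurrently.

Option 2

Run a new query every time the user goes to the previous or next page, which costs time. In practice, though, few users click through to the next page.

In both options you obtain the array of hit documents and fetch each hit by its index to read its content.

Recommendation: use option 2.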
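For option 2, each request can ask the searcher for the top `page * pageSize` hits and then keep only the slice that belongs to the requested page. The slicing step is sketched below in plain Java; `PageSketch` and `page` are hypothetical names, and the list of integers merely stands in for the array of hits.

```java
import java.util.Arrays;
import java.util.Collections;
import java.util.List;

/** Slices one page out of an ordered list of hits (hypothetical helper). */
final class PageSketch {

    /** Returns page number `page` (1-based) of `hits`, or an empty list when out of range. */
    static <T> List<T> page(List<T> hits, int page, int pageSize) {
        if (page < 1 || pageSize < 1) {
            throw new IllegalArgumentException("page and pageSize must be >= 1");
        }
        long start = (long) (page - 1) * pageSize; // long avoids int overflow for large pages
        if (start >= hits.size()) {
            return Collections.emptyList();
        }
        int end = (int) Math.min(start + pageSize, hits.size());
        return hits.subList((int) start, end);
    }

    public static void main(String[] args) {
        List<Integer> hits = Arrays.asList(1, 2, 3, 4, 5, 6, 7);
        System.out.println(page(hits, 2, 3)); // [4, 5, 6]
        System.out.println(page(hits, 3, 3)); // [7]
        System.out.println(page(hits, 4, 3)); // []
    }
}
```

Lucene's `IndexSearcher` also offers `searchAfter`, which continues from the last hit of the previous page instead of re-collecting the earlier pages; whether that is worth the extra state depends on how deep users actually page.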

Other query methods

- Numeric range query (NumericRangeQuery)

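import java.nio.file.Paths;

import org.apache.lucene.analysis.Analyzer;
import org.apache.lucene.analysis.standard.StandardAnalyzer;
import org.apache.lucene.document.Document;
import org.apache.lucene.document.Field;
import org.apache.lucene.document.IntField;
import org.apache.lucene.document.StringField;
import org.apache.lucene.document.TextField;
import org.apache.lucene.index.DirectoryReader;
import org.apache.lucene.index.IndexReader;
import org.apache.lucene.index.IndexWriter;
import org.apache.lucene.index.IndexWriterConfig;
import org.apache.lucene.index.Term;
import org.apache.lucene.search.BooleanClause;
import org.apache.lucene.search.BooleanQuery;
import org.apache.lucene.search.IndexSearcher;
import org.apache.lucene.search.NumericRangeQuery;
import org.apache.lucene.search.PrefixQuery;
import org.apache.lucene.search.ScoreDoc;
import org.apache.lucene.search.TopDocs;
import org.apache.lucene.store.FSDirectory;
import org.junit.Before;
import org.junit.Test;

public class Demo6 {

    private int ids[] = {1, 2, 3};
    private String citys[] = {"qingdao", "nanjing", "shanghai"};
    private String descs[] = {
        "Qingdao is a beautiful city.",
        "Nanjing is a city of culture.",
        "Shanghai is a bustling city."
    };
    private FSDirectory dir;

    /**
     * Writes the sample documents before every test.
     * Note: this is the same directory Demo3 writes to; clear it first so that
     * documents from earlier runs do not mix into the results.
     * @throws Exception
     */
    @Before
    public void setUp() throws Exception {
        dir = FSDirectory.open(Paths.get("E:\\temp\\T224\\lucene\\demo2\\indexDir"));
        IndexWriter indexWriter = getIndexWriter();
        for (int i = 0; i < ids.length; i++) {
            Document doc = new Document();
            doc.add(new IntField("id", ids[i], Field.Store.YES));
            doc.add(new StringField("city", citys[i], Field.Store.YES));
            doc.add(new TextField("desc", descs[i], Field.Store.NO));
            indexWriter.addDocument(doc);
        }
        indexWriter.close();
    }

    /**
     * Obtain the index writer.
     * @return a new IndexWriter on dir
     * @throws Exception
     */
    private IndexWriter getIndexWriter() throws Exception {
        Analyzer analyzer = new StandardAnalyzer();
        IndexWriterConfig conf = new IndexWriterConfig(analyzer);
        return new IndexWriter(dir, conf);
    }

    @Test
    public void testNumericRangeQuery() throws Exception {
        IndexReader reader = DirectoryReader.open(dir);
        IndexSearcher is = new IndexSearcher(reader);
        // Matches ids from 1 to 2, both ends inclusive
        NumericRangeQuery<Integer> query = NumericRangeQuery.newIntRange("id", 1, 2, true, true);
        TopDocs hits = is.search(query, 10);
        for (ScoreDoc scoreDoc : hits.scoreDocs) {
            Document doc = is.doc(scoreDoc.doc);
            System.out.println(doc.get("id"));
            System.out.println(doc.get("city"));
            // Prints null: desc was indexed with Field.Store.NO, so its value is not stored
            System.out.println(doc.get("desc"));
        }
        reader.close();
    }
}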

- Prefix query (match strings by their leading characters)

@Test
public void testPrefixQuery() throws Exception {
    IndexReader reader = DirectoryReader.open(dir);
    IndexSearcher is = new IndexSearcher(reader);
    // Matches every city whose value starts with "n"
    PrefixQuery query = new PrefixQuery(new Term("city", "n"));
    TopDocs hits = is.search(query, 10);
    for (ScoreDoc scoreDoc : hits.scoreDocs) {
        Document doc = is.doc(scoreDoc.doc);
        System.out.println(doc.get("id"));
        System.out.println(doc.get("city"));
        // Prints null: desc is not a stored field
        System.out.println(doc.get("desc"));
    }
    reader.close();
}

- Combined query (BooleanQuery), important

@Test
public void testBooleanQuery() throws Exception {
    IndexReader reader = DirectoryReader.open(dir);
    IndexSearcher is = new IndexSearcher(reader);
    NumericRangeQuery<Integer> query1 = NumericRangeQuery.newIntRange("id", 1, 2, true, true);
    PrefixQuery query2 = new PrefixQuery(new Term("city", "s"));
    BooleanQuery.Builder booleanQuery = new BooleanQuery.Builder();
    // Both clauses are MUST, so a document has to satisfy both of them.
    // With the three sample documents nothing matches: the only city starting
    // with "s" is shanghai, whose id is 3. Use Occur.SHOULD for either-or matching.
    booleanQuery.add(query1, BooleanClause.Occur.MUST);
    booleanQuery.add(query2, BooleanClause.Occur.MUST);
    TopDocs hits = is.search(booleanQuery.build(), 10);
    for (ScoreDoc scoreDoc : hits.scoreDocs) {
        Document doc = is.doc(scoreDoc.doc);
        System.out.println(doc.get("id"));
        System.out.println(doc.get("city"));
        // Prints null: desc is not a stored field
        System.out.println(doc.get("desc"));
    }
    reader.close();
}

Chinese word segmentation and highlighting

private Integer ids[] = {1, 2, 3};
private String citys[] = {"青岛", "南京", "上海"};
private String descs[] = {
    "青岛是个美丽的城市。",
    "南京是个有文化的城市。",
    "上海是个繁华的城市。"
};

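When the standard analyzer is run on Chinese text, it treats every single character as a word, which is not the effect we want. In other words, the standard analyzer StandardAnalyzer no longer meets our development needs.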
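The per-character result can be imitated in a few lines of plain Java. This sketch does not call StandardAnalyzer and is not how it is implemented; `UnigramSketch` and `unigrams` are hypothetical names that only reproduce the outcome described above, where each Chinese character becomes a token of its own.

```java
import java.util.ArrayList;
import java.util.List;

/** Imitates single-character tokenization of Chinese text (hypothetical helper). */
final class UnigramSketch {

    /** Emits every Han character as its own token and drops everything else. */
    static List<String> unigrams(String text) {
        List<String> tokens = new ArrayList<>();
        text.codePoints()
            .filter(cp -> Character.UnicodeScript.of(cp) == Character.UnicodeScript.HAN)
            .forEach(cp -> tokens.add(new String(Character.toChars(cp))));
        return tokens;
    }

    public static void main(String[] args) {
        // prints [南, 京, 是, 个, 有, 文, 化, 的, 城, 市]
        System.out.println(unigrams("南京是个有文化的城市。"));
    }
}
```

With tokens like these, a search for 南京 can only be answered by combining the separate tokens 南 and 京, so word-level matching is lost. A dictionary-based analyzer keeps 南京 together as one token.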

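Chinese word segmentation

Dependency

<dependency>
    <groupId>org.apache.lucene</groupId>
    <artifactId>lucene-analyzers-smartcn</artifactId>
    <version>5.3.1</version>
</dependency>

Replace the standard analyzer with the Chinese analyzer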

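// Requires: import org.apache.lucene.analysis.cn.smart.SmartChineseAnalyzer;
public IndexCreate(String indexDir) throws Exception {
    Analyzer analyzer = new SmartChineseAnalyzer();
    IndexWriterConfig conf = new IndexWriterConfig(analyzer);
    // The field in IndexCreate is named indexWriter
    this.indexWriter = new IndexWriter(FSDirectory.open(Paths.get(indexDir)), conf);
}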

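Highlighting

Dependency

<dependency>
    <groupId>org.apache.lucene</groupId>
    <artifactId>lucene-highlighter</artifactId>
    <version>5.3.1</version>
</dependency>

Steps for highlighting:

1. From the query object, create the query scorer (QueryScorer)

2. From the scorer, create the fragmenter that selects the matching fragments

3. Instantiate an HTML formatter

4. Use the HTML formatter and the query scorer to instantiate the Highlighter class that Lucene provides

5. Set the fragmenter created earlier on the highlighter instance

6. Use the analyzer to obtain a TokenStream

7. Use the token stream and the original text to get the highlighted fragment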
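What the steps produce in the end is the original text with the matched terms wrapped in a pair of tags. The plain-Java sketch below shows only that final effect. It is not the Lucene Highlighter, it does no scoring or fragment selection, and `HighlightSketch`, `highlight`, and the `<b>` tags are assumptions chosen for illustration.

```java
import java.util.Arrays;
import java.util.List;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

/** Wraps every occurrence of the given terms in a pair of tags (hypothetical helper). */
final class HighlightSketch {

    static String highlight(String text, List<String> terms, String pre, String post) {
        if (terms.isEmpty()) {
            return text;
        }
        // Build an alternation such as \Qterm1\E|\Qterm2\E so terms are matched literally
        StringBuilder alternation = new StringBuilder();
        for (String term : terms) {
            if (alternation.length() > 0) {
                alternation.append('|');
            }
            alternation.append(Pattern.quote(term));
        }
        Matcher m = Pattern.compile(alternation.toString()).matcher(text);
        StringBuffer out = new StringBuffer();
        while (m.find()) {
            m.appendReplacement(out, Matcher.quoteReplacement(pre + m.group() + post));
        }
        m.appendTail(out);
        return out.toString();
    }

    public static void main(String[] args) {
        // prints <b>南京</b>是一个<b>文化</b>的城市
        System.out.println(highlight("南京是一个文化的城市", Arrays.asList("南京", "文化"), "<b>", "</b>"));
    }
}
```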

Related code:

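import java.io.StringReader;
import java.nio.file.Paths;

import org.apache.lucene.analysis.Analyzer;
import org.apache.lucene.analysis.TokenStream;
import org.apache.lucene.analysis.cn.smart.SmartChineseAnalyzer;
import org.apache.lucene.document.Document;
import org.apache.lucene.document.Field;
import org.apache.lucene.document.IntField;
import org.apache.lucene.document.StringField;
import org.apache.lucene.document.TextField;
import org.apache.lucene.index.DirectoryReader;
import org.apache.lucene.index.IndexReader;
import org.apache.lucene.index.IndexWriter;
import org.apache.lucene.index.IndexWriterConfig;
import org.apache.lucene.queryparser.classic.QueryParser;
import org.apache.lucene.search.IndexSearcher;
import org.apache.lucene.search.Query;
import org.apache.lucene.search.ScoreDoc;
import org.apache.lucene.search.TopDocs;
import org.apache.lucene.search.highlight.Highlighter;
import org.apache.lucene.search.highlight.QueryScorer;
import org.apache.lucene.search.highlight.SimpleHTMLFormatter;
import org.apache.lucene.search.highlight.SimpleSpanFragmenter;
import org.apache.lucene.store.FSDirectory;
import org.junit.Before;
import org.junit.Test;

public class Demo7 {

    private Integer ids[] = { 1, 2, 3 };
    private String citys[] = { "青岛", "南京", "上海" };
    // private String descs[]={
    // "青岛是个美丽的城市。",
    // "南京是个有文化的城市。",
    // "上海是个繁华的城市。"
    // };
    private String descs[] = { "青岛是个美丽的城市。",
        "南京是一个文化的城市南京,简称宁,是江苏省会,地处中国东部地区,长江下游,濒江近海。全市下辖11个区,总面积6597平方公里,2013年建成区面积752.83平方公里,常住人口818.78万,其中城镇人口659.1万人。[1-4] “江南佳丽地,金陵帝王州”,南京拥有着6000多年文明史、近2600年建城史和近500年的建都史,是中国四大古都之一,有“六朝古都”、“十朝都会”之称,是中华文明的重要发祥地,历史上曾数次庇佑华夏之正朔,长期是中国南方的政治、经济、文化中心,拥有厚重的文化底蕴和丰富的历史遗存。[5-7] 南京是国家重要的科教中心,自古以来就是一座崇文重教的城市,有“天下文枢”、“东南第一学”的美誉。截至2013年,南京有高等院校75所,其中211高校8所,仅次于北京上海;国家重点实验室25所、国家重点学科169个、两院院士83人,均居中国第三。[8-10]",
        "上海是个繁华的城市。" };
    private FSDirectory dir;

    /**
     * Writes the sample documents before every test.
     *
     * @throws Exception
     */
    @Before
    public void setUp() throws Exception {
        dir = FSDirectory.open(Paths.get("E:\\temp\\T224\\lucene\\demo2\\indexDir"));
        IndexWriter indexWriter = getIndexWriter();
        for (int i = 0; i < ids.length; i++) {
            Document doc = new Document();
            doc.add(new IntField("id", ids[i], Field.Store.YES));
            doc.add(new StringField("city", citys[i], Field.Store.YES));
            // Stored this time, because the highlighter needs the original text
            doc.add(new TextField("desc", descs[i], Field.Store.YES));
            indexWriter.addDocument(doc);
        }
        indexWriter.close();
    }

    /**
     * Obtain the index writer.
     *
     * @return a new IndexWriter on dir
     * @throws Exception
     */
    private IndexWriter getIndexWriter() throws Exception {
        // Analyzer analyzer = new StandardAnalyzer();
        Analyzer analyzer = new SmartChineseAnalyzer();
        IndexWriterConfig conf = new IndexWriterConfig(analyzer);
        return new IndexWriter(dir, conf);
    }

    /**
     * Empty on purpose: run it, then inspect the generated index with Luke.
     *
     * @throws Exception
     */
    @Test
    public void testIndexCreate() throws Exception {
    }

    /**
     * Test highlighting.
     *
     * @throws Exception
     */
    @Test
    public void testHeight() throws Exception {
        IndexReader reader = DirectoryReader.open(dir);
        IndexSearcher searcher = new IndexSearcher(reader);
        SmartChineseAnalyzer analyzer = new SmartChineseAnalyzer();
        QueryParser parser = new QueryParser("desc", analyzer);
        // Query query = parser.parse("南京文化");
        Query query = parser.parse("南京文明");
        TopDocs hits = searcher.search(query, 100);

        // Query scorer
        QueryScorer queryScorer = new QueryScorer(query);
        // Fragmenter that selects the text fragments matching the scorer
        SimpleSpanFragmenter fragmenter = new SimpleSpanFragmenter(queryScorer);
        // Highlight style: the two arguments are the opening and closing tags placed
        // around each match. They are empty in the source (the tags appear to have been
        // lost), so supply a pair such as "<b>" and "</b>" to see any visible effect.
        SimpleHTMLFormatter htmlFormatter = new SimpleHTMLFormatter("", "");
        // Highlighter
        Highlighter highlighter = new Highlighter(htmlFormatter, queryScorer);
        // Set the fragmenter that decides which fragment gets highlighted
        highlighter.setTextFragmenter(fragmenter);

        for (ScoreDoc scoreDoc : hits.scoreDocs) {
            Document doc = searcher.doc(scoreDoc.doc);
            String desc = doc.get("desc");
            if (desc != null) {
                // A TokenStream is the stream of tokens the analyzer extracts from a document field
                TokenStream tokenStream = analyzer.tokenStream("desc", new StringReader(desc));
                System.out.println("Highlighted fragment: " + highlighter.getBestFragment(tokenStream, desc));
            }
            System.out.println("Full content: " + desc);
        }
        reader.close();
    }
}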

The roles of Lucene's core classes: https://blog.csdn.net/kevinelstri/article/details/52317977

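Comprehensive example

<properties>
    <httpclient.version>4.5.2</httpclient.version>
    <jsoup.version>1.10.1</jsoup.version>
    <lucene.version>5.3.1</lucene.version>
    <ehcache.version>2.10.3</ehcache.version>
    <junit.version>4.12</junit.version>
    <log4j.version>1.2.16</log4j.version>
    <mysql.version>5.1.44</mysql.version>
    <fastjson.version>1.2.47</fastjson.version>
    <struts2.version>2.5.16</struts2.version>
    <servlet.version>4.0.1</servlet.version>
    <jstl.version>1.2</jstl.version>
    <standard.version>1.1.2</standard.version>
    <tomcat-jsp-api.version>8.0.47</tomcat-jsp-api.version>
</properties>

<dependencies>
    <dependency>
        <groupId>junit</groupId>
        <artifactId>junit</artifactId>
        <version>${junit.version}</version>
        <scope>test</scope>
    </dependency>
    <dependency>
        <groupId>mysql</groupId>
        <artifactId>mysql-connector-java</artifactId>
        <version>${mysql.version}</version>
    </dependency>
    <dependency>
        <groupId>org.apache.httpcomponents</groupId>
        <artifactId>httpclient</artifactId>
        <version>${httpclient.version}</version>
    </dependency>
    <dependency>
        <groupId>org.jsoup</groupId>
        <artifactId>jsoup</artifactId>
        <version>${jsoup.version}</version>
    </dependency>
    <dependency>
        <groupId>log4j</groupId>
        <artifactId>log4j</artifactId>
        <version>${log4j.version}</version>
    </dependency>
    <dependency>
        <groupId>net.sf.ehcache</groupId>
        <artifactId>ehcache</artifactId>
        <version>${ehcache.version}</version>
    </dependency>
    <dependency>
        <groupId>com.alibaba</groupId>
        <artifactId>fastjson</artifactId>
        <version>${fastjson.version}</version>
    </dependency>
    <dependency>
        <groupId>org.apache.struts</groupId>
        <artifactId>struts2-core</artifactId>
        <version>${struts2.version}</version>
    </dependency>
    <dependency>
        <groupId>javax.servlet</groupId>
        <artifactId>javax.servlet-api</artifactId>
        <version>${servlet.version}</version>
        <scope>provided</scope>
    </dependency>
    <dependency>
        <groupId>org.apache.lucene</groupId>
        <artifactId>lucene-core</artifactId>
        <version>${lucene.version}</version>
    </dependency>
    <dependency>
        <groupId>org.apache.lucene</groupId>
        <artifactId>lucene-queryparser</artifactId>
        <version>${lucene.version}</version>
    </dependency>
    <dependency>
        <groupId>org.apache.lucene</groupId>
        <artifactId>lucene-analyzers-smartcn</artifactId>
        <version>${lucene.version}</version>
    </dependency>
    <dependency>
        <groupId>org.apache.lucene</groupId>
        <artifactId>lucene-highlighter</artifactId>
        <version>${lucene.version}</version>
    </dependency>
    <dependency>
        <groupId>jstl</groupId>
        <artifactId>jstl</artifactId>
        <version>${jstl.version}</version>
    </dependency>
    <dependency>
        <groupId>taglibs</groupId>
        <artifactId>standard</artifactId>
        <version>${standard.version}</version>
    </dependency>
    <dependency>
        <groupId>org.apache.tomcat</groupId>
        <artifactId>tomcat-jsp-api</artifactId>
        <version>${tomcat-jsp-api.version}</version>
    </dependency>
</dependencies>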

import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

import javax.servlet.http.HttpServletRequest;

import org.apache.lucene.analysis.cn.smart.SmartChineseAnalyzer;
import org.apache.lucene.document.Document;
import org.apache.lucene.index.DirectoryReader;
import org.apache.lucene.queryparser.classic.QueryParser;
import org.apache.lucene.search.IndexSearcher;
import org.apache.lucene.search.Query;
import org.apache.lucene.search.ScoreDoc;
import org.apache.lucene.search.TopDocs;
import org.apache.lucene.search.highlight.Highlighter;
import org.apache.lucene.store.Directory;
import org.apache.struts2.ServletActionContext;

import com.javaxl.blog.dao.BlogDao;
import com.javaxl.blog.util.LuceneUtil;
import com.javaxl.blog.util.PropertiesUtil;
import com.javaxl.blog.util.StringUtils;

/**
 * Uses IndexReader, IndexSearcher and Highlighter.
 * @author Administrator
 */
public class BlogAction {

    private String title;
    private BlogDao blogDao = new BlogDao();

    public String getTitle() {
        return title;
    }

    public void setTitle(String title) {
        this.title = title;
    }

    public String list() {
        try {
            HttpServletRequest request = ServletActionContext.getRequest();
            if (StringUtils.isBlank(title)) {
                // No keyword: list the blogs straight from the database.
                // The element type was lost in the source; Map<String, Object> is assumed here.
                List<Map<String, Object>> blogList = this.blogDao.list(title, null);
                request.setAttribute("blogList", blogList);
            } else {
                Directory directory = LuceneUtil.getDirectory(PropertiesUtil.getValue("indexPath"));
                DirectoryReader reader = LuceneUtil.getDirectoryReader(directory);
                IndexSearcher searcher = LuceneUtil.getIndexSearcher(reader);
                SmartChineseAnalyzer analyzer = new SmartChineseAnalyzer();
                // Analyze the input sentence and match its keywords against the terms in the index
                Query query = new QueryParser("title", analyzer).parse(title);
                Highlighter highlighter = LuceneUtil.getHighlighter(query, "title");
                TopDocs topDocs = searcher.search(query, 100);

                // Process the documents that were hit
                List<Map<String, Object>> blogList = new ArrayList<>();
                Map<String, Object> map = null;
                ScoreDoc[] scoreDocs = topDocs.scoreDocs;
                for (ScoreDoc scoreDoc : scoreDocs) {
                    map = new HashMap<>();
                    Document doc = searcher.doc(scoreDoc.doc);
                    map.put("id", doc.get("id"));
                    String titleHighlighter = doc.get("title");
                    if (StringUtils.isNotBlank(titleHighlighter)) {
                        String fragment = highlighter.getBestFragment(analyzer, "title", titleHighlighter);
                        // getBestFragment returns null when nothing matches; keep the plain title then
                        if (fragment != null) {
                            titleHighlighter = fragment;
                        }
                    }
                    map.put("title", titleHighlighter);
                    map.put("url", doc.get("url"));
                    blogList.add(map);
                }
                request.setAttribute("blogList", blogList);
            }
        } catch (Exception e) {
            e.printStackTrace();
        }
        return "blogList";
    }
}

import java.io.IOException;
import java.nio.file.Paths;
import java.sql.SQLException;
import java.util.List;
import java.util.Map;

import org.apache.lucene.analysis.cn.smart.SmartChineseAnalyzer;
import org.apache.lucene.document.Document;
import org.apache.lucene.document.Field;
import org.apache.lucene.document.StringField;
import org.apache.lucene.document.TextField;
import org.apache.lucene.index.IndexWriter;
import org.apache.lucene.index.IndexWriterConfig;
import org.apache.lucene.store.Directory;
import org.apache.lucene.store.FSDirectory;

import com.javaxl.blog.dao.BlogDao;
import com.javaxl.blog.util.PropertiesUtil;

/**
 * Builds the Lucene index.
 * @author Administrator
 * 1. Build the index with IndexWriter
 * 2. Read the index files and fetch the matching fragments
 * 3. Highlight the matching fragments
 */
public class IndexStarter {

    private static BlogDao blogDao = new BlogDao();

    public static void main(String[] args) {
        IndexWriterConfig conf = new IndexWriterConfig(new SmartChineseAnalyzer());
        Directory d;
        IndexWriter indexWriter = null;
        try {
            d = FSDirectory.open(Paths.get(PropertiesUtil.getValue("indexPath")));
            indexWriter = new IndexWriter(d, conf);
            // Build index entries for all the rows in the database.
            // The element type was lost in the source; Map<String, Object> is assumed here.
            List<Map<String, Object>> list = blogDao.list(null, null);
            for (Map<String, Object> map : list) {
                Document doc = new Document();
                doc.add(new StringField("id", (String) map.get("id"), Field.Store.YES));
                // TextField tokenizes a whole sentence, for example "java培训机构"
                doc.add(new TextField("title", (String) map.get("title"), Field.Store.YES));
                doc.add(new StringField("url", (String) map.get("url"), Field.Store.YES));
                indexWriter.addDocument(doc);
            }
        } catch (IOException e) {
            e.printStackTrace();
        } catch (InstantiationException e) {
            e.printStackTrace();
        } catch (IllegalAccessException e) {
            e.printStackTrace();
        } catch (SQLException e) {
            e.printStackTrace();
        } finally {
            try {
                if (indexWriter != null) {
                    indexWriter.close();
                }
            } catch (IOException e) {
                e.printStackTrace();
            }
        }
    }
}

import java.io.IOException;
import java.nio.file.Paths;

import org.apache.lucene.analysis.Analyzer;
import org.apache.lucene.index.DirectoryReader;
import org.apache.lucene.index.IndexWriter;
import org.apache.lucene.index.IndexWriterConfig;
import org.apache.lucene.index.IndexWriterConfig.OpenMode;
import org.apache.lucene.search.IndexSearcher;
import org.apache.lucene.search.Query;
import org.apache.lucene.search.highlight.Formatter;
import org.apache.lucene.search.highlight.Highlighter;
import org.apache.lucene.search.highlight.QueryTermScorer;
import org.apache.lucene.search.highlight.Scorer;
import org.apache.lucene.search.highlight.SimpleFragmenter;
import org.apache.lucene.search.highlight.SimpleHTMLFormatter;
import org.apache.lucene.store.Directory;
import org.apache.lucene.store.FSDirectory;
import org.apache.lucene.store.RAMDirectory;

/**
 * Lucene utility class.
 * @author Administrator
 */
public class LuceneUtil {

    /**
     * Get the directory object where the index files are stored.
     *
     * @param path file-system path of the index directory
     * @return the directory, or null if it could not be opened
     */
    public static Directory getDirectory(String path) {
        Directory directory = null;
        try {
            directory = FSDirectory.open(Paths.get(path));
        } catch (IOException e) {
            e.printStackTrace();
        }
        return directory;
    }

    /**
     * Keep the index files in memory.
     *
     * @return an in-memory directory
     */
    public static Directory getRAMDirectory() {
        Directory directory = new RAMDirectory();
        return directory;
    }

    /**
     * Directory reader.
     *
     * @param directory index directory
     * @return the reader, or null if it could not be opened
     */
    public static DirectoryReader getDirectoryReader(Directory directory) {
        DirectoryReader reader = null;
        try {
            reader = DirectoryReader.open(directory);
        } catch (IOException e) {
            e.printStackTrace();
        }
        return reader;
    }

    /**
     * Index searcher.
     *
     * @param reader reader opened on the index directory
     * @return a searcher over that reader
     */
    public static IndexSearcher getIndexSearcher(DirectoryReader reader) {
        IndexSearcher indexSearcher = new IndexSearcher(reader);
        return indexSearcher;
    }

    /**
     * Index writer.
     *
     * @param directory index directory
     * @param analyzer analyzer used when indexing
     * @return the writer, or null if it could not be created
     */
    public static IndexWriter getIndexWriter(Directory directory, Analyzer analyzer) {
        IndexWriter iwriter = null;
        try {
            IndexWriterConfig config = new IndexWriterConfig(analyzer);
            config.setOpenMode(OpenMode.CREATE_OR_APPEND);
            // Sort sort=new Sort(new SortField("content", Type.STRING));
            // config.setIndexSort(sort); // sorting
            // Commit automatically when the writer is closed
            config.setCommitOnClose(true);
            // config.setMergeScheduler(new ConcurrentMergeScheduler());
            // config.setIndexDeletionPolicy(new
            // SnapshotDeletionPolicy(NoDeletionPolicy.INSTANCE));
            iwriter = new IndexWriter(directory, config);
        } catch (IOException e) {
            e.printStackTrace();
        }
        return iwriter;
    }

    /**
     * Close the index writer and the directory.
     *
     * @param indexWriter writer to close, may be null
     * @param directory directory to close, may be null
     */
    public static void close(IndexWriter indexWriter, Directory directory) {
        if (indexWriter != null) {
            try {
                indexWriter.close();
            } catch (IOException e) {
                e.printStackTrace();
            }
        }
        if (directory != null) {
            try {
                directory.close();
            } catch (IOException e) {
                e.printStackTrace();
            }
        }
    }

    /**
     * Close the index reader and the directory.
     *
     * @param reader reader to close, may be null
     * @param directory directory to close, may be null
     */
    public static void close(DirectoryReader reader, Directory directory) {
        if (reader != null) {
            try {
                reader.close();
            } catch (IOException e) {
                e.printStackTrace();
            }
        }
        if (directory != null) {
            try {
                directory.close();
            } catch (IOException e) {
                e.printStackTrace();
            }
        }
    }

    /**
     * Highlighter with its tags.
     *
     * @param query query whose terms should be highlighted
     * @param fieldName field the terms belong to
     * @return a configured highlighter
     */
    public static Highlighter getHighlighter(Query query, String fieldName) {
        // The two arguments are the opening and closing tags placed around each match.
        // They are empty in the source (the tags appear to have been lost), so supply
        // a pair such as "<b>" and "</b>" to see any visible effect.
        Formatter formatter = new SimpleHTMLFormatter("", "");
        Scorer fragmentScorer = new QueryTermScorer(query, fieldName);
        Highlighter highlighter = new Highlighter(formatter, fragmentScorer);
        // Fragments of up to 200 characters
        highlighter.setTextFragmenter(new SimpleFragmenter(200));
        return highlighter;
    }
}