Facial expression recognition and mask detection

1. Installing dlib, face_recognition, and opencv-python

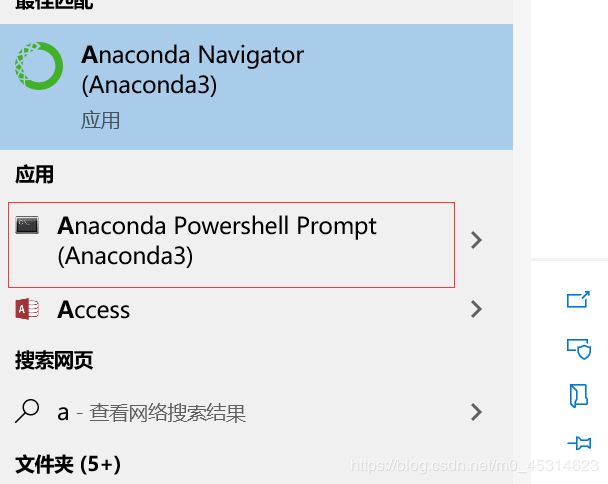

Installing dlib:

Three packages need to be installed: cmake, boost, and dlib.

Install them from the command line:

pip install cmake

pip install boost

pip install dlib
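After installation it can be useful to confirm that the libraries are importable. The following is a minimal sketch using only the standard library; the module list is an assumption based on the libraries named in this post (note that the opencv-python package is imported under the name `cv2`).

```python
from importlib.util import find_spec

# Import names of the libraries this post relies on (assumed list).
# The opencv-python package is imported as "cv2".
REQUIRED_MODULES = ["dlib", "face_recognition", "cv2", "numpy"]


def check_modules(names):
    """Return a dict mapping each module name to True if it can be found."""
    return {name: find_spec(name) is not None for name in names}


if __name__ == "__main__":
    for name, found in check_modules(REQUIRED_MODULES).items():
        print(f"{name}: {'OK' if found else 'missing'}")
```

Any module reported as missing can then be installed with pip before running the listings below.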

2. The dlib 68-point landmark model

Download link: https://pan.baidu.com/s/10ZZNw86SqZL3-0D2XqC6tg

Extraction code: p2fc
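The code in the following sections addresses landmarks by index (for example 48 and 54 for the mouth corners). As a reading aid, here is a small lookup table of the index ranges commonly used with the 68-point scheme. The group names and the helper `group_indices` are this sketch's own; "left" and "right" are from the subject's point of view.

```python
# Index ranges (0-based, inclusive) of the 68-point annotation scheme.
LANDMARK_GROUPS = {
    "jaw": (0, 16),
    "right_eyebrow": (17, 21),
    "left_eyebrow": (22, 26),
    "nose": (27, 35),
    "right_eye": (36, 41),
    "left_eye": (42, 47),
    "outer_lip": (48, 59),
    "inner_lip": (60, 67),
}


def group_indices(name):
    """Return the list of landmark indices that belong to the named group."""
    start, end = LANDMARK_GROUPS[name]
    return list(range(start, end + 1))


print(group_indices("right_eyebrow"))  # [17, 18, 19, 20, 21]
```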

3. Face detection and expression recognition in Python

    import dlib  # face detection and landmark prediction
    import numpy as np  # numerical processing
    import cv2  # image processing (OpenCV)


    class face_emotion():

        def __init__(self):
            # Frontal face detector provided by dlib
            self.detector = dlib.get_frontal_face_detector()
            # dlib's 68-point model, using the pretrained shape predictor
            self.predictor = dlib.shape_predictor("F:/face.dat")
            # Create the cv2 capture object; index 0 selects the default camera
            self.cap = cv2.VideoCapture(0)
            # cap.set(propId, value): set the frame width to 480
            self.cap.set(cv2.CAP_PROP_FRAME_WIDTH, 480)
            # Screenshot counter
            self.cnt = 0

        def learning_face(self):
            # Font for the text drawn on the frame
            font = cv2.FONT_HERSHEY_SIMPLEX

            # cap.isOpened() returns True/False: whether initialisation succeeded
            while self.cap.isOpened():
                # cap.read() returns two values:
                #   a boolean that is False when reading failed or the video ended
                #   the image as a three-dimensional array
                flag, im_rd = self.cap.read()
                if not flag:
                    break

                # Wait 1 ms per frame for a key press; a delay of 0 would block until a key is pressed
                key = cv2.waitKey(1)

                # Convert to grayscale (OpenCV frames are in BGR order)
                img_gray = cv2.cvtColor(im_rd, cv2.COLOR_BGR2GRAY)

                # Detect the faces in the frame; returns the face rectangles
                faces = self.detector(img_gray, 0)

                if len(faces) != 0:
                    # Mark the 68 landmarks on every face.
                    # The loop must not reuse the name `key`, or the key press would be overwritten.
                    for d in faces:
                        # Draw a red rectangle around the face
                        cv2.rectangle(im_rd, (d.left(), d.top()), (d.right(), d.bottom()), (0, 0, 255))
                        # Width of the face bounding box
                        self.face_width = d.right() - d.left()

                        # Predict the coordinates of the 68 landmarks
                        shape = self.predictor(im_rd, d)
                        # Draw a circle on each landmark
                        for i in range(68):
                            cv2.circle(im_rd, (shape.part(i).x, shape.part(i).y), 2, (0, 255, 0), -1, 8)
                            # cv2.putText(im_rd, str(i), (shape.part(i).x, shape.part(i).y), cv2.FONT_HERSHEY_SIMPLEX, 0.5,
                            #             (255, 255, 255))

                        # The relative positions of selected landmarks are the basis for expression recognition
                        mouth_width = (shape.part(54).x - shape.part(48).x) / self.face_width  # how far the mouth is stretched
                        mouth_height = (shape.part(66).y - shape.part(62).y) / self.face_width  # how far the mouth is open

                        # Use the 10 landmarks on the two eyebrows to measure raising and frowning
                        brow_sum = 0  # sum of the heights
                        frown_sum = 0  # sum of the distances between the two eyebrows
                        # Points for the eyebrow line fit, reset for every face
                        line_brow_x = []
                        line_brow_y = []
                        # Points 17-21 are one eyebrow, 22-26 the other (5 points each)
                        for j in range(17, 22):
                            brow_sum += (shape.part(j).y - d.top()) + (shape.part(j + 5).y - d.top())
                            frown_sum += shape.part(j + 5).x - shape.part(j).x
                            line_brow_x.append(shape.part(j).x)
                            line_brow_y.append(shape.part(j).y)

                        # self.brow_k, self.brow_d = self.fit_slr(line_brow_x, line_brow_y)  # eyebrow tilt
                        tempx = np.array(line_brow_x)
                        tempy = np.array(line_brow_y)
                        z1 = np.polyfit(tempx, tempy, 1)  # fit a straight line
                        # Image y grows downwards, so the fitted slope has the opposite sign to the visible tilt
                        self.brow_k = -round(z1[0], 3)

                        brow_height = (brow_sum / 10) / self.face_width  # eyebrow height ratio
                        brow_width = (frown_sum / 5) / self.face_width  # eyebrow distance ratio

                        # Eye openness
                        eye_sum = (shape.part(41).y - shape.part(37).y + shape.part(40).y - shape.part(38).y +
                                   shape.part(47).y - shape.part(43).y + shape.part(46).y - shape.part(44).y)
                        eye_height = (eye_sum / 4) / self.face_width

                        # Case analysis
                        if mouth_height >= 0.03:
                            # Mouth open: either happy or surprised
                            label = "surprised" if eye_height >= 0.056 else "happy"
                        else:
                            # Mouth closed: either neutral or angry
                            label = "angry" if self.brow_k <= -0.3 else "neutral"
                        cv2.putText(im_rd, label, (d.left(), d.bottom() + 20), font, 0.8, (0, 0, 255), 2, 4)

                    # Show the number of faces
                    cv2.putText(im_rd, "Faces: " + str(len(faces)), (20, 50), font, 1, (0, 0, 255), 1, cv2.LINE_AA)
                else:
                    # No face detected
                    cv2.putText(im_rd, "No Face", (20, 50), font, 1, (0, 0, 255), 1, cv2.LINE_AA)

                # Add the key hints
                im_rd = cv2.putText(im_rd, "S: screenshot", (20, 400), font, 0.8, (0, 0, 255), 1, cv2.LINE_AA)
                im_rd = cv2.putText(im_rd, "Q: quit", (20, 450), font, 0.8, (0, 0, 255), 1, cv2.LINE_AA)

                # Press s to save a screenshot
                if key == ord('s'):
                    self.cnt += 1
                    cv2.imwrite("screenshot" + str(self.cnt) + ".jpg", im_rd)
                # Press q to quit
                if key == ord('q'):
                    break

                # Show the frame in a window
                cv2.imshow("camera", im_rd)

            # Release the camera
            self.cap.release()
            # Destroy the windows that were created
            cv2.destroyAllWindows()


    if __name__ == "__main__":
        my_face = face_emotion()
        my_face.learning_face()
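The decision logic in the listing above can be tried without a camera. The sketch below isolates two pieces in plain Python: a least-squares slope (a stand-in for the `np.polyfit(..., 1)` call and the commented-out `fit_slr`), and the threshold rules applied to features that have already been divided by the face width. The function names and the label strings are this sketch's own choices; the thresholds 0.03, 0.056 and -0.3 are taken from the listing.

```python
def fit_slope(xs, ys):
    """Least-squares slope k of the line y = k*x + b through the given points."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    return sxy / sxx


def classify_expression(mouth_height, eye_height, brow_k):
    """Apply the threshold rules to features normalised by the face width."""
    if mouth_height >= 0.03:  # mouth open
        return "surprised" if eye_height >= 0.056 else "happy"
    return "angry" if brow_k <= -0.3 else "neutral"


# Eyebrow points that move down 1 px in the image for every 2 px to the right
brow_k = -fit_slope([0, 2, 4, 6], [10, 11, 12, 13])
print(brow_k)  # -0.5
print(classify_expression(0.01, 0.04, brow_k))  # angry
```

Keeping the rules in a separate function like this makes it easier to adjust the thresholds for a particular camera and face size, which fixed values will probably need.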

4. CNN-based expression recognition

Locating the lips

    # Display the mouth landmarks
    # Draw the positions of someone's lip

    import dlib  # face detection library dlib
    import cv2  # image processing library OpenCV
    from get_features import get_features  # returns the positions of the feature points

    # Raw strings keep the backslashes in Windows paths from being read as escape sequences
    path_test_img = r"C:\Users\ASUS\Desktop\timg.jpg"

    detector = dlib.get_frontal_face_detector()
    predictor = dlib.shape_predictor(r"C:\Users\ASUS\Desktop\face.dat")

    # Get the positions of the lip feature points
    positions_lip = get_features(path_test_img)

    img_rd = cv2.imread(path_test_img)

    # Draw the lip points; the list alternates x and y values
    for i in range(0, len(positions_lip), 2):
        print(positions_lip[i], positions_lip[i+1])
        cv2.circle(img_rd, tuple([positions_lip[i], positions_lip[i+1]]), radius=1, color=(0, 255, 0))

    cv2.namedWindow("img_read", 2)
    cv2.imshow("img_read", img_rd)
    cv2.waitKey(0)
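The `get_features` module is not shown in this post. Judging from the drawing loop, which reads the result as alternating x and y values, and from the 40-dimensional feature mentioned in the next listing, it plausibly returns the 20 mouth landmarks (indices 48 to 67) flattened into one list. The helper below is a hypothetical sketch of that flattening step only; `flatten_lip_points` is an invented name, and the real function may select or normalise the points differently.

```python
def flatten_lip_points(landmarks):
    """Flatten the 20 mouth landmarks (indices 48-67) into [x0, y0, x1, y1, ...].

    `landmarks` is a sequence of 68 (x, y) pairs, for example collected
    from a dlib shape with [(p.x, p.y) for p in shape.parts()].
    """
    if len(landmarks) != 68:
        raise ValueError("expected 68 (x, y) landmarks")
    features = []
    for x, y in landmarks[48:68]:
        features.extend([x, y])
    return features


# Dummy landmarks, only to show the shape of the result
dummy = [(i, 2 * i) for i in range(68)]
print(len(flatten_lip_points(dummy)))  # 40
```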

Determining whether the person is smiling

    # Use the saved models
    import joblib  # sklearn.externals.joblib was removed from recent scikit-learn releases
    from get_features import get_features
    import ML_ways_sklearn
    import cv2

    # Path of the test image (raw string, so backslashes are not treated as escapes)
    path_test_img = r"C:\Users\ASUS\Desktop\timg.jpg"

    # Extract the 40-dimensional feature vector of the single image
    positions_lip_test = get_features(path_test_img)

    # Path of the models
    path_models = "F:/data/data_models/"

    print("The result of " + path_test_img + ":")
    print('\n')

    # ######### LR ###########
    LR = joblib.load(path_models+"model_LR.m")
    ss_LR = ML_ways_sklearn.model_LR()
    X_test_LR = ss_LR.transform([positions_lip_test])
    y_predict_LR = str(LR.predict(X_test_LR)[0]).replace('0', "no smile").replace('1', "with smile")
    print("LR:", y_predict_LR)

    # ######### LSVC ###########
    LSVC = joblib.load(path_models+"model_LSVC.m")
    ss_LSVC = ML_ways_sklearn.model_LSVC()
    X_test_LSVC = ss_LSVC.transform([positions_lip_test])
    y_predict_LSVC = str(LSVC.predict(X_test_LSVC)[0]).replace('0', "no smile").replace('1', "with smile")
    print("LSVC:", y_predict_LSVC)

    # ######### MLPC ###########
    MLPC = joblib.load(path_models+"model_MLPC.m")
    ss_MLPC = ML_ways_sklearn.model_MLPC()
    X_test_MLPC = ss_MLPC.transform([positions_lip_test])
    y_predict_MLPC = str(MLPC.predict(X_test_MLPC)[0]).replace('0', "no smile").replace('1', "with smile")
    print("MLPC:", y_predict_MLPC)

    # ######### SGDC ###########
    SGDC = joblib.load(path_models+"model_SGDC.m")
    ss_SGDC = ML_ways_sklearn.model_SGDC()
    X_test_SGDC = ss_SGDC.transform([positions_lip_test])
    y_predict_SGDC = str(SGDC.predict(X_test_SGDC)[0]).replace('0', "no smile").replace('1', "with smile")
    print("SGDC:", y_predict_SGDC)

    img_test = cv2.imread(path_test_img)
    img_height = int(img_test.shape[0])
    img_width = int(img_test.shape[1])

    # Show the results on the image.
    # putText expects the origin as (x, y), so x comes from the width and y from the height.
    font = cv2.FONT_HERSHEY_SIMPLEX
    x_text = int(img_width/10)
    y_step = int(img_height/10)
    cv2.putText(img_test, "LR: "+y_predict_LR, (x_text, y_step), font, 0.8, (84, 255, 159), 1, cv2.LINE_AA)
    cv2.putText(img_test, "LSVC: "+y_predict_LSVC, (x_text, y_step*2), font, 0.8, (84, 255, 159), 1, cv2.LINE_AA)
    cv2.putText(img_test, "MLPC: "+y_predict_MLPC, (x_text, y_step*3), font, 0.8, (84, 255, 159), 1, cv2.LINE_AA)
    cv2.putText(img_test, "SGDC: "+y_predict_SGDC, (x_text, y_step*4), font, 0.8, (84, 255, 159), 1, cv2.LINE_AA)

    cv2.namedWindow("img", 2)
    cv2.imshow("img", img_test)
    cv2.waitKey(0)
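Each classifier above receives its input through an `ss_*.transform(...)` call. The `ML_ways_sklearn` module is not shown, so what those objects are is an assumption; if they are standard scalers fitted on the training data, as the `ss_` prefix suggests, the transformation is a per-feature z-score. A minimal plain-Python sketch of that step, with made-up numbers:

```python
def standardize(sample, means, stds):
    """Z-score each feature: (value - training mean) / training standard deviation."""
    if not (len(sample) == len(means) == len(stds)):
        raise ValueError("sample, means and stds must have the same length")
    return [(v - m) / s for v, m, s in zip(sample, means, stds)]


# Hypothetical 3-feature example; a real sample would have 40 values
print(standardize([10.0, 20.0, 30.0], [8.0, 20.0, 40.0], [2.0, 5.0, 10.0]))
# [1.0, 0.0, -1.0]
```

The point of the sketch is that the test sample must be scaled with the statistics learned from the training set, not with statistics computed from the test image itself.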

5. Mask detection

    import cv2

    detector = cv2.CascadeClassifier('E:/opencv/opencv/build/etc/haarcascades/haarcascade_frontalface_default.xml')
    mask_detector = cv2.CascadeClassifier('F:/cascade.xml')

    cap = cv2.VideoCapture(0)

    while True:
        ret, img = cap.read()
        if not ret:
            # Stop when no frame can be read
            break
        gray = cv2.cvtColor(img, cv2.COLOR_BGR2GRAY)
        faces = detector.detectMultiScale(gray, 1.1, 3)
        # A face region could be cropped with img[y:y+h, x:x+w], i.e. [y0:y1, x0:x1].
        # Here the mask detector runs on the whole frame, but only when a face was found.
        if len(faces) > 0:
            mask_face = mask_detector.detectMultiScale(gray, 1.1, 5)
            for (x2, y2, w2, h2) in mask_face:
                # Arguments: image, top-left corner, bottom-right corner, colour, thickness
                cv2.rectangle(img, (x2, y2), (x2 + w2, y2 + h2), (0, 0, 255), 2)
        cv2.imshow('mask', img)
        # Press q to leave the loop so that the cleanup below is reached
        if cv2.waitKey(3) == ord('q'):
            break

    cap.release()
    cv2.destroyAllWindows()
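The listing mentions cropping a face region with `[y0:y1, x0:x1]` but runs the mask cascade on the full frame. If the cascade were run on such a crop instead (a variation, not what the listing does), the rectangles it returns would be relative to the crop and would have to be shifted before drawing on the frame. A small sketch of that coordinate shift, with a hypothetical helper name:

```python
def to_frame_coords(roi_boxes, roi_x, roi_y):
    """Shift (x, y, w, h) boxes found inside a crop back into full-frame coordinates.

    roi_x and roi_y are the frame coordinates of the crop's top-left corner.
    """
    return [(x + roi_x, y + roi_y, w, h) for (x, y, w, h) in roi_boxes]


# A crop whose top-left corner sits at (100, 50) in the frame
print(to_frame_coords([(10, 20, 30, 40)], 100, 50))  # [(110, 70, 30, 40)]
```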

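cascade.xml is the trained classifier. Link:

https://github.com/demon-12/source

opencv/opencv/build/etc/haarcascades/haarcascade_frontalface_default.xml is the frontal-face detection XML file that ships with OpenCV. OpenCV also includes other cascades, for example for smile detection, cat-face detection, and eye detection.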
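To avoid hard-coding an installation path such as the one above, the bundled cascades can be located programmatically. The sketch below assumes the pip-installed opencv-python package, which exposes the cascade directory as `cv2.data.haarcascades`; the helper name `cascade_path` and the short keys in the dictionary are this sketch's own.

```python
import os

# File names of some cascades distributed with OpenCV
BUNDLED_CASCADES = {
    "face": "haarcascade_frontalface_default.xml",
    "smile": "haarcascade_smile.xml",
    "cat_face": "haarcascade_frontalcatface.xml",
    "eye": "haarcascade_eye.xml",
}


def cascade_path(kind, base_dir=None):
    """Return the full path of a bundled cascade file.

    If base_dir is not given, the directory reported by the
    opencv-python package is used.
    """
    if base_dir is None:
        import cv2  # imported lazily, only needed for the default directory
        base_dir = cv2.data.haarcascades
    return os.path.join(base_dir, BUNDLED_CASCADES[kind])
```

With OpenCV installed, a classifier could then be created with `cv2.CascadeClassifier(cascade_path("eye"))`.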