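Flink Beginner Notes (1): Installing and Deploying Flink

Table of Contents

- 1. Installing Flink (1)

- 2. Flink Standalone Mode Deployment

- 2.1. Editing the Configuration Files

- 3. YARN Mode Installation

- 3.1. Installing Hadoop

- 3.1.1. Downloading and Extracting Hadoop

- 3.1.2. Configuring Hadoop

- 3.1.3. Starting Hadoop

- 3.1.4. Starting Hadoop prompts for a password repeatedly, which is tedious, so configure passwordless SSH login on Linux.

- 3.2. Submitting Jobs with Flink on YARN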

Flink Installation and Configuration

1. Installing Flink (1)

1. Download Flink

1. Official website

2. Tsinghua mirror

2. Extract the archive

$ tar -zxvf flink-1.8.1-bin-scala_2.11.tgz

$ mv flink-1.8.1 flink
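The extract-and-rename step can be wrapped so that it is safe to re-run. This is only a minimal sketch: it assumes the archive unpacks into a single top-level directory (as flink-1.8.1-bin-scala_2.11.tgz does), and the helper name unpack_as is hypothetical, not something shipped with Flink.

```shell
#!/bin/sh
# unpack_as ARCHIVE TARGET: extract a .tgz whose contents sit under one
# top-level directory, then rename that directory to TARGET.
# Hypothetical helper, not part of Flink.
unpack_as() {
    archive=$1
    target=$2
    if [ -e "$target" ]; then
        echo "unpack_as: $target already exists, skipping" >&2
        return 0
    fi
    # First path component of the first archive entry, e.g. flink-1.8.1
    top=$(tar -tzf "$archive" | head -n 1 | cut -d/ -f1)
    [ -n "$top" ] || return 1
    tar -xzf "$archive" && mv "$top" "$target"
}
```

With the function loaded, `unpack_as flink-1.8.1-bin-scala_2.11.tgz flink` does the same as the two commands above and does nothing if flink/ is already there.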

3. Start Flink

# Command to start Flink

$ flink/bin/start-cluster.sh

# Check the running processes

[hadoop@192 app]$ jps

10003 StandaloneSessionClusterEntrypoint

10521 Jps

10447 TaskManagerRunner

4. Check the web UI

Open a browser and go to http://192.168.154.130:8081

5. Run a Flink example

flink/bin/flink run flink/examples/batch/WordCount.jar --input /home/hadoop/file/test.txt --output /home/hadoop/file/output.txt
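To sanity-check the result, the same counts can be produced with a plain shell pipeline. This is a rough sketch, not the example's actual code: it assumes the bundled WordCount lower-cases each line and splits on non-word characters, and the function name wordcount is made up for illustration. Flink does not sort its output, so sort both sides before comparing.

```shell
#!/bin/sh
# wordcount: read text on stdin, print "word count" lines sorted by word.
# Approximates the assumed tokenization: lower-case, split on runs of
# characters other than letters, digits and underscore.
wordcount() {
    tr '[:upper:]' '[:lower:]' |
        tr -cs 'a-z0-9_' '\n' |
        grep -v '^$' |
        sort | uniq -c |
        awk '{ print $2, $1 }'
}
```

For example, `wordcount < /home/hadoop/file/test.txt` can be compared against `sort /home/hadoop/file/output.txt`.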

2. Flink Standalone Mode Deployment

2.1. Editing the Configuration Files

1. Edit flink-conf.yaml

$ vim conf/flink-conf.yaml

# Host that runs the JobManager

jobmanager.rpc.address: hadoop-master
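If the edit has to be repeated on several machines, it can be scripted instead of done in vim. A minimal sketch, assuming flink-conf.yaml only contains flat "key: value" lines; set_conf is a hypothetical helper, not a Flink tool.

```shell
#!/bin/sh
# set_conf FILE KEY VALUE: replace the "KEY: ..." line in FILE, or append
# the line if the key is absent. Commented-out keys are left untouched.
# Hypothetical helper for flat "key: value" files.
set_conf() {
    file=$1 key=$2 value=$3
    tmp=$(mktemp) || return 1
    awk -v k="$key" -v v="$value" '
        index($0, k ":") == 1 { print k ": " v; found = 1; next }
        { print }
        END { if (!found) print k ": " v }
    ' "$file" > "$tmp" && cat "$tmp" > "$file"
    rc=$?
    rm -f "$tmp"
    return $rc
}
```

Usage: `set_conf conf/flink-conf.yaml jobmanager.rpc.address hadoop-master`. Writing through a temporary file and copying back keeps the original file's permissions.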

2. Edit slaves

# List the worker hostnames, one per line; this is a single-machine deployment, so there is only one entry

hadoop-master

3. Hostname resolution

On the Windows machine used to open the web UI, edit the file c:\windows\system32\drivers\etc\hosts

and add:

192.168.193.128 hadoop-master
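The Linux machine itself must also be able to resolve hadoop-master. The notes only show the Windows hosts file; assuming the Linux side uses /etc/hosts in the same format, the entry can be added idempotently with a small helper (add_host is a hypothetical name):

```shell
#!/bin/sh
# add_host FILE IP NAME: append "IP NAME" to a hosts-format FILE unless a
# non-comment line already lists NAME. Hypothetical helper.
add_host() {
    file=$1 ip=$2 name=$3
    if awk -v n="$name" '
        /^[[:space:]]*#/ { next }
        { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
        END { exit !found }
    ' "$file"; then
        return 0    # already present, nothing to do
    fi
    printf '%s %s\n' "$ip" "$name" >> "$file"
}
```

Usage (as root, since /etc/hosts is not writable by normal users): `add_host /etc/hosts 192.168.193.128 hadoop-master`.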

4. Start the cluster

$ flink/bin/start-cluster.sh

5. Check the web UI

http://hadoop-master:8081/#/overview

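3. YARN Mode Installation

3.1. Installing Hadoop

3.1.1. Downloading and Extracting Hadoop

1. Download Hadoop

Vanilla Apache Hadoop is rarely used in production; developers usually deploy a Hadoop distribution instead, here Cloudera Hadoop (CDH).

CDH 5.4.3 download page

[CDH 5.4.3 documentation]

Choose hadoop-2.6.0-cdh5.4.3/

2. Extract the archive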

$ tar -zxvf hadoop-2.6.0-cdh5.4.3.tar.gz

$ mv hadoop-2.6.0-cdh5.4.3 hadoop

3. Set JAVA_HOME

Hadoop is written in Java and needs a Java installation to run.

- Edit hadoop-env.sh

export JAVA_HOME=/home/hadoop/app/jdk

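3.1.2. Configuring Hadoop

Hadoop needs a few configuration files set up before it can start and run. They mainly set the HDFS replication factor, tell MapReduce to run on YARN, give the address of YARN's master (the ResourceManager), and define how reducers fetch their data.

The configuration files are in /home/hadoop/app/hadoop/etc/hadoop

1. Configure core-site.xml

- First create the directory for temporary files

mkdir /home/hadoop/app/hadoop/data

- Then edit core-site.xml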

<property>

<name>fs.defaultFS</name>

<value>hdfs://hadoop-master:8020</value>

</property>

<property>

<name>hadoop.tmp.dir</name>

<value>/home/hadoop/app/hadoop/data/tmp</value>

</property>

2. Configure hdfs-site.xml

<property>

<name>dfs.replication</name>

<value>1</value>

</property>

<property>

<name>dfs.namenode.secondary.http-address</name>

<value>hadoop-master:50090</value>

</property>

3. Configure mapred-site.xml

- First rename the template file

$ mv mapred-site.xml.template mapred-site.xml

- Then edit mapred-site.xml

<configuration>

<property>

<name>mapreduce.framework.name</name>

<value>yarn</value>

</property>

</configuration>

4. Configure yarn-site.xml

<property>

<name>yarn.resourcemanager.hostname</name>

<value>hadoop-master</value>

</property>

<property>

<name>yarn.nodemanager.aux-services</name>

<value>mapreduce_shuffle</value>

</property>

5. Edit slaves

hadoop-master

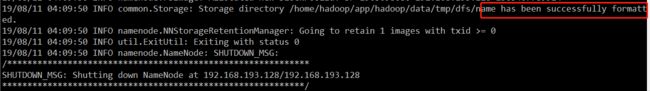
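Each property block in this section has to sit inside the file's configuration element. As a rough sketch, a complete site file can be generated from name/value pairs; the helpers hadoop_property and hadoop_site are hypothetical, and no XML escaping is done, so values are assumed not to contain & or <.

```shell
#!/bin/sh
# hadoop_property NAME VALUE: print one <property> element.
# Hypothetical helper; performs no XML escaping.
hadoop_property() {
    printf '  <property>\n    <name>%s</name>\n    <value>%s</value>\n  </property>\n' "$1" "$2"
}

# hadoop_site NAME VALUE [NAME VALUE ...]: print a complete *-site.xml
# document containing one property per NAME/VALUE pair.
hadoop_site() {
    printf '<?xml version="1.0"?>\n<configuration>\n'
    while [ $# -ge 2 ]; do
        hadoop_property "$1" "$2"
        shift 2
    done
    printf '</configuration>\n'
}
```

For example, `hadoop_site fs.defaultFS hdfs://hadoop-master:8020 hadoop.tmp.dir /home/hadoop/app/hadoop/data/tmp > core-site.xml` writes the core-site.xml described above. Once Hadoop is installed, `bin/hdfs getconf -confKey fs.defaultFS` shows the value Hadoop actually picked up.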

3.1.3. Starting Hadoop

1. Format the NameNode, then start Hadoop

bin/hdfs namenode -format

sbin/start-all.sh

[hadoop@hadoop-master hadoop]$ jps

2721 TaskManagerRunner

3524 SecondaryNameNode

3924 NodeManager

3972 Jps

3258 NameNode

3658 ResourceManager

2285 StandaloneSessionClusterEntrypoint

3374 DataNode
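Rather than reading the jps listing by eye, the expected daemons can be checked by script. A minimal sketch, assuming jps prints one "pid name" pair per line as in the output above; missing_daemons is a hypothetical helper.

```shell
#!/bin/sh
# missing_daemons NAME...: read `jps` output on stdin and print every NAME
# that does not appear as a process name. Returns 0 only if none are missing.
# Hypothetical helper.
missing_daemons() {
    listing=$(cat)
    rc=0
    for name in "$@"; do
        # Second column of each jps line is the process (main class) name
        if ! printf '%s\n' "$listing" |
            awk -v n="$name" '$2 == n { ok = 1 } END { exit !ok }'; then
            echo "$name"
            rc=1
        fi
    done
    return $rc
}
```

Usage: `jps | missing_daemons NameNode DataNode SecondaryNameNode ResourceManager NodeManager`. No output means all five Hadoop daemons are up; the same check works for the Flink processes StandaloneSessionClusterEntrypoint and TaskManagerRunner.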

3.1.4. Starting Hadoop prompts for a password repeatedly, which is tedious, so configure passwordless SSH login on Linux.

$ ssh-keygen

$ cd .ssh

$ ls

id_rsa id_rsa.pub

$ cat id_rsa.pub >> authorized_keys

# Set permissions:

chmod 600 authorized_keys

$ ssh localhost

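[Note] If the .ssh directory will be copied to other hosts, do not run this command yet. Running it creates a known_hosts file under .ssh/, which causes errors after the directory is copied to other hosts.

If the key is set up correctly, this logs in to localhost directly, without a password prompt.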

hadoop@hadoop1:~/.ssh$ ls

authorized_keys id_rsa id_rsa.pub

[Note] Copy to the other hosts

scp -r .ssh hadoop@hadoop1:/home/hadoop
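The key-authorization steps can be made repeatable, so that running them twice does not add the key twice. A minimal sketch with a hypothetical helper authorize_key; it also sets the .ssh directory to mode 700, which sshd normally expects alongside the 600 on authorized_keys.

```shell
#!/bin/sh
# authorize_key SSH_DIR PUBKEY_FILE: append the public key to
# SSH_DIR/authorized_keys unless it is already listed, then tighten
# permissions. Hypothetical helper.
authorize_key() {
    dir=$1 pub=$2
    mkdir -p "$dir" || return 1
    chmod 700 "$dir" || return 1
    touch "$dir/authorized_keys"
    # -x whole-line match, -F fixed string, -f read the pattern from the key file
    if ! grep -qxFf "$pub" "$dir/authorized_keys"; then
        cat "$pub" >> "$dir/authorized_keys"
    fi
    chmod 600 "$dir/authorized_keys"
}
```

Usage: `authorize_key ~/.ssh ~/.ssh/id_rsa.pub`. For remote machines, `ssh-copy-id hadoop@hadoop1` installs only the public key over SSH and avoids copying the private key around.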

3.2. Submitting Jobs with Flink on YARN

Start Hadoop: HDFS first, then YARN.

$ sbin/start-dfs.sh

$ sbin/start-yarn.sh

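[Note] Hadoop monitoring pages

http://hadoop-master:8088/ (YARN monitoring page)

http://hadoop-master:50070/ (HDFS monitoring page)

Submit the job from the hadoop-master node. A long-running YARN session can be started with the yarn-session.sh script in the bin directory of the Flink installation; the command below instead submits a single job directly to YARN with -m yarn-cluster.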

./bin/flink run -m yarn-cluster ./examples/batch/WordCount.jar
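Flink's YARN client finds the cluster through the Hadoop configuration directory, read from YARN_CONF_DIR or HADOOP_CONF_DIR. The notes do not show that variable being set, so a pre-flight check before submitting can save a confusing error. This is a sketch only; yarn_conf_ok is a hypothetical helper, and which files it checks for is my assumption.

```shell
#!/bin/sh
# yarn_conf_ok: succeed if YARN_CONF_DIR or HADOOP_CONF_DIR is set and the
# directory contains yarn-site.xml and core-site.xml. Hypothetical helper.
yarn_conf_ok() {
    dir=${YARN_CONF_DIR:-${HADOOP_CONF_DIR:-}}
    if [ -z "$dir" ]; then
        echo "neither YARN_CONF_DIR nor HADOOP_CONF_DIR is set" >&2
        return 1
    fi
    for f in yarn-site.xml core-site.xml; do
        if [ ! -f "$dir/$f" ]; then
            echo "missing $dir/$f" >&2
            return 1
        fi
    done
}
```

With the layout used in these notes, that would be `export HADOOP_CONF_DIR=/home/hadoop/app/hadoop/etc/hadoop`, followed by `yarn_conf_ok && ./bin/flink run -m yarn-cluster ./examples/batch/WordCount.jar`.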