Stereo Camera Calibration and Distance Measurement (Ubuntu 16.04 + OpenCV)

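After recognizing an object I also need to measure its distance, but cameras that output both a depth map and an RGB image are all expensive, so I bought a stereo (binocular) camera. It looks like this: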

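I bought it on Taobao for only 35 yuan… It doesn't even have a case, so I just stuck it onto my laptop (please ignore the messy background).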

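The seller added me to a chat group where the instructions for opening the camera were written out clearly, including calibration and distance measurement, so there weren't many problems.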

Once the camera is running, its view looks like this:

The code is short as well. (The camera comes with a .sh script that starts it; I'd guess it works as a kind of driver.)

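Below I go through the calibration and distance-measurement process. I won't cover the underlying theory here (I'll write that up later when I have time); this post is mainly code and result images.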

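Calibration:

Things to watch out for:

1. Take about 30 to 40 image pairs. At first I took only 10, and when I measured distances the numbers were wrong no matter what I did.

2. Mount the camera firmly and keep it level. Photograph the calibration board (the chessboard) several times at different angles and distances.

If, after calibration, the computed depth map barely lines up with the color image, recalibrate. Try hard not to hold the calibration sheet in your hand; it is much better to stick it onto a rigid board.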
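One way to put a number on "doesn't line up" is to compare rows. After rectification, a feature should sit on the same row in the left and right images. The sketch below is plain C++ with no OpenCV; `Pixel` and `meanRowError` are hypothetical names. It averages the vertical offset between matched points, which is similar in spirit to the average epipolar error that OpenCV's `stereo_calib` sample reports. It assumes you already have matched point pairs, for example chessboard corners found in both rectified images.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// A pixel position in a rectified image (hypothetical helper type).
struct Pixel {
    double x;
    double y;
};

// Mean absolute row (y) offset between matched points in the rectified left
// and right images. After a good rectification this should be close to 0.
double meanRowError(const std::vector<Pixel>& left,
                    const std::vector<Pixel>& right) {
    assert(left.size() == right.size() && !left.empty());
    double sum = 0.0;
    for (std::size_t i = 0; i < left.size(); ++i) {
        sum += std::fabs(left[i].y - right[i].y);
    }
    return sum / static_cast<double>(left.size());
}
```

If this average comes out well above a pixel or so, the calibration is probably worth redoing; the exact threshold is a judgment call.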

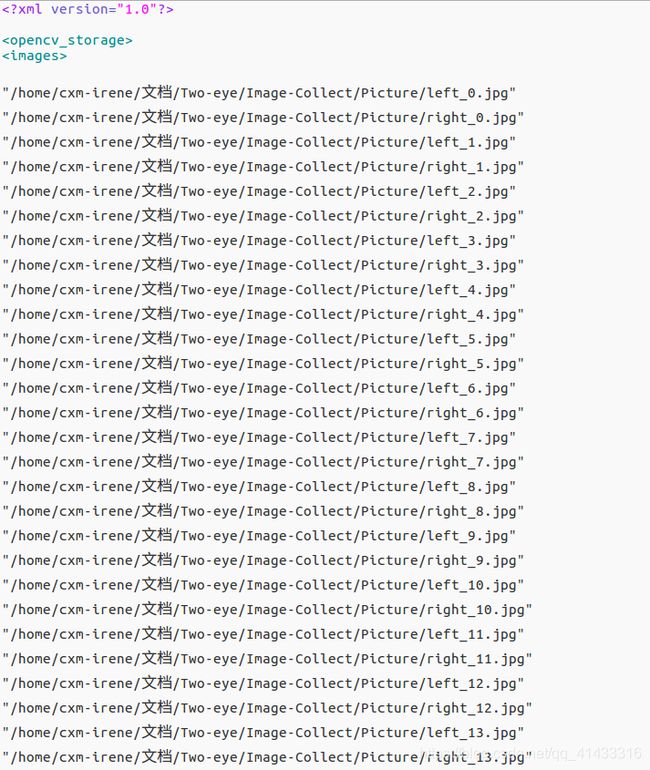

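After capturing images with the left and right cameras, write the absolute paths of the images into an XML file, as shown below:

If you write this XML file by hand, don't forget these two lines at the end: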
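Assume the list follows the layout used by OpenCV's `stereo_calib` sample, with left and right paths alternating inside `<imagelist>`. In that case it can also be generated from code, so the closing tags are never forgotten. Below is a minimal sketch; `writeImageList` is a hypothetical helper name, and the layout is an assumption, not a copy of the author's file.

```cpp
#include <cassert>
#include <cstddef>
#include <ostream>
#include <sstream>
#include <string>
#include <vector>

// Writes a stereo image list, assuming the stereo_calib-style layout:
// alternating left/right paths inside <imagelist>, then the closing tags.
void writeImageList(std::ostream& out,
                    const std::vector<std::string>& leftPaths,
                    const std::vector<std::string>& rightPaths) {
    assert(leftPaths.size() == rightPaths.size());
    out << "<?xml version=\"1.0\"?>\n";
    out << "<opencv_storage>\n";
    out << "<imagelist>\n";
    for (std::size_t i = 0; i < leftPaths.size(); ++i) {
        out << '"' << leftPaths[i] << "\"\n";   // left image of pair i
        out << '"' << rightPaths[i] << "\"\n";  // right image of pair i
    }
    out << "</imagelist>\n";
    out << "</opencv_storage>\n";
}
```

In practice you would pass a `std::ofstream` opened on the path your calibration program reads, with the left_N.jpg / right_N.jpg paths in matching order.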

Here is the calibration code (it does not include opening the camera and taking the photos):

#if 1

The only things you need to change are a few paths and the camera parameters:

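#define w 9 // number of inner corners (black/white intersections) along the chessboard width

#define h 6 // number of inner corners (black/white intersections) along the chessboard height

const float chessboardSquareSize = 24.6f; // side length of one chessboard square, in mm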
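These three values define the board model. Calibration code typically turns them into a list of 3D corner coordinates, one list per image, with the board lying in the z = 0 plane. Because the coordinates are in mm, the translation T, and therefore every distance computed later, also comes out in mm. Below is a dependency-free sketch of that step. `Point3` stands in for `cv::Point3f`, and `boardCorners` is a hypothetical name.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical stand-in for cv::Point3f, so the sketch needs no OpenCV.
struct Point3 {
    float x, y, z;
};

// 3D positions of the inner chessboard corners in the board's own frame
// (z = 0), row by row, left to right, in the units of squareSize (mm here).
std::vector<Point3> boardCorners(int cornersW, int cornersH, float squareSize) {
    std::vector<Point3> pts;
    pts.reserve(static_cast<std::size_t>(cornersW) * static_cast<std::size_t>(cornersH));
    for (int row = 0; row < cornersH; ++row) {
        for (int col = 0; col < cornersW; ++col) {
            pts.push_back({col * squareSize, row * squareSize, 0.0f});
        }
    }
    return pts;
}
```

For the 9 × 6 board above this yields 54 points. They are in the same row-by-row order that `findChessboardCorners` uses for the detected image corners.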

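Then just run it.

While it runs, it shows images like this:

An image like this means the photo is valid. When the program finishes, it generates an intrinsics.yml file containing the camera parameters: the left and right intrinsic matrices, the left and right distortion coefficients, and the translation and rotation between the two cameras. Its contents are shown below: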
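Here is what those parameters mean. Each intrinsic matrix has the form [fx 0 cx; 0 fy cy; 0 0 1]. With OpenCV's default model, the distortion vector holds (k1, k2, p1, p2, k3). The sketch below projects a 3D point in the camera frame to a pixel using that model. It assumes the 5-coefficient form (check how many coefficients your intrinsics.yml actually stores), and `Intrinsics` and `projectPoint` are hypothetical names.

```cpp
#include <array>
#include <cassert>
#include <cmath>

// Entries of a camera matrix [fx 0 cx; 0 fy cy; 0 0 1].
struct Intrinsics {
    double fx, fy, cx, cy;
};

// Pinhole projection plus radial (k1, k2, k3) and tangential (p1, p2)
// distortion. (X, Y, Z) is in the camera frame with Z > 0.
std::array<double, 2> projectPoint(const Intrinsics& K,
                                   const std::array<double, 5>& D,
                                   double X, double Y, double Z) {
    const double k1 = D[0], k2 = D[1], p1 = D[2], p2 = D[3], k3 = D[4];
    const double x = X / Z, y = Y / Z;  // normalized image coordinates
    const double r2 = x * x + y * y;
    const double radial = 1.0 + k1 * r2 + k2 * r2 * r2 + k3 * r2 * r2 * r2;
    const double xd = x * radial + 2.0 * p1 * x * y + p2 * (r2 + 2.0 * x * x);
    const double yd = y * radial + p1 * (r2 + 2.0 * y * y) + 2.0 * p2 * x * y;
    return {K.fx * xd + K.cx, K.fy * yd + K.cy};
}
```

With zero distortion, a point on the optical axis lands exactly on (cx, cy).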

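With these parameters we can move on to distance measurement.

Distance measurement

I tried both the BM and the SGBM algorithm; in both cases all parameters are read directly from the intrinsics.yml file.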
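Both algorithms search along the same row of the right image for the best match to each pixel of the rectified left image. The simplified sketch below shows the core of block matching on a single row: a sum of absolute differences (SAD) over a small window for each candidate disparity. It is only an illustration, not OpenCV's `StereoBM`, which works on 2-D windows with prefiltering and extra checks such as the uniqueness ratio. SGBM additionally aggregates matching costs along several directions with penalties for disparity changes. This tends to give smoother disparity maps, at a higher computational cost. `bestDisparity` is a hypothetical name.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdlib>
#include <limits>
#include <vector>

// 1-D SAD block matching: for pixel x of the left row, try shifts
// d = 0..maxDisp-1 into the right row (a point at x_left sits at
// x_left - d in the right image) and return the d with the lowest cost.
int bestDisparity(const std::vector<int>& leftRow,
                  const std::vector<int>& rightRow,
                  int x, int half, int maxDisp) {
    assert(leftRow.size() == rightRow.size());
    assert(x - half >= 0 && x + half < static_cast<int>(leftRow.size()));
    int best = 0;
    long bestCost = std::numeric_limits<long>::max();
    for (int d = 0; d < maxDisp; ++d) {
        if (x - d - half < 0) break;  // shifted window would leave the image
        long cost = 0;
        for (int k = -half; k <= half; ++k) {
            cost += std::abs(leftRow[x + k] - rightRow[x - d + k]);
        }
        if (cost < bestCost) {
            bestCost = cost;
            best = d;
        }
    }
    return best;
}
```

If the left row is the right row shifted by 3 pixels, the function returns 3 for a pixel inside the textured region. On flat, textureless regions every shift costs about the same, which is one reason the disparity maps in this post look blurry.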

BM

Code:

#include (origin) << endl;

point3 = xyz.at<Vec3f>(origin);

point3[0];

//cout << "point3[0]:" << point3[0] << "point3[1]:" << point3[1] << "point3[2]:" << point3[2]<

cout << "World coordinates:" << endl;

cout << "x: " << point3[0] << " y: " << point3[1] << " z: " << point3[2] << endl;

d = point3[0] * point3[0]+ point3[1] * point3[1]+ point3[2] * point3[2];

d = sqrt(d); //mm

// cout << "Distance: " << d << " mm" << endl;

d = d / 10.0; //cm

cout << "Distance: " << d << " cm" << endl;

// d = d/1000.0; //m

// cout << "Distance: " << d << " m" << endl;

break;

case EVENT_LBUTTONUP: // left mouse button released

selectObject = false;

if (selection.width > 0 && selection.height > 0)

break;

}

}

/***** main function *****/

int main()

{

FileStorage fs("/home/cxm-irene/文档/Two-eye/Check/build/intrinsics.yml", FileStorage::READ);

if (fs.isOpened())

{

cout << "read";

fs["M1"] >> cameraMatrixL;fs["D1"] >> distCoeffL;

fs["M2"] >> cameraMatrixR;fs["D2"] >> distCoeffR;

fs["R"] >> R;

fs["T"] >> T;

fs["Q"] >> Q;

cout << "M1-D1" << cameraMatrixL << endl << distCoeffL << endl;

cout << "M2-D2" << cameraMatrixR << endl << distCoeffR << endl;

cout << "R" << R <<endl;

cout << "T" << T <<endl;

cout << "Q" << Q <<endl;

fs.release();

}

/*

Stereo rectification

*/

// Rodrigues(rec, R); // Rodrigues transform (rotation vector -> rotation matrix)

stereoRectify(cameraMatrixL, distCoeffL, cameraMatrixR, distCoeffR, imageSize, R, T, Rl, Rr, Pl, Pr, Q, CALIB_ZERO_DISPARITY,

0, imageSize, &validROIL, &validROIR);

initUndistortRectifyMap(cameraMatrixL, distCoeffL, Rl, Pl, imageSize, CV_32FC1, mapLx, mapLy);

initUndistortRectifyMap(cameraMatrixR, distCoeffR, Rr, Pr, imageSize, CV_32FC1, mapRx, mapRy);

/*

Read the images

*/

rgbImageL = imread("/home/cxm-irene/文档/Two-eye/Image-Collect/Picture/left_0.jpg", CV_LOAD_IMAGE_COLOR);

cvtColor(rgbImageL, grayImageL, CV_BGR2GRAY);

rgbImageR = imread("/home/cxm-irene/文档/Two-eye/Image-Collect/Picture/right_0.jpg", CV_LOAD_IMAGE_COLOR);

cvtColor(rgbImageR, grayImageR, CV_BGR2GRAY);

imshow("ImageL Before Rectify", grayImageL);

imshow("ImageR Before Rectify", grayImageR);

/*

After remap, the left and right images are coplanar and row-aligned

*/

remap(grayImageL, rectifyImageL, mapLx, mapLy, INTER_LINEAR);

remap(grayImageR, rectifyImageR, mapRx, mapRy, INTER_LINEAR);

/*

Display the rectification results

*/

Mat rgbRectifyImageL, rgbRectifyImageR;

cvtColor(rectifyImageL, rgbRectifyImageL, CV_GRAY2BGR); // 3-channel (pseudo-color) copy so colored overlays can be drawn

cvtColor(rectifyImageR, rgbRectifyImageR, CV_GRAY2BGR);

// Show each image separately

//rectangle(rgbRectifyImageL, validROIL, Scalar(0, 0, 255), 3, 8);

//rectangle(rgbRectifyImageR, validROIR, Scalar(0, 0, 255), 3, 8);

imshow("ImageL After Rectify", rgbRectifyImageL);

imshow("ImageR After Rectify", rgbRectifyImageR);

// Show both images on one canvas

Mat canvas;

double sf;

int w, h;

sf = 600. / MAX(imageSize.width, imageSize.height);

w = cvRound(imageSize.width * sf);

h = cvRound(imageSize.height * sf);

canvas.create(h, w * 2, CV_8UC3); // note: 3 channels

// Draw the left image onto the canvas

Mat canvasPart = canvas(Rect(w * 0, 0, w, h)); // get one part of the canvas

resize(rgbRectifyImageL, canvasPart, canvasPart.size(), 0, 0, INTER_AREA); // scale the image to the size of canvasPart

Rect vroiL(cvRound(validROIL.x*sf), cvRound(validROIL.y*sf), // valid (cropped) region, scaled to the canvas

cvRound(validROIL.width*sf), cvRound(validROIL.height*sf));

//rectangle(canvasPart, vroiL, Scalar(0, 0, 255), 3, 8); // draw a rectangle

cout << "Painted ImageL" << endl;

// Draw the right image onto the canvas

canvasPart = canvas(Rect(w, 0, w, h)); // get the other part of the canvas

resize(rgbRectifyImageR, canvasPart, canvasPart.size(), 0, 0, INTER_LINEAR);

Rect vroiR(cvRound(validROIR.x * sf), cvRound(validROIR.y*sf),

cvRound(validROIR.width * sf), cvRound(validROIR.height * sf));

//rectangle(canvasPart, vroiR, Scalar(0, 0, 255), 3, 8);

cout << "Painted ImageR" << endl;

// Draw horizontal lines to check that corresponding points share a row

for (int i = 0; i < canvas.rows; i += 16)

line(canvas, Point(0, i), Point(canvas.cols, i), Scalar(0, 255, 0), 1, 8);

imshow("rectified", canvas);

/*

Stereo matching

*/

namedWindow("disparity", CV_WINDOW_AUTOSIZE);

// Trackbar for the SAD window (block) size

createTrackbar("BlockSize:\n", "disparity", &blockSize, 16, stereo_match);

// Trackbar for the disparity uniqueness ratio (percent)

createTrackbar("UniquenessRatio:\n", "disparity", &uniquenessRatio, 50, stereo_match);

// Trackbar for the number of disparities

createTrackbar("NumDisparities:\n", "disparity", &numDisparities, 16, stereo_match);

// Mouse callback: setMouseCallback(window name, callback function, user data passed to the callback, usually 0)

setMouseCallback("disparity", onMouse, 0);

stereo_match(0, 0);

waitKey(0);

return 0;

}

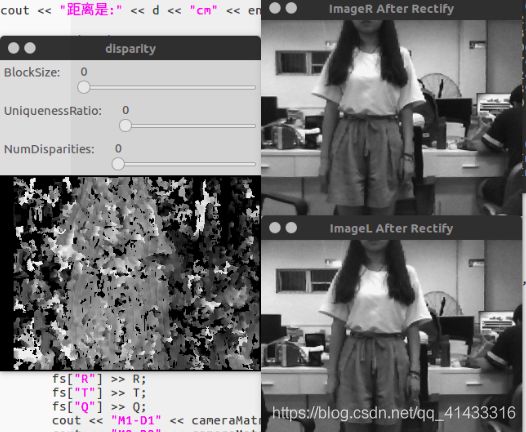

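Here are the results. First, this is what it looks like with BlockSize, UniquenessRatio and NumDisparities all at 0:

Since the objects I want to recognize are people, I simply stood in front of the camera for the test. The result is very blurry, but you can roughly make out a person. After tuning the parameters I got a result that looks reasonably good: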
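The `stereo_match` callback itself does not appear in the listing above. `StereoBM` rejects an even block size or one below 5, and a number of disparities that is not a positive multiple of 16. Trackbars that start at 0 therefore presumably get mapped to valid values before reaching the matcher. The mapping below is a hypothetical sketch of one common choice, not the author's code; `BmParams` and `fromTrackbars` are made-up names.

```cpp
#include <cassert>

// Values that would be passed to StereoBM (hypothetical container).
struct BmParams {
    int blockSize;
    int numDisparities;
    int uniquenessRatio;
};

// Hypothetical mapping from raw trackbar positions (which can be 0) to values
// that satisfy StereoBM's constraints.
BmParams fromTrackbars(int blockSizePos, int numDisparitiesPos, int uniquenessPos) {
    BmParams p;
    p.blockSize = 2 * blockSizePos + 5;               // 5, 7, 9, ...: always odd, >= 5
    p.numDisparities = 16 * (numDisparitiesPos + 1);  // 16, 32, 48, ...: multiple of 16
    p.uniquenessRatio = uniquenessPos;                // percent margin, passed through
    return p;
}
```

With this kind of mapping, "all 0" on the trackbars means a 5 × 5 window and 16 disparities, which explains why that first screenshot still shows a (noisy) disparity map.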

Click any point where the person is standing and the terminal prints the distance, which is the straight-line distance from the camera:
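The clicked pixel's 3D coordinates are read from `xyz`, which was presumably filled by `reprojectImageTo3D` using Q. That call sits in the part of the listing that is not shown. Per pixel, the computation multiplies [x, y, d, 1] by the 4×4 matrix Q from `stereoRectify` and divides by the fourth component. The printed distance is the Euclidean norm of the result. For a horizontal rig rectified with `CALIB_ZERO_DISPARITY`, Q has the form [[1,0,0,-cx],[0,1,0,-cy],[0,0,0,f],[0,0,-1/Tx,0]], where Tx is typically negative. Z therefore works out to f·B/d with B = -Tx. Below is a plain C++ sketch; `reproject` and `distanceTo` are hypothetical names.

```cpp
#include <array>
#include <cassert>
#include <cmath>

using Mat4 = std::array<std::array<double, 4>, 4>;

// [X Y Z W]^T = Q * [x y d 1]^T, returned as (X/W, Y/W, Z/W).
std::array<double, 3> reproject(const Mat4& Q, double x, double y, double d) {
    const std::array<double, 4> in = {x, y, d, 1.0};
    std::array<double, 4> out = {0.0, 0.0, 0.0, 0.0};
    for (int r = 0; r < 4; ++r) {
        for (int c = 0; c < 4; ++c) {
            out[r] += Q[r][c] * in[c];
        }
    }
    assert(out[3] != 0.0);  // W == 0 means zero disparity (point at infinity)
    return {out[0] / out[3], out[1] / out[3], out[2] / out[3]};
}

// Straight-line distance from the rectified left camera center, in the units of T (mm here).
double distanceTo(const std::array<double, 3>& p) {
    return std::sqrt(p[0] * p[0] + p[1] * p[1] + p[2] * p[2]);
}
```

Take made-up values f = 500 px, cx = 320, cy = 240 and B = 60 mm. The pixel (370, 265) at disparity 10 then maps to (300, 150, 3000) mm.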


SGBM

Code:

#if 1

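Here are the result images as well; the parameters are pretty much the same:

Likewise, clicking a point prints the distance:

Unlike the BM version, though, this prints the x, y, z values, in mm.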
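Two details help when reading these numbers.

First, `StereoBM::compute()` and `StereoSGBM::compute()` return a 16-bit fixed-point disparity map in which each value is the disparity multiplied by 16. It has to be divided by 16 before being used as a pixel disparity; whether the listings here do that is not visible.

Second, the mm unit comes from `chessboardSquareSize`. The z component alone is the depth along the optical axis, whereas the BM version prints the straight-line distance.

Below is a minimal sketch of the conversion and of the standard relation Z = fB/d. `toPixels` and `depthFromDisparity` are hypothetical names.

```cpp
#include <cassert>
#include <cmath>
#include <cstdint>

// compute() output (CV_16S) stores disparity * 16 (4 fractional bits).
double toPixels(std::int16_t fixedPointDisparity) {
    return fixedPointDisparity / 16.0;
}

// Depth on a rectified pair: Z = f * B / d.
// f: focal length in pixels, baselineMm: baseline in mm, d: disparity in pixels.
// Returns -1 for non-positive disparities (no valid match).
double depthFromDisparity(double f, double baselineMm, double d) {
    if (d <= 0.0) return -1.0;
    return f * baselineMm / d;
}
```

Take a raw value of 160, which is a disparity of 10 px. With illustrative values f = 500 px and B = 60 mm, that gives Z = 3000 mm.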

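The distances measured by BM and SGBM are about the same; the trouble is that I forgot exactly where I had been standing…

All in all, calibration matters a lot: the parameters it produces directly determine how good the distances computed from the disparity map will be. When I have time I'll write up the theory behind computing distance from disparity and behind stereo matching.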
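A short worked equation shows why calibration quality matters so much. On a rectified pair, depth follows Z = fB/d, where f is the focal length in pixels, B the baseline and d the disparity. Differentiating shows how errors propagate:

$$
Z = \frac{fB}{d}, \qquad
\frac{\partial Z}{\partial d} = -\frac{fB}{d^{2}} = -\frac{Z^{2}}{fB}, \qquad
\frac{\Delta Z}{Z} \approx \frac{\Delta f}{f} + \frac{\Delta B}{B} - \frac{\Delta d}{d}
$$

Take illustrative values f = 500 px and B = 60 mm (not measured from this camera). A person at Z = 3000 mm then has d = 10 px. A one-pixel disparity error already shifts the depth by about Z²/(fB) = 300 mm. Any relative error in f or B from calibration scales every distance by the same relative amount.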