A Worked Example of Computing the mAP Metric on a VOC-Format Dataset

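- 1. Display the model's pred boxes and the gt boxes on the images
- 2. Prepare the example data for the mAP calculation
- 3. Obtain the intermediate results needed for mAP
- 4. Compute the AP of each class
- 5. Compute mAP
- References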

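mAP¹ is a key evaluation metric for object detection, so it is worth understanding exactly how it is computed.

This post walks through the calculation step by step on a concrete example.

As before, it uses a VOC-format safety-helmet dataset (with only two classes, hat and person) and takes the output of a trained eriklindernoren YOLOv3 model as the example.

Three images are used to illustrate the process.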

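1. Display the model's pred boxes and the gt boxes on the images

The figures use box color and text labels to distinguish pred boxes from gt boxes and to mark the class:

- a red box is a gt box;

- a blue box is a pred box;

- the text p marks the class person;

- the text h marks the class hat.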

| Image | person (pred) | person (gt) | hat (pred) | hat (gt) |
|---|---|---|---|---|
| Image 1 | 3 | 3 | 1 | 1 |
| Image 2 | 0 | 0 | 6 | 5 |
| Image 3 | 4 | 5 | 1 | 1 |
| Total | 7 | 8 | 8 | 7 |

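The model's output is quite good: the pred boxes match the gt boxes well. Some cases still show extra or missed detections, such as the extra hat in image 2 and the missed person in image 3.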

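2. Prepare the example data for the mAP calculation

The mAP calculation starts from two pieces of data:

- the model output after NMS, in the format:

x1,y1,x2,y2,object_conf,class_conf,class_pred

The pred box data for the three images above are: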

[tensor([[230.1156, 74.9446, 298.6934, 156.2077, 0.9975, 0.9992, 1.0000],

[ 39.8082, 129.0310, 95.2867, 195.0942, 0.9937, 0.9997, 1.0000],

[159.5252, 100.5233, 220.2535, 171.9712, 0.9359, 0.9885, 0.0000],

[339.1216, 60.4164, 412.5896, 124.4851, 0.8818, 0.9762, 1.0000]]),

tensor([[146.8568, 124.1864, 218.3401, 203.3283, 0.9978, 1.0000, 0.0000],

[290.4929, 93.0175, 370.5332, 185.8229, 0.9970, 1.0000, 0.0000],

[357.9865, 115.9314, 405.5560, 177.8217, 0.9940, 0.9998, 0.0000],

[ 64.7163, 126.6348, 116.8986, 188.5721, 0.9917, 0.9996, 0.0000],

[265.2778, 129.1731, 301.0858, 175.3111, 0.9735, 0.9996, 0.0000],

[ -2.2735, 103.3965, 65.6311, 226.4554, 0.9633, 0.9999, 0.0000]]),

tensor([[149.8786, 78.6017, 190.1439, 125.4006, 0.9993, 0.9996, 1.0000],

[236.5606, 82.6184, 279.0927, 132.0075, 0.9949, 0.9999, 1.0000],

[ 75.3668, 99.0641, 127.2310, 165.2129, 0.9885, 0.9999, 1.0000],

[145.5203, 197.4038, 293.8440, 395.9724, 0.9708, 0.9998, 0.0000],

[263.8979, 112.8633, 314.5363, 163.6216, 0.9637, 0.9999, 1.0000]])]

- the gt box data, in the format:

img_index,class,x1,y1,x2,y2

The gt box data for the three images are:

tensor([[ 0.0000, 0.0000, 158.0800, 100.4741, 220.8128, 174.7062],

[ 0.0000, 1.0000, 36.4832, 129.9303, 92.8096, 190.0866],

[ 0.0000, 1.0000, 235.5184, 77.4187, 296.9616, 153.5924],

[ 0.0000, 1.0000, 339.8512, 56.9571, 416.0000, 126.0625],

[ 1.0000, 0.0000, 149.7808, 127.5761, 216.8816, 201.3523],

[ 1.0000, 0.0000, 294.5280, 95.9670, 371.0720, 186.9230],

[ 1.0000, 0.0000, 361.6496, 122.5838, 407.1184, 175.8435],

[ 1.0000, 0.0000, 265.1168, 128.1366, 303.3888, 178.0594],

[ 1.0000, 0.0000, 0.5616, 100.9593, 54.9328, 226.8742],

[ 2.0000, 0.0000, 151.8412, 199.6592, 290.5208, 406.2864],

[ 2.0000, 1.0000, 75.5854, 110.9264, 121.3416, 167.7936],

[ 2.0000, 1.0000, 81.8236, 92.8928, 125.5051, 136.5728],

[ 2.0000, 1.0000, 153.2383, 79.7264, 188.5928, 126.8592],

[ 2.0000, 1.0000, 239.8957, 81.8064, 274.5587, 132.4336],

[ 2.0000, 1.0000, 271.8062, 112.3200, 309.9272, 160.1600]])

Note: x1, y1, x2, y2 in the data above are all absolute coordinates relative to the original image.
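As a quick sanity check on the gt format (img_index, class, x1, y1, x2, y2), the sketch below groups the gt rows by image and counts the objects per class. It is only an illustration: `GT_ROWS`, `CLASS_NAMES`, and `gt_counts_per_image` are hypothetical names, and only the first two columns of the 15 gt rows listed above are copied in.

```python
from collections import Counter

# (img_index, class) columns of the 15 gt rows listed above; box coordinates omitted.
GT_ROWS = [
    (0, 0), (0, 1), (0, 1), (0, 1),
    (1, 0), (1, 0), (1, 0), (1, 0), (1, 0),
    (2, 0), (2, 1), (2, 1), (2, 1), (2, 1), (2, 1),
]
# Class ids as used in this post: 0 is hat, 1 is person.
CLASS_NAMES = {0: "hat", 1: "person"}


def gt_counts_per_image(rows):
    """Return {img_index: Counter({class_name: count})} for the gt rows."""
    counts = {}
    for img_index, cls in rows:
        counts.setdefault(img_index, Counter())[CLASS_NAMES[cls]] += 1
    return counts


counts = gt_counts_per_image(GT_ROWS)
for img_index in sorted(counts):
    print(img_index, dict(counts[img_index]))
```

The per-image counts agree with the gt columns of the table in section 1.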

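3. Obtain the intermediate results needed for mAP

This part corresponds to the get_batch_statistics function in eriklindernoren's YOLOv3.

Computing mAP requires the following four pieces of data: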

- true_positives

- pred_scores

- pred_labels

- labels

    import numpy as np

    # outputs, targets, cfg and bbox_iou come from the surrounding evaluation code
    batch_metrics = []
    for sample_i in range(len(outputs)):  # sample_i: index of the image within the batch
        if outputs[sample_i] is None:
            continue

        output = outputs[sample_i]
        pred_boxes = output[:, :4]
        pred_scores = output[:, 4]
        pred_labels = output[:, -1]

        true_positives = np.zeros(pred_boxes.shape[0])

        # gt rows of this image, without the img_index column
        annotations = targets[targets[:, 0] == sample_i][:, 1:]
        target_labels = annotations[:, 0] if len(annotations) else []
        if len(annotations):
            detected_boxes = []
            target_boxes = annotations[:, 1:]

            for pred_i, (pred_box, pred_label) in enumerate(zip(pred_boxes, pred_labels)):
                # If targets are found break
                if len(detected_boxes) == len(annotations):
                    break

                # Ignore if label is not one of the target labels
                if pred_label not in target_labels:
                    continue

                # Best-matching gt box for this prediction
                iou, box_index = bbox_iou(
                    pred_box.unsqueeze(0), target_boxes).max(0)
                if iou >= cfg.iou_thres and box_index not in detected_boxes:
                    true_positives[pred_i] = 1
                    detected_boxes += [box_index]
        batch_metrics.append([true_positives, pred_scores, pred_labels])

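The three example images are processed as a single batch.

The bbox_iou function computes the IoU between one pred_box and every gt box; taking .max(0) of its result then selects the gt box with the largest IoU for that pred_box.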
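To make the matching step concrete, here is a minimal pure-Python sketch of IoU plus greedy matching, run on the image 2 data from section 2. It is not the repository's bbox_iou: `box_iou` and `match_predictions` are hypothetical helpers, the IoU threshold of 0.5 is an assumption (the value of cfg.iou_thres is not given in this post), and the class check is omitted because every box in image 2 is a hat.

```python
def box_iou(a, b):
    """IoU of two boxes given as (x1, y1, x2, y2) in absolute pixels."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def match_predictions(pred_boxes, gt_boxes, iou_thres=0.5):
    """Flag each prediction as TP (1) or FP (0), processing them in the given order."""
    true_positives = [0] * len(pred_boxes)
    detected = []  # indices of gt boxes that have already been claimed
    for pred_i, pred in enumerate(pred_boxes):
        if len(detected) == len(gt_boxes):
            break  # every gt box is matched; remaining predictions stay FP
        ious = [box_iou(pred, gt) for gt in gt_boxes]
        best = max(range(len(ious)), key=ious.__getitem__)
        if ious[best] >= iou_thres and best not in detected:
            true_positives[pred_i] = 1
            detected.append(best)
    return true_positives


# Image 2: pred boxes (already ordered by confidence) and gt boxes from section 2.
PRED_IMG2 = [
    (146.8568, 124.1864, 218.3401, 203.3283),
    (290.4929, 93.0175, 370.5332, 185.8229),
    (357.9865, 115.9314, 405.5560, 177.8217),
    (64.7163, 126.6348, 116.8986, 188.5721),
    (265.2778, 129.1731, 301.0858, 175.3111),
    (-2.2735, 103.3965, 65.6311, 226.4554),
]
GT_IMG2 = [
    (149.7808, 127.5761, 216.8816, 201.3523),
    (294.5280, 95.9670, 371.0720, 186.9230),
    (361.6496, 122.5838, 407.1184, 175.8435),
    (265.1168, 128.1366, 303.3888, 178.0594),
    (0.5616, 100.9593, 54.9328, 226.8742),
]

tp_img2 = match_predictions(PRED_IMG2, GT_IMG2)
print(tp_img2)
```

The fourth prediction overlaps no gt box at all, so it is the only one left unflagged.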

The resulting batch_metrics is:

[[array([1., 1., 1., 1.]),

tensor([0.9975, 0.9937, 0.9359, 0.8818]),

tensor([1., 1., 0., 1.])],

[array([1., 1., 1., 0., 1., 1.]),

tensor([0.9978, 0.9970, 0.9940, 0.9917, 0.9735, 0.9633]),

tensor([0., 0., 0., 0., 0., 0.])],

[array([1., 1., 1., 1., 1.]),

tensor([0.9993, 0.9949, 0.9885, 0.9708, 0.9637]),

tensor([1., 1., 1., 0., 1.])]]

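Here:

Image 1

true_positives is [1., 1., 1., 1.], i.e. all four pred boxes are TPs;

the predicted classes are [1., 1., 0., 1.], where 0 is hat and 1 is person.

Image 2

true_positives is [1., 1., 1., 0., 1., 1.], i.e. one of the six pred boxes is an FP;

the predicted classes are [0., 0., 0., 0., 0., 0.], i.e. all hat.

Image 3

true_positives is [1., 1., 1., 1., 1.], i.e. all five pred boxes are TPs;

the predicted classes are [1., 1., 1., 0., 1.].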
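Before AP can be computed, the per-image results have to be merged across the batch and ordered by confidence. The sketch below does this in plain Python on the batch_metrics values shown above; `BATCH_METRICS` and `tp_sequence_per_class` are hypothetical names used only for illustration, not part of the repository.

```python
# Per-image (true_positives, pred_scores, pred_labels), copied from batch_metrics above.
BATCH_METRICS = [
    ([1, 1, 1, 1],
     [0.9975, 0.9937, 0.9359, 0.8818],
     [1, 1, 0, 1]),
    ([1, 1, 1, 0, 1, 1],
     [0.9978, 0.9970, 0.9940, 0.9917, 0.9735, 0.9633],
     [0, 0, 0, 0, 0, 0]),
    ([1, 1, 1, 1, 1],
     [0.9993, 0.9949, 0.9885, 0.9708, 0.9637],
     [1, 1, 1, 0, 1]),
]


def tp_sequence_per_class(batch_metrics):
    """Merge all images, sort by descending score, and split the TP flags by class."""
    merged = []
    for tps, scores, labels in batch_metrics:
        merged.extend(zip(scores, tps, labels))
    merged.sort(key=lambda row: row[0], reverse=True)

    per_class = {}
    for _score, tp, label in merged:
        per_class.setdefault(label, []).append(tp)
    return per_class


sequences = tp_sequence_per_class(BATCH_METRICS)
print("hat   :", sequences[0])
print("person:", sequences[1])
```

For hat (class 0) the single FP lands in fourth position; for person (class 1) all seven predictions are TPs.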

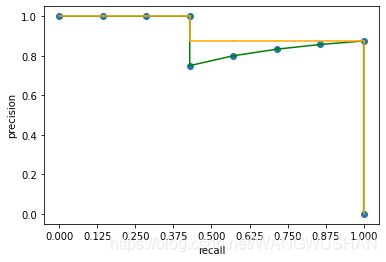

4. Compute the AP of each class

This step corresponds to the ap_per_class and compute_ap functions in eriklindernoren's YOLOv3.

    import numpy as np
    from tqdm import tqdm

    # true_positives, pred_scores, pred_labels: batch_metrics concatenated over all images
    # labels: class ids of all gt boxes; compute_ap comes from the same repository
    tp, conf, pred_cls, target_cls = true_positives, pred_scores, pred_labels, labels

    # Sort by objectness
    i = np.argsort(-conf)
    tp, conf, pred_cls = tp[i], conf[i], pred_cls[i]

    # Find unique classes
    unique_classes = np.unique(target_cls)

    # Create Precision-Recall curve and compute AP for each class
    ap, p, r = [], [], []
    for c in tqdm(unique_classes, desc="Computing AP"):
        i = pred_cls == c
        n_gt = (target_cls == c).sum()  # Number of ground truth objects
        n_p = i.sum()  # Number of predicted objects

        if n_p == 0 and n_gt == 0:
            continue
        elif n_p == 0 or n_gt == 0:
            ap.append(0)
            r.append(0)
            p.append(0)
        else:
            # Accumulate FPs and TPs
            fpc = (1 - tp[i]).cumsum()
            tpc = (tp[i]).cumsum()

            # Recall
            recall_curve = tpc / (n_gt + 1e-16)
            r.append(recall_curve[-1])

            # Precision
            precision_curve = tpc / (tpc + fpc)
            p.append(precision_curve[-1])

            # AP from recall-precision curve
            ap.append(compute_ap(recall_curve, precision_curve))

The statistics of the two classes are computed in turn, and from them the AP of each class.

The VOC2007 11-point interpolation is not used here; instead, all points are interpolated (see reference 1).

For the hat class (7 gt boxes, 8 predictions of which one is an FP), the AP is:

$$
\begin{aligned}
AP &= 0.14285714*1.0 + 0.14285715*1.0 + 0.14285714*1.0 + 0.14285714*0.875 \\
&\quad + 0.14285714*0.875 + 0.14285715*0.875 + 0.14285714*0.875 \\
&= 0.92857142875
\end{aligned}
$$

For the person class (8 gt boxes, 7 predictions, all TPs; the one missed gt box contributes the final term with precision 0), the AP is:

$$
\begin{aligned}
AP &= 0.125*1.0 + 0.125*1.0 + 0.125*1.0 + 0.125*1.0 \\
&\quad + 0.125*1.0 + 0.125*1.0 + 0.125*1.0 + 0.125*0 \\
&= 0.875
\end{aligned}
$$
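The two AP values above can be reproduced with a short sketch of all-point interpolation. This is a plain-Python rendering of the idea rather than the repository's compute_ap: `average_precision` is a hypothetical wrapper, and the TP sequences are the confidence-sorted flags derived from batch_metrics in section 3 (hat: one FP in fourth position; person: seven TPs).

```python
def compute_ap(recall, precision):
    """Area under the precision-recall curve using all-point interpolation."""
    # Sentinel values: recall runs from 0 to 1, precision drops to 0 at the end.
    mrec = [0.0] + list(recall) + [1.0]
    mpre = [0.0] + list(precision) + [0.0]

    # Precision envelope: each value becomes the max of everything to its right.
    for i in range(len(mpre) - 2, -1, -1):
        mpre[i] = max(mpre[i], mpre[i + 1])

    # Sum (delta recall) * precision wherever recall changes.
    ap = 0.0
    for i in range(1, len(mrec)):
        if mrec[i] != mrec[i - 1]:
            ap += (mrec[i] - mrec[i - 1]) * mpre[i]
    return ap


def average_precision(tp_flags, n_gt):
    """Build the recall and precision curves from sorted TP flags, then integrate."""
    tp_cum = 0
    recall, precision = [], []
    for rank, tp in enumerate(tp_flags, start=1):
        tp_cum += tp
        recall.append(tp_cum / n_gt)
        precision.append(tp_cum / rank)
    return compute_ap(recall, precision)


hat_ap = average_precision([1, 1, 1, 0, 1, 1, 1, 1], n_gt=7)
person_ap = average_precision([1, 1, 1, 1, 1, 1, 1], n_gt=8)
print(round(hat_ap, 6), round(person_ap, 6))
```

The trailing sentinel with precision 0 is what makes the unmatched person gt box cost 0.125 of AP even though every person prediction is correct.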

5. Compute mAP

This step is very simple: mAP is the mean of the per-class AP values.

$$
mAP = (0.92857 + 0.875) / 2 = 0.901785
$$
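The same averaging, written as a tiny sketch for an arbitrary number of classes; `mean_average_precision` is a hypothetical helper, and the AP values are the rounded ones used in the formula above.

```python
def mean_average_precision(ap_per_class):
    """Unweighted mean of the per-class AP values."""
    return sum(ap_per_class.values()) / len(ap_per_class)


m_ap = mean_average_precision({"hat": 0.92857, "person": 0.875})
print(round(m_ap, 6))
```

Every class carries the same weight, regardless of how many gt boxes it has.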

The complete example code is available on my GitHub: https://github.com/cv-ape/a-simple-example-for-VOC-mAP.

References

- https://github.com/rafaelpadilla/Object-Detection-Metrics

- Evaluation metrics for object detection and segmentation: mAP

- https://github.com/cv-ape/a-simple-example-for-VOC-mAP

- https://github.com/eriklindernoren/PyTorch-YOLOv3/blob/master/pytorchyolo/utils/utils.py

¹ mAP: mean Average Precision