深度学习之基于卷积神经网络实现服装图像识别

本博客与手写数字识别大同小异。

1.导入所需库

import tensorflow as tf

from tensorflow.keras import datasets, layers, models

from tensorflow.keras.models import Sequential

from tensorflow.keras.layers import Dense, Conv2D, Flatten, Dropout, MaxPooling2D

from tensorflow.keras.preprocessing.image import ImageDataGenerator

import os

import numpy as np

import matplotlib.pyplot as plt

2.数据准备

本阶段需要做的工作:

①下载好我们所需要的服装图像库。

②将图片标准化。

③调整图像的大小。

(类比与手写数字识别的数据处理阶段)

训练集和测试集分别为60000和10000

def DataPre():

# 导入数据

(train_x, train_y), (test_x, test_y) = datasets.fashion_mnist.load_data()

# 标准化

train_x, test_x = train_x / 255.0, test_x / 255.0

# 调整数据???

train_x = train_x.reshape((60000, 28, 28, 1))

test_x = test_x.reshape((10000, 28, 28, 1))

return train_x,train_y,test_x,test_y

3.搭建网络

网络结构为:3层卷积池化层+Flatten+两层全连接层。

(可以尝试一下更深的网络结构,硬件条件允许的情况下

def ModelBuild():

# 搭建模型

model = models.Sequential([

layers.Conv2D(32, (3, 3), activation='relu', input_shape=(28, 28, 1)),

layers.MaxPooling2D((2, 2)),

layers.Conv2D(64, (3, 3), activation='relu'),

layers.MaxPooling2D((2, 2)),

layers.Conv2D(64, (3, 3), activation='relu'),

layers.Flatten(),

layers.Dense(64, activation=tf.nn.softmax),

layers.Dense(10)

])

model.summary() # 打印网络结构

model.compile(

optimizer = 'adam',

loss = tf.keras.losses.SparseCategoricalCrossentropy(from_logits=True),

metrics = ['accuracy']

)

return model

网络模型为:

Model: "sequential"

_________________________________________________________________

Layer (type) Output Shape Param #

=================================================================

conv2d (Conv2D) (None, 26, 26, 32) 320

_________________________________________________________________

max_pooling2d (MaxPooling2D) (None, 13, 13, 32) 0

_________________________________________________________________

conv2d_1 (Conv2D) (None, 11, 11, 64) 18496

_________________________________________________________________

max_pooling2d_1 (MaxPooling2 (None, 5, 5, 64) 0

_________________________________________________________________

conv2d_2 (Conv2D) (None, 3, 3, 64) 36928

_________________________________________________________________

flatten (Flatten) (None, 576) 0

_________________________________________________________________

dense (Dense) (None, 0) 0

_________________________________________________________________

dense_1 (Dense) (None, 64) 64

_________________________________________________________________

dense_2 (Dense) (None, 10) 650

=================================================================

Total params: 56,458

Trainable params: 56,458

Non-trainable params: 0

_________________________________________________________________

Train on 60000 samples, validate on 10000 samples

4.模型训练

由于硬件原因,epochs设置的是10,经过实验证明,epochs在50的时候,效果是明显好于10的情况。

def Modeltrain(model,train_x,train_y,test_x,test_y):

# 训练模型

history = model.fit(train_x, train_y, epochs=10,validation_data=(test_x, test_y))

return history

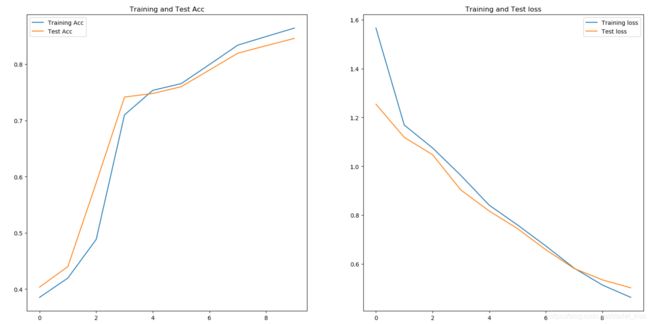

5.结果可视化

accuracy = history.history["accuracy"]

test_accuracy = history.history["val_accuracy"]

loss = history.history["loss"]

test_loss = history.history["val_loss"]

epochs_range = range(10)

plt.figure(figsize=(50, 5))

plt.subplot(1, 2, 1)

plt.plot(epochs_range, accuracy, label="Training Acc")

plt.plot(epochs_range, test_accuracy, label="Test Acc")

plt.legend()

plt.title("Training and Test Acc")

plt.subplot(1, 2, 2)

plt.plot(epochs_range, loss, label="Training loss")

plt.plot(epochs_range, test_loss, label="Test loss")

plt.legend()

plt.title("Training and Test loss")

plt.show()

6.结果

10000/1 - 1s - loss: 0.2716 - accuracy: 0.8840

并没有出现过拟合的情况,但是准确率并不是特别高,在增加epochs的情况下是可以提高准确率的。但是训练速度会明显变慢,而且提升效果并不大。可以尝试一下利用迁移学习,利用别人搭建好的网络,准确率可能会上升。

努力加油a啊