Displaying SiftGPU Feature Points and Feature Matches with OpenCV

Displaying the feature points and feature matches extracted by SiftGPU with OpenCV involves the following main steps:

- Extract feature points with SiftGPU

- Read the images with OpenCV

- Convert the SiftGPU feature point type to the OpenCV feature point type KeyPoint

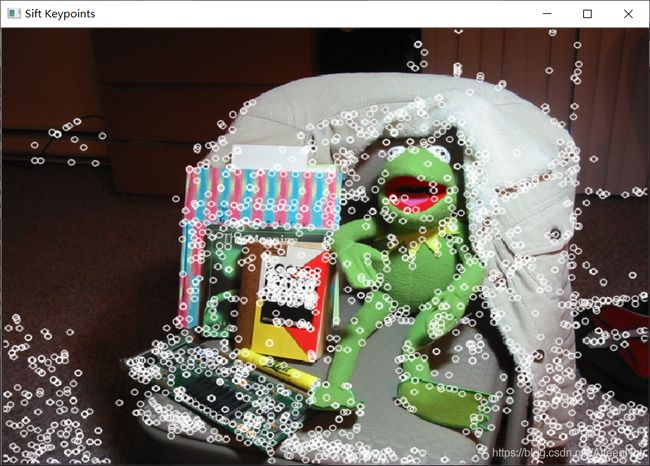

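- Display the feature points with OpenCV

- Convert the SiftGPU descriptor type to the OpenCV descriptor type

- Match features with SiftGPU

- Convert the SiftGPU matches to the OpenCV match type DMatch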
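The keypoint conversion step in the list above could look roughly like the following sketch. To keep it compilable without either library, `SiftKeypointLike` and `KeyPointLike` are hypothetical stand-ins for `SiftGPU::SiftKeypoint` (fields `x`, `y`, `s`, `o`) and for the `cv::KeyPoint` fields used here. Mapping the SiftGPU scale directly to the OpenCV `size`, and treating the SiftGPU orientation as radians that need converting to degrees, are both assumptions and not something the article states.

```cpp
#include <cmath>
#include <vector>

// Hypothetical stand-in for SiftGPU::SiftKeypoint: position, scale, orientation.
struct SiftKeypointLike { float x, y, s, o; };

// Hypothetical stand-in for the cv::KeyPoint fields filled in by the conversion.
struct KeyPointLike { float x, y, size, angle; };

// Convert SiftGPU-style keypoints to OpenCV-style keypoints.
// Assumption: orientation is in radians; the output angle is in degrees in [0, 360).
std::vector<KeyPointLike> toKeyPoints(const std::vector<SiftKeypointLike>& in) {
    const float kRadToDeg = 180.0f / 3.14159265358979f;
    std::vector<KeyPointLike> out;
    out.reserve(in.size());
    for (const SiftKeypointLike& k : in) {
        float deg = std::fmod(k.o * kRadToDeg, 360.0f);
        if (deg < 0.0f) deg += 360.0f;     // wrap negative angles into [0, 360)
        if (deg >= 360.0f) deg = 0.0f;     // guard against rounding up to 360
        out.push_back({k.x, k.y, k.s, deg});
    }
    return out;
}
```

With the real libraries, the loop body would instead construct a `cv::KeyPoint` from the same four values.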

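The code is as follows:

The code reads the paths of the images to process from image.txt; edit that file so that it points to the images you actually want to process.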

#include ...

        //float cmax = _cvDes.at<float>(0, 0);

        //float cmin = _cvDes.at<float>(0, 0);
        //for (int cimm = 0; cimm < _cvDes.rows; ++cimm){
        //    for (int cjmm = 0; cjmm < 128; ++cjmm){
        //        if (cmax < _cvDes.at<float>(cimm, cjmm))
        //            cmax = _cvDes.at<float>(cimm, cjmm);
        //        if (cmin > _cvDes.at<float>(cimm, cjmm))
        //            cmin = _cvDes.at<float>(cimm, cjmm);
        //    }
        //}
        //cout << "OpenCV Descriptors Max= " << cmax << " , Min=" << cmin << endl;
        cvDescriptors.push_back(_cvDes);
    }

    // Matches and match counts for every image pair (i, j)
    map<pair<int, int>, vector<DMatch>> matches_matrix;
    map<pair<int, int>, int> matches_num;

    SiftMatchGPU matcher;
    matcher.VerifyContextGL();

    // Match every image pair (i, j) with i < j
    for (int i = 0; i < imgCount - 1; ++i){
        for (int j = i + 1; j < imgCount; ++j){
            matcher.SetDescriptors(0, num[i], &descriptors[i][0]);
            matcher.SetDescriptors(1, num[j], &descriptors[j][0]);

            // Each match is a pair of indices: (feature in image i, feature in image j)
            int(*match_buf)[2] = new int[num[i]][2];
            int num_match = matcher.GetSiftMatch(num[i], match_buf);
            matches_num[make_pair(i, j)] = num_match;

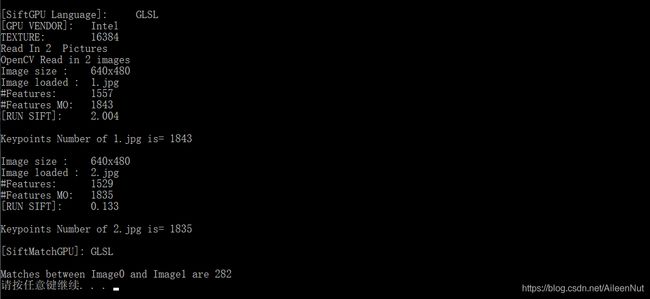

            cout << "Matches between Image" << i << " and Image" << j << " are " << num_match << endl;

            // Build the OpenCV DMatch list, filling in queryIdx and trainIdx
            vector<DMatch> matches_tmp;
            for (int mk = 0; mk < num_match; ++mk){
                DMatch match;
                match.queryIdx = match_buf[mk][0];
                match.trainIdx = match_buf[mk][1];

                Point2f p1, p2;
                p1.x = cvKeypoints[i][match.queryIdx].pt.x;
                p1.y = cvKeypoints[i][match.queryIdx].pt.y;
                p2.x = cvKeypoints[j][match.trainIdx].pt.x;
                p2.y = cvKeypoints[j][match.trainIdx].pt.y;

                // distance is set to the pixel distance between the two keypoints,
                // not to a descriptor distance
                float dist = sqrtf((p1.x - p2.x)*(p1.x - p2.x) + (p1.y - p2.y)*(p1.y - p2.y));
                match.distance = dist;

                //cout << "Image" << i << " & Image" << j << " Match " << mk << ": " << endl;
                //cout << "Idx: queryIdx is= " << match.queryIdx << " , trainIdx is= " << match.trainIdx << endl;
                //cout << " Keypoint1: " << p1.x << " " << p1.y << endl;
                //cout << " Keypoint2: " << p2.x << " " << p2.y << endl;
                //cout << " Distance: " << match.distance << endl;

                matches_tmp.push_back(match);
            }
            matches_matrix[make_pair(i, j)] = matches_tmp;
            delete[] match_buf;

            // Display the matches with OpenCV
            Mat cvImgMatches;
            drawMatches(images[i], cvKeypoints[i], images[j], cvKeypoints[j], matches_tmp, cvImgMatches);

            char showName[100];
            sprintf(showName, "%s%d%s%d", "Matches between ", i, " and ", j);
            namedWindow(showName, WINDOW_NORMAL);
            imshow(showName, cvImgMatches);
            waitKey(0);
        }
    }

    system("pause");
    return 0;
}
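The listing stores the image-plane distance between the two matched keypoints in `match.distance`. If a descriptor distance is wanted instead, one possible variant is sketched below. It assumes each image's descriptors sit in one flat `std::vector<float>` with 128 floats per feature, laid out feature after feature, which is consistent with how `&descriptors[i][0]` is passed to `SetDescriptors` but is not confirmed by the part of the code shown. `descriptorDistance` is a hypothetical helper, not part of SiftGPU or OpenCV.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Number of components in one SIFT descriptor.
const std::size_t kSiftDim = 128;

// Euclidean (L2) distance between descriptor idxA in descA and descriptor idxB in descB.
// Assumption: descriptors are stored back to back, kSiftDim floats per feature.
float descriptorDistance(const std::vector<float>& descA, std::size_t idxA,
                         const std::vector<float>& descB, std::size_t idxB) {
    assert((idxA + 1) * kSiftDim <= descA.size());
    assert((idxB + 1) * kSiftDim <= descB.size());
    const float* a = &descA[idxA * kSiftDim];
    const float* b = &descB[idxB * kSiftDim];
    float sum = 0.0f;
    for (std::size_t k = 0; k < kSiftDim; ++k) {
        const float d = a[k] - b[k];
        sum += d * d;
    }
    return std::sqrt(sum);
}
```

Inside the matching loop this would correspond to something like `match.distance = descriptorDistance(descriptors[i], match.queryIdx, descriptors[j], match.trainIdx);`, under the layout assumption stated above.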