Implementing the Inception Model in PyTorch: Principles and Code

PyTorch Models: Inception

The Inception model

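The torchvision.models package provides commonly used classic network architectures such as alexnet, densenet, inception, resnet, squeezenet, and vgg, together with pretrained models, so both the architecture and the pretrained weights can be loaded with a simple call. Here we walk through an implementation of inception.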

How Inception works

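In general, increasing the depth and width of a network improves its performance, but it also greatly increases the number of parameters. Deeper networks also need more data, otherwise they tend to overfit, and added depth makes vanishing gradients more likely. The Inception network (also known as GoogLeNet) addresses these problems well: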

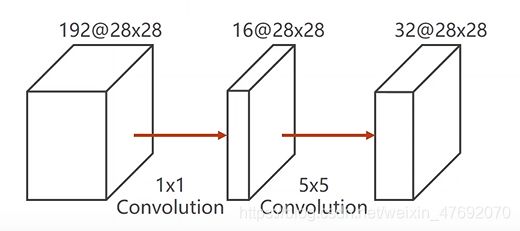

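Let us first look at one computation:

As shown in the figure, the input tensor has 192 channels and a spatial size of 28×28. Applying a 5×5 convolution that produces 32 output channels at the same spatial size takes the following number of floating-point multiplications: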

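5^2 × 28^2 × 192 × 32 = 120,422,400

If a 1×1 convolution first reduces the 192 channels to 16, and the 5×5 convolution is then applied to those 16 channels, the count becomes:

1^2 × 28^2 × 192 × 16 + 5^2 × 28^2 × 16 × 32 = 12,443,648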

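Changing the number of channels with a 1×1 convolution makes the structure look more complex, but it cuts the computation to roughly one tenth.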
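The two counts can be reproduced with a few lines of plain Python. This is only a minimal sketch: the helper name `conv_mults` is made up for illustration, and it counts multiplications for a stride-1 convolution whose padding keeps the 28×28 size, ignoring additions and biases.

```python
def conv_mults(kernel, height, width, in_channels, out_channels):
    """Multiplications for a stride-1, size-preserving 2D convolution."""
    return kernel * kernel * height * width * in_channels * out_channels


# Direct 5x5 convolution: 192 -> 32 channels on a 28x28 feature map.
direct = conv_mults(5, 28, 28, 192, 32)

# 1x1 convolution down to 16 channels, then a 5x5 convolution up to 32 channels.
bottleneck = conv_mults(1, 28, 28, 192, 16) + conv_mults(5, 28, 28, 16, 32)

print(f"direct:     {direct:,}")      # 120,422,400
print(f"bottleneck: {bottleneck:,}")  # 12,443,648
print(f"ratio:      {direct / bottleneck:.2f}")
```

The intermediate width of 16 channels is what drives the saving: the expensive 5×5 kernel only ever sees 16 input channels instead of 192.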

Inception code

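```python
import torch
import torch.nn as nn
import torch.nn.functional as F


class Inception(nn.Module):
    """Inception block with four parallel branches; the output has 88 channels."""

    def __init__(self, in_channels):
        super(Inception, self).__init__()
        # Branch 1: a single 1x1 convolution.
        self.branch1 = nn.Sequential(
            nn.Conv2d(in_channels, 16, kernel_size=1)
        )
        # Branch 2: 1x1 reduction followed by a 5x5 convolution.
        self.branch5 = nn.Sequential(
            nn.Conv2d(in_channels, 16, kernel_size=1),
            nn.Conv2d(16, 24, kernel_size=5, padding=2)
        )
        # Branch 3: 1x1 reduction followed by two 3x3 convolutions.
        self.branch3 = nn.Sequential(
            nn.Conv2d(in_channels, 16, kernel_size=1),
            nn.Conv2d(16, 24, kernel_size=3, padding=1),
            nn.Conv2d(24, 24, kernel_size=3, padding=1)
        )
        # Branch 4: 1x1 convolution, applied after average pooling in forward().
        self.branch_pool = nn.Conv2d(in_channels, 24, kernel_size=1)

    def forward(self, x):
        branch1 = self.branch1(x)
        branch5 = self.branch5(x)
        branch3 = self.branch3(x)
        # stride=1 with padding=1 keeps the spatial size unchanged.
        branch_pool = F.avg_pool2d(x, kernel_size=3, stride=1, padding=1)
        branch_pool = self.branch_pool(branch_pool)
        # Concatenate along the channel dimension: 16 + 24 + 24 + 24 = 88.
        return torch.cat((branch1, branch5, branch3, branch_pool), dim=1)
```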
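Two properties make the final `torch.cat` in the block above work: every branch keeps the spatial size of its input, and the channel counts simply add up. Both can be checked in plain Python with the standard convolution output-size formula. This is a sketch; the helper `conv_out` is a name introduced here for illustration, and the size 12 is just an example input.

```python
def conv_out(size, kernel, stride=1, padding=0):
    """Output length along one spatial axis of a convolution or pooling layer."""
    return (size + 2 * padding - kernel) // stride + 1


# (kernel_size, padding) pairs used by the layers of the Inception block.
layers = {
    "1x1 conv": (1, 0),
    "3x3 conv": (3, 1),
    "5x5 conv": (5, 2),
    "3x3 avg pool": (3, 1),
}
for name, (kernel, padding) in layers.items():
    # With stride 1 and these paddings the spatial size is unchanged.
    assert conv_out(12, kernel, padding=padding) == 12, name

# Output channels per branch, in the order they are concatenated.
branch_channels = {"branch1": 16, "branch5": 24, "branch3": 24, "branch_pool": 24}
total_channels = sum(branch_channels.values())
print(total_channels)  # 88, regardless of in_channels
```

Because the total is always 88, any layer that follows an Inception block of this shape must accept 88 input channels.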

```python
class GoogModel(nn.Module):
    def __init__(self):
        super(GoogModel, self).__init__()
        self.conv1 = nn.Conv2d(1, 10, kernel_size=5)
        # Each Inception block outputs 88 channels, so conv2 takes 88 inputs.
        self.conv2 = nn.Conv2d(88, 20, kernel_size=5)
        self.Inception1 = Inception(in_channels=10)
        self.Inception2 = Inception(in_channels=20)
        self.mp = nn.MaxPool2d(2)
        # 88 channels * 4 * 4 = 1408 features, assuming 1x28x28 inputs (e.g. MNIST).
        self.fc = nn.Linear(1408, 10)

    def forward(self, x):
        in_size = x.size(0)  # batch size
        x = F.relu(self.mp(self.conv1(x)))
        x = self.Inception1(x)
        x = F.relu(self.mp(self.conv2(x)))
        x = self.Inception2(x)
        x = x.view(in_size, -1)  # flatten to (batch, features)
        x = self.fc(x)
        return x
```
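The input size of the fully connected layer depends on the image size, which the text does not state. Assuming 1×28×28 inputs (the single input channel of `conv1` suggests MNIST-style images, but that is a guess), the shapes can be traced by hand. The sketch below does this in plain Python; `conv_out` is an illustrative helper, not a PyTorch function.

```python
def conv_out(size, kernel, stride=1, padding=0):
    """Output length along one spatial axis of a convolution or pooling layer."""
    return (size + 2 * padding - kernel) // stride + 1


INCEPTION_OUT_CHANNELS = 16 + 24 + 24 + 24  # the four concatenated branches

size = 28                                   # assumed input height and width
size = conv_out(size, kernel=5)             # conv1, no padding: 28 -> 24
size = conv_out(size, kernel=2, stride=2)   # max pool: 24 -> 12
# First Inception block: spatial size unchanged, 88 channels out.
size = conv_out(size, kernel=5)             # conv2, no padding: 12 -> 8
size = conv_out(size, kernel=2, stride=2)   # max pool: 8 -> 4
# Second Inception block: spatial size unchanged, 88 channels out.

flat_features = INCEPTION_OUT_CHANNELS * size * size
print(size, flat_features)  # 4 1408
```

Under that assumption, 1408 features reach the linear layer. A different input resolution would change this number, so the linear layer's input size has to be recomputed whenever the image size changes.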