谱聚类的实现

开始用accumulate做加和,结果发现laplacian矩阵求特征值后最小的特征值不是0,这是有问题的,聚类的准确率不是很高,原因也找不到。后来一步步查,发现diag矩阵有问题,最终查到accumulate是int相加,不准确,修改后准确率增加。

// spectral-cluster.cpp : 定义控制台应用程序的入口点。

//

#include "stdafx.h"

#include <iostream>

#include<vector>

#include<set>

#include <numeric>

#include <cv.h>

#include <highgui.h>

/*********************author Marshall**************************/

/************************2015.10.20****************************/

/*********************version 1.0******************************/

using namespace std;

class spectral;

class kmeans

{

friend class spectral;

private:

double*dataset;

unsigned int k;

unsigned int dim;

unsigned int numofdata;

typedef vector<double> Centroid;

vector<Centroid> center;

vector<set<int>>cluster_ID;

vector<Centroid>new_center;

vector<set<int>>new_cluster_ID;

double threshold;

int iter;

private:

void init();

void assign();

double distance(Centroid cen, int k2);

void split(vector<set<int>>&clusters, int kk);

void update_centers();

bool isfinish();

void show_result();

public:

kmeans(double*data, unsigned int datanums, const int dim, const int numofcluster)

:dataset(data), numofdata(datanums), dim(dim), k(numofcluster)

{

threshold = 0.0000001;

}

void apply();

};

//template <typename T>

void kmeans::init()

{

center.resize(k);

set<int>bb;

for (int i = 0; i < k; i++)

{

int id = double(rand()) / double(RAND_MAX + 1.0)*numofdata;

while (bb.find(id) != bb.end())

{

id = double(rand()) / double(RAND_MAX + 1.0)*numofdata;

}

bb.insert(id);

center[i].resize(dim);

for (int j = 0; j < dim; j++)

center[i][j] = dataset[id*dim + j];

}

}

bool kmeans::isfinish()

{

double error = 0;

for (int i = 0; i < k; i++)

{

for (int j = 0; j < dim; j++)

error += pow(center[i][j] - new_center[i][j], 2);

}

return error < threshold ? true : false;

}

void kmeans::assign()

{

for (int j = 0; j < numofdata; j++)

{

double mindis = 10000000;

int belongto = -1;

for (int i = 0; i < k; i++)

{

double dis = distance(center[i], j);

if (dis < mindis)

{

mindis = dis;

belongto = i;

}

}

new_cluster_ID[belongto].insert(j);

}

for (int i = 0; i < k; i++)

{

if (new_cluster_ID[i].empty())

{

split(new_cluster_ID, i);

}

}

}

double kmeans::distance(Centroid cen, int k2)

{

double dis = 0;

for (int i = 0; i < dim; i++)

dis += pow(cen[i] - dataset[k2*dim + i], 2);

return sqrt(dis);

}

void kmeans::split(vector<set<int>>&clusters, int kk)

{

int maxsize = 0;

int th = -1;

for (int i = 0; i < k; i++)

{

if (clusters[i].size() > maxsize)

{

maxsize = clusters[i].size();

th = i;

}

}

#define DELTA 3

vector<double>tpc1, tpc2;

tpc1.resize(dim);

tpc2.resize(dim);

for (int i = 0; i < dim; i++)

{

tpc2[i] = center[th][i] - DELTA;

tpc1[i] = center[th][i] + DELTA;

}

for (set<int>::iterator it = clusters[th].begin(); it != clusters[th].end(); it++)

{

double d1 = distance(tpc1, *it);

double d2 = distance(tpc2, *it);

if (d2 < d1)

{

clusters[kk].insert(*it);

}

}

_ASSERTE(!clusters[kk].empty());

for (set<int>::iterator it = clusters[kk].begin(); it != clusters[kk].end(); it++)

clusters[th].erase(*it);

}

void kmeans::update_centers()

{

for (int i = 0; i < k; i++)

{

Centroid temp;

temp.resize(dim);

for (set<int>::iterator j = new_cluster_ID[i].begin(); j != new_cluster_ID[i].end(); j++)

{

for (int m = 0; m < dim; m++)

temp[m] += dataset[(*j)*dim + m];

}

for (int m = 0; m < dim; m++)

temp[m] /= new_cluster_ID[i].size();

new_center[i] = temp;

}

}

void kmeans::apply()

{

init();

new_center.resize(k);

new_cluster_ID.resize(k);

assign();

update_centers();

iter = 0;

while (!isfinish())

{

center = new_center;

cluster_ID = new_cluster_ID;

new_center.clear();

new_center.resize(k);

new_cluster_ID.clear();

new_cluster_ID.resize(k);

assign();

update_centers();

iter++;

}

//new_cluster_ID.clear();

}

class spectral

{

private:

unsigned int dim;

unsigned int N;

double**normalized_data;

double*laplacian_matrix;

double*diag_matrix;

double*similarity_matrix;

bool is_normalized;

double sigma2;

unsigned int k_nearest;

unsigned int K;

unsigned int numofcluster;

private:

void create_similarity_matrix();

void create_diag_matrix();

void create_laplacian_matrix();

void eigen_of_laplacian(double*&newdata);

//void kmeans();

void normalize_data(double*&data, int datanums, int dim);

double euclidean(const double*data1, const double*data2);

bool is_knearest(const double*distance_matrix, const int kk, const int mm);

public:

spectral(double**data, const int N, const int dim, const int numofcluster);

void spectral_cluster(int*pClusters);

~spectral();

};

spectral::~spectral()

{

}

spectral::spectral(double**data, const int N, const int dim, const int numofcluster)

:N(N), dim(dim), numofcluster(numofcluster)

{

K = 4;

k_nearest = 15;

sigma2 = 600;

normalized_data = data;

is_normalized = false;

}

void spectral::normalize_data(double*&data, int datanums, int dim)

{

double*mins = new double[dim];

memset(mins, 0x3f3f3f3f, sizeof(double)*dim);

double*maxs = new double[dim];

memset(maxs, 0, sizeof(double)*dim);

for (int i = 0; i < datanums; i++)

for (int j = 0; j < dim; j++)

{

if (data[i*dim + j] > maxs[j])

{

maxs[j] = data[i*dim + j];

}

if (data[i*dim + j] < mins[j])

mins[j] = data[i*dim + j];

}

for (int i = 0; i < datanums; i++)

for (int j = 0; j < dim; j++)

data[i*dim + j] = 100 * (data[i*dim + j] - mins[j]) / (maxs[j] - mins[j]);

delete[]maxs, mins;

}

double spectral::euclidean(const double*data1, const double*data2)

{

double dis = 0;

for (int i = 0; i < dim; i++)

dis += pow(data1[i] - data2[i], 2);

return dis;

}

bool spectral::is_knearest(const double*distance_matrix,

const int kk, const int mm)

{

double dis = distance_matrix[kk*N + mm];

int count = 0;

//还要考虑distance_matrix[kk*N + kk]=0;

//因为k_nearest相比N很小(大多数情况),判断false好

for (int i = 0; i < N; i++)

if (i != kk&&distance_matrix[kk*N + i] < dis)

{

count++;

if (count > k_nearest)

return false;

}

return true;

}

void spectral::create_similarity_matrix()

{

double*distance_matrix = new double[N*N];

memset(distance_matrix, 0, N*N*sizeof(double));

for (int i = 0; i < N - 1; i++)

for (int j = i + 1; j < N; j++)

{

distance_matrix[i*N + j] = euclidean

(normalized_data[i], normalized_data[j]);

distance_matrix[j*N + i] = distance_matrix[i*N + j];

}

if (similarity_matrix)

delete[]similarity_matrix;

similarity_matrix = new double[N*N];

memset(similarity_matrix, 0, N*N*sizeof(double));

//这里使用对称k最近邻

for (int i = 0; i < N - 1; i++)

{

for (int j = i + 1; j < N; j++)

{

if (is_knearest(distance_matrix, i, j)

|| is_knearest(distance_matrix, j, i))

{

similarity_matrix[i*N + j] = similarity_matrix[j*N + i]

= 100 * exp(-distance_matrix[i*N + j] / sigma2);

}

//cout << similarity_matrix[i*N + j] << " ";

}

//cout << endl << endl << endl << endl;

}

/*for (int i = 0; i < N; i++)

{

for (int j = 0; j < N; j++)

{

cout << similarity_matrix[i*N + j] << " ";

}

cout << endl << endl << endl << endl;

}*/

delete[]distance_matrix;

}

void spectral::create_diag_matrix()

{

if (diag_matrix)

delete[]diag_matrix;

diag_matrix = new double[N*N];

memset(diag_matrix, 0, N*N*sizeof(double));

for (int i = 0; i < N; i++)

{

//accumulate是int相加,不准确,修改后准确率增加

//diag_matrix[i*N + i] = accumulate(similarity_matrix + i*N, similarity_matrix + i*N + N, 0);

double sum = 0;

for (int j = 0; j < N; j++)

sum += similarity_matrix[i*N + j];

diag_matrix[i*N + i] = sum;

//cout << "diag" << i << " " << diag_matrix[i*N + i] << endl;

}

}

void spectral::create_laplacian_matrix()

{

if (laplacian_matrix)

delete[]laplacian_matrix;

laplacian_matrix = new double[N*N];

for (int i = 0; i < N; i++)

for (int j = i; j < N; j++)

{

laplacian_matrix[i*N + j] = laplacian_matrix[j*N + i]

= diag_matrix[i*N + j] - similarity_matrix[i*N + j];

}

if (is_normalized)

{

for (int i = 0; i < N; i++)

for (int j = 0; j < N; j++)

{

int temp = i == j ? 1 : 0;

laplacian_matrix[i*N + j] = temp - 1.0 / sqrt(diag_matrix[j*N + j]

+ 1.0e-13)*laplacian_matrix[i*N + j] * 1.0 / sqrt(diag_matrix[i*N + i] + 1.0e-13);

}

}

delete[]diag_matrix, similarity_matrix;

}

void spectral::eigen_of_laplacian(double*&newdata)

{

//CvMat Ma = cvMat(N, N, CV_64FC1, laplacian_matrix);

CvMat *pSrcMatrix = cvCreateMat(N, N, CV_64FC1);

// E = 对应的特征向量 (每行)

CvMat* E = cvCreateMat(N, N, CV_64FC1);

CvMat* l = cvCreateMat(N, 1, CV_64FC1);

for (int i = 0; i < N; i++)

{

cvmSet(l, i, 0, 0);

for (int j = 0; j < N; j++)

{

cvmSet(pSrcMatrix, i, j, laplacian_matrix[i*N + j]);

cvmSet(E, i, j, 0);

}

}

cvEigenVV(pSrcMatrix, E, l); // l = A的特征值 (降序排列)

for (int i = 0; i < N; i++)

cout << cvmGet(l, i, 0) << " ";//半正定,最小的特征值应该是0,应该在此处验证

for (int i = 0; i < N; i++)

{

//cout << cvmGet(E, N - 1 , i);

for (int j = 0; j < K; j++)

{

newdata[i*K + j] = cvmGet(E, N - 2 - j, i);

}

}

delete[]laplacian_matrix;

// 释放矩阵所占内存空间

cvReleaseMat(&pSrcMatrix);

cvReleaseMat(&E);

cvReleaseMat(&l);

}

void spectral::spectral_cluster(int*pClusters)

{

create_similarity_matrix();

create_diag_matrix();

create_laplacian_matrix();

double*newdata = new double[N*K];

eigen_of_laplacian(newdata);

//normalize_data(newdata, this->N, dim);

kmeans km(newdata, N, K, this->numofcluster);

km.apply();

delete[]newdata;

newdata = NULL;

int count = 0;

for (int i = 0; i < km.new_cluster_ID.size(); i++)

for (set<int>::iterator it = km.new_cluster_ID[i].begin(); it != km.new_cluster_ID[i].end(); it++)

{

pClusters[*it] = i;

count++;

}

_ASSERTE(count == N);

}

int _tmain(int argc, _TCHAR* argv[])

{

#define MAX_CLUSTERS 5

CvScalar color_tab[MAX_CLUSTERS];

IplImage* img = cvCreateImage(cvSize(500, 500), IPL_DEPTH_8U, 3);

CvRNG rng = cvRNG(cvGetTickCount());

CvPoint ipt;

color_tab[0] = CV_RGB(255, 0, 0);

color_tab[1] = CV_RGB(0, 255, 0);

color_tab[2] = CV_RGB(100, 100, 255);

color_tab[3] = CV_RGB(255, 0, 255);

color_tab[4] = CV_RGB(255, 255, 0);

int clusterNum = 4;

int sampleNum = 500;

int feaNum = 2;

CvMat* sampleMat = cvCreateMat(sampleNum, 1, CV_32FC2);

/* generate random sample from multigaussian distribution */

//产生高斯随机数

for (int k = 0; k < clusterNum; k++)

{

CvPoint center;

int topIndex = k*sampleNum / clusterNum;

int bottomIndex = (k == clusterNum - 1 ? sampleNum : (k + 1)*sampleNum / clusterNum);

CvMat* localMat = cvCreateMatHeader(bottomIndex - topIndex, 1, CV_32FC2);

center.x = cvRandInt(&rng) % img->width;

center.y = cvRandInt(&rng) % img->height;

cvGetRows(sampleMat, localMat, topIndex, bottomIndex, 1); //此函数不会给localMat分配空间,不包含bottomIndex

cvRandArr(&rng, localMat, CV_RAND_NORMAL,

cvScalar(center.x, center.y, 0, 0),

cvScalar(img->width*0.1, img->height*0.1, 0, 0));

}

// shuffle samples 混乱数据

for (int i = 0; i < sampleNum / 2; i++)

{

CvPoint2D32f* pt1 = (CvPoint2D32f*)sampleMat->data.fl + cvRandInt(&rng) % sampleNum;

CvPoint2D32f* pt2 = (CvPoint2D32f*)sampleMat->data.fl + cvRandInt(&rng) % sampleNum;

CvPoint2D32f temp;

CV_SWAP(*pt1, *pt2, temp);

}

double** pSamples = new double*[sampleNum];

for (int i = 0; i < sampleNum; i++)

pSamples[i] = new double[feaNum];

int* pClusters = new int[sampleNum];

for (int i = 0; i < sampleNum; i++)

{

//feaNum=2

pSamples[i][0] = (cvGet2D(sampleMat, i, 0)).val[0];

//cout << pSamples[i][0] << " ";

pSamples[i][1] = (cvGet2D(sampleMat, i, 0)).val[1];

//cout << pSamples[i][1] << endl;

}

cvReleaseMat(&sampleMat);

cvZero(img);

for (int i = 0; i < sampleNum; i++)

{

//feaNum=2

ipt.x = pSamples[i][0];

ipt.y = pSamples[i][1];

cvCircle(img, ipt, 1, cvScalar(255, 255, 255), CV_FILLED, CV_AA, 0);

}

cvSaveImage("origin.jpg", img);

//执行谱聚类

spectral sp(pSamples, sampleNum, feaNum, clusterNum);

sp.spectral_cluster(pClusters);

cvZero(img);

for (int i = 0; i < sampleNum; i++)

{

//feaNum=2

int cluster_idx = pClusters[i];

ipt.x = pSamples[i][0];

ipt.y = pSamples[i][1];

cvCircle(img, ipt, 1, color_tab[cluster_idx], CV_FILLED, CV_AA, 0);

}

delete[] pClusters;

pClusters = NULL;

delete[] pSamples;

pSamples = NULL;

cvNamedWindow("clusters");

cvShowImage("clusters", img);

cvWaitKey(0);

cvSaveImage("clusters.jpg", img);

cvReleaseImage(&img);

system("pause");

cvDestroyWindow("clusters");

return 0;

}

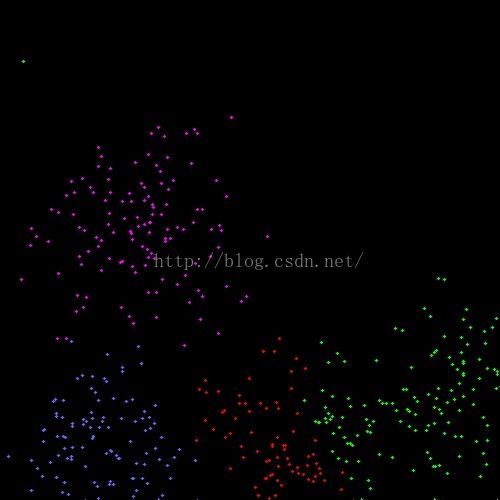

下面是聚类结果。

嫌c++冗长的看这个python的

#!/usr/bin/python

# copyright (c) 2008 Feng Zhu, Yong Sun

import heapq

from functools import partial

from numpy import *

from scipy.linalg import *

from scipy.cluster.vq import *

import pylab

def line_samples ():

vecs = random.rand (120, 2)

vecs [:,0] *= 3

vecs [0:40,1] = 1

vecs [40:80,1] = 2

vecs [80:120,1] = 3

return vecs

def gaussian_simfunc (v1, v2, sigma=1):

tee = (-norm(v1-v2)**2)/(2*(sigma**2))

return exp (tee)

def construct_W (vecs, simfunc=gaussian_simfunc):

n = len (vecs)

W = zeros ((n, n))

for i in xrange(n):

for j in xrange(i,n):

W[i,j] = W[j,i] = simfunc (vecs[i], vecs[j])

return W

def knn (W, k, mutual=False):

n = W.shape[0]

assert (k>0 and k<(n-1))

for i in xrange(n):

thr = heapq.nlargest(k+1, W[i])[-1]

for j in xrange(n):

if W[i,j] < thr:

W[i,j] = -W[i,j]

for i in xrange(n):

for j in xrange(i, n):

if W[i,j] + W[j,i] < 0:

W[i,j] = W[j,i] = 0

elif W[i,j] + W[j,i] == 0:

W[i,j] = W[j,i] = 0 if mutual else abs(W[i,j])

vecs = line_samples()

W = construct_W (vecs, simfunc=partial(gaussian_simfunc, sigma=2))

knn (W, 10)

D = diag([reduce(lambda x,y:x+y, Wi) for Wi in W])

L = D - W

evals, evcts = eig(L,D)

vals = dict (zip(evals, evcts.transpose()))

keys = vals.keys()

keys.sort()

Y = array ([vals[k] for k in keys[:3]]).transpose()

res,idx = kmeans2(Y, 3, minit='points')

colors = [(1,2,3)[i] for i in idx]

pylab.scatter(vecs[:,0],vecs[:,1],c=colors)

pylab.show()