Notes on Machine Learning in Action: Naive Bayes (2), Text Classification

Background: the example is an online community message board. We screen its posts and label abusive posts as 1 and normal (non-abusive) posts as 0.

As before, start by importing numpy:

from numpy import *

Create a small dataset to work with:

def loadDataSet():
    postingList = [['my', 'dog', 'has', 'flea', 'problems', 'help', 'please'],
                   ['maybe', 'not', 'take', 'him', 'to', 'dog', 'park', 'stupid'],
                   ['my', 'dalmation', 'is', 'so', 'cute', 'I', 'love', 'him'],
                   ['stop', 'posting', 'stupid', 'worthless', 'garbage'],
                   ['mr', 'licks', 'ate', 'my', 'steak', 'how', 'to', 'stop', 'him'],
                   ['quit', 'buying', 'worthless', 'dog', 'food', 'stupid']]
    classVec = [0, 1, 0, 1, 0, 1]  # 1 is abusive, 0 not
    return postingList, classVec  # return the matrix (a list of lists) and the label vector

At a glance, every post that contains stupid is labeled abusive. The matrix is read row by row: each row is one document (post), and each will later become one feature vector.
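That observation is easy to check. The sketch below is plain Python 3 and copies the dataset from loadDataSet. It tests whether "contains stupid" and "labeled abusive" coincide on every post. `stupidMatchesLabel` is a hypothetical helper written only for this check.

```python
# Same posts and labels as loadDataSet(); 1 = abusive, 0 = normal
postingList = [['my', 'dog', 'has', 'flea', 'problems', 'help', 'please'],
               ['maybe', 'not', 'take', 'him', 'to', 'dog', 'park', 'stupid'],
               ['my', 'dalmation', 'is', 'so', 'cute', 'I', 'love', 'him'],
               ['stop', 'posting', 'stupid', 'worthless', 'garbage'],
               ['mr', 'licks', 'ate', 'my', 'steak', 'how', 'to', 'stop', 'him'],
               ['quit', 'buying', 'worthless', 'dog', 'food', 'stupid']]
classVec = [0, 1, 0, 1, 0, 1]

def stupidMatchesLabel(posts, labels):
    """Hypothetical helper: True if 'stupid' appears in exactly the posts labeled 1."""
    return all(('stupid' in post) == (label == 1) for post, label in zip(posts, labels))

print(stupidMatchesLabel(postingList, classVec))  # True
```

On this toy data, stupid appears in all three abusive posts and in none of the normal ones. That is why the word becomes such a strong signal later on.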

Create the vocabulary list

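def createVocabList(dataSet):  # input: the document matrix
    vocabSet = set([])  # set: an unordered collection with no duplicate elements
    for document in dataSet:
        vocabSet = vocabSet | set(document)  # set union: new vocabulary = old vocabulary + the words of this row
    return list(vocabSet)  # return the vocabulary as a list

The resulting vocabulary is a list containing every word that appears anywhere in the matrix, each exactly once.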
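A Python set has no defined order, so the column order produced by `list(vocabSet)` is arbitrary. In Python 3 it can even change between runs, because string hashing is randomized. As a result, which word comes "first" in the vocabulary is not fixed. If a reproducible column order is wanted, one option is to sort the vocabulary. `createVocabListSorted` is a hypothetical variant and does not come from the book.

```python
def createVocabListSorted(dataSet):
    """Hypothetical variant of createVocabList: same words, but in sorted order."""
    vocabSet = set()
    for document in dataSet:
        vocabSet |= set(document)  # union with the words of this document
    return sorted(vocabSet)  # sorted list gives a reproducible column order

docs = [['my', 'dog', 'has', 'flea'], ['stupid', 'dog'], ['my', 'cute', 'dog']]
print(createVocabListSorted(docs))  # ['cute', 'dog', 'flea', 'has', 'my', 'stupid']
```

Note that dog appears only once in the result even though it occurs in all three documents.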

Convert a document into a 0/1 vector over the vocabulary

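def setOfWords2Vec(vocabList, inputSet):  # input: vocabulary list + the document (list of words) to convert
    returnVec = [0] * len(vocabList)
    for eachWord in inputSet:
        if eachWord in vocabList:
            returnVec[vocabList.index(eachWord)] = 1
        else:
            print("the word: %s is not in my Vocabulary!" % eachWord)
    return returnVec  # 0/1 vector: 1 where the vocabulary word appears in the document

For example, if the vocabulary is a to z and the input document is abc, the function returns 111000000... (only the first three positions are 1).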
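The a-to-z example can be run directly. The sketch below re-declares setOfWords2Vec with a Python 3 print so that it is self-contained. The single-letter vocabulary is just the toy example from the text.

```python
import string

def setOfWords2Vec(vocabList, inputSet):
    returnVec = [0] * len(vocabList)
    for eachWord in inputSet:
        if eachWord in vocabList:
            returnVec[vocabList.index(eachWord)] = 1  # mark the word as present
        else:
            print("the word: %s is not in my Vocabulary!" % eachWord)
    return returnVec

vocab = list(string.ascii_lowercase)  # ['a', 'b', ..., 'z']
vec = setOfWords2Vec(vocab, ['a', 'b', 'c'])
print(''.join(str(v) for v in vec))  # 111 followed by 23 zeros
```

The function only records presence. A document like ['a', 'a'] therefore still yields a single 1, at position 0.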

That completes the preparation.

Next is the training function for the naive Bayes classifier:

def trainNB0(trainMatrix, trainCategory):  # input: document 0/1 matrix + label vector
    numTrainDocs = len(trainMatrix)  # number of rows = number of training documents (1 row = 1 document)
    numWords = len(trainMatrix[0])  # number of columns = vocabulary size
    pAbusive = sum(trainCategory) / float(numTrainDocs)  # probability of an abusive document, p(c1); p(c0) = 1 - p(c1)
    p0Num = zeros(numWords); p1Num = zeros(numWords)  # per-word counts, initialized to 0
    p0Denom = 0.0; p1Denom = 0.0  # total word counts, initialized to 0
    for i in range(numTrainDocs):
        if trainCategory[i] == 1:
            p1Num += trainMatrix[i]  # accumulate the 0/1 vectors of class-1 documents
            p1Denom += sum(trainMatrix[i])  # total number of words in class-1 documents
        else:
            p0Num += trainMatrix[i]
            p0Denom += sum(trainMatrix[i])
    p1Vect = p1Num / p1Denom  # probability of each vocabulary word in abusive documents
    p0Vect = p0Num / p0Denom  # probability of each vocabulary word in normal documents
    return p0Vect, p1Vect, pAbusive  # word probabilities for normal and abusive, plus p(c1)

Let's test it:

listOPosts, listClasses = loadDataSet()

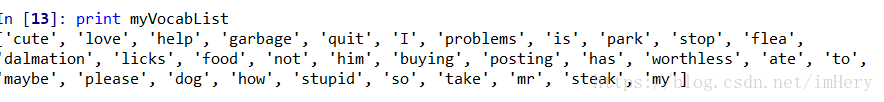

myVocabList = createVocabList(listOPosts)

trainMat=[]

for post in listOPosts:

trainMat.append(setOfWords2Vec(myVocabList, post))

p0V, p1V, pAb = trainNB0(trainMat, listClasses)

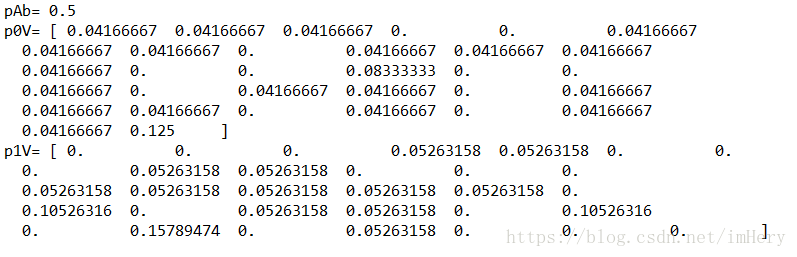

print 'pAb=',pAb, '\n', 'p0V=',p0V, '\n', 'p1V=',p1V载入数据,构建包含所有词的词表myVocabList,再用for循环填充trainMat,套入trainNB0函数

As expected, pAb = 0.5, since 3 of the 6 posts are abusive.

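Here the first word in the vocabulary is cute (set order is arbitrary, so this may differ in another run). It appears once in class 0, whose three posts contain 7, 8, and 9 words, so its probability there is 1/(7+8+9) = 1/24 ≈ 0.0417. It never appears in class 1, so its probability there is 0. Both values match the data.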
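The 1/(7+8+9) figure can be checked by counting directly. This plain-Python sketch (no numpy) counts, per class, how many documents contain a word and how many distinct words those documents hold in total. Every post word is in the vocabulary, so these are the same sums the first version of trainNB0 accumulates. `wordProb` is a hypothetical helper.

```python
# Same posts and labels as loadDataSet(); 1 = abusive, 0 = normal
postingList = [['my', 'dog', 'has', 'flea', 'problems', 'help', 'please'],
               ['maybe', 'not', 'take', 'him', 'to', 'dog', 'park', 'stupid'],
               ['my', 'dalmation', 'is', 'so', 'cute', 'I', 'love', 'him'],
               ['stop', 'posting', 'stupid', 'worthless', 'garbage'],
               ['mr', 'licks', 'ate', 'my', 'steak', 'how', 'to', 'stop', 'him'],
               ['quit', 'buying', 'worthless', 'dog', 'food', 'stupid']]
classVec = [0, 1, 0, 1, 0, 1]

def wordProb(word, cls):
    """Hypothetical helper: unsmoothed p(word | class) as in the first trainNB0."""
    docs = [set(p) for p, c in zip(postingList, classVec) if c == cls]
    count = sum(word in d for d in docs)  # class documents containing the word
    total = sum(len(d) for d in docs)     # sum of the class's 0/1 vectors
    return count / total

pAb = sum(classVec) / len(classVec)
print(pAb)                  # 0.5
print(wordProb('cute', 0))  # 1/24, about 0.0417
print(wordProb('cute', 1))  # 0.0
```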

However, this classifier has some shortcomings:

1.


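A document's likelihood is computed as the product p(w|c_i) = p(w_0|c_i) p(w_1|c_i) ... p(w_N|c_i). If any one of these probabilities is 0 (for example, cute never appears in class 1), the whole product is 0.

To avoid this, initialize every word's count to 1 and both denominators to 2: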

p0Num = ones(numWords); p1Num = ones(numWords) #change to ones()

p0Denom = 2.0; p1Denom = 2.0 #change to 2.0
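A toy illustration shows both the problem and the fix. It uses made-up counts for three words in one class; the numbers are hypothetical and chosen only for this example. A single zero count wipes out the whole product. Starting counts at 1 and the denominator at 2, as the book does, keeps every factor positive.

```python
import math

counts = [3, 0, 2]   # hypothetical counts of three words in one class
total = sum(counts)  # 5

unsmoothed = [c / total for c in counts]            # [0.6, 0.0, 0.4]
smoothed = [(c + 1) / (total + 2) for c in counts]  # counts start at 1, denominator at 2

print(math.prod(unsmoothed))  # 0.0: one zero factor kills the product
print(math.prod(smoothed))    # 12/343, small but nonzero
```

The +1/+2 start is the book's simple choice. Standard Laplace (add-one) smoothing for a multinomial word model would instead add the vocabulary size to the denominator. Either way, no factor is 0.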

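2.

In addition, multiplying many very small numbers causes underflow: the product rounds to 0 in floating point. The goal is only to compare which class's numerator is larger, not to obtain its exact value. So we can take the logarithm of each factor and add the logs instead. Since log(a·b) = log(a) + log(b) and log is monotonically increasing, the comparison is unchanged.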

p1Vect = log(p1Num/p1Denom) #change to log()

p0Vect = log(p0Num/p0Denom) #change to log()
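A quick numeric illustration of the underflow follows. The probabilities 0.01 and 0.02 and the count of 200 factors are arbitrary demo values. Both direct products fall below the smallest positive double and become 0.0, so they can no longer be compared. The sums of logs stay finite and still rank the two correctly.

```python
import math

def product(xs):
    result = 1.0
    for x in xs:
        result *= x  # repeated multiplication, as in the unmodified classifier
    return result

def logScore(xs):
    return sum(math.log(x) for x in xs)  # log of the product, computed as a sum

docA = [0.01] * 200  # arbitrary demo probabilities
docB = [0.02] * 200

print(product(docA), product(docB))     # 0.0 0.0: both underflow, so the comparison is lost
print(logScore(docA) < logScore(docB))  # True: the log scores still rank B above A
```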

After these changes:

def trainNB0(trainMatrix, trainCategory):
    numTrainDocs = len(trainMatrix)
    numWords = len(trainMatrix[0])
    pAbusive = sum(trainCategory) / float(numTrainDocs)
    p0Num = ones(numWords); p1Num = ones(numWords)  # change to ones()
    p0Denom = 2.0; p1Denom = 2.0  # change to 2.0
    for i in range(numTrainDocs):
        if trainCategory[i] == 1:
            p1Num += trainMatrix[i]
            p1Denom += sum(trainMatrix[i])
        else:
            p0Num += trainMatrix[i]
            p0Denom += sum(trainMatrix[i])
    p1Vect = log(p1Num / p1Denom)  # change to log()
    p0Vect = log(p0Num / p0Denom)  # change to log()
    return p0Vect, p1Vect, pAbusive

The classifier is now trained. It extracts statistics from the training set, which are used to compute the numerator of Bayes' formula.
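Written out, the quantity being compared is shown below. Here x_j is 1 if vocabulary word j appears in the document (the 0/1 vector), and c_i is the class. The naive independence assumption factorizes p(w|c_i). Since p(w) is the same for both classes, only the numerator matters, and after the log change it becomes a sum:

```latex
p(c_i \mid \mathbf{w}) = \frac{p(\mathbf{w} \mid c_i)\, p(c_i)}{p(\mathbf{w})},
\qquad
p(\mathbf{w} \mid c_i) \approx \prod_{j:\, x_j = 1} p(w_j \mid c_i)

\log\bigl(p(\mathbf{w} \mid c_i)\, p(c_i)\bigr) \approx \sum_{j} x_j \log p(w_j \mid c_i) + \log p(c_i)
```

The element-wise product of the 0/1 vector with a log-probability vector, followed by a sum, is exactly the first term on the right.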

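Now consider feeding in a vector to classify, in the vocabulary 0/1 form; the classifier returns a prediction, as in the following function: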

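def classifyNB(vec2Classify, p0Vec, p1Vec, pClass1):  # input: vector to classify, log word probabilities of both classes, p(c1)
    p1 = sum(vec2Classify * p1Vec) + log(pClass1)  # element-wise product keeps the log probabilities of the words present; p1 is the log of the Bayes numerator
    p0 = sum(vec2Classify * p0Vec) + log(1.0 - pClass1)
    if p1 > p0:
        return 1
    else:
        return 0

Finally, a test function that wraps up the same steps as the earlier test: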

def testingNB():

listOPosts,listClasses = loadDataSet()

myVocabList = createVocabList(listOPosts)

trainMat=[]

for postinDoc in listOPosts:

trainMat.append(setOfWords2Vec(myVocabList, postinDoc))

p0V,p1V,pAb = trainNB0(array(trainMat),array(listClasses))

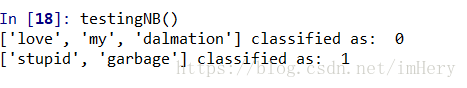

testEntry = ['love', 'my', 'dalmation']

thisDoc = array(setOfWords2Vec(myVocabList, testEntry))

print testEntry,'classified as: ',classifyNB(thisDoc,p0V,p1V,pAb)

testEntry = ['stupid', 'garbage']

thisDoc = array(setOfWords2Vec(myVocabList, testEntry))

print testEntry,'classified as: ',classifyNB(thisDoc,p0V,p1V,pAb)果然出现stupid的还是归为侮辱类的了
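To see why each test entry lands where it does, this plain-Python sketch (no numpy) recomputes the two log numerators. It uses the same smoothing as the revised trainNB0: counts start at 1, and the denominator starts at 2 plus the class's total of distinct words per document. `logNumerator` is a hypothetical helper, not from the book. It assumes every test word is in the vocabulary, which holds for both test entries.

```python
import math

# Same posts and labels as loadDataSet(); 1 = abusive, 0 = normal
postingList = [['my', 'dog', 'has', 'flea', 'problems', 'help', 'please'],
               ['maybe', 'not', 'take', 'him', 'to', 'dog', 'park', 'stupid'],
               ['my', 'dalmation', 'is', 'so', 'cute', 'I', 'love', 'him'],
               ['stop', 'posting', 'stupid', 'worthless', 'garbage'],
               ['mr', 'licks', 'ate', 'my', 'steak', 'how', 'to', 'stop', 'him'],
               ['quit', 'buying', 'worthless', 'dog', 'food', 'stupid']]
classVec = [0, 1, 0, 1, 0, 1]

def logNumerator(words, cls):
    """Hypothetical helper: log(p(w | c) p(c)) with the revised trainNB0's smoothing.

    Assumes every word is in the vocabulary (true for both test entries).
    """
    docs = [set(p) for p, c in zip(postingList, classVec) if c == cls]
    denom = 2.0 + sum(len(d) for d in docs)                 # like p0Denom/p1Denom starting at 2.0
    score = math.log(classVec.count(cls) / len(classVec))   # log p(c)
    for w in set(words):                                    # set-of-words: each word counts once
        count = 1 + sum(w in d for d in docs)               # like p0Num/p1Num starting at ones()
        score += math.log(count / denom)
    return score

for entry in (['love', 'my', 'dalmation'], ['stupid', 'garbage']):
    s0, s1 = logNumerator(entry, 0), logNumerator(entry, 1)
    print(entry, 'class 0: %.3f  class 1: %.3f' % (s0, s1), '->', 1 if s1 > s0 else 0)
```

For ['stupid', 'garbage'], stupid occurs in all three abusive posts. Class 1 therefore gets factors 4/21 and 2/21, against 1/26 and 1/26 in class 0, and class 1 wins.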