Circle Loss: A Unified Perspective of Pair Similarity Optimization

Circle Loss: A Unified Perspective of Pair Similarity Optimization

- Abstract

- 1. Introduction

- 2. A Unified Perspective

- 3. A New Loss Function

- 3.1. Self-paced Weighting

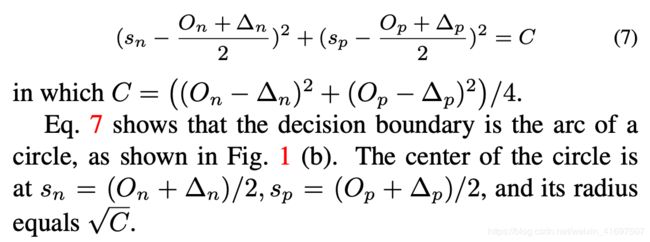

- 3.2. Within-class and Between-class Margins

- 3.3. The Advantages of Circle Loss

- 4. Experiment

- 4.1. Settings

- 4.2. Face Recognition

- 4.3. Person Re-identification

- 4.4. Fine-grained Image Retrieval

- 4.5. Impact of the Hyper-parameters

- 4.6. Investigation of the Characteristics

- 5. Conclusion

- References

Abstract

This paper provides a pair similarity optimization view- point on deep feature learning, aiming to maximize the within-class similarity sp and minimize the between-class similarity sn. We find a majority of loss functions, includ- ing the triplet loss and the softmax plus cross-entropy loss, embed sn and sp into similarity pairs and seek to reduce (sn − sp). Such an optimization manner is inflexible, be- cause the penalty strength on every single similarity score is restricted to be equal. Our intuition is that if a similarity score deviates far from the optimum, it should be empha- sized. To this end, we simply re-weight each similarity to highlight the less-optimized similarity scores. It results in a Circle loss, which is named due to its circular decision boundary. The Circle loss has a unified formula for two elemental deep feature learning approaches, i.e., learning with class-level labels and pair-wise labels. Analytically, we show that the Circle loss offers a more flexible optimiza- tion approach towards a more definite convergence target, compared with the loss functions optimizing (sn − sp). Ex- perimentally, we demonstrate the superiority of the Circle loss on a variety of deep feature learning tasks. On face recognition, person re-identification, as well as several fine- grained image retrieval datasets, the achieved performance is on par with the state of the art.

本文提供了关于深度特征学习的一对相似度优化观点,旨在最大化类内相似度sp并最小化类间相似度sn。我们发现了大多数损失函数,包括三重态损失和softmax加上交叉熵损失,将sn和sp嵌入相似对并试图降低(sn-sp)。这种优化方式是不灵活的,因为每个相似度得分上的惩罚强度都被限制为相等。我们的直觉是,如果相似性得分偏离最佳值,则应强调它。为此,我们仅需对每个相似度重新加权,以突出显示未优化的相似度得分。这会导致圆损失,由于其圆形决策边界而被命名。 Circle loss具有两种基本的深度特征学习方法的统一公式,即使用类级标签和成对标签进行学习。从分析上,我们表明,与损失函数优化(sn-sp)相比,Circle损失为更确定的收敛目标提供了更灵活的优化方法。实验上,我们证明了Circle Loss在各种深度特征学习任务中的优越性。在人脸识别,人员重新识别以及几个细粒度的图像检索数据集上,实现的性能与最新技术水平相当。

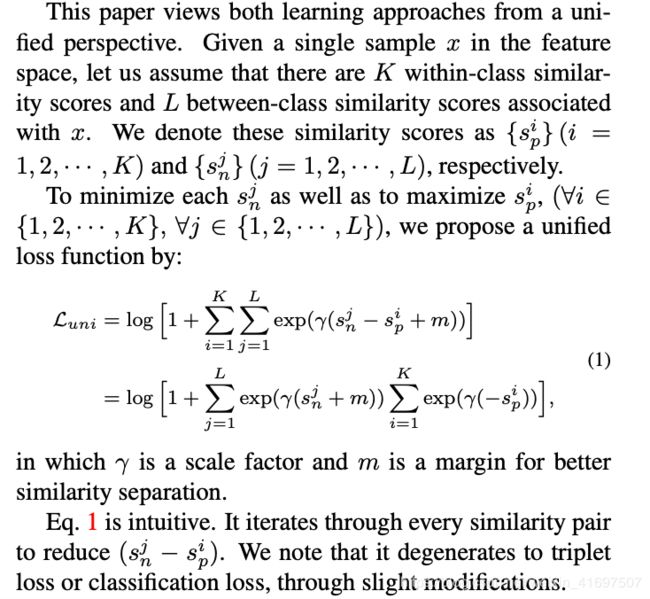

Figure 1: Comparison between the popular optimization manner of reducing (sn −sp) and the proposed optimization manner of reducing (αnsn −αpsp). (a) Reducing (sn −sp) is prone to inflexible optimization (A, B and C all have equal gradients with respect to sn and sp), as well as am- biguous convergence status (both T and T ′ on the decision boundary are acceptable). (b) With (αnsn −αpsp), the Cir- cle loss dynamically adjusts its gradients on sp and sn, and thus benefits from flexible optimization process. For A, it emphasizes on increasing sp; for B, it emphasizes on reduc- ing sn. Moreover, it favors a specified point T on the circu- lar decision boundary for convergence, setting up a definite convergence target.

图1:流行的还原优化方法(sn -sp)与建议的还原优化方法(αnsn-αpsp)比较。 (a)减少(sn -sp)倾向于不灵活的优化(A,B和C相对于sn和sp都具有相同的梯度),以及模糊的收敛状态(决策边界上的T和T’都一样) 是可以接受的)。 (b)利用(αnsn-αpsp),圆环损耗动态地调整其在sp和sn上的梯度,因此得益于灵活的优化过程。 对于A,它强调增加sp。 对于B,它强调减少sn。 此外,它有利于在圆周决策边界上指定点T进行收敛,从而确定确定的收敛目标。

1. Introduction

This paper holds a similarity optimization view towards two elemental deep feature learning approaches, i.e., learn- ing from data with class-level labels and from data with pair-wise labels. The former employs a classification loss function (e.g., Softmax plus cross-entropy loss [25, 16, 36]) to optimize the similarity between samples and weight vec- tors. The latter leverages a metric loss function (e.g., triplet loss [9, 22]) to optimize the similarity between samples. In our interpretation, there is no intrinsic difference between these two learning approaches. They both seek to minimize between-class similarity sn, as well as to maximize within- class similarity sp.

From this viewpoint, we find that many popular loss functions (e.g., triplet loss [9, 22], Softmax loss and its vari- ants [25, 16, 36, 29, 32, 2]) share a similar optimization pat- tern. They all embed sn and sp into similarity pairs and seek to reduce (sn −sp). In (sn −sp), increasing sp is equivalent to reducing sn. We argue that this symmetric optimization manner is prone to the following two problems.

• Lack of flexibility for optimization. The penalty strength on sn and sp is restricted to be equal. Given the specified loss functions, the gradients with respect to sn and sp are of same amplitudes (as detailed in Section 2). In some corner cases, e.g., sp is small and sn already ap- proaches 0 (“A” in Fig. 1 (a)), it keeps on penalizing sn with large gradient. It is inefficient and irrational.

本文针对两种基本的深度特征学习方法(即从具有类级别标签的数据和具有成对标签的数据中学习)持相似性优化观点。前者采用分类损失函数(例如,Softmax加交叉熵损失[25,16,36])来优化样本和权重向量之间的相似度。后者利用度量损失函数(例如,三重损失[9,22])来优化样本之间的相似度。在我们的解释中,这两种学习方法之间没有内在的区别。他们都试图最小化类间相似度sn,以及最大化类内相似度sp。

从这个角度来看,我们发现许多流行的损失函数(例如,三重损失[9,22],Softmax损失及其变体[25、16、36、29、32、2])具有相似的优化模式。它们都将sn和sp嵌入相似对,并寻求减小(sn -sp)。在(sn -sp)中,增加sp等于减少sn。我们认为这种对称优化方式容易出现以下两个问题。

•缺乏优化的灵活性。 sn和sp的惩罚强度被限制为相等。给定指定的损耗函数,关于sn和sp的梯度具有相同的幅度(如第2节中所述)。在某些极端情况下,例如,sp很小,并且sn已经接近0(图1(a)中的“ A”),它会继续以大梯度惩罚sn。它效率低下且不合理。

• Ambiguous convergence status. Optimizing (sn−sp)

•收敛状态不明确。 优化(sn-sp)

Being simple, Circle loss intrinsically reshapes the char- acteristics of the deep feature learning from the following three aspects:

First, a unified loss function. From the unified similar- ity pair optimization perspective, we propose a unified loss function for two elemental learning approaches, learning with class-level labels and with pair-wise labels.

Second, flexible optimization. During training, the gradient back-propagated to sn (sp) will be amplified by αn (αp). Those less-optimized similarity scores will have larger weighting factors and consequentially get larger gra- dient. As shown in Fig. 1 (b), the optimization on A, B and C are different to each other.

Third, definite convergence status. On the circular de- cision boundary, Circle loss favors a specified convergence status (“T” in Fig. 1 (b)), as to be demonstrated in Sec- tion 3.3. Correspondingly, it sets up a definite optimization target and benefits the separability.

The main contributions of this paper are summarized as follows:

简单来说,圆损失从以下三个方面从本质上重塑了深度特征学习的特征:

一是统一亏损功能。从统一相似度对优化的角度来看,我们为两种基本学习方法(使用类级标签和逐对标签的学习方法)提出了统一的损失函数。

第二,灵活优化。在训练期间,反向传播到sn(sp)的梯度将被αn(αp)放大。那些不太优化的相似性分数将具有较大的权重因子,因此会获得较大的梯度。如图1(b)所示,对A,B和C的优化互不相同。

第三,确定收敛状态。在圆弧决策边界上,圆弧损耗倾向于指定的收敛状态(图1(b)中的“ T”),如第3.3节所示。相应地,它建立了明确的优化目标并有利于可分离性。

本文的主要贡献概述如下:

• We propose Circle loss, a simple loss function for deep feature learning. By re-weighting each similarity score under supervision, Circle loss benefits the deep feature learning with flexible optimization and definite conver- gence target.

• We present Circle loss with compatibility to both class- level labels and pair-wise labels. Circle loss degener- ates to triplet loss or Softmax loss with slight modifi- cations.

• We conduct extensive experiment on a variety of deep feature learning tasks, e.g. face recognition, person re- identification, car image retrieval and so on. On all these tasks, we demonstrate the superiority of Circle loss with performance on par with the state of the art.

•我们提出了Circle损失,这是用于深度特征学习的简单损失功能。 通过在监督下对每个相似度得分重新加权,Circle loss通过灵活的优化和确定的收敛目标而受益于深度特征学习。

•我们提出了Circle损失,它与类级别标签和成对标签都兼容。 圆度损失经过轻微的修改,可退化为三重态损失或Softmax损失。

•我们对各种深度特征学习任务进行了广泛的实验,例如 人脸识别,人员重新识别,汽车图像检索等。 在所有这些任务上,我们展示了Circle损失与性能相媲美的优越性。

2. A Unified Perspective

Deep feature learning aims to maximize the within-class similarity sp, as well as to minimize the between-class sim- ilarity sn. Under the cosine similarity metric, for example, weexpectsp →1andsn →0.

To this end, learning with class-level labels and learn- ing with pair-wise labels are two paradigms of approaches and are usually considered separately. Given class-level labels, the first one basically learns to classify each train- ing sample to its target class with a classification loss, e.g. L2-Softmax [21], Large-margin Softmax [15], Angu- lar Softmax [16], NormFace [30], AM-Softmax [29], Cos- Face [32], ArcFace [2]. In contrast, given pair-wise la- bels, the second one directly learns pair-wise similarity in the feature space in an explicit manner, e.g., constrastive loss [5, 1], triplet loss [9, 22], Lifted-Structure loss [19], N-pair loss [24], Histogram loss [27], Angular loss [33], Margin based loss [38], Multi-Similarity loss [34] and so on.

深度特征学习旨在最大化类内相似度sp,以及最小化类间相似度sn。 在余弦相似度度量下,例如,weexpectsp→1andsn→0。

为此,使用类级别的标签学习和使用逐对标签的学习是方法的两个范例,通常被单独考虑。 给定班级级别的标签,第一个基本上学会通过分类损失(例如, L2-Softmax [21],大边距Softmax [15],Angular角Softmax [16],NormFace [30],AM-Softmax [29],CosFace [32],ArcFace [2]。 相反,给定成对标签,第二个以显式方式直接学习特征空间中的成对相似性,例如,对比损失[5,1],三重态损失[9,22],提升结构 损失[19],N对损失[24],直方图损失[27],角度损失[33],边际损失[38],多相似损失[34]等。

Figure 2: The gradients of the loss functions. (a) Triplet loss. (b) AMSoftmax loss. © The proposed Circle loss. Both triplet loss and AMSoftmax loss present lack of flexibility for optimization. The gradients with respect to sp (left) and sn (right) are restricted to equal and undergo a sudden decrease upon convergence (the similarity pair B). For example, at A, the within-class similarity score sp already approaches 1, and still incurs large gradient. Moreover, the decision boundaries are parallel to sp = sn, which allows ambiguous convergence. In contrast, the proposed Circle loss assigns different gradients to the similarity scores, depending on their distances to the optimum. For A (both sn and sp are large), Circle loss lays emphasis on optimizing sn. For B, since sn significantly decreases, Circle loss reduces its gradient and thus enforces mild penalty. Circle loss has a circular decision boundary, and promotes accurate convergence status.

图2:损失函数的梯度。 (a)三重态损失。 (b)AMSoftmax损失。 (c)建议的循环损失。 三重态损失和AMSoftmax损失都缺乏优化的灵活性。 相对于sp(左)和sn(右)的梯度被限制为相等,并且在收敛时(相似性对B)会突然减小。 例如,在A处,类内相似性得分sp已经接近1,并且仍会产生较大的梯度。 此外,决策边界与sp = sn平行,从而允许模棱两可的收敛。 相比之下,拟议的Circle损失会根据相似度得分与最佳评分之间的距离将不同的梯度分配给相似度得分。 对于A(sn和sp都很大),Circle loss将重点放在优化sn上。 对于B,由于sn显着降低,因此Circle损失会减小其梯度,因此会施加轻微的惩罚。 圆损失具有圆形决策边界,并可以提高准确的收敛状态。

generates to AM-Softmax [29, 32], an important variant of Softmax loss:

生成AM-Softmax [29,32],Softmax损失的重要变体:

Moreover, with m = 0, Eq. 2 further degenerates to Normface [30]. By replacing the cosine similarity with in- ner product and setting γ = 1, it finally degenerates to Soft- max loss (i.e., softmax plus cross-entropy loss).

此外,当m = 0时, 2进一步退化为Normface [30]。 通过用内积代替余弦相似度并将γ= 1设置,它最终退化为Softmax损失(即softmax加上交叉熵损失)。

Specifically, we note that in Eq. 3, the “ exp(·)” op- eration is utilized by Lifted-Structure loss [19], N-pair loss [24], Multi-Similarity loss [34] and etc., to conduct “soft” hard mining among samples. Enlarging γ gradually reinforces the mining intensity and when γ → +∞, it re- sults in the canonical hard mining in [22, 8].

具体来说,我们注意到在等式中。 如图3所示,“exp(·)”运算可用于提升结构损失[19],N对损失[24],多重相似损失[34]等,以进行“软”硬开采 在样本中。 增大γ会逐渐增强开采强度,当γ→+∞时,会导致[22,8]中的规范硬开采。

Gradient analysis. Eq. 2 and Eq. 3 show triplet loss, Softmax loss and its several variants can be interpreted as specific cases of Eq. 1. In another word, they all optimize (sn − sp). Under the toy scenario where there are only a single sp and sn, we visualize the gradients of triplet loss and AMSoftmax loss in Fig. 2 (a) and (b), from which we draw the following observations:

• First, before the loss reaches its decision boundary (upon which the gradients vanish), the gradients with respect to both sp and sn are the same to each other. The status A has {sn , sp } = {0.8, 0.8}, indicating good within-class compactness. However, A still re- ceives large gradient with respect to sp. It leads to lack of flexibility during optimization.

• Second, the gradients stay (roughly) constant before convergence and undergo a sudden decrease upon con- vergence. The status B lies closer to the decision boundary and is better optimized, compared with A. However, the loss functions (both triplet loss and AM- Softmax loss) enforce approximately equal penalty on A and B. It is another evidence of inflexibility.

梯度分析。等式2和等式图3显示了三重态损失,Softmax损失及其几个变体可以解释为等式的特定情况。换句话说,它们都优化(sn-sp)。在只有一个sp和sn的玩具场景下,我们可视化图2(a)和(b)中的三重态损失和AMSoftmax损失的梯度,从中得出以下观察结果:

•首先,在损失到达其决策边界(梯度消失时)之前,相对于sp和sn的梯度彼此相同。状态A的{sn,sp} = {0.8,0.8},表示良好的类内紧凑性。但是,A相对于sp仍会收到较大的梯度。这导致优化期间缺乏灵活性。

•其次,梯度在收敛之前保持(大致)恒定,并且在收敛时突然减小。与A相比,状态B更接近决策边界,并且优化程度更好。但是,损失函数(三元组损失和AM-Softmax损失)对A和B施加的惩罚几乎相等。这是不灵活的另一证据。

These problems originate from the optimization manner of minimizing (sn − sp ), in which reducing sn is equivalent to increasing sp. In the following Section 3, we will trans- fer such an optimization manner into a more general one to facilitate higher flexibility.

这些问题源于最小化(sn-sp)的优化方式,其中减小sn等于增大sp。 在下面的第3节中,我们将把这种优化方式转换为更通用的方式,以提高灵活性。

3. A New Loss Function

3.1. Self-paced Weighting

We consider to enhance the optimization flexibility by allowing each similarity score to learn at its own pace, de- pending on its current optimization status. We first neglect the margin item m in Eq. 1 and transfer the unified loss function (Eq. 1) into the proposed Circle loss by:

我们考虑通过允许每个相似性得分根据其当前优化状态按照自己的步调学习,从而提高优化灵活性。 我们首先忽略等式中的保证金项目m。 1并将统一损失函数(等式1)转移到建议的圆损失中,方法是:

Re-scaling the cosine similarity under supervision is a

common practice in modern classification losses [21, 30, 29, 32, 39, 40]. Conventionally, all the similarity score share an equal scale factor γ. The non-normalized weighting op- eration in Circle loss can be also interpreted as a specific scaling operation. Different from the other loss functions, Circle loss re-weights (re-scales) each similarity score in- dependently and thus allows different learning paces. We empirically show that Circle loss is robust to various γ set- tings in Section 4.5.

Discussions. We notice another difference beyond the scaling strategy. The output of softmax function in a classi- fication loss is conventionally interpreted as the probability of a sample belonging to a certain class. Since the probabil- ities are based on comparing each similarity score against all the similarity scores, equal re-scaling is prerequisite for fair comparison. Circle loss abandons such an probability- related interpretation and holds a similarity pair optimiza- tion perspective, instead. Correspondingly, it gets rid of the constraint of equal re-scaling and allows more flexible opti- mization.

在监督下重新缩放余弦相似度是

现代分类损失的常见做法[21,30,29,32,39,40]。传统上,所有相似度分数共享相等的比例因子γ。 Circle loss中的非归一化加权运算也可以解释为特定的缩放操作。与其他损失函数不同,Circle损失独立地对每个相似度评分进行重新加权(重新定标),因此允许不同的学习进度。我们根据经验证明,在第4.5节中,圆损失对各种γ设置都具有鲁棒性。

讨论。我们注意到缩放策略之外的另一个区别。通常将分类损失中softmax函数的输出解释为样本属于某个类别的概率。由于概率是基于将每个相似性得分与所有相似性得分进行比较而得出的,所以相等的重新定标是进行公平比较的前提。圆损失放弃了这种与概率有关的解释,取而代之的是拥有相似对对的观点。相应地,它摆脱了均等缩放的约束,并允许更灵活的优化。

3.2. Within-class and Between-class Margins

In loss functions optimizing (sn − sp), adding a margin m reinforces the optimization [15, 16, 29, 32]. Since sn and −sp are in symmetric positions, a positive margin on sn is equivalent to a negative margin on sp. It thus only requires a single margin m. In Circle loss, sn and sp are in asymmetric position. Naturally, it requires respective margins for sn and sp, which is formulated by:

在损失函数优化(sn-sp)中,增加余量m可以加强优化[15、16、29、32]。 由于sn和-sp处于对称位置,因此sn上的正余量等于sp上的负余量。 因此,仅需要单个余量m。 在“圆损耗”中,sn和sp处于不对称位置。 自然,它需要为sn和sp分别设置边距,其公式如下:

With the decision boundary defined in Eq. 8, we have another intuitive interpretation of Circle loss. It aims to op- timize sp → 1 and sn → 0. The parameter m controls the radius of the decision boundary and can be viewed as a relaxation factor. In another word, Circle loss expects

在等式中定义决策边界。 8,我们对圆损有另一种直观的解释。 它旨在优化sp→1和sn→0。参数m控制决策边界的半径,可以将其视为松弛因子。 换句话说,Circle loss期望

Hence there are only two hyper-parameters, i.e., the scale factor γ and the relaxation margin m. We will experimen- tally analyze the impacts of m and γ in Section 4.5.

因此,只有两个超参数,即比例因子γ和弛豫裕度m。 我们将在第4.5节中对m和γ的影响进行实验分析。

3.3. The Advantages of Circle Loss

The gradients of Circle loss with respect to sjn and sip are derived as follows:

圆损耗相对于sjn和sip的梯度推导如下:

Under the toy scenario of binary classification (or only

a single sn and sp), we visualize the gradients under dif- ferent settings of m in Fig. 2 ©, from which we draw the following three observations:

• Balanced optimization on sn and sp. We recall that the loss functions minimizing (sn − sp ) always have equal gra- dients on sp and sn and is inflexible. In contrast, Circle loss presents dynamic penalty strength. Among a specified sim- ilarity pair {sn, sp}, if sp is better optimized in comparison to sn (e.g., A = {0.8, 0.8} in Fig. 2 ©), Circle loss assigns larger gradient to sn (and vice versa), so as to decrease sn with higher superiority. The experimental evidence of bal- anced optimization is to be accessed in Section 4.6.

• Gradually-attenuated gradients. At the start of train- ing, the similarity scores deviate far from the optimum and gains large gradient (e.g., “A” in Fig. 2 ©). As the train- ing gradually approaches the convergence, the gradients on the similarity scores correspondingly decays (e.g., “B” in Fig. 2 ©), elaborating mild optimization. Experimental re- sult in Section 4.5 shows that the learning effect is robust to various settings of γ (in Eq. 6), which we attribute to the automatically-attenuated gradients.

在二元分类的玩具场景下(或仅

单个sn和sp),我们在图2(c)的m的不同设置下可视化了梯度,从中我们得出以下三个观察结果:

•对sn和sp的均衡优化。我们记得损失函数最小化(sn-sp)在sp和sn上总是具有相等的梯度,并且是不灵活的。相反,圆损失表现出动态的惩罚强度。在指定的相似对{sn,sp}中,如果与sn相比,sp的优化效果更好(例如,图2(c)中的A = {0.8,0.8}),则圆损耗为sn分配了较大的梯度(并且反之亦然),以便以更高的优势减少sn。平衡优化的实验证据将在第4.6节中提供。

•逐渐衰减的渐变。在训练开始时,相似度得分偏离最佳值,并获得了较大的梯度(例如,图2(c)中的“ A”)。随着训练逐渐趋于收敛,相似度分数上的梯度相应地衰减(例如,图2(c)中的“ B”),从而进行了适度的优化。 4.5节中的实验结果表明,学习效果对于γ的各种设置(在等式6中)是鲁棒的,我们将其归因于自动衰减的梯度。

• A (more) definite convergence target. Circle loss has a circular decision boundary and favors T rather than T′ (Fig. 1) for convergence. It is because T has the smallest gap between sp and sn, compared with all the other points on the decision boundary. In another word, T ′ has a larger gap between sp and sn and is inherently more difficult to maintain. In contrast, losses that minimize (sn − sp) have a homogeneous decision boundary, that is, every point on the decision boundary is of the same difficulty to reach. Ex- perimentally, we observe that Circle loss leads to a more concentrate similarity distribution after convergence, as to be detailed in Section 4.6 and Fig. 5.

•(更多)明确的收敛目标。 圆损失具有圆形决策边界,并且倾向于T而不是T’(图1)进行收敛。 这是因为与决策边界上的所有其他点相比,T在sp和sn之间的间隙最小。 换句话说,T′在sp和sn之间具有较大的间隙,并且固有地更难以维护。 相反,最小化(sn-sp)的损失具有均匀的决策边界,也就是说,决策边界上的每个点都具有相同的难度。 实验上,我们观察到圆损失会导致收敛后更集中的相似度分布,详见第4.6节和图5。

4. Experiment

We comprehensively evaluate the effectiveness of Circle loss under two elemental learning approaches, i.e., learn- ing with class-level labels and learning with pair-wise la- bels. For the former approach, we evaluate our method on face recognition (Section 4.2) and person re-identification (Section 4.3) tasks. For the latter approach, we use the fine-grained image retrieval datasets (Section 4.4), which are relatively small and encourage learning with pair-wise labels. We show that Circle loss is competent under both settings. Section 4.5 analyzes the impact of the two hyper- parameters, i.e., the scale factor γ in Eq. 6 and the relaxation factor m in Eq. 8. We show that Circle loss is robust under reasonable settings. Finally, Section 4.6 experimentally confirms the characteristics of Circle loss.

我们通过两种基本的学习方法(即,使用班级标签学习和使用成对标签学习)来全面评估Circle损失的有效性。 对于前一种方法,我们评估我们在面部识别(第4.2节)和人员重新识别(第4.3节)任务上的方法。 对于后一种方法,我们使用细粒度的图像检索数据集(第4.4节),该数据集相对较小,并鼓励使用成对标签学习。 我们表明,在两种情况下,圆环损失都可以胜任。 第4.5节分析了两个超参数(即等式1中的比例因子γ)的影响。 6和等式中的松弛因子m。 8.我们证明,在合理的设置下,圆环损耗是可靠的。 最后,第4.6节通过实验证实了圆环损耗的特征。

4.1. Settings

Face recognition. We use the popular dataset MS- Celeb-1M [4] for training. The native MS-Celeb-1M data is noisy and has a long-tailed data distribution. We clean the dirty samples and exclude the tail identities (≤ 3 im- ages per identity). It results in 3.6M images and 79.9K identities. For evaluation, we adopt MegaFace Challenge 1 (MF1) [12], IJB-C [17], LFW [10], YTF [37] and CFP- FP [23] datasets and the official evaluation protocols. We also polish the probe set and 1M distractors on MF1 for more reliable evaluation, following [2]. For data pre- processing, we resize the aligned face images to 112 × 112 and linearly normalize the pixel values of RGB images to [−1, 1] [36, 15, 32]. We only augment the training samples by random horizontal flip. We choose the popular residual networks [6] as our backbones. All the models are trained with 182k iterations. The learning rate is started with 0.1 and reduced by 10× at 50%, 70% and 90% of total iter- ations respectively. The default hyper-parameters of our method are γ = 256 and m = 0.25 if not specified. For all the model inference, we extract the 512-D feature em- beddings and use cosine distance as metric.

人脸识别。我们使用流行的数据集MS-Celeb-1M [4]进行训练。 MS-Celeb-1M原始数据比较嘈杂,并且数据分布很长。我们清洗脏样品并排除尾巴身份(每个身份≤3个图像)。结果为360万张图像和79.9K个身份。为了进行评估,我们采用了MegaFace Challenge 1(MF1)[12],IJB-C [17],LFW [10],YTF [37]和CFP-FP [23]数据集以及官方评估协议。我们还会根据[2]对MF1上的探针组和1M干扰器进行抛光,以进行更可靠的评估。对于数据预处理,我们将对齐的面部图像调整为112×112的大小,并将RGB图像的像素值线性标准化为[-1,1] [36,15,32]。我们仅通过随机水平翻转来增加训练样本。我们选择流行的残差网络[6]作为我们的骨干网。所有模型都经过182k次迭代训练。学习率从0.1开始,并分别以总迭代的50%,70%和90%降低10倍。如果未指定,我们方法的默认超参数为γ= 256和m = 0.25。对于所有模型推断,我们提取512-D特征嵌入并使用余弦距离作为度量。

Person re-identification. Person re-identification (re- ID) aims to spot the appearance of a same person in dif- ferent observations. We evaluate our method on two pop- ular datasets, i.e., Market-1501 [41] and MSMT17 [35]. Market-1501 contains 1,501 identities, 12,936 training im- ages and 19,732 gallery images captured with 6 cameras. MSMT17 contains 4,101 identities, 126,411 images cap- tured with 15 cameras and presents long-tailed sample dis- tribution. We adopt two network structures, i.e. a global feature learning model backboned on ResNet50 and a part- feature model named MGN [31]. We use MGN with consid- eration of its competitive performance and relatively con- cise structure. The original MGN uses a Sofmax loss on each part feature branch for training. Our implementation concatenates all the part features into a single feature vec- tor for simplicity. For Circle loss, we set γ = 256 and m = 0.25.

人员重新识别。 人物重新识别(re-ID)旨在在不同的观察结果中发现同一个人的外表。 我们在两个受欢迎的数据集(即Market-1501 [41]和MSMT17 [35])上评估了我们的方法。 Market-1501包含1,501个身份,12,936个训练图像和用6个摄像机捕获的19,732个画廊图像。 MSMT17包含4,101个身份,用15个摄像机捕获的126,411张图像,并显示了长尾样本分布。 我们采用两种网络结构,即基于ResNet50的全局特征学习模型和名为MGN的部分特征模型[31]。 我们使用MGN时要考虑其竞争性能和相对简洁的结构。 原始MGN在每个零件特征分支上使用Sofmax损失进行训练。 为了简化起见,我们的实现将所有零件特征连接到一个特征向量中。 对于圆损失,我们将γ设置为256,将m设置为0.25。

Fine-grained image retrieval. We use three datasets for evaluation on fine-grained image retrieval, i.e. CUB- 200-2011 [28], Cars196 [14] and Stanford Online Prod- ucts [19]. CARS-196 contains 16, 183 images which be- longs to 196 class of cars. The first 98 classes are used for training and the last 98 classes are used for testing. CUB- 200-2010 has 200 different class of birds. We use the first 100 class with 5, 864 images for training and the last 100 class with 5, 924 images for testing. SOP is a large dataset consists of 120, 053 images belonging to 22, 634 classes of online products. The training set contains 11, 318 class includes 59,551 images and the rest 11,316 class includes 60, 499 images are for testing. The experimental setup fol- lows [19]. We use BN-Inception [11] as the backbone to learn 512-D embeddings. We adopt P-K sampling trat- egy [8] to construct mini-batch with P = 16 and K = 5. For Circle loss, we set γ = 80 and m = 0.4.

Table 1: Identification rank-1 accuracy (%) on MFC1 dataset with different backbones and loss functions.

细粒度的图像检索。我们使用三个数据集评估细粒度的图像,即CUB-200-2011 [28],Cars196 [14]和斯坦福在线产品[19]。 CARS-196包含16张183张图像,属于196类汽车。前98个班级用于培训,后98个班级用于测试。 CUB- 200-2010有200种不同的鸟类。我们使用前100个班级提供5张864张图像进行训练,最后100个班级使用5张924张图像进行测试。 SOP是一个大型数据集,包含120、053个图像,这些图像属于22、634类在线产品。训练集包含11个,318个类,包括59,551张图像,其余11,316个类,包括60个,499张图像供测试。实验设置如下[19]。我们使用BN-Inception [11]作为骨干学习512-D嵌入。我们采用P-K采样策略[8]来构建P = 16和K = 5的小批量。对于圆损失,我们将γ= 80设置为m = 0.4。

表1:具有不同主干和损失函数的MFC1数据集的识别等级1准确性(%)。

Table 2: Face verification accuracy (%) on LFW, YTF and CFP-FP with ResNet34 backbone.

表2:使用ResNet34主干的LFW,YTF和CFP-FP上的人脸验证准确性(%)。

Table 3: Comparison of true accept rates (%) on the IJB-C 1:1 verification task.

表3:IJB-C 1:1验证任务的真实接受率(%)的比较。

Table 4: Evaluation of Circle loss on re-ID task. We report R-1 accuracy (%) and mAP (%).

表4:重新ID任务的圆环损失评估。 我们报告了R-1准确性(%)和mAP(%)。

4.2. Face Recognition

For face recognition task, we compare Circle loss against several popular classification loss functions, i.e., vanilla Softmax, NormFace [30], AM-Softmax [29] (or CosFace [32]), ArcFace [2]. Following the original pa- pers [29, 2], we set γ = 64, m = 0.35 for AM-Softmax and γ = 64, m = 0.5 for ArcFace.

We report the rank-1 accuracy on MegaFace Challenge 1 dataset (MFC1) in Table 1. On all the three backbones, Circle loss marginally outperforms the counterparts. For example, with ResNet34 as the backbone, Circle loss sur- passes the most competitive one (ArcFace) by +0.13%. With ResNet100 as the backbone, while ArcFace achieves a high rank-1 accuracy of 98.36%, Circle loss still outper- forms it by +0.14%.

Table 2 summarizes face verification results on LFW [10], YTF [37] and CFP-FP [23]. We note that perfor- mance on these datasets is already near saturation. Specif- ically, ArcFace is higher than AM-Softmax by +0.05%, +0.03%, +0.07% on three datasets, respectively. Circle loss remains the best one, surpassing ArcFace by +0.05%, +0.06% and +0.18%, respectively.

We further compare Circle loss with AM-Softmax on IJB-C 1:1 verification task in Table 3. Our implementa- tion of Arcface is unstable on this dataset and achieves abnormally low performance, so we did not compare Cir- cle loss against Arcface. With ResNet34 as the backbone, Circle loss significantly surpasses AM-Softmax by +1.30% and +4.92% on “TAR@FAR=1e-4” and “TAR@FAR=1e- 5”, respectively. With ResNet100 as the backbone, Circle loss still maintains considerable superiority.

对于人脸识别任务,我们将Circle损失与几种流行的分类损失函数(即香草Softmax,NormFace [30],AM-Softmax [29](或CosFace [32]),ArcFace [2])进行比较。按照原始文件[29,2],我们将γ= 64,对于AM-Softmax设置为m = 0.35,对于γ= 64,对于ArcFace设置为m = 0.5。

我们在表1中报告了MegaFace Challenge 1数据集(MFC1)的1级准确度。在所有三个主干上,Circle损失略胜于同行。例如,以ResNet34为骨干,Circle损失比最有竞争力的损失(ArcFace)高0.13%。以ResNet100为骨干,虽然ArcFace达到了98.36%的1级高准确度,但圆环损耗仍然比其高0.14%。

表2总结了LFW [10],YTF [37]和CFP-FP [23]的面部验证结果。我们注意到,这些数据集的性能已经接近饱和。具体来说,在三个数据集上,ArcFace比AM-Softmax高出+0.05%,+ 0.03%和+ 0.07%。圆环损耗仍然是最好的圆环,分别超过ArcFace +0.05%,+ 0.06%和+ 0.18%。

我们在表3中进一步将Circle loss与AM-Softmax在IJB-C 1:1验证任务上进行了比较。我们在此数据集上实现Arcface不稳定,并且性能异常低下,因此我们没有将Arc损失与Arcface进行比较。以ResNet34为骨干,在“ TAR @ FAR = 1e-4”和“ TAR @ FAR = 1e-5”上,圆环损耗分别大大超过AM-Softmax + 1.30%和+ 4.92%。以ResNet100为骨干,Circle Loss仍然保持相当的优势。

4.3. Person Re-identification

We evaluate Circle loss on re-ID task in Table 4. MGN [31] is one of the state-of-the-art method and is featured for learning multi-granularity part-level features. Originally, it uses both Softmax loss and triplet loss to fa- cilitate a joint optimization. Our implementation of “MGN (ResNet50) + AMSoftmax” and “MGN (ResNet50)+ Circle loss” only use a single loss function for simplicity.

We make three observations from Table 4. First, com- paring Circle loss against state of the art, we find that Cir- cle loss achieves competitive re-ID accuracy, with a con- cise setup (no more auxiliary loss functions). We note that “JDGL” is slightly higher than “MGN + Circle loss” on MSMT17 [35]. JDGL [42] uses generative model to aug- ment the training data, and significantly improves re-ID over long-tailed dataset. Second, comparing “Circle loss” with “AMSoftmax”, we observe the superiority of Circle loss, which is consistent with the experimental results on face recognition task. Third, comparing “ResNet50 + Circle loss” against “MGN + Circle loss”, we find that part-level features bring incremental improvement to Circle loss. It implies that Circle loss is compatible to the part-model spe- cially designed for re-ID.

我们在表4中评估了关于re-ID任务的Circle损失。MGN [31]是最先进的方法之一,用于学习多粒度零件级特征。最初,它同时使用Softmax损失和Triplet损失来促进联合优化。为了简化起见,我们对“ MGN(ResNet50)+ AMSoftmax”和“ MGN(ResNet50)+圆损”的实现仅使用单个损失函数。

我们从表4中得出三个观察结果。首先,将Circle损失与现有技术进行比较,我们发现,通过简单的设置(没有更多的辅助损失功能),圆形损失达到了具有竞争力的re-ID准确性。我们注意到,在MSMT17上,“ JDGL”略高于“ MGN +圆环损耗” [35]。 JDGL [42]使用生成模型来增强训练数据,并显着改善了长尾数据集的re-ID。其次,将“圆损”与“ AMSoftmax”进行比较,观察到圆损的优越性,与人脸识别任务的实验结果相吻合。第三,将“ ResNet50 +环损”与“ MGN +环损”进行比较,我们发现部件级功能为环损带来了增量改进。这表明Circle损失与专门为re-ID设计的零件模型兼容。

Table 5: Comparison with state of the art on CUB-200-2011, Cars196 and Stanford Online Products. R@K(%) is reported.

表5:与CUB-200-2011,Cars196和斯坦福在线产品上的最新技术比较。 报告了R @ K(%)。

Figure 3: Impact of two hyper-parameters. In (a), Circle loss presents high robustness on various settings of scale factor γ. In (b), Circle loss surpasses the best performance of both AMSoftmax and ArcFace within a large range of relaxation factor m.

图3:两个超参数的影响。 在(a)中,圆损在比例因子γ的各种设置下表现出很高的鲁棒性。 在(b)中,在大的松弛因子m范围内,圆环损耗超过了AMSoftmax和ArcFace的最佳性能。

Figure 4: The change of sp and sn values during training. We linearly lengthen the curves within the first 2k iterations to highlight the initial training process (in the green zone). During the early training stage, Circle loss rapidly increases sp, because sp deviates far from the optimum at the initial- ization and thus attracts higher optimization priority.

图4:训练期间sp和sn值的变化。 我们在前2k次迭代中线性延长曲线,以突出显示初始训练过程(在绿色区域中)。 在训练的早期阶段,Circle损失会迅速增加sp,因为sp在初始化时偏离了最优值,因此吸引了更高的优化优先级。

4.4. Fine-grained Image Retrieval

We evaluate the compatibility of Circle loss to pair-wise labeled data on three fine-grained image retrieval datasets, i.e., CUB-200-2011, Cars196, and Standford Online Prod- ucts. On these datasets, majority methods [19, 18, 3, 20, 13, 34] adopt the encouraged setting of learning with pair- wise labels. We compare Circle loss against these state- of-the-art methods in Table 5. We observe that Circle loss achieves competitive performance, on all of the three datasets. Among the competing methods, LiftedStruct [19] and Multi-Simi [34] are specially designed with elaborate hard mining strategies for learning with pair-wise labels. HDC [18], ABIER [20] and ABE [13] benefit from model ensemble. In contrast, the proposed Circle loss achieves performance on par with the state of the art, without any bells and whistles.

我们在三个细粒度的图像检索数据集(即CUB-200-2011,Cars196和Standford Online产品)上评估了Circle loss与成对标记数据的兼容性。 在这些数据集上,多数方法[19、18、3、20、13、34]采用成对标签鼓励学习。 我们在表5中将Circle损失与这些最新方法进行了比较。我们观察到,在所有三个数据集上,Circle损失均达到了竞争表现。 在竞争的方法中,LiftedStruct [19]和Multi-Simi [34]是经过精心设计的,具有精心设计的硬挖掘策略,用于按对标记学习。 HDC [18],ABIER [20]和ABE [13]受益于模型集成。 相比之下,建议的Circle损失可以达到与现有技术相当的性能,而没有任何风吹草动。

4.5. Impact of the Hyper-parameters

Figure 5: Visualization of the similarity distribution after convergence. The blue dots mark the similarity pairs crossing the decision boundary during the whole training process. The green dots mark the similarity pairs after convergence. (a) AMSoftmax seeks to minimize (sn − sp). During training, the similarity pairs cross the decision boundary through a wide passage. After convergence, the similarity pairs scatter in a relatively large region in the (sn , sp ) space. In (b) and ©, Circle loss has a circular decision boundary. The similarity pairs cross the decision boundary through a narrow passage and gather into a relatively concentrated region.

图5:收敛后的相似度分布的可视化。 蓝色点标记整个训练过程中跨越决策边界的相似度对。 绿点在收敛后标记相似对。 (a)AMSoftmax寻求最小化(sn-sp)。 在训练过程中,相似度对通过决策通道跨越决策边界。 收敛之后,相似度对在(sn,sp)空间中的相对较大区域中分散。 在(b)和(c)中,圆损失具有圆形决策边界。 相似对通过狭窄的通道越过决策边界,并聚集到相对集中的区域。

We analyze the impact of two hyper-parameters, i.e., the scale factor γ in Eq. 6 and the relaxation factor m in Eq. 8 on face recognition tasks.

The scale factor γ determines the largest scale of each similarity score. The concept of scale factor is critical in a lot of variants of Softmax loss. We experimentally evaluate its impact on Circle loss and make a comparison with sev- eral other loss functions involving scale factors. We vary γ from 32 to 1024 for both AMSoftmax and Circle loss. For ArcFace, we only set γ to 32, 64 and 128, as it becomes un- stable with larger γ in our implementation. The results are visualized in Fig. 3. Compared with AM-Softmax and Ar- cFace, Circle loss exhibits high robustness on γ. The main reason for the robustness of Circle loss on γ is the auto- matic attenuation of gradients. As the training progresses, the similarity scores approach toward the optimum. Con- sequentially, the weighting scales along with the gradients automatically decay, maintaining a mild optimization.

The relaxation factor m determines the radius of the circular decision boundary. We vary m from −0.2 to 0.3 (with 0.05 as the interval) and visualize the results in Fig. 3 (b). It is observed that under all the settings from −0.1 to 0.25, Circle loss surpasses the best performance of Arcface, as well as AMSoftmax, presenting considerable degree of robustness.

我们分析了两个超参数(即等式中的比例因子γ)的影响。 6和等式中的松弛因子m。 8关于面部识别任务。

比例因子γ确定每个相似性评分的最大比例。比例因子的概念在Softmax损耗的许多变体中至关重要。我们通过实验评估其对Circle损失的影响,并与其他涉及比例因子的其他损失函数进行比较。对于AMSoftmax和Circle损失,我们将γ从32变为1024。对于ArcFace,我们仅将γ设置为32、64和128,因为在我们的实现中,随着γ的增大它变得不稳定。结果显示在图3中。与AM-Softmax和ArcFace相比,Circle loss对γ表现出很高的鲁棒性。圆损失对γ的鲁棒性的主要原因是梯度的自动衰减。随着训练的进行,相似性分数趋于最佳。因此,权重比例和梯度会自动衰减,从而保持适度的优化。

弛豫因子m确定圆形决策边界的半径。我们将m从-0.2更改为0.3(以0.05为间隔),并将结果可视化在图3(b)中。可以看出,在从-0.1到0.25的所有设置下,圆环损耗都超过了Arcface和AMSoftmax的最佳性能,表现出相当高的鲁棒性。

4.6. Investigation of the Characteristics

Analysis of the optimization process. To intuitively understand the learning process, we show the change of sn and sp during the whole training process in Fig. 4, from which we draw two observations:

First, at the initialization, all the sn and sp scores are small. It is because in the high dimensional feature space, randomized features are prone to be far away from each other [40, 7]. Correspondingly, sp get significantly larger weights (compared with sn), and the optimization on sp dominates the training, incurring a fast increase in similar- ity values in Fig. 4. This phenomenon evidences that Circle loss maintains a flexible and balanced optimization.

Second, at the end of training, Circle loss achieves both better within-class compactness and between-class discrep- ancy (on the training set), compared with AMSoftmax. Considering the fact that Circle loss achieves higher perfor- mance on the testing set, we believe that it indicates better optimization.

优化过程分析。 为了直观地了解学习过程,我们在图4中显示了整个训练过程中sn和sp的变化,从中我们得出两个观察结果:

首先,在初始化时,所有的sn和sp得分都很小。 这是因为在高维特征空间中,随机特征倾向于彼此远离[40,7]。 相应地,sp的权重显着增大(与sn相比),sp的优化支配了训练,从而导致图4中的相似性值快速增加。这种现象表明Circle loss保持了灵活而均衡的优化。

其次,在训练结束时,与AMSoftmax相比,Circle损失可以同时实现更好的班级内部紧实度和班级间差异(在训练集上)。 考虑到Circle loss在测试集上可获得更高的性能,我们认为这表明优化效果更好。

Analysis of the convergence. We analyze the conver- gence status of Circle loss in Fig. 5. We investigate two issues: how do the similarity pairs consisted of sn and sp cross the decision boundary during training and how do the similarity pairs distribute in the (sn , sp ) space after con- vergence. The results are shown in Fig. 5. In Fig. 5 (a), AMSoftmax loss adopts the optimal setting of m = 0.35. In Fig. 5 (b), Circle loss adopts a compromised setting of m = 0.325. The decision boundaries of (a) and (b) are tangent to each other, allowing an intuitive comparison. In Fig. 5 ©, Circle loss adopts its optimal setting of m = 0.25. Comparing Fig. 5 (b) and © against Fig. 5 (a), we find that Circle loss presents a relatively narrower passage on the de- cision boundary, as well as a more concentrated distribution for convergence (especially when m = 0.25). It indicates that Circle loss facilitates more consistent convergence for all the similarity pairs, compared with AMSoftmax loss. This phenomenon confirms that Circle loss has a more defi- nite convergence target, which promotes better separability in the feature space.

收敛性分析。我们在图5中分析了Circle损失的收敛状态。我们研究了两个问题:在训练过程中,由sn和sp组成的相似性对如何越过决策边界,以及相似性对如何在(sn,sp)中分布收敛后的空间。结果如图5所示。在图5(a)中,AMSoftmax损失采用m = 0.35的最佳设置。在图5(b)中,圆损耗采用m = 0.325的折衷设置。 (a)和(b)的决策边界相互切线,从而可以进行直观的比较。在图5(c)中,圆损采用其m = 0.25的最佳设置。将图5(b)和(c)与图5(a)进行比较,我们发现圆损失在决策边界上呈现出相对较窄的通道,并且在收敛时呈现出更加集中的分布(特别是当m = 0.25)。这表明,与AMSoftmax损失相比,Circle损失有助于所有相似对的更一致的收敛。这种现象证实了圆损失具有更明确的收敛目标,从而促进了特征空间中更好的可分离性。

5. Conclusion

This paper provides two insights into the optimization process for deep feature learning. First, a majority of loss functions, including the triplet loss and popular clas- sification losses, conduct optimization by embedding the between-class and within-class similarity into similarity pairs. Second, within a similarity pair under supervision, each similarity score favors different penalty strength, de- pending on its distance to the optimum. These insights result in Circle loss, which allows the similarity scores to learn at different paces. The Circle loss benefits deep fea- ture learning with high flexibility in optimization and a more definite convergence target. It has a unified formula for two elemental learning approaches, i.e., learning with class-level labels and learning with pair-wise labels. On a variety of deep feature learning tasks, e.g., face recog- nition, person re-identification, and fine-grained image re- trieval, the Circle loss achieves performance on par with the state of the art.

本文为深度特征学习的优化过程提供了两种见解。首先,大多数损失函数(包括三元组损失和流行的分类损失)通过将类间和类内相似性嵌入相似对来进行优化。其次,在监督下的相似度对中,每个相似度得分偏向于不同的惩罚强度,这取决于其到最佳值的距离。这些见解会导致Circle损失,从而使相似度分数以不同的速度学习。 Circle损失使深度功能学习受益于优化的高度灵活性和更明确的收敛目标。它具有用于两种基本学习方法的统一公式,即使用类级标签进行学习和使用逐对标签进行学习。在各种深度特征学习任务上,例如人脸识别,人员重新识别和细粒度的图像检索,Circle损失可实现与现有技术相当的性能。

References

[1] S. Chopra, R. Hadsell, and Y. LeCun. Learning a similarity metric discriminatively, with application to face verification. 2005 IEEE Computer Society Conference on Computer Vi- sion and Pattern Recognition (CVPR’05), 1:539–546 vol. 1, 2005. 2

[2] J.Deng,J.Guo,N.Xue,andS.Zafeiriou.Arcface:Additive angular margin loss for deep face recognition. In Proceed- ings of the IEEE Conference on Computer Vision and Pattern Recognition, 2019. 1, 2, 5, 6

[3] W. Ge. Deep metric learning with hierarchical triplet loss. In The European Conference on Computer Vision (ECCV), September 2018. 7

[4] Y. Guo, L. Zhang, Y. Hu, X. He, and J. Gao. Ms-celeb-1m: A dataset and benchmark for large-scale face recognition. In European Conference on Computer Vision, 2016. 5

[5] R.Hadsell,S.Chopra,andY.LeCun.Dimensionalityreduc- tion by learning an invariant mapping. In IEEE Computer Society Conference on Computer Vision and Pattern Recog- nition (CVPR), volume 2, pages 1735–1742. IEEE, 2006. 2

[6] K. He, X. Zhang, S. Ren, and J. Sun. Deep residual learning for image recognition. In CVPR, 2016. 5

[7] L.He,Z.Wang,Y.Li,andS.Wang.Softmaxdissection:To- wards understanding intra- and inter-clas objective for em- bedding learning. CoRR, abs/1908.01281, 2019. 8

[8] A. Hermans, L. Beyer, and B. Leibe. In defense of the triplet loss for person re-identification. arXiv preprint arXiv:1703.07737, 2017. 3, 6

[9] E. Hoffer and N. Ailon. Deep metric learning using triplet network. In International Workshop on Similarity-Based Pattern Recognition, pages 84–92. Springer, 2015. 1, 2

[10] G. B. Huang, M. Ramesh, T. Berg, and E. Learned-Miller. Labeled faces in the wild: A database for studying face recognition in unconstrained environments. Technical Re- port 07-49, University of Massachusetts, Amherst, October 2007. 5, 6

[11] S. Ioffe and C. Szegedy. Batch normalization: Accelerating deep network training by reducing internal covariate shift. arXiv preprint arXiv:1502.03167, 2015. 6

[12] I. Kemelmacher-Shlizerman, S. M. Seitz, D. Miller, and E. Brossard. The megaface benchmark: 1 million faces for recognition at scale. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pages 4873– 4882, 2016. 5, 6

[13] W. Kim, B. Goyal, K. Chawla, J. Lee, and K. Kwon. Attention-based ensemble for deep metric learning. In The European Conference on Computer Vision (ECCV), Septem- ber 2018. 7

[14] J. Krause, M. Stark, J. Deng, and L. Fei-Fei. 3d object rep- resentations for fine-grained categorization. In Proceedings of the IEEE International Conference on Computer Vision Workshops, pages 554–561, 2013. 5, 7

[15] W. Liu, Y. Wen, Z. Yu, M. Li, B. Raj, and L. Song. Sphereface: Deep hypersphere embedding for face recog- nition. In Proceedings of the IEEE conference on computer vision and pattern recognition, pages 212–220, 2017. 2, 4, 5

[16] W. Liu, Y. Wen, Z. Yu, and M. Yang. Large-margin softmax loss for convolutional neural networks. In ICML, 2016. 1, 2, 4

[17] B. Maze, J. Adams, J. A. Duncan, N. Kalka, T. Miller, C. Otto, A. K. Jain, W. T. Niggel, J. Anderson, J. Cheney, et al. Iarpa janus benchmark-c: Face dataset and protocol. In 2018 International Conference on Biometrics (ICB), pages 158–165. IEEE, 2018. 5, 6

[18] H. Oh Song, S. Jegelka, V. Rathod, and K. Murphy. Deep metric learning via facility location. In The IEEE Conference on Computer Vision and Pattern Recognition (CVPR), July 2017. 7

[19] H. Oh Song, Y. Xiang, S. Jegelka, and S. Savarese. Deep metric learning via lifted structured feature embedding. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pages 4004–4012, 2016. 2, 3, 5, 6, 7

[20] M. Opitz, G. Waltner, H. Possegger, and H. Bischof. Deep metric learning with bier: Boosting independent embeddings robustly. IEEE Transactions on Pattern Analysis and Ma- chine Intelligence, pages 1–1, 2018. 7

[21] R. Ranjan, C. D. Castillo, and R. Chellappa. L2-constrained softmax loss for discriminative face verification. arXiv preprint arXiv:1703.09507, 2017. 2, 4

[22] F. Schroff, D. Kalenichenko, and J. Philbin. Facenet: A unified embedding for face recognition and clustering. In Proceedings of the IEEE conference on computer vision and pattern recognition, pages 815–823, 2015. 1, 2, 3

[23] S. Sengupta, J.-C. Chen, C. Castillo, V. M. Patel, R. Chel- lappa, and D. W. Jacobs. Frontal to profile face verification in the wild. In 2016 IEEE Winter Conference on Applica- tions of Computer Vision (WACV), pages 1–9. IEEE, 2016. 5, 6

[24] K. Sohn. Improved deep metric learning with multi-class n-pair loss objective. In NIPS, 2016. 2, 3

[25] Y. Sun, X. Wang, and X. Tang. Deep learning face repre- sentation from predicting 10,000 classes. In Proceedings of the IEEE conference on computer vision and pattern recog- nition, pages 1891–1898, 2014. 1

[26] Y. Sun, L. Zheng, Y. Yang, Q. Tian, and S. Wang. Beyond part models: Person retrieval with refined part pooling (and a strong convolutional baseline). In The European Conference on Computer Vision (ECCV), September 2018. 6

[27] E.UstinovaandV.S.Lempitsky.Learningdeepembeddings with histogram loss. In NIPS, 2016. 2

[28] C. Wah, S. Branson, P. Welinder, P. Perona, and S. Belongie. The Caltech-UCSD Birds-200-2011 Dataset. Technical Re- port CNS-TR-2011-001, California Institute of Technology, 2011. 5, 7

[29] F. Wang, J. Cheng, W. Liu, and H. Liu. Additive margin softmax for face verification. IEEE Signal Processing Let- ters, 25(7):926–930, 2018. 1, 2, 3, 4, 6

[30] F. Wang, X. Xiang, J. Cheng, and A. L. Yuille. Normface: L2 hypersphere embedding for face verification. In Proceed- ings of the 25th ACM international conference on Multime- dia, pages 1041–1049. ACM, 2017. 2, 3, 4, 6