Andrew Ng's Machine Learning Videos: Deriving the Backpropagation Formulas for Neural Networks

The Backpropagation Algorithm

Background

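When computing a neural network's prediction we use forward propagation: starting from the first layer, we compute layer by layer until we reach $h_\theta(x)$ at the last layer. Without regularization, the cost function for logistic regression is: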

$$J(\theta)=-\frac{1}{m}\left[\sum_{i=1}^{m}y^{(i)}\log h_\theta(x^{(i)})+(1-y^{(i)})\log\left(1-h_\theta(x^{(i)})\right)\right]$$
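As a quick illustration of the formula above, here is a minimal Python sketch of the unregularized cost. The function names and the toy data are hypothetical, chosen only for this example; each `x` is assumed to start with a bias feature of 1.

```python
import math

def sigmoid(z):
    """Logistic function g(z) = 1 / (1 + e^(-z))."""
    return 1.0 / (1.0 + math.exp(-z))

def logistic_cost(theta, xs, ys):
    """Unregularized cross-entropy cost J(theta), averaged over m examples."""
    m = len(xs)
    total = 0.0
    for x, y in zip(xs, ys):
        # h_theta(x) = g(theta^T x)
        h = sigmoid(sum(t * xi for t, xi in zip(theta, x)))
        total += y * math.log(h) + (1 - y) * math.log(1 - h)
    return -total / m

# Hypothetical toy data (not from the original post).
xs = [[1.0, 0.5], [1.0, -1.5], [1.0, 2.0]]
ys = [1, 0, 1]
cost_at_zero = logistic_cost([0.0, 0.0], xs, ys)
```

With all parameters set to zero every prediction is 0.5, so the cost reduces to $\log 2$ whatever the labels are, which makes a convenient sanity check.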

A neural network can have many output variables, so $h_\theta(x)$ is a vector of dimension $K$. The cost function then becomes:

$$J(\theta)=-\frac{1}{m}\left[\sum_{i=1}^{m}\sum_{k=1}^{K}y_k^{(i)}\log\left(h_\theta(x^{(i)})\right)_k+\left(1-y_k^{(i)}\right)\log\left(1-\left(h_\theta(x^{(i)})\right)_k\right)\right]$$
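The double sum can be sketched in a few lines of Python. This is only an illustration: `nn_cost`, `outputs`, and `labels` are hypothetical names, and the network outputs are supplied directly rather than computed by a network.

```python
import math

def nn_cost(outputs, labels):
    """Cross-entropy cost summed over K outputs and averaged over m examples.

    outputs[i][k] plays the role of (h_theta(x^(i)))_k,
    labels[i][k] plays the role of y_k^(i).
    """
    m = len(outputs)
    total = 0.0
    for h, y in zip(outputs, labels):
        for h_k, y_k in zip(h, y):
            total += y_k * math.log(h_k) + (1 - y_k) * math.log(1 - h_k)
    return -total / m

# Hypothetical outputs for m = 2 examples and K = 4 classes.
outputs = [[0.5, 0.5, 0.5, 0.5], [0.5, 0.5, 0.5, 0.5]]
labels = [[1, 0, 0, 0], [0, 0, 1, 0]]
cost = nn_cost(outputs, labels)
```

Because every output here is 0.5, each of the $K=4$ terms per example contributes $\log 2$, so the cost is $4\log 2$.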

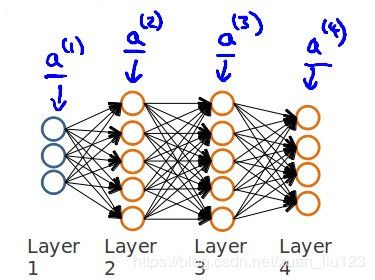

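To compute the partial derivatives of the cost function, $\frac{\partial J(\theta)}{\partial \theta_{ij}^{(l)}}$, we need the backpropagation algorithm: first compute the error of the last layer, then work backwards layer by layer to obtain the error of each earlier layer, stopping at the second layer (the first layer is the input and has no error). The example below illustrates the algorithm.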

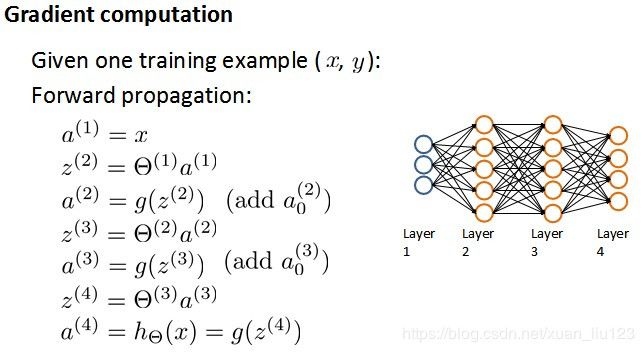

Suppose the training set contains a single example $(x^{(1)},y^{(1)})$ and the network has four layers, with $K=4$, $S_L=4$, $L=4$.

Forward propagation
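A minimal sketch of the forward pass for a four-layer network, assuming sigmoid activations and omitting bias units so that it matches the derivation that follows. The weights, layer sizes, and function names are hypothetical and serve only as an illustration.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def forward(thetas, x):
    """Return (zs, activations); activations[0] is a^(1) = x.

    For each weight matrix theta^(l): z^(l+1) = theta^(l) a^(l),
    a^(l+1) = g(z^(l+1)). Bias units are left out (an assumption).
    """
    activations, zs = [x], []
    for theta in thetas:
        z = [sum(w * a for w, a in zip(row, activations[-1])) for row in theta]
        zs.append(z)
        activations.append([sigmoid(v) for v in z])
    return zs, activations

# Hypothetical all-zero weights: 2 inputs -> 3 -> 3 -> 4 outputs (L = 4, K = 4).
thetas = [
    [[0.0] * 2 for _ in range(3)],  # theta^(1): 3 x 2
    [[0.0] * 3 for _ in range(3)],  # theta^(2): 3 x 3
    [[0.0] * 3 for _ in range(4)],  # theta^(3): 4 x 3
]
zs, activations = forward(thetas, [0.2, -0.7])
```

Here `activations[-1]` is $a^{(4)}=h_\theta(x)$; with zero weights every unit outputs 0.5.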

Deriving the backpropagation formulas

We start from the error of the last layer: the difference between the activation unit's prediction $a_k^{(4)}$ and the actual value $y_k$, for $k=1,\dots,K$.

Denoting the error by $\delta$, we have $\delta^{(4)}=a^{(4)}-y$. We use this error to compute the error of the previous layer.

For this single example, the cost function can be written as: $J(\theta)=-y\log h(x)-(1-y)\log(1-h(x))$

The error is defined as $\delta^{(l)}=\frac{\partial J(\theta)}{\partial z^{(l)}}$
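The definition can be checked numerically against $\delta^{(4)}=a^{(4)}-y$. The sketch below assumes a single sigmoid output unit and compares a central finite difference of $J$ with respect to $z^{(4)}$ to the closed form; the values of `z`, `y`, and `eps` are arbitrary and hypothetical.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def cost_from_z(z, y):
    """Single-example cost J as a function of the output pre-activation z."""
    a = sigmoid(z)
    return -y * math.log(a) - (1 - y) * math.log(1 - a)

z, y, eps = 0.3, 1.0, 1e-6  # hypothetical values

# Central finite difference approximating dJ/dz.
numeric = (cost_from_z(z + eps, y) - cost_from_z(z - eps, y)) / (2 * eps)

# Closed form: delta^(4) = a^(4) - y.
analytic = sigmoid(z) - y
```

The two values agree up to the finite-difference error.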

Deriving $\delta^{(3)}$ and $\delta^{(2)}$ (chain rule):

$$\begin{aligned}
\delta^{(3)}&=\frac{\partial J}{\partial z^{(3)}}\\
&=\frac{\partial J}{\partial a^{(4)}}\cdot\frac{\partial a^{(4)}}{\partial z^{(4)}}\cdot\frac{\partial z^{(4)}}{\partial a^{(3)}}\cdot\frac{\partial a^{(3)}}{\partial z^{(3)}}\\
&=\left(\frac{-y}{a^{(4)}}+\frac{(1-y)}{1-a^{(4)}}\right)\cdot\frac{\partial g(z^{(4)})}{\partial z^{(4)}}\cdot\theta^{(3)}\cdot\frac{\partial g(z^{(3)})}{\partial z^{(3)}}\\
&=\left(\frac{-y}{a^{(4)}}+\frac{(1-y)}{1-a^{(4)}}\right)\cdot a^{(4)}\cdot(1-a^{(4)})\cdot\theta^{(3)}\cdot g'(z^{(3)})\\
&=(a^{(4)}-y)\cdot\theta^{(3)}\cdot a^{(3)}\cdot(1-a^{(3)})\\
&=(\theta^{(3)})^T\cdot\delta^{(4)}\cdot g'(z^{(3)})\quad\text{(taking dimensions into account)}
\end{aligned}$$

Here we used the sigmoid derivative $g'(z)=g(z)(1-g(z))$, so $g'(z^{(4)})=a^{(4)}(1-a^{(4)})$ and $g'(z^{(3)})=a^{(3)}(1-a^{(3)})$. The final product with $g'(z^{(3)})$ is element-wise.

Similarly:

$$\begin{aligned}
\delta^{(2)}&=\frac{\partial J}{\partial z^{(2)}}\\
&=\frac{\partial J}{\partial a^{(4)}}\cdot\frac{\partial a^{(4)}}{\partial z^{(4)}}\cdot\frac{\partial z^{(4)}}{\partial a^{(3)}}\cdot\frac{\partial a^{(3)}}{\partial z^{(3)}}\cdot\frac{\partial z^{(3)}}{\partial a^{(2)}}\cdot\frac{\partial a^{(2)}}{\partial z^{(2)}}\\
&=\delta^{(3)}\cdot\theta^{(2)}\cdot g'(z^{(2)})\\
&=(\theta^{(2)})^T\cdot\delta^{(3)}\cdot g'(z^{(2)})\quad\text{(taking dimensions into account)}
\end{aligned}$$
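Both recursions share the same form, $\delta^{(l)}=(\theta^{(l)})^T\delta^{(l+1)}$ multiplied element-wise by $g'(z^{(l)})=a^{(l)}(1-a^{(l)})$. A minimal sketch, assuming sigmoid activations and no bias units; the helper name `backprop_delta` and all numeric values are hypothetical.

```python
def backprop_delta(theta, delta_next, a):
    """Compute delta^(l) from delta^(l+1).

    theta is theta^(l) as a list of rows (one row per unit of layer l+1),
    a is a^(l). Returns (theta^T delta_next) * a * (1 - a), element-wise.
    """
    back = [
        sum(theta[k][j] * delta_next[k] for k in range(len(delta_next)))
        for j in range(len(a))
    ]
    return [b * a_j * (1 - a_j) for b, a_j in zip(back, a)]

# Hypothetical values: layer 4 has 4 units, layers 3 and 2 have 2 units each.
theta3 = [[1.0, 2.0], [3.0, 4.0], [0.0, -1.0], [1.0, 1.0]]  # 4 x 2
theta2 = [[1.0, 0.0], [2.0, 1.0]]                           # 2 x 2
delta4 = [0.1, -0.2, 0.3, 0.0]                              # a^(4) - y
a3 = [0.5, 0.5]
a2 = [0.5, 0.5]

delta3 = backprop_delta(theta3, delta4, a3)
delta2 = backprop_delta(theta2, delta3, a2)
```

Transposing $\theta^{(l)}$ is what makes the shapes line up: $\delta^{(l+1)}$ has one entry per unit of layer $l+1$, while the result must have one entry per unit of layer $l$.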

Deriving the error matrices $\Delta^{(3)}$ and $\Delta^{(2)}$:

$$\Delta^{(3)}=\frac{\partial J}{\partial \theta^{(3)}}=\frac{\partial J}{\partial a^{(4)}}\cdot\frac{\partial a^{(4)}}{\partial z^{(4)}}\cdot\frac{\partial z^{(4)}}{\partial \theta^{(3)}}=(a^{(4)}-y)\cdot a^{(3)}=a^{(3)}\cdot\delta^{(4)}$$

Similarly:

$$\Delta^{(2)}=\frac{\partial J}{\partial \theta^{(2)}}=\frac{\partial J}{\partial a^{(4)}}\cdot\frac{\partial a^{(4)}}{\partial z^{(4)}}\cdot\frac{\partial z^{(4)}}{\partial a^{(3)}}\cdot\frac{\partial a^{(3)}}{\partial z^{(3)}}\cdot\frac{\partial z^{(3)}}{\partial \theta^{(2)}}=\delta^{(3)}\cdot a^{(2)}=a^{(2)}\cdot\delta^{(3)}$$
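To see these gradient formulas at work, the sketch below runs a full backward pass on a tiny network and compares one entry of the result with a finite-difference estimate. It assumes sigmoid activations, a single training example, and no bias units; the network shape, weights, and function names are hypothetical. Entry $(i,j)$ of each gradient is computed as $\delta^{(l+1)}_i a^{(l)}_j$.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def forward(thetas, x):
    """Return activations a^(1), ..., a^(L); bias units are omitted."""
    acts = [x]
    for theta in thetas:
        z = [sum(w * a for w, a in zip(row, acts[-1])) for row in theta]
        acts.append([sigmoid(v) for v in z])
    return acts

def cost(thetas, x, y):
    """Single-example cross-entropy cost, summed over the output units."""
    h = forward(thetas, x)[-1]
    return -sum(yk * math.log(hk) + (1 - yk) * math.log(1 - hk)
                for hk, yk in zip(h, y))

def gradients(thetas, x, y):
    """Backpropagation: grads[l][i][j] = delta^(l+2)_i * a^(l+1)_j (0-based l)."""
    acts = forward(thetas, x)
    delta = [a - yk for a, yk in zip(acts[-1], y)]  # delta^(L) = a^(L) - y
    grads = [None] * len(thetas)
    for l in range(len(thetas) - 1, -1, -1):
        a = acts[l]
        grads[l] = [[d * aj for aj in a] for d in delta]
        if l > 0:
            # Propagate the error one layer back: (theta^T delta) * g'(z).
            back = [sum(thetas[l][k][j] * delta[k] for k in range(len(delta)))
                    for j in range(len(a))]
            delta = [b * aj * (1 - aj) for b, aj in zip(back, a)]
    return grads

# Hypothetical tiny network: 2 -> 2 -> 2 -> 1.
thetas = [
    [[0.1, -0.2], [0.4, 0.3]],
    [[-0.5, 0.2], [0.3, 0.1]],
    [[0.2, -0.4]],
]
x, y = [1.0, 0.5], [1.0]
grads = gradients(thetas, x, y)

# Finite-difference check on one weight of theta^(2).
eps = 1e-6
original = thetas[1][0][1]
thetas[1][0][1] = original + eps
cost_up = cost(thetas, x, y)
thetas[1][0][1] = original - eps
cost_down = cost(thetas, x, y)
thetas[1][0][1] = original
numeric = (cost_up - cost_down) / (2 * eps)
```

If the backward pass is right, `numeric` and `grads[1][0][1]` agree to several decimal places. A check like this is a common way to catch indexing mistakes in a hand-written implementation.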

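This completes the derivation. The key points are the definition of the error, $\delta^{(l)}=\frac{\partial J}{\partial z^{(l)}}$, and the repeated application of the chain rule, with the dependencies between variables read off the network diagram.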