Hadoop Sorting

Goal: use Hadoop to build a simple sorting project.


- Prepare the files
- Project analysis
- Code
- Run the jar

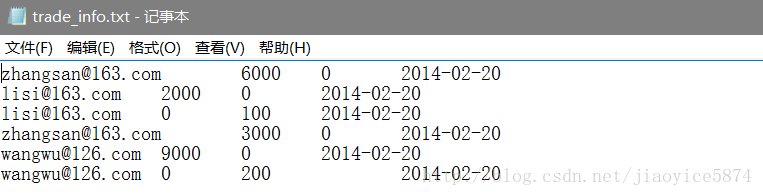

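Prepare the files

What the file contains

The file shown above can be read, in simplified form, as the income and expense records of some Taobao users. Each line is one order, and the columns are separated by tabs:

- Column 1: Taobao account
- Column 2: income from the order
- Column 3: expense of the order (refunds)
- Column 4: order time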

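Sorting rule

Sum up each merchant's total income and total expenses.

Sort the merchants by total income from highest to lowest; when two merchants have the same total income, the one with lower expenses comes first.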
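The rule can be tried out on plain Java objects before involving Hadoop. This is a minimal sketch that assumes the per-merchant totals have already been computed; the class `MerchantOrder`, its nested `Totals` type and the sample figures are hypothetical and made up for illustration.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Comparator;
import java.util.List;

// Sketch of the sorting rule on plain Java objects (no Hadoop involved)
class MerchantOrder {

    // Hypothetical per-merchant totals, assumed to be computed already
    static final class Totals {
        final String account;
        final double income;
        final double expenses;

        Totals(String account, double income, double expenses) {
            this.account = account;
            this.income = income;
            this.expenses = expenses;
        }
    }

    // Total income from high to low; on equal income, lower expenses first
    static final Comparator<Totals> RULE =
            Comparator.comparingDouble((Totals t) -> t.income).reversed()
                    .thenComparingDouble(t -> t.expenses);

    // Returns the accounts in the order the rule puts them
    static List<String> sortedAccounts(List<Totals> totals) {
        List<Totals> copy = new ArrayList<>(totals);
        copy.sort(RULE);
        List<String> accounts = new ArrayList<>();
        for (Totals t : copy) {
            accounts.add(t.account);
        }
        return accounts;
    }

    public static void main(String[] args) {
        List<Totals> totals = Arrays.asList(
                new Totals("a", 500, 50),
                new Totals("b", 900, 10),
                new Totals("c", 500, 20));
        System.out.println(sortedAccounts(totals)); // [b, c, a]
    }
}
```

Here b comes first with the highest income, and c is placed before a because both have 500 in income but c has lower expenses.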

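Project analysis

Each merchant's orders have to be summed first, and only then can the merchants be sorted, so two MapReduce jobs are needed, with the output of the first job serving as the input of the second. The reason is that MapReduce sorts only by key, during the shuffle, while the per-merchant totals do not exist until the first job's reduce phase has finished.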
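A rough in-memory model of the two chained jobs may help make the data flow concrete. It is only a sketch under simplifying assumptions (a single process, a `TreeMap` standing in for the shuffle, made-up orders), and the names `TwoStagePipeline`, `sumStage` and `sortStage` are hypothetical. The point it illustrates is that stage 2 only ever sees stage 1's text output, in the same `account \t income \t expenses \t surplus` layout that SumStep writes.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

// In-memory sketch of the two chained jobs; it mimics the data flow, not Hadoop itself
class TwoStagePipeline {

    // Stage 1 (like SumStep): total income and expenses per account,
    // emitted as "account \t income \t expenses \t surplus" text lines
    static List<String> sumStage(List<String> orderLines) {
        Map<String, double[]> sums = new TreeMap<>(); // sorted by account, like a shuffle on Text keys
        for (String line : orderLines) {
            String[] f = line.split("\t");
            double[] s = sums.computeIfAbsent(f[0], k -> new double[2]);
            s[0] += Double.parseDouble(f[1]);
            s[1] += Double.parseDouble(f[2]);
        }
        List<String> out = new ArrayList<>();
        for (Map.Entry<String, double[]> e : sums.entrySet()) {
            double in = e.getValue()[0];
            double exp = e.getValue()[1];
            out.add(e.getKey() + "\t" + in + "\t" + exp + "\t" + (in - exp));
        }
        return out;
    }

    // Stage 2 (like SortStep): re-parse stage 1's text output and sort it
    static List<String> sortStage(List<String> sumLines) {
        List<String[]> rows = new ArrayList<>();
        for (String line : sumLines) {
            rows.add(line.split("\t"));
        }
        rows.sort((x, y) -> {
            int byIncome = Double.compare(Double.parseDouble(y[1]), Double.parseDouble(x[1]));
            return byIncome != 0 ? byIncome
                    : Double.compare(Double.parseDouble(x[2]), Double.parseDouble(y[2]));
        });
        List<String> accounts = new ArrayList<>();
        for (String[] r : rows) {
            accounts.add(r[0]);
        }
        return accounts;
    }

    public static void main(String[] args) {
        // Made-up orders: account \t income \t expense \t time
        List<String> orders = Arrays.asList(
                "a\t100\t0\tt1", "b\t300\t50\tt2", "a\t250\t20\tt3", "c\t350\t10\tt4");
        List<String> sums = sumStage(orders); // stage 1 output is stage 2 input
        System.out.println(sums);
        System.out.println(sortStage(sums)); // [c, a, b]
    }
}
```

Accounts a and c both end up with 350 in income, so c (expenses 10) is placed before a (expenses 20), and b follows with 300.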

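Approach

1. The first map's input key/value types are LongWritable and Text (the line's byte offset and the line itself); its output key/value types are Text (the account) and an InfoBean object that wraps income and expenses.

2. The first reduce takes the first map's output as its input; the only difference is that the reduce values arrive as an Iterable holding every InfoBean for one account. Its output key/value types are Text and InfoBean.

3. The first MapReduce job sums the orders (in a real project this is where the business logic goes); the second job does the sorting.

4. Since the rule sorts by income, the second map emits the InfoBean itself as the key (it holds the income figures) and NullWritable as the value. During the shuffle the framework sorts the map output keys with InfoBean.compareTo.

5. The second reduce outputs Text and InfoBean as its key and value. The code follows.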
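To see what happens between map and reduce in steps 2 and 4, here is a simplified, single-process model of the shuffle: it sorts the map output by key with a comparator and hands each run of equal keys to one reduce call. The names `ShuffleSketch` and `sortAndGroup` are hypothetical, and unlike this stable sort, real Hadoop does not guarantee the order of values within a group.

```java
import java.util.AbstractMap.SimpleEntry;
import java.util.ArrayList;
import java.util.Comparator;
import java.util.List;
import java.util.Map;

// Simplified model of the shuffle: sort map output by key, then group adjacent equal keys
class ShuffleSketch {

    // Each returned entry stands for one reduce() call: a key plus all of its values
    static <K, V> List<Map.Entry<K, List<V>>> sortAndGroup(List<Map.Entry<K, V>> mapOutput,
                                                          Comparator<? super K> cmp) {
        List<Map.Entry<K, V>> sorted = new ArrayList<>(mapOutput);
        sorted.sort((a, b) -> cmp.compare(a.getKey(), b.getKey())); // List.sort is stable
        List<Map.Entry<K, List<V>>> groups = new ArrayList<>();
        for (Map.Entry<K, V> e : sorted) {
            Map.Entry<K, List<V>> last = groups.isEmpty() ? null : groups.get(groups.size() - 1);
            if (last != null && cmp.compare(last.getKey(), e.getKey()) == 0) {
                last.getValue().add(e.getValue()); // same key: same reduce call
            } else {
                List<V> values = new ArrayList<>();
                values.add(e.getValue());
                groups.add(new SimpleEntry<>(e.getKey(), values)); // new key: new reduce call
            }
        }
        return groups;
    }

    public static void main(String[] args) {
        // Job 1 style map output: account -> income of one order (made-up values)
        List<Map.Entry<String, Double>> out = new ArrayList<>();
        out.add(new SimpleEntry<>("b", 300.0));
        out.add(new SimpleEntry<>("a", 100.0));
        out.add(new SimpleEntry<>("a", 250.0));
        for (Map.Entry<String, List<Double>> g : sortAndGroup(out, Comparator.<String>naturalOrder())) {
            System.out.println(g.getKey() + " -> " + g.getValue());
        }
    }
}
```

With a `Text` key this is what gives SumReducer all orders of one account at once; with `InfoBean` as the key, the same sort is what makes SortStep's output come out ordered.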

Code

InfoBean.java: wraps the account, income, expenses and surplus

```java
package cn.master.hadoop.mr.sort;

import java.io.DataInput;
import java.io.DataOutput;
import java.io.IOException;

import org.apache.hadoop.io.WritableComparable;

// Hadoop creates instances via reflection, so the implicit public no-arg constructor is required
public class InfoBean implements WritableComparable<InfoBean> {

    private String account;
    private double income;
    private double expenses;
    private double surplus;

    public void set(String account, double income, double expenses) {
        this.account = account;
        this.income = income;
        this.expenses = expenses;
        // Balance of the account; without this line surplus would always stay 0.0
        this.surplus = income - expenses;
    }

    // Serializes the fields; readFields must read them back in exactly this order
    @Override
    public void write(DataOutput out) throws IOException {
        out.writeUTF(account);
        out.writeDouble(income);
        out.writeDouble(expenses);
        out.writeDouble(surplus);
    }

    @Override
    public void readFields(DataInput in) throws IOException {
        this.account = in.readUTF();
        this.income = in.readDouble();
        this.expenses = in.readDouble();
        this.surplus = in.readDouble();
    }

    // Income descending; on equal income, expenses ascending; finally by account,
    // so two different accounts never compare as equal (equal keys would be
    // merged into one reduce call and one of the accounts would be lost)
    @Override
    public int compareTo(InfoBean o) {
        int byIncome = Double.compare(o.income, this.income);
        if (byIncome != 0) {
            return byIncome;
        }
        int byExpenses = Double.compare(this.expenses, o.expenses);
        if (byExpenses != 0) {
            return byExpenses;
        }
        return this.account.compareTo(o.account);
    }

    // Used by TextOutputFormat when writing the value
    @Override
    public String toString() {
        return this.income + "\t" + this.expenses + "\t" + this.surplus;
    }

    public String getAccount() {
        return account;
    }

    public void setAccount(String account) {
        this.account = account;
    }

    public double getIncome() {
        return income;
    }

    public void setIncome(double income) {
        this.income = income;
    }

    public double getExpenses() {
        return expenses;
    }

    public void setExpenses(double expenses) {
        this.expenses = expenses;
    }

    public double getSurplus() {
        return surplus;
    }

    public void setSurplus(double surplus) {
        this.surplus = surplus;
    }
}
```
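Because InfoBean values are serialized between map and reduce, readFields has to read the fields back in exactly the order write wrote them. The sketch below checks that round trip with plain java.io streams; `WritableRoundTrip` and its nested `Bean` are hypothetical stand-ins that copy InfoBean's write/readFields logic without implementing Hadoop's interface.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.DataInput;
import java.io.DataInputStream;
import java.io.DataOutput;
import java.io.DataOutputStream;
import java.io.IOException;

// Stdlib-only stand-in for InfoBean: same write/readFields logic, no Hadoop interface
class WritableRoundTrip {

    static final class Bean {
        String account;
        double income;
        double expenses;
        double surplus;

        void write(DataOutput out) throws IOException {
            out.writeUTF(account);
            out.writeDouble(income);
            out.writeDouble(expenses);
            out.writeDouble(surplus);
        }

        // Reads the fields in exactly the order write() wrote them
        void readFields(DataInput in) throws IOException {
            account = in.readUTF();
            income = in.readDouble();
            expenses = in.readDouble();
            surplus = in.readDouble();
        }
    }

    // Serializes a bean to bytes and reads it back into a fresh, empty instance
    static Bean roundTrip(Bean b) throws IOException {
        ByteArrayOutputStream bytes = new ByteArrayOutputStream();
        DataOutputStream out = new DataOutputStream(bytes);
        b.write(out);
        out.flush();
        Bean copy = new Bean(); // empty object first, then readFields fills it in
        copy.readFields(new DataInputStream(new ByteArrayInputStream(bytes.toByteArray())));
        return copy;
    }

    public static void main(String[] args) throws IOException {
        Bean b = new Bean();
        b.account = "account_a"; // made-up values
        b.income = 350.0;
        b.expenses = 20.0;
        b.surplus = b.income - b.expenses;
        Bean c = roundTrip(b);
        System.out.println(c.account + "\t" + c.income + "\t" + c.expenses + "\t" + c.surplus);
    }
}
```

Swapping any two reads in readFields would still compile but silently put, for example, the expenses into the income field, which is why the order has to match.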

SumStep.java: sums up the orders of each account

```java
package cn.master.hadoop.mr.sort;

import java.io.IOException;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.Path;
import org.apache.hadoop.io.LongWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Job;
import org.apache.hadoop.mapreduce.Mapper;
import org.apache.hadoop.mapreduce.Reducer;
import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

public class SumStep {

    // Input: (byte offset, one order line); output: (account, income/expense of that order)
    public static class SumMapper extends Mapper<LongWritable, Text, Text, InfoBean> {

        private Text k = new Text();
        private InfoBean v = new InfoBean();

        @Override
        protected void map(LongWritable key, Text value, Context context)
                throws IOException, InterruptedException {
            // Columns: account \t income \t expense \t order time
            String line = value.toString();
            String[] fields = line.split("\t");
            String account = fields[0];
            double in = Double.parseDouble(fields[1]);
            double out = Double.parseDouble(fields[2]);
            k.set(account);
            v.set(account, in, out);
            context.write(k, v);
        }
    }

    // Receives all orders of one account and sums income and expenses
    public static class SumReducer extends Reducer<Text, InfoBean, Text, InfoBean> {

        private InfoBean v = new InfoBean();

        @Override
        protected void reduce(Text key, Iterable<InfoBean> values, Context context)
                throws IOException, InterruptedException {
            double inSum = 0;
            double outSum = 0;
            for (InfoBean infoBean : values) {
                inSum += infoBean.getIncome();
                outSum += infoBean.getExpenses();
            }
            // The account is already the output key, so it is left empty in the value
            v.set("", inSum, outSum);
            context.write(key, v);
        }
    }

    public static void main(String[] args) throws Exception {
        Configuration conf = new Configuration();
        Job job = Job.getInstance(conf);
        job.setJarByClass(SumStep.class);

        job.setMapperClass(SumMapper.class);
        job.setMapOutputKeyClass(Text.class);
        job.setMapOutputValueClass(InfoBean.class);
        FileInputFormat.setInputPaths(job, new Path(args[0]));

        job.setReducerClass(SumReducer.class);
        job.setOutputKeyClass(Text.class);
        job.setOutputValueClass(InfoBean.class);
        FileOutputFormat.setOutputPath(job, new Path(args[1]));

        System.exit(job.waitForCompletion(true) ? 0 : 1);
    }
}
```

SortStep.java: sorting

```java
package cn.master.hadoop.mr.sort;

import java.io.IOException;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.Path;
import org.apache.hadoop.io.LongWritable;
import org.apache.hadoop.io.NullWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Job;
import org.apache.hadoop.mapreduce.Mapper;
import org.apache.hadoop.mapreduce.Reducer;
import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

public class SortStep {

    // Parses one line of SumStep output (account \t income \t expenses \t surplus) and
    // emits the whole bean as the key, so the shuffle sorts by InfoBean.compareTo
    public static class SortMapper extends Mapper<LongWritable, Text, InfoBean, NullWritable> {

        private InfoBean k = new InfoBean();

        @Override
        protected void map(LongWritable key, Text value, Context context)
                throws IOException, InterruptedException {
            String line = value.toString();
            String[] fields = line.split("\t");
            String account = fields[0];
            double in = Double.parseDouble(fields[1]);
            double out = Double.parseDouble(fields[2]);
            k.set(account, in, out);
            context.write(k, NullWritable.get());
        }
    }

    // Keys arrive already sorted; move the account back into the output key
    public static class SortReducer extends Reducer<InfoBean, NullWritable, Text, InfoBean> {

        private Text k = new Text();

        @Override
        protected void reduce(InfoBean bean, Iterable<NullWritable> values, Context context)
                throws IOException, InterruptedException {
            String account = bean.getAccount();
            k.set(account);
            context.write(k, bean);
        }
    }

    public static void main(String[] args) throws Exception {
        Configuration conf = new Configuration();
        Job job = Job.getInstance(conf);
        job.setJarByClass(SortStep.class);

        job.setMapperClass(SortMapper.class);
        job.setMapOutputKeyClass(InfoBean.class);
        job.setMapOutputValueClass(NullWritable.class);
        FileInputFormat.setInputPaths(job, new Path(args[0]));

        job.setReducerClass(SortReducer.class);
        // A single reducer produces one globally sorted output file (part-r-00000)
        job.setNumReduceTasks(1);
        job.setOutputKeyClass(Text.class);
        job.setOutputValueClass(InfoBean.class);
        FileOutputFormat.setOutputPath(job, new Path(args[1]));

        System.exit(job.waitForCompletion(true) ? 0 : 1);
    }
}
```
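The final tie-breaker on the account in InfoBean.compareTo matters because, by default, the shuffle groups keys that compare as equal into a single reduce call, and SortReducer writes only one line per call. Never returning 0, as the first version of compareTo did, avoids that grouping but breaks the `compareTo` contract (x < y and y < x at the same time). The sketch below uses hypothetical names and made-up totals, with a `TreeMap` as a stand-in for the grouping, to compare the three variants.

```java
import java.util.Comparator;
import java.util.TreeMap;

// Compares three ways of ordering per-merchant totals (hypothetical names, made-up data)
class TieBreakDemo {

    static final class Totals {
        final String account;
        final double income;
        final double expenses;

        Totals(String account, double income, double expenses) {
            this.account = account;
            this.income = income;
            this.expenses = expenses;
        }
    }

    // Same logic as the first version of InfoBean.compareTo: it never returns 0
    static int originalCompare(Totals a, Totals b) {
        if (a.income == b.income) {
            return a.expenses > b.expenses ? 1 : -1;
        }
        return a.income > b.income ? -1 : 1;
    }

    // Income descending, then expenses ascending; equal totals compare as 0
    static final Comparator<Totals> NO_TIE_BREAK =
            Comparator.comparingDouble((Totals t) -> t.income).reversed()
                    .thenComparingDouble(t -> t.expenses);

    // Account as the last criterion, as in the revised compareTo
    static final Comparator<Totals> WITH_TIE_BREAK = NO_TIE_BREAK.thenComparing(t -> t.account);

    // A TreeMap keeps one entry per key that compares as distinct, similar to
    // how the shuffle makes one reduce call per distinct key
    static int distinctKeys(Comparator<Totals> cmp, Totals... all) {
        TreeMap<Totals, Boolean> groups = new TreeMap<>(cmp);
        for (Totals t : all) {
            groups.put(t, Boolean.TRUE);
        }
        return groups.size();
    }

    public static void main(String[] args) {
        Totals x = new Totals("a", 500, 20);
        Totals y = new Totals("b", 500, 20); // same totals, different account
        // Both directions return -1, so the comparison is not antisymmetric
        System.out.println(originalCompare(x, y) + " " + originalCompare(y, x)); // -1 -1
        System.out.println(distinctKeys(NO_TIE_BREAK, x, y));   // 1: account b is lost
        System.out.println(distinctKeys(WITH_TIE_BREAK, x, y)); // 2
    }
}
```

After SumStep every account appears only once, so comparing by account last gives a consistent total order without ever merging two merchants.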

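Run the jar

Package the project as a jar and run it in the Hadoop environment. Run SumStep first, because SortStep reads its output directory (/sum). Neither output directory may exist before its job starts, otherwise the job fails at submission.

Command format: `hadoop jar <path to the jar> <main class> <input path> <output path>`

```
hadoop jar /root/mrc.jar cn.master.hadoop.mr.sort.SumStep /trade_info.txt /sum
hadoop jar /root/mrc.jar cn.master.hadoop.mr.sort.SortStep /sum /sort
```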

Check the result

```
hadoop fs -ls /sort
hadoop fs -cat /sort/part-r-00000
```