- 【AI论文】迈向大型推理模型:大型语言模型增强推理综述

东临碣石82

人工智能语言模型自然语言处理

摘要:语言长久以来被视为人类推理不可或缺的工具。大型语言模型(LLM)的突破激发了利用这些模型解决复杂推理任务的浓厚研究兴趣。研究人员已经超越了简单的自回归词元生成,引入了“思维”的概念——即代表推理过程中间步骤的词元序列。这一创新范式使LLM能够模仿复杂的人类推理过程,如树搜索和反思性思维。近期,一种新兴的学习推理趋势采用强化学习(RL)来训练LLM掌握推理过程。这种方法通过试错搜索算法自动生成

- 【强化学习】PyTorch-RL框架

大雨淅淅

人工智能pytorch人工智能python深度学习机器学习

目录一、框架简介二、核心功能三、学习环境配置四、学习资源五、实践与应用六、常见问题与解决方案七、深入理解强化学习概念八、构建自己的强化学习环境九、调试与优化十、参与社区与持续学习一、框架简介PyTorch-RL是一个基于PyTorch框架的深度强化学习项目。它充分利用了PyTorch的强大功能,提供了易于使用且高效的深度强化学习算法实现。该项目的主要编程语言是Python,旨在帮助开发者快速实现和

- 蓝桥杯真题 - 子树的大小 - 题解

ExRoc

蓝桥杯算法c++

题目链接:https://www.lanqiao.cn/problems/3526/learning/个人评价:难度2星(满星:5)前置知识:无整体思路整体将节点编号−1-1−1,通过找规律可以发现,节点iii下一层最左边的节点编号是im+1im+1im+1,最右边的节点编号是im+mim+mim+m;用l,rl,rl,r分别标记当前层子树的最小节点编号与最大节点编号,每次让最左边的节点往下一层的

- 【机器学习:三十二、强化学习:理论与应用】

KeyPan

机器学习机器学习机器人人工智能深度学习数据挖掘

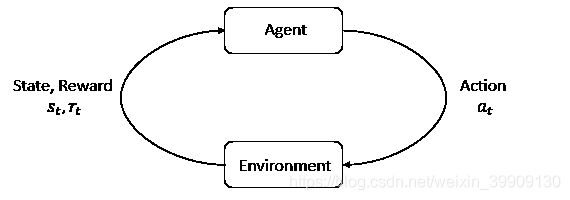

1.强化学习概述**强化学习(ReinforcementLearning,RL)**是一种机器学习方法,旨在通过试验与反馈的交互,使智能体(Agent)在动态环境中学习决策策略,以最大化累积奖励(CumulativeReward)。相比监督学习和无监督学习,强化学习更关注长期目标,而非简单地从标签中学习。核心概念智能体(Agent):进行学习和决策的主体。环境(Environment):智能体所在

- 《AI语言模型的关键技术探析:系统提示、评估方法与提示工程》

XianxinMao

人工智能语言模型自然语言处理

文章主要内容摘要1.系统提示(SystemPrompt)定义:用于设置模型行为、角色和工作方式的特殊指令重要性:定义模型行为边界影响输出质量和一致性可将通用模型定制为特定领域助手挑战:技术集成复杂兼容性问题效果难以精确预测2.模型评估方法创新方向:自一致性(Self-Consistency)评估PlanSearch方法强化学习(RL)应用核心特点:多次采样和交叉验证策略空间探索动态权重调整实践价值

- 【深度强化学习】DQN:深度Q网络算法——从理论讲解到源码解析

视觉萌新、

深度强化学习深度Q网络DQN

【深度强化学习】DQN:深度Q网络算法——从理论讲解到源码解析介绍常用技巧算法步骤DQN源码实现网络结构训练策略DQN算法进阶双深度Q网络(DoubleDQN)竞争深度Q网络(DuelingDQN)优先级经验回放(PER)噪声网络(noisy)本文图片与源码均来自《EasyRL》:https://github.com/datawhalechina/easy-rl介绍 核心思想:训练动作价值函数Q

- css 在div左上角添加类似书签的标记

嗬呜阿花

STYLELISTcss前端html

效果图html半导体CSS.mark{float:left;margin:06rpx;position:relative;padding:0;width:24px;color:#fff;writing-mode:sideways-rl;text-align:center;}.mark::after{position:absolute;content:"";left:0;top:100%;borde

- OpenAI o1 的价值意义及“强化学习的Scaling Law” & Kimi创始人杨植麟最新分享:关于OpenAI o1新范式的深度思考

光剑书架上的书

ChatGPT大数据AI人工智能计算人工智能算法机器学习

OpenAIo1的价值意义及“强化学习的ScalingLaw”蹭下热度谈谈OpenAIo1的价值意义及RL的Scalinglaw。一、OpenAIo1是大模型的巨大进步我觉得OpenAIo1是自GPT4发布以来,基座大模型最大的进展,逻辑推理能力提升的效果和方法比预想的要好,GPT4o和o1是发展大模型不同的方向,但是o1这个方向更根本,重要性也比GPT4o这种方向要重要得多,原因下面会分析。为什

- 缩小模拟与现实之间的差距:使用 NVIDIA Isaac Lab 训练 Spot 四足动物运动

AI人工智能集结号

人工智能

目录在IsaacLab中训练四足动物的运动能力目标观察和行动空间域随机化网络架构和RL算法细节先决条件用法训练策略执行训练好的策略结果使用JetsonOrin在Spot上部署经过训练的RL策略先决条件JetsonOrin上的硬件和网络设置Jetson上的软件设置运行策略开始开发您的自定义应用程序由于涉及复杂的动力学,为四足动物开发有效的运动策略对机器人技术提出了重大挑战。训练四足动物在现实世界中上

- Codeforces Round 969 (Div. 2 ABCDE题) 视频讲解

阿史大杯茶

Codeforces算法c++数据结构

A.Dora’sSetProblemStatementDorahasasetssscontainingintegers.Inthebeginning,shewillputallintegersin[l,r][l,r][l,r]intothesetsss.Thatis,anintegerxxxisinitiallycontainedinthesetifandonlyifl≤x≤rl\leqx\leq

- 论文速读|全身人型机器人控制学习与序列接触

28BoundlessHope

人形机器人文献阅读人工智能机器人

项目地址:WoCoCo:LearningWhole-BodyHumanoidControlwithSequentialContactsWoCoCo(Whole-BodyControlwithSequentialContacts)框架通过将任务分解为多个接触阶段,简化了策略学习流程,使得RL策略能够通过任务无关的奖励和模拟到现实的设计来学习复杂的人型机器人控制任务。该框架仅需要对每个任务指定少量任务

- 【3.7】贪心算法-解分割平衡字符串

攻城狮7号

贪心算法算法c++

一、题目在一个平衡字符串中,'L'和'R'字符的数量是相同的。给你一个平衡字符串s,请你将它分割成尽可能多的平衡字符串。注意:分割得到的每个字符串都必须是平衡字符串。返回可以通过分割得到的平衡字符串的最大数量。示例1:输入:s="RLRRLLRLRL"输出:4解释:s可以分割为"RL"、"RRLL"、"RL"、"RL",每个子字符串中都包含相同数量的'L'和'R'。示例2:输入:s="RLLLLR

- 基于强化学习的制造调度智能优化决策

松间沙路hba

智能调度强化学习制造智能排程车间调度APS强化学习

获取更多资讯,赶快关注上面的公众号吧!文章目录调度状态和动作设计调度状态的设计调度动作的设计基于RL的调度算法基于值函数的RL调度算法SARSAQ-learningDQN基于策略的RL调度算法基于RL的调度应用基于RL的单机调度基于RL的并行机调度基于RL的流水车间调度基于RL的作业车间调度基于RL的其他调度RL与元启发式算法在调度中的集成应用讨论问题领域算法领域应用领域参考文献生产调度作为制造系

- 深度学习学习经验——强化学习(rl)

Linductor

深度学习学习经验深度学习学习人工智能

强化学习强化学习(ReinforcementLearning,RL)是一种机器学习方法,主要用于让智能体(agent)通过与环境的互动,逐步学习如何在不同情况下采取最佳行动,以最大化其获得的累积回报。与监督学习和无监督学习不同,强化学习并不依赖于已标注的数据集,而是通过智能体在环境中的探索和试错来学习最优策略。强化学习的主要特点:基于试错学习:强化学习中的智能体通过与环境的互动,不断尝试不同的行动

- 粒子群优化算法和强化算法的优缺点对比,以表格方式进行展示。详细解释

资源存储库

笔记笔记

粒子群优化算法(PSO)和强化学习算法(RL)是两种常用的优化和学习方法。以下是它们的优缺点对比,以表格的形式展示:特性粒子群优化算法(PSO)强化学习算法(RL)算法类型优化算法学习算法主要用途全局优化问题,寻找最优解学习和决策问题,优化策略以最大化长期奖励计算复杂度较低,通常不需要梯度信息;计算复杂度与粒子数量和迭代次数有关较高,涉及到策略网络的训练和环境交互;复杂度取决于状态空间、动作空间以

- 请介绍一下大数据主要是干什么的?决策支持预测分析用户行为分析个性化服务操作优化风险管理创新与产品开发加拿大卡尔加里大学历史背景学术结构研究和创新校园设施

盛溪的猫猫

感悟大数据英语加拿大

目录请介绍一下大数据主要是干什么的?决策支持预测分析用户行为分析个性化服务操作优化风险管理创新与产品开发加拿大卡尔加里大学历史背景学术结构研究和创新校园设施国际化学生生活大语言模型目前的问题卡尔加里经济地理和气候文化和活动教育交通绿色城市AVL树的旋转单右旋(LL旋转)单左旋(RR旋转)左右旋(LR旋转)右左旋(RL旋转)请介绍一下大数据主要是干什么的?大数据是一个涉及从极其庞大和复杂的数据集中提

- TinyUSB 基本使用

czy8787475

DDM单片机

由于早期时候我们产品基于STM32开发,自然而然的用了STM32的USB库,这个本身没什么问题,库也很完善,而且有官方在完善,这本来是个不错的东西,但是随着ST的缺货,问题就越来越多,比如别人的芯片可不会兼容ST的库,如果是标准设备那还好,如果像我们还做HOTPKey这样的,移植起来就相当的麻烦.一开始他们推荐我使用RL-USB,但是RL-USB始终是挂载RTX上的,至于哪一天RTX也出毛病,这就

- 【强化学习】day1 强化学习基础、马尔可夫决策过程、表格型方法

宏辉

强化学习python算法强化学习

写在最前:参加DataWhale十一月组队学习记录【教程地址】https://github.com/datawhalechina/joyrl-bookhttps://datawhalechina.github.io/easy-rl/https://linklearner.com/learn/detail/91强化学习强化学习是一种重要的机器学习方法,它使得智能体能够在环境中做出决策以达成特定目标。

- 今日arXiv最热NLP大模型论文:无需数据集,大模型可通过强化学习与实体环境高效对齐 | ICLR2024

夕小瑶

自然语言处理人工智能深度学习

引言:将大型语言模型与环境对齐的挑战虽然大语言模型(LLMs)在自然语言生成、理解等多项任务中取得了显著成就,但是在面对看起来简单的决策任务时,却常常表现不佳。这个问题的主要原因是大语言模型内嵌的知识与实际环境之间存在不对齐的问题。相比之下,强化学习(RL)能够通过试错的方法从零开始学习策略,从而确保内部嵌入知识与环境的对齐。但是,怎样将先验知识高效地融入这样的学习过程是一大挑战,为了解决这一差距

- 【RL】Bellman Optimality Equation(贝尔曼最优等式)

大白菜~

人工智能算法机器学习人工智能深度学习

Lecture3:OptimalPolicyandBellmanOptimalityEquationDefinitionofoptimalpolicystatevalue可以被用来去评估policy的好坏,如果:vπ1(s)≥vπ2(s) foralls∈Sv_{\pi_1}(s)\gev_{\pi_2}(s)\;\;\;\;\;\text{forall}s\inSvπ1(s)≥

- Codeforces CF1516D Cut

PYL2077

题解#Codeforces数论倍增线段树数据结构

题目大意给出一个长度为nnn的序列aaa,以及qqq次询问每次询问给出l,rl,rl,r,问最少需要把区间[l,r][l,r][l,r]划分成多少段,满足每段内元素的LCM等于元素的乘积这数据范围,这询问方式,一看就是DS题首先,我们考虑LCM的性质。如果一段区间内的数的LCM等于所有元素之积,那么这个区间中的数一定两两互质。我们设nxtinxt_inxti表示iii后面第一个与aia_iai不互

- Linux下安装java11(亲测)

小白想要逆袭

开发环境配置与部署linux运维服务器

1.首先下载java11yumsearchjava-11-openjdk1.1选择相应版本(本人是x86_64)(ps:如果不知道选择哪个版本可以输入arch或者uname-a命令查看系统版本信息)1.2进行下载yuminstalljava-11-openjdk.x86_64-y2.查看java11下载位置ls-rl$(whichjava)3.进行环境配置vim/etc/profile3.1使配置

- 成语故事:乘兴而来

墨殇一语

【乘兴而来】chéngxìngérlái,意思是趁着兴致来到,结果很扫兴的回去。出自于《晋书.王徽之传》:“徽之曰:‘本乘兴而来,兴尽而返,何必见安道耶?’”王徽之是东晋时的大书法家王羲之的三儿子,生性高傲,不愿受人约束,行为豪放不拘。虽说在朝做官,却常常到处闲逛,不处理官衙内的日常事务。后来,他干脆辞去官职,隐居在山阴(今绍兴),天天游山玩水,饮酒吟诗,倒也落得个自由自在。有一年冬天,鹅毛大雪纷

- 算法竞赛例题讲解:平方差 第十四届蓝桥杯大赛软件赛省赛 C/C++ 大学 A 组 C平方差

若亦_Royi

C++算法算法蓝桥杯c语言

题目描述给定LLL和RRR,问L≤x≤RL\leqx\leqRL≤x≤R中有多少个数xxx满足存在整数yyy,zzz使得x=y2−z2x=y^{2}-z^{2}x=y2−z2。输入格式输入一行包含两个整数LLL,RRR,用一个空格分隔。输出格式输出一行包含一个整数满足题目给定条件的xxx的数量。输入输出样例输入#115输出#14说明/提示【样例说明】1=12−021=1^{2}−0^{2}1=12

- 【RL】Bellman Equation (贝尔曼等式)

大白菜~

人工智能概率论人工智能算法机器学习

Lecture2:BellmanEquationStatevalue考虑grid-world的单步过程:St→AtRt+1,St+1S_t\xrightarrow[]{A_t}R_{t+1},S_{t+1}StAtRt+1,St+1ttt,t+1t+1t+1:时间戳StS_tSt:时间ttt时所处的stateAtA_tAt:在stateStS_tSt时采取的actionRt+1R_{t+1}Rt+

- 【RL】Basic Concepts in Reinforcement Learning

大白菜~

人工智能机器学习算法人工智能深度学习

Lecture1:BasicConceptsinReinforcementLearningMDP(MarkovDecisionProcess)KeyElementsofMDPSetState:ThesetofstatesS\mathcal{S}S(状态S\mathcal{S}S的集合)Action:thesetofactionsA(s)\mathcal{A}(s)A(s)isassociatedf

- AVL树

土豆有点

AVL树是高度平衡的而二叉树。它的特点是:AVL树中任何节点的两个子树的高度最大差别为1。如果在AVL树中进行插入或删除节点后,可能导致AVL树失去平衡。这种失去平衡的可以概括为4种姿态:LL(左左),LR(左右),RR(右右)和RL(右左)。下面给出它们的示意图:image.png上图中的4棵树都是"失去平衡的AVL树",从左往右的情况依次是:LL、LR、RL、RR。除了上面的情况之外,还有其它

- DQN的理论研究回顾

Jay Morein

强化学习与多智能体深度学习学习

DQN的理论研究回顾1.DQN简介强化学习(RL)(Reinforcementlearning:Anintroduction,2nd,ReinforcementLearningandOptimalControl)一直是机器学习的一个重要领域,近几十年来获得了大量关注。RL关注的是通过与环境的交互进行连续决策,从而根据当前环境制定指导行动的策略,目标是实现长期回报最大化。Q-learning是RL中

- Sklearn、TensorFlow 与 Keras 机器学习实用指南第三版(八)

绝不原创的飞龙

人工智能tensorflow

原文:Hands-OnMachineLearningwithScikit-Learn,Keras,andTensorFlow译者:飞龙协议:CCBY-NC-SA4.0第十八章:强化学习强化学习(RL)是当今最激动人心的机器学习领域之一,也是最古老的之一。自上世纪50年代以来一直存在,多年来产生了许多有趣的应用,特别是在游戏(例如TD-Gammon,一个下棋程序)和机器控制方面,但很少成为头条新闻。

- PyTorch 2.2 中文官方教程(八)

绝不原创的飞龙

人工智能pytorch

训练一个玛丽奥玩游戏的RL代理原文:pytorch.org/tutorials/intermediate/mario_rl_tutorial.html译者:飞龙协议:CCBY-NC-SA4.0注意点击这里下载完整的示例代码作者:冯元松,SurajSubramanian,王浩,郭宇章。这个教程将带你了解深度强化学习的基础知识。最后,你将实现一个能够自己玩游戏的AI马里奥(使用双深度Q网络)。虽然这个

[…] is determined by: […]

[…] is the initial state distribution: […] ~ […]

The probability that a particular trajectory $\tau$ occurs: […]

Expected return:

$$J(\pi)=\int_{\tau}P(\tau|\pi)R(\tau)=\mathop{E}\limits_{\tau\sim\pi}[R(\tau)]$$
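As a rough illustration of the expectation form of $J(\pi)$, the sketch below samples trajectories from a policy and averages their returns, then compares the estimate with an exact value. Everything in it is an assumption made for illustration and does not come from the original post: the two-state MDP, the names `RHO0`, `POLICY`, `TRANS`, `REWARD`, `HORIZON`, the undiscounted finite-horizon return, and the way a trajectory is sampled (initial state, then actions from the policy and next states from the transition table).

```python
import random

# Hypothetical two-state, two-action MDP; all numbers are illustrative.
RHO0 = [0.5, 0.5]                      # assumed initial state distribution
POLICY = [[0.9, 0.1], [0.2, 0.8]]      # POLICY[s][a] = pi(a | s)
TRANS = [[[0.7, 0.3], [0.1, 0.9]],     # TRANS[s][a][s2] = P(s2 | s, a)
         [[0.4, 0.6], [0.8, 0.2]]]
REWARD = [[1.0, 0.0], [0.0, 2.0]]      # REWARD[s][a]
HORIZON = 3                            # assumed trajectory length


def sample_index(probs, rng):
    """Draw an index i with probability probs[i]."""
    return rng.choices(range(len(probs)), weights=probs, k=1)[0]


def sample_return(rng):
    """Sample one trajectory tau ~ pi and return its undiscounted return R(tau)."""
    s = sample_index(RHO0, rng)
    total = 0.0
    for _ in range(HORIZON):
        a = sample_index(POLICY[s], rng)
        total += REWARD[s][a]
        s = sample_index(TRANS[s][a], rng)
    return total


def estimate_J(num_trajectories, seed=0):
    """Monte Carlo estimate of J(pi) = E_{tau ~ pi}[R(tau)]."""
    rng = random.Random(seed)
    returns = (sample_return(rng) for _ in range(num_trajectories))
    return sum(returns) / num_trajectories


def exact_J():
    """Exact J(pi), computed by propagating the state distribution forward."""
    dist = RHO0[:]
    total = 0.0
    for _ in range(HORIZON):
        nxt = [0.0, 0.0]
        for s in range(2):
            for a in range(2):
                weight = dist[s] * POLICY[s][a]
                total += weight * REWARD[s][a]
                for s2 in range(2):
                    nxt[s2] += weight * TRANS[s][a][s2]
        dist = nxt
    return total


if __name__ == "__main__":
    print("Monte Carlo estimate:", estimate_J(20000))
    print("Exact value:        ", exact_J())
```

With enough sampled trajectories the two printed numbers should be close, which is the practical meaning of writing $J(\pi)$ as an expectation over $\tau\sim\pi$.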

[…], the optimal policy;

$$\mathop{E}\limits_{a\sim\pi}[Q^{\pi}(s,a)]=\int_{a}\pi(a_0=a|s_0=s)Q^{\pi}(s,a)$$
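For a finite action set, the integral over actions in the expression above becomes a sum weighted by the policy's action probabilities; this quantity is commonly called the state value $V^{\pi}(s)$. The sketch below is a minimal illustration under that discrete-action assumption. The function name `expected_q` and the example numbers are hypothetical and do not come from the original post.

```python
def expected_q(policy_probs, q_values):
    """Return sum_a pi(a | s) * Q(s, a) for a single state s.

    policy_probs[a] is pi(a | s) and q_values[a] is Q(s, a);
    both lists are indexed by the same discrete action set.
    """
    if len(policy_probs) != len(q_values):
        raise ValueError("policy_probs and q_values must have the same length")
    if abs(sum(policy_probs) - 1.0) > 1e-9:
        raise ValueError("policy_probs must sum to 1")
    return sum(p * q for p, q in zip(policy_probs, q_values))


if __name__ == "__main__":
    pi_s = [0.2, 0.5, 0.3]   # illustrative pi(a | s) over three actions
    q_s = [1.0, 4.0, -2.0]   # illustrative Q(s, a) for the same actions
    print(expected_q(pi_s, q_s))
```

Here the result is the weighted average $0.2\cdot 1.0 + 0.5\cdot 4.0 + 0.3\cdot(-2.0)$, so actions the policy picks more often contribute more to the value of the state.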